Featured Content

How to contact Qlik Support

Qlik offers a wide range of channels to assist you in troubleshooting, answering frequently asked questions, and getting in touch with our technical experts. In this article, we guide you through all available avenues to secure your best possible experience.

For details on our terms and conditions, review the Qlik Support Policy.

Index:

- Support and Professional Services; who to contact when.

- Qlik Support: How to access the support you need

- 1. Qlik Community, Forums & Knowledge Base

- The Knowledge Base

- Blogs

- Our Support programs:

- The Qlik Forums

- Ideation

- How to create a Qlik ID

- 2. Chat

- 3. Qlik Support Case Portal

- Escalate a Support Case

- Resources

Support and Professional Services; who to contact when.

We're happy to help! Here's a breakdown of resources for each type of need.

Support

Reactively fixes technical issues as well as answers narrowly defined specific questions. Handles administrative issues to keep the product up-to-date and functioning.

- Error messages

- Task crashes

- Latency issues (due to errors or 1-1 mode)

- Performance degradation without config changes

- Specific questions

- Licensing requests

- Bug Report / Hotfixes

- Not functioning as designed or documented

- Software regression

Professional Services (*)

Proactively accelerates projects, reduces risk, and achieves optimal configurations. Delivers expert help for training, planning, implementation, and performance improvement.

- Deployment Implementation

- Setting up new endpoints

- Performance Tuning

- Architecture design or optimization

- Automation

- Customization

- Environment Migration

- Health Check

- New functionality walkthrough

- Realtime upgrade assistance

(*) Reach out to your Account Manager or Customer Success Manager.

Qlik Support: How to access the support you need

1. Qlik Community, Forums & Knowledge Base

Your first line of support: https://community.qlik.com/

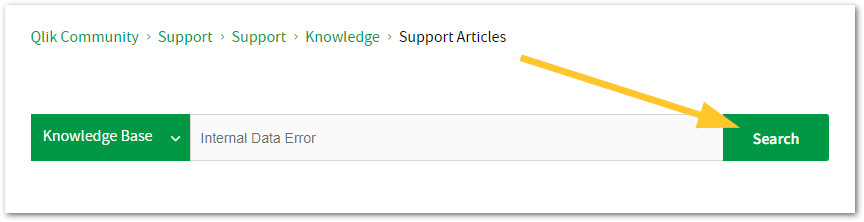

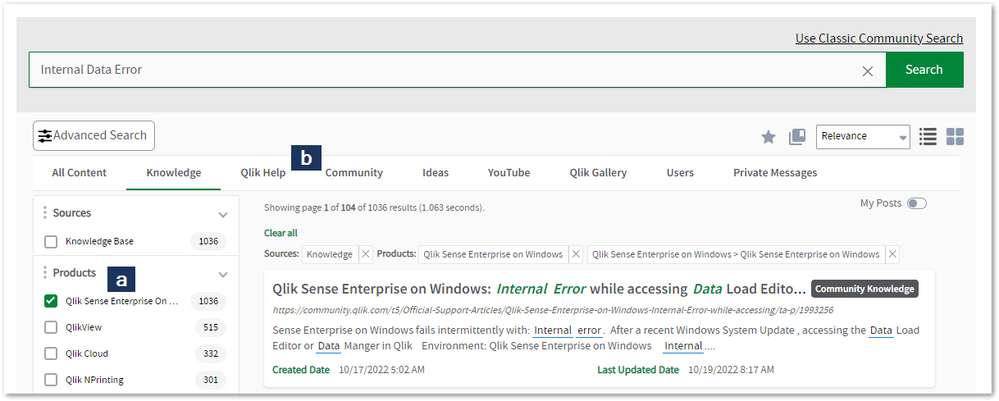

Looking for content? Type your question into our global search bar:

The Knowledge Base

Leverage the enhanced and continuously updated Knowledge Base to find solutions to your questions and best practice guides. Bookmark this page for quick access!

- Go to the Official Support Articles Knowledge base

- Type your question into our Search Engine

- Need more filters?

- Filter by Product

- Or switch tabs to browse content in the global community, on our Help Site, or even on our Youtube channel

Blogs

Subscribe to maximize your Qlik experience!

The Support Updates Blog

The Support Updates blog delivers important and useful Qlik Support information about end-of-product support, new service releases, and general support topics.

The Qlik Design Blog

The Design blog is all about product and Qlik solutions, such as scripting, data modelling, visual design, extensions, best practices, and more!

The Product Innovation Blog

By reading the Product Innovation blog, you will learn about what's new across all of the products in our growing Qlik product portfolio.

Our Support programs:

Q&A with Qlik

Live sessions with Qlik Experts in which we focus on your questions.

Techspert Talks

Techspert Talks is a free monthly webinar to facilitate knowledge sharing.

Technical Adoption Workshops

Our in-depth, hands-on workshops allow new Qlik Cloud Admins to build alongside Qlik Experts.

Qlik Fix

Qlik Fix is a series of short videos with helpful solutions for Qlik customers and partners.

The Qlik Forums

- Quick, convenient, 24/7 availability

- Monitored by Qlik Experts

- New releases publicly announced within Qlik Community forums

- Local language groups available

Ideation

Suggest an idea, and influence the next generation of Qlik features!

Search & Submit Ideas

Ideation Guidelines

How to create a Qlik ID

Get the full value of the community.

Register a Qlik ID:

- Go to: qlikid.qlik.com/register

- Enter your company name exactly as it appears on your license; otherwise, getting access may be significantly delayed.

- You will receive a system-generated email with an activation link for your new account. Note: this link expires after 24 hours.

If you need additional details, see: Additional guidance on registering for a Qlik account

If you encounter problems with your Qlik ID, contact us through Live Chat!

2. Chat

Incidents are supported through our Chat, by clicking Chat Now on any Support Page across Qlik Community.

To raise a new issue, all you need to do is chat with us. With this, we can:

- Answer common questions instantly through our chatbot

- Have a live agent troubleshoot in real time

- Create a case on your behalf, using step-by-step intake questions, for items that need further investigation

3. Qlik Support Case Portal

Log in to manage and track your active cases in Manage Cases.

Please note: to create a new case, it is easiest to do so via our chat (see above). Our chat will log your case through a series of guided intake questions.

Your advantages:

- Self-service access to all incidents so that you can track progress

- Option to upload documentation and troubleshooting files

- Option to include additional stakeholders and watchers to view active cases

- Follow-up conversations

When creating a case, you will be prompted to enter problem type and issue level. Definitions shared below:

Problem Type

Select Account Related for issues with your account, licenses, downloads, or payment.

Select Product Related for technical issues with Qlik products and platforms.

Priority

If your issue is account related, you will be asked to select a Priority level:

Select Medium/Low if the system is accessible, but there are some functional limitations that are not critical in the daily operation.

Select High if there are significant impacts on normal work or performance.

Select Urgent if there are major impacts on business-critical work or performance.

Severity

If your issue is product related, you will be asked to select a Severity level:

Severity 1: Qlik production software is down or not available, but not because of scheduled maintenance and/or upgrades.

Severity 2: Major functionality is not working in accordance with the technical specifications in documentation or significant performance degradation is experienced so that critical business operations cannot be performed.

Severity 3: Any error that is not a Severity 1 or Severity 2 error.

For more information, visit our Qlik Support Policy.
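As a rough illustration of how the three severity definitions above relate to each other, the decision can be sketched as a small function. This is only a reading aid, not part of the case portal: the `ProductIssue` fields and the `suggest_severity` name are hypothetical, and the sketch assumes that the "critical business operations cannot be performed" condition applies to both halves of the Severity 2 definition. The Qlik Support Policy remains the authoritative source.

```python
from dataclasses import dataclass


@dataclass
class ProductIssue:
    """Hypothetical description of a product-related issue."""
    production_down: bool          # production software is down or not available
    scheduled_maintenance: bool    # the outage is caused by planned maintenance/upgrades
    major_function_broken: bool    # major functionality deviates from the documentation
    severe_degradation: bool       # significant performance degradation
    critical_ops_blocked: bool     # critical business operations cannot be performed


def suggest_severity(issue: ProductIssue) -> int:
    """Return 1, 2 or 3 following the definitions quoted above (assumed reading)."""
    if issue.production_down and not issue.scheduled_maintenance:
        return 1
    if (issue.major_function_broken or issue.severe_degradation) and issue.critical_ops_blocked:
        return 2
    # Everything that is neither Severity 1 nor Severity 2.
    return 3


outage = ProductIssue(True, False, False, False, False)
slow_reloads = ProductIssue(False, False, False, True, True)
cosmetic_bug = ProductIssue(False, False, False, False, False)
print(suggest_severity(outage), suggest_severity(slow_reloads), suggest_severity(cosmetic_bug))
```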

Escalate a Support Case

If you require a support case escalation, you have two options:

- Request to escalate within the case, mentioning the business reasons.

To escalate a support incident successfully, mention your intention to escalate in the open support case. This will begin the escalation process.

- Contact your Regional Support Manager

If more attention is required, contact your regional support manager. You can find a full list of regional support managers in the How to escalate a support case article.

Resources

A collection of useful links.

Qlik Cloud Status Page

Keep up to date with Qlik Cloud's status.

Support Policy

Review our Service Level Agreements and License Agreements.

Live Chat and Case Portal

Your one stop to contact us.

Recent Documents

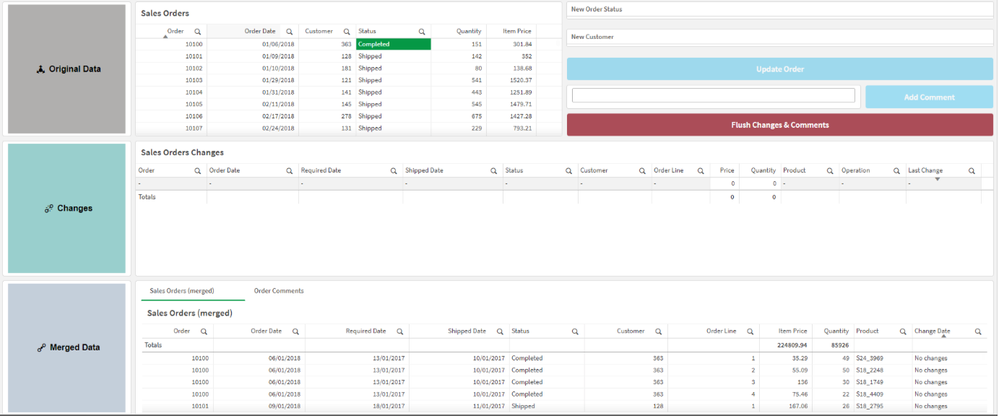

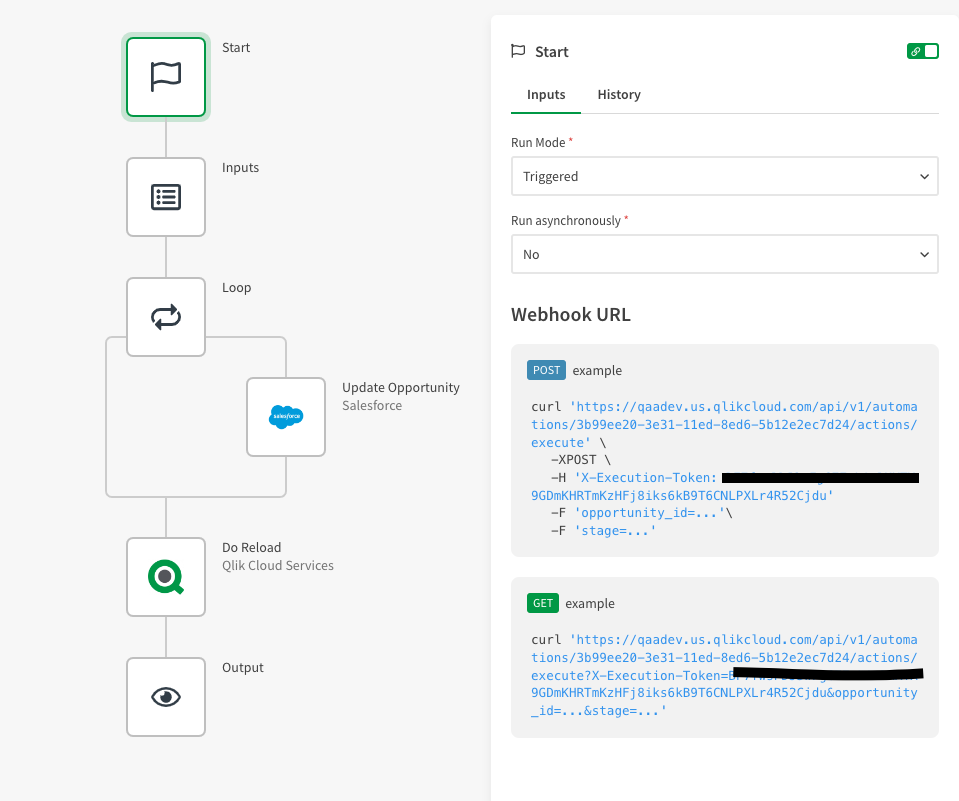

Make your Qlik Sense Sheet interactive with writeback functionality powered by Q...

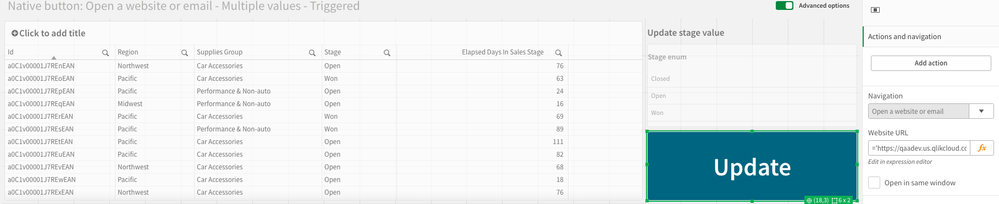

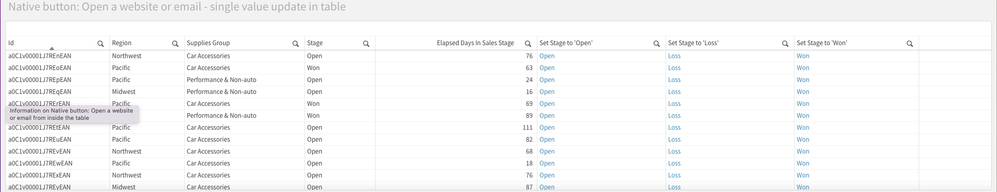

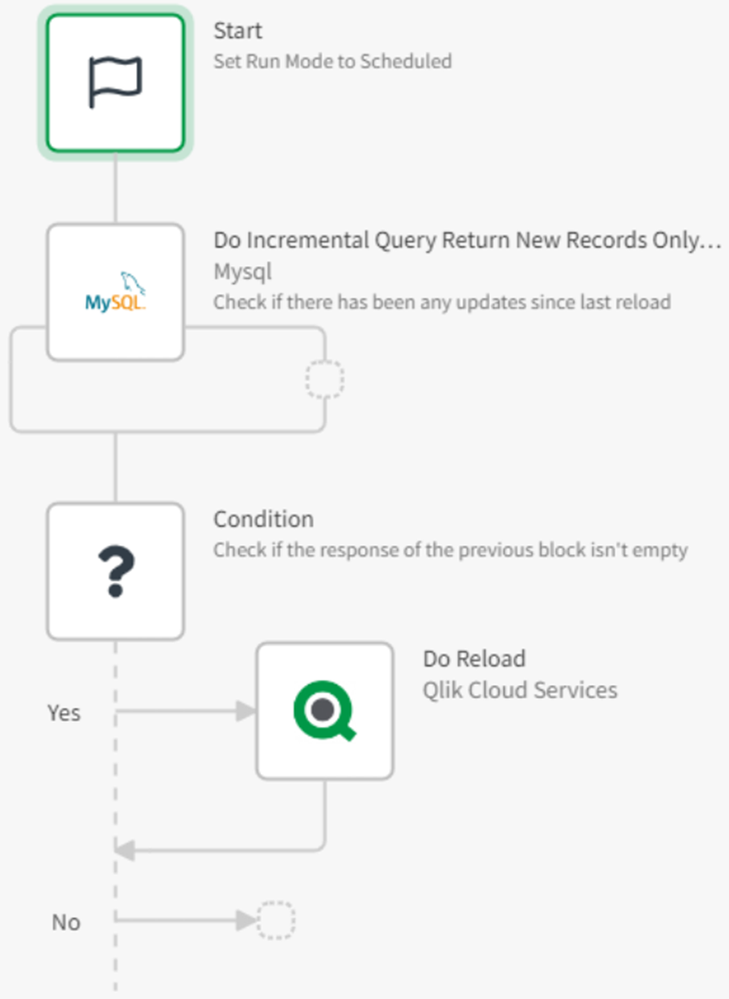

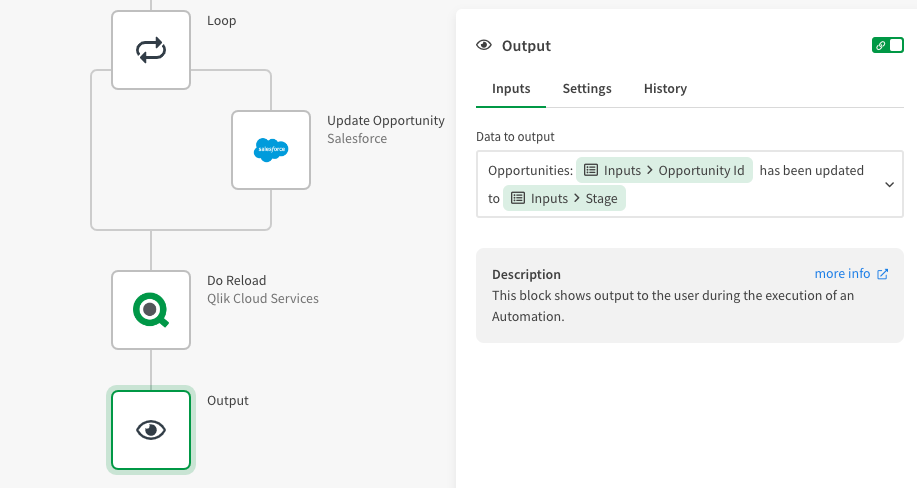

Table of content:

- Writeback use case

- Two ways to execute an automation from a Qlik Sense Sheet

- 1. Native 'execute automation' action in the Qlik button

- 2. Link a triggered automation from your Qlik Sense Sheet.

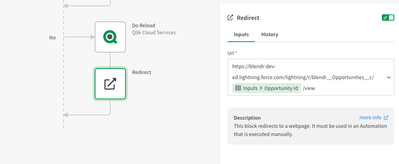

- Example 1: Open website or URL (button)

- Example 2: Add an action link in your straight table

- Example 2: Extension with input forms

- Additional Notes

- Demo application with automations

Qlik Licensing Service Reference Guide

Content

- Background

- Qlik Licensing Service (QLS)

- Signed License Key (JWT)

- What is a JSON Web Token?

- What’s in this for me as a Customer?

- Activation Using the Signed License Key

- Frequency in which license information is updated

- Data Transmitted by an Activation Request

- Activation Reply

- Analyzer Capacity License Report Process

- Data Transmitted

- Sync Reply

- User Assignment Sync Process

- Data Transmitted

- Reply from License Backend

Background

Qlik Products have generally utilized license keys to enforce license entitlements and use rights. During activation, the licensed entitlement is downloaded to the product in the format of a Licensing Enabler File (LEF). Activation requires internet connectivity to the deployment and is triggered by entering a 16-digit serial number and a corresponding control number. Offline activation is also supported using a manual LEF by copying and pasting a text file into the activation user dialog. Communication is through an http protocol.

Qlik Licensing Service (QLS)

With the February 2019 release of Qlik Sense Enterprise, Qlik introduced an alternative process for product activation. There have been several drivers for this change, including a move to the https protocol for a more secure connection between the Customer deployment and Qlik infrastructure. More information follows below.

To let Customers decide when to move, Qlik uses the Signed License Key to determine which activation method to use. Over time, Qlik Licensing Service will replace the current activation process, but for now both activation methods work.

Signed License Key (JWT)

As mentioned above, Qlik has added one additional way to activate Qlik products.

What is a JSON Web Token?

JSON Web Token (JWT) is an open standard (RFC 7519) that defines a compact and self-contained way for securely transmitting information between parties as a JSON object. This information can be verified and trusted because it is digitally signed. JWTs can be signed using a secret (with the HMAC algorithm) or a public/private key pair using RSA or ECDSA.
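To make the "compact and self-contained" structure concrete, here is a minimal sketch that splits a signed JWT into its three dot-separated segments and decodes the header and payload with the Python standard library. It deliberately does not verify the signature, so it is suitable only for inspection. The demo token, its `license` claim value, and the helper names are hypothetical and built locally for illustration; they are not a real Signed License Key.

```python
import base64
import json


def b64url_decode(segment: str) -> bytes:
    """Decode a base64url segment, restoring the padding that JWTs strip."""
    padding = "=" * (-len(segment) % 4)
    return base64.urlsafe_b64decode(segment + padding)


def b64url_encode(data: bytes) -> str:
    """Encode bytes as unpadded base64url, as used inside a JWT."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def inspect_jwt(token: str) -> tuple[dict, dict]:
    """Return (header, payload) of a signed JWT WITHOUT verifying the signature."""
    header_b64, payload_b64, _signature_b64 = token.split(".")
    header = json.loads(b64url_decode(header_b64))
    payload = json.loads(b64url_decode(payload_b64))
    return header, payload


# Hypothetical demo token; the signature segment is a placeholder, not a real signature.
demo_header = {"alg": "RS512", "typ": "JWT"}
demo_payload = {"license": "0000000000000000"}
demo_token = ".".join([
    b64url_encode(json.dumps(demo_header).encode("utf-8")),
    b64url_encode(json.dumps(demo_payload).encode("utf-8")),
    "placeholder-signature",
])

header, payload = inspect_jwt(demo_token)
print(header["alg"], payload["license"])
```

Because the header and payload are only encoded, not encrypted, anyone holding the token can read them; trust comes from checking the signature against the issuer's public key, which this sketch leaves out.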

Although JWTs can be encrypted to also provide secrecy between parties, we will focus on signed tokens. Signed tokens can verify the integrity of the claims contained within them, while encrypted tokens hide those claims from other parties. When tokens are signed using public/private key pairs, the signature also certifies that only the party holding the private key is the one that signed it. That is why we refer to this as the Signed License Key.

What’s in this for me as a Customer?

With the use of a Signed License Key, more Product and deployment offers become available:

- Use of multi-site deployments, such as connecting a Qlik Sense Enterprise site with a QlikView Server deployment using Qlik Sense Enterprise license options. This is enabled by accepting a Dual-Use offer for each QlikView deployment that should be enabled for a Unified License scenario.

- Use of multi-geo deployments, such as having Qlik Sense Enterprise sites in different locations using the same list of users.

- Use of the consumption-based license, Qlik Sense Enterprise Analyzer Capacity. This additional user license is possible to use in a single or multi-cloud scenario.

All of the above is enabled by the use of the Signed License Key. This is made possible because the local deployment syncs entitlement data with all deployments using the same Signed License Key through an online database, License Backend, hosted by Qlik within Qlik Cloud.

Activation Using the Signed License Key

Activation is initiated by entering a Signed License Key in the Control Panel. The request is performed by the Licenses service using port 443 (https protocol procedures apply).

Signed License Key (example data): a Signed License Key based on one of Qlik’s internal keys.

eyJhbGciOiJSUzUxMiIsInR5cCI6IkpXVCIsImtpZCI6ImEzMzdhZDE3LTk1ODctNGNhOS05M2I3LTBiMmI5ZTNlOWI0OCJ9.eyJqdGkiOiI2MjNhYTlhZi05NTBmLTQ3ZjctOGJmMC1mNGQzOWY0MmQ5NmMiLCJsaWNlbnNlIjoiOTk5OTAwMDAwMDAwMTI1MyJ9.YJqTct2ngqLfl2VP3jxW4RsDNK2MTL-BpJWnBdIfF5gGbJcX0hc__tfIa2ab5ZrL9h6tsZxTwgucTFiRTAOz8PaOQP7JTnhPCyrBZwpnmhvCrSHx2C-HbCARFUIueBzMg8fgvWH-3HxBuxx6jnDhekDTUbb12vBq7CySampJkgMT7QsDdUkeJy5E7O0U8yhd1RtEDeuTbeX35eIdQUN4DyJWHHPiT9qZt1AV0_Ofe1iLKxYZMa5jC0kIsVwYnRCJzibZlrLE7mSVlNitxmcm8OoUrR_ZIk8VuOkoz_qqy8N_wwrt7FcT2slWz50XzuL8TIWY9mcGIL

Frequency in which license information is updated

Assignment information (which user has which type of access assigned) is synchronized from the license service to license.qlikcloud.com every 10 minutes.

Changes to a license (such as adding additional analyzer capacity) can take up to 24 hours to be retrieved.

Data Transmitted by an Activation Request

| Data Element | Comment | Example Data |
| --- | --- | --- |
| Signed License Key | | See above |
| Cause | Initial or Update | “Initial” |
| User agent | Built from the License service version (operating system) and Product (e.g. QSEfW, QCS, QSEfE, QV) | Licenses/1.6.4 (windows) QSEfW |

Activation Reply

| Data Element | Comment | Sample Data |
| --- | --- | --- |
| License definition | Content varies based on product and entitlement | See the fragment below |

Sample data (fragment):

"name": "analyzer_time", "usage": {"class": "time", "minimum": 5}, "provisions": [{"accessType": "analyzer"}], "units": [{"count": 200, "valid": "2018-06-01/2018-12-31"}]},

"name": "professional", "usage": {"class": "assigned", "minimum": 1}, "provisions": [{"accessType": "professional"}], "units": [{"count": 10, "valid": ""}]}

Analyzer Capacity License Report Process

(Time schedule is not disclosed and includes grace time to support outages in the internet connection, a/k/a Optimistic Delegation.)

Data Transmitted

| Data Element | Comment | Sample Data |
| --- | --- | --- |
| Signed License Key | | See above |
| Array element id | Used for internal match only | 1 |
| Allotment name | Alternatives are Analyzer_Time, Core_Time | “analyzer_time” |
| Year/Month | YYYY-MM | 2018-11 |
| Consumption | For this deployment since last sync | 242 |
| Source | Hashed ID to make each deployment unique, e.g. a Qlik Sense Enterprise on Windows and a Qlik Sense Enterprise on Kubernetes will have different Source IDs | fbe89d02-6d24-4595-915e-c52ce76f2195 |
| User agent | Same construct as for the activation request | Licenses/1.6.4 (windows) QSEfW |

Sync Reply

| Data Element | Comment | Sample Data |
| --- | --- | --- |
| Total consumption | Used by the Product for enforcement. Access is denied if the quota has been exceeded. The quota is set in the LEF. Additional quota for the month can be managed as Overage in the LEF, which contains either an Overage Value (COUNT) or the value YES. Total quota for the month is calculated as licensed quota + Overage quota. If the LEF contains the value YES, there is no cap on the capacity for the Year/Month. | 12345 |

User Assignment Sync Process

Data Transmitted

| Data Element | Comment | Sample Data |
| --- | --- | --- |
| Signed License Key | | See above |
| Allotment name | Professional / Analyzer | “professional” |
| Subject | Domain / User ID, and whether this is an add or delete transaction. On delete, the subject is removed immediately. An internal id is used to secure sync to other deployments using the same Signed License Key. The internal id disappears within 60 days after a delete transaction. (This information is stored for all assigned users until the assignment is deleted, at which point it is deleted. The information is used for synchronizing assignments across deployments in order to facilitate the single-license-multi-deployment scenario. It is encrypted in transit and at rest.) | “acme\bob” (For information on how data is submitted and stored in the audit logs see here) |
| User agent | Built from the License service version (operating system) and Product (e.g. QSEfW, QCS, QSEfE, QV) | Licenses/1.6.4 (windows) QSEfW |
| Source | Hashed ID to make each deployment unique, e.g. a Qlik Sense Enterprise on Windows and a Qlik Sense Enterprise on Kubernetes will have different Source IDs | fbe89d02-6d24-4595-915e-c52ce76f2195 |
| Sync metadata | Versioning information about the subjects and list of subjects to manage the synchronization process | See the sample below |

Sync metadata sample:

{ "source": "my assignments", "bases": [{ "license": "1234 1234 1234 1234", "version": 0 }], "patches": [{ "instance": "", "version": 0, "license": "1234 1234 1234 1234", "allotment": "analyzer", "subject": "\\generated4", "created": "2019-04-18T10:01:35.024031Z" }] }

Reply from License Backend

| Data Element | Comment | Sample Data |
| --- | --- | --- |
| Signed License Key | | See above |
| Subject | Including subjects changed by other deployments | “acme\bob” |
| Sync metadata | Versioning information about the subjects and list of subjects to manage the synchronization process | See the sample below |

Sync metadata sample:

{ "bases": [{ "license": "1234 1234 1234 1234", "version": 17 }], "patches": [{ "instance": "5382018630938057025", "version": 14, "license": "1234 1234 1234 1234", "allotment": "analyzer", "subject": "ACME\\bob", "created": "2019-04-18T10:01:35.024Z", "rejection": "" }] }

This Reference Guide is intended solely for general informational purposes and its contents do not form part of the product documentation. The information in this guide is subject to change without notice. ALL INFORMATION IN THIS GUIDE IS BELIEVED TO BE ACCURATE BUT IS PRESENTED WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED. Qlik makes no commitment to deliver any future functionality and purchasing decisions should not be based upon any future expectation.

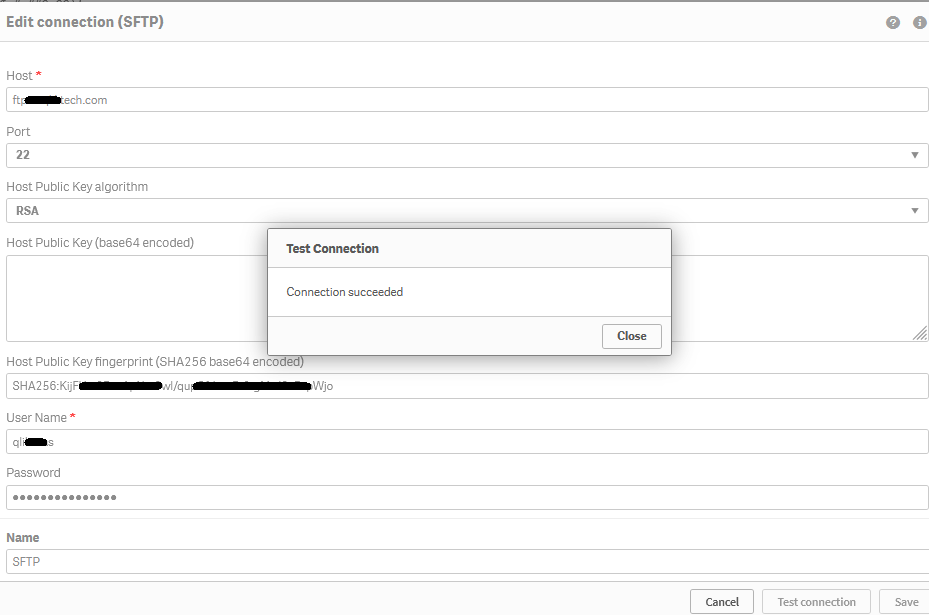

How to create an SFTP data connection in a Qlik Cloud Application using 'fingerp...

Steps

- Retrieve the SFTP server fingerprint (this example uses port 22)

- Open a Windows command prompt

- Run the following command:

sftp -P 22 username@ftptest.test.com

Where 'username' is your SFTP user name and 'ftptest.test.com' is the SFTP hostname.

- The response received will be similar to:

C:\Windows\System32>sftp -P 22 username@ftptest.test.com
The authenticity of host 'ftptest.test.com (18x.6x.15x.21x)' can't be established.
RSA key fingerprint is SHA256:KijFUxxxxxxxxxxxxxx/xxxxxxxxxxxxxxxxxapWjo

If the above does not retrieve your fingerprint, contact your SFTP server administrator, who can provide fingerprint or public key details for you.

- Copy the RSA fingerprint

- Create a Qlik Cloud Data Connection

- Open your Qlik Cloud Application and navigate to the data load editor

- Under Data Connection choose Create new connection

- Search and select SFTP

- Enter your credentials and fingerprint information

- Hostname

- (e.g. myftpserver.domain.com; do not prepend the address with ftp:// or sftp://)

- Username

- Password

- Insert fingerprint

- Click Test connection

If there are connectivity issues: to request data from your data connections through a firewall, you need to add all three underlying IP addresses for your region to your firewall's allow list. These addresses are static and will not change. See Allowlisting domain names and IP addresses.
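As a cross-check on the values entered in the steps above, the sketch below shows how an OpenSSH-style `SHA256:` fingerprint is derived from the base64 key blob of a public key line (the second field of a line such as `ssh-rsa AAAA...`), and how a scheme prefix can be stripped from the hostname. The function names and the demo key blob are hypothetical; the blob is not a real server key, so the printed fingerprint is for illustration only.

```python
import base64
import hashlib


def fingerprint_from_key_blob(blob_b64: str) -> str:
    """Return the OpenSSH-style SHA256 fingerprint of a base64-encoded public key blob."""
    blob = base64.b64decode(blob_b64)
    digest = hashlib.sha256(blob).digest()
    # OpenSSH prints the digest as base64 without the trailing '=' padding.
    return "SHA256:" + base64.b64encode(digest).decode("ascii").rstrip("=")


def strip_scheme(hostname: str) -> str:
    """Remove a leading ftp:// or sftp:// so only the bare hostname remains."""
    for prefix in ("sftp://", "ftp://"):
        if hostname.lower().startswith(prefix):
            return hostname[len(prefix):]
    return hostname


# Hypothetical placeholder blob; a real one comes from your SFTP server's public key.
demo_blob_b64 = base64.b64encode(b"placeholder public key bytes").decode("ascii")
demo_fingerprint = fingerprint_from_key_blob(demo_blob_b64)

print(demo_fingerprint)
print(strip_scheme("sftp://myftpserver.domain.com"))
```

If the fingerprint computed from the key your administrator provides differs from the one shown by the sftp client, the connector is likely to report a mismatch as well.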

Other Diagnostics:

Error in SFTP connector

- Error shows public key mismatch error in Qlik SFTP connector

Diagnostic

- Open Command Prompt and enter "ssh-keyscan -t rsa <host ip or name> | ssh-keygen -lf -"

Example: (insert a valid SFTP server host IP)

- C:\Windows\System32>ssh-keyscan -t rsa 192.168.xxx.255 | ssh-keygen -lf -

Result:

- "(stdin) is not a public key file"

In case of this or other SFTP connection errors, please work with the SFTP server administrator or SFTP server vendor in order to enable the generation of a publicly available fingerprint.

Related Information:

The information in this article is provided as-is and to be used at own discretion. Depending on tool(s) used, customization(s), and/or other factors ongoing support on the solution below may not be provided by Qlik Support.

How to: Use Qlik Application Automation to manage users

This article provides an overview of how to manage users using Qlik Application Automation. This approach can be useful when migrating from QlikView, or Qlik Sense Client Managed, to Qlik Sense Cloud when security concerns prevent the usage of Qlik-CLI and PowerShell scripting.

You will find an automation attached to this article that works with the Microsoft Excel connector. More information on importing automation can be found here.

Content

- Prerequisites

- Sheet Configuration

- Automation Configuration

- Running the Automation

- Considerations

- Related Content

Prerequisites

- Access to a Qlik Cloud tenant

- User assigned the role of Tenant Admin

- Office 365 account (for Microsoft Excel)

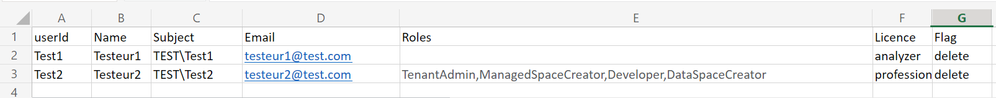

Sheet Configuration

In this example, we use a Microsoft Excel file as a source file to manage users. A sheet name, for example, Users, must be added and this must also be provided as input when running the automation. The sheet must also contain these headers: userId, Name, Subject, Email, Roles, Licence, and Flag.

Example of sheet configuration:

If users are to be created the Flag column must be set to create. If users are to be deleted, there's no need to include roles, but Flag must be set to delete.

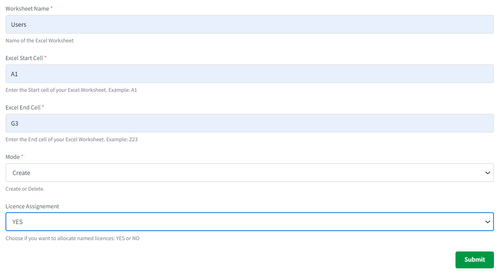
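Before running the automation, it can help to sanity-check the sheet contents. The following is a minimal sketch, assuming the sheet has already been read into a list of rows (first row being the headers); the function name and demo data are hypothetical, and treating the Flag value case-insensitively is an assumption rather than documented behavior.

```python
REQUIRED_HEADERS = ["userId", "Name", "Subject", "Email", "Roles", "Licence", "Flag"]
VALID_FLAGS = {"create", "delete"}


def validate_sheet(rows: list[list[str]]) -> list[str]:
    """Return a list of human-readable problems; an empty list means the sheet looks usable."""
    if not rows:
        return ["sheet is empty"]
    headers = rows[0]
    missing = [h for h in REQUIRED_HEADERS if h not in headers]
    if missing:
        return ["missing headers: " + ", ".join(missing)]
    flag_idx = headers.index("Flag")
    problems = []
    # Data rows start on sheet row 2, directly below the header row.
    for row_number, row in enumerate(rows[1:], start=2):
        flag = row[flag_idx].strip().lower() if flag_idx < len(row) else ""
        if flag not in VALID_FLAGS:
            problems.append(f"row {row_number}: Flag must be 'create' or 'delete', got {flag!r}")
    return problems


# Hypothetical demo data.
demo_rows = [
    REQUIRED_HEADERS,
    ["", "Ada Example", "acme\\ada", "ada@example.com", "Developer", "professional", "create"],
    ["", "Bob Example", "acme\\bob", "bob@example.com", "", "", "remove"],
]
problems = validate_sheet(demo_rows)
for problem in problems:
    print(problem)
```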

Automation Configuration

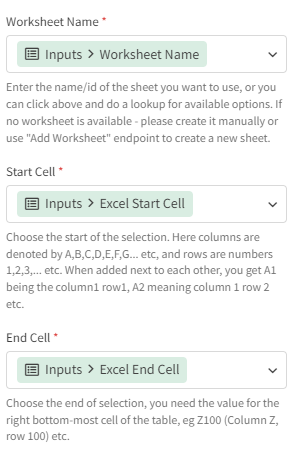

- Add an Input block that takes the name of the worksheet from which data should be read as input. You also need to specify the first and last cell to read data from, as well as if users are to be created or deleted.

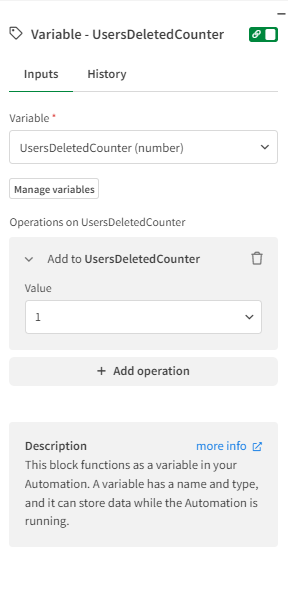

- Add two variables of the type number: one to keep track of created users and one to keep track of deleted users. These variables will be used to update the run title when the automation has been completed. Only one of them will be used in a given run, since only one of the modes can be used (Create or Delete). Let's call the variables UsersCreatedCounter and UsersDeletedCounter. Initially set these variables to 0.

- Add the Get Current Tenant Id block from Qlik Cloud Services Connector.

- Add the List Rows With Headers block from the Microsoft Excel connector to read the values that have been configured in the Excel sheet.

- Use the "do lookup" function to find the Drive ID and Item ID of the file you will be using as a source.

- Configure the other fields to get the values from the input fields.

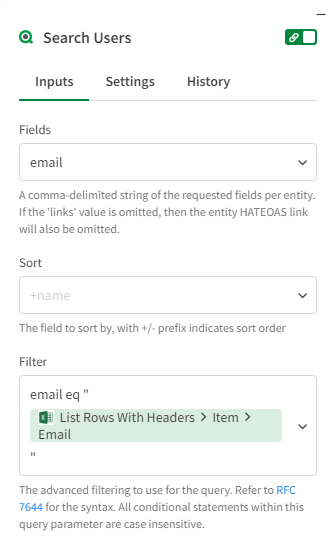

- Inside of the List Rows With Headers loop add the Search Users block from the Qlik Cloud Services connector. Configure Fields and Filter input parameters as shown below.

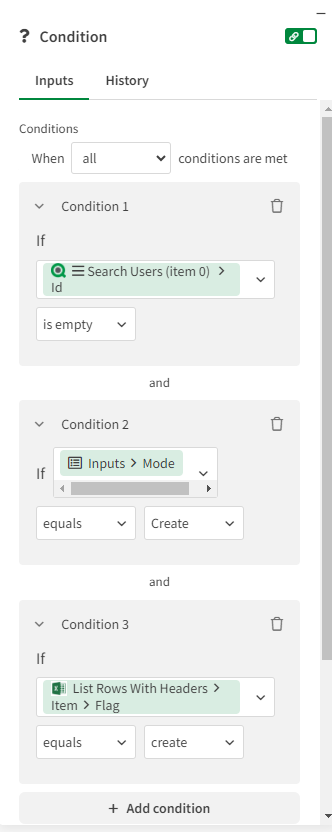

- Add a Condition block to check if the user exists, if we're in Create mode, and if the Flag column in the Excel file is set to create.

- If the conditions are true, add the below variables by initially setting all these variables to empty:

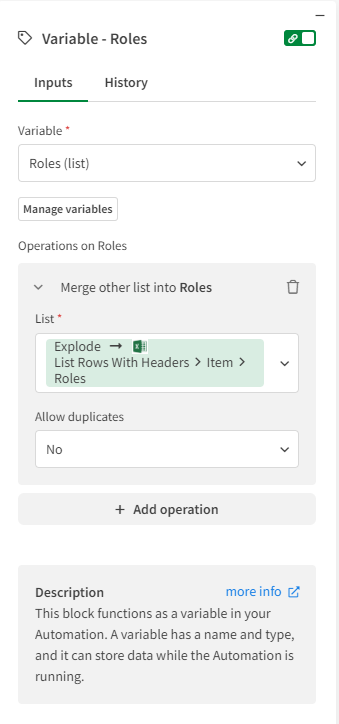

- Roles: List variable to store the list of roles that need to be assigned to the user. Configure the variable by clicking on the Add operation button and selecting the 'Merge other list into Roles' option. In the List field, use the explode formula to convert the comma-separated roles from the Roles column in the Excel file into a list.

- AssignedRoles: Variable of type object to store the role ID as key-value pair.

- AssignedRolesList: List variable to store the AssignedRoles object variable as an item.

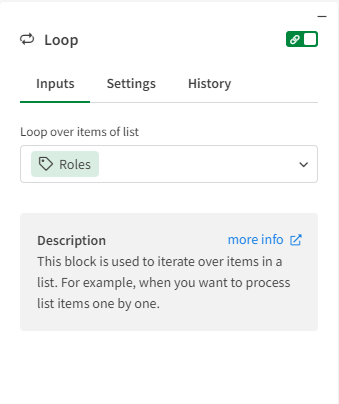

- Add a Loop block to loop through the Roles list variable where the roles of the user are stored from the Excel file.

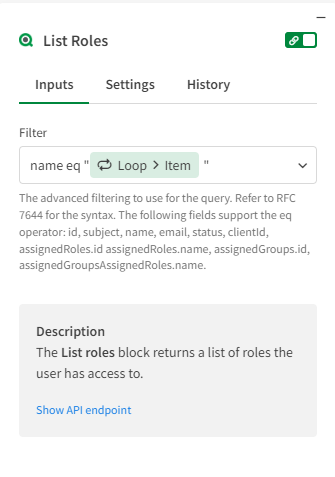

- Add the List Roles block from the Qlik Cloud Services connector to retrieve the IDs of the roles. Configure the Filter input parameter as shown below.

- Add AssignedRoles object variable to store role ID. Configure the variable by clicking on Add operation button and selecting the 'Set key/values of the AssignedRoles' option. Click on Add key/value button and use the ID as the key and the ID from the output of the List Roles block as the value.

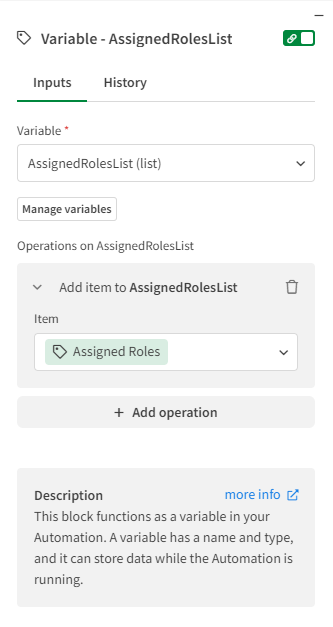

- Add the AssignedRolesList variable to store the AssignedRoles object variable as an item to this list variable. To do this click on Add operation button and select the 'Add item to AssignedRolesList' option. In the Item field map the AssignedRoles object variable.

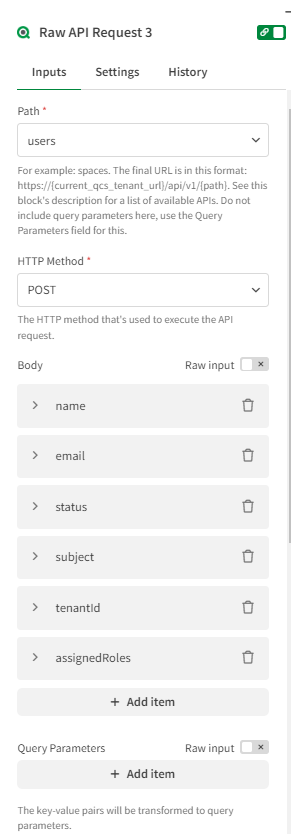

- Add the Raw API Request block from the Qlik Cloud Services connector to create the user. Configure the block as shown below.

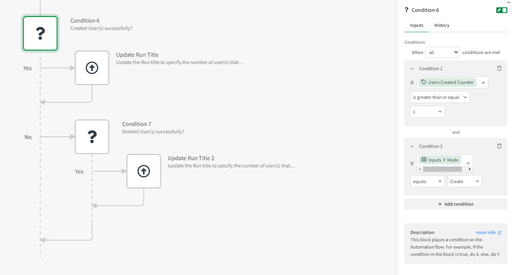

- Add the Condition block to check if the user has been created. If the condition is true increment the UsersCreatedCounter variable by 1.

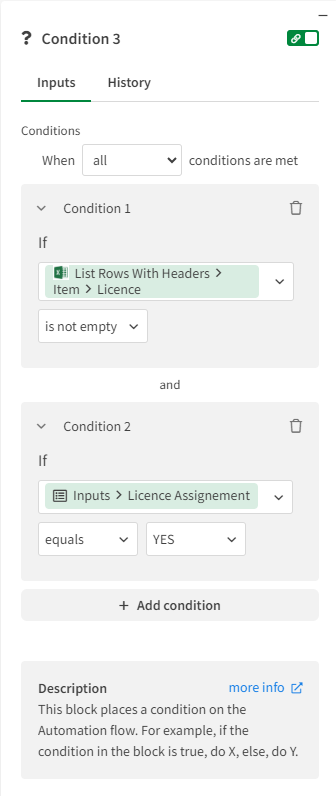

- Add the Condition block to check if we need to allocate a license to the user created in the above step.

- If the conditions are true, add the Raw API Request block to allocate a license to the user. Configure the input parameters as shown below.

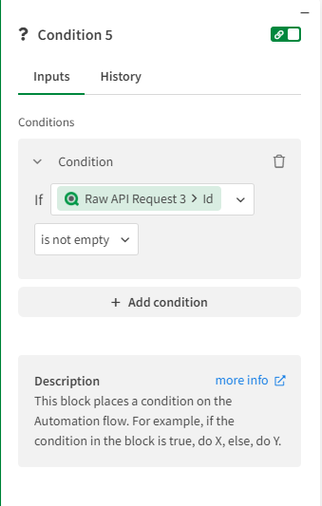

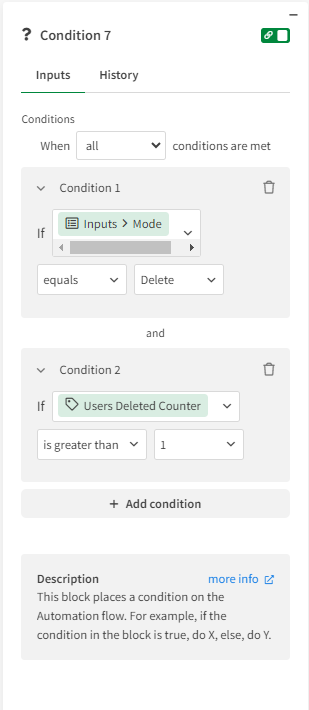

- If the first condition in the List Rows With Headers loop is false, then use another Condition block to find out if we are in Delete mode, if the flag column in the Excel file is set to delete, and if the user exists.

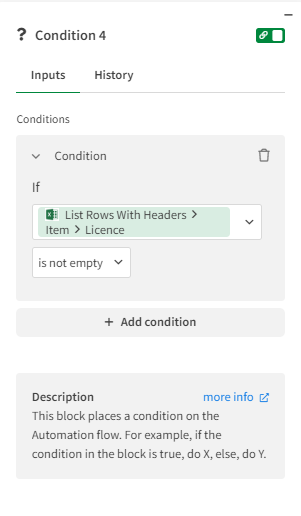

- If the conditions are true, add another Condition block to check if the user has a named license.

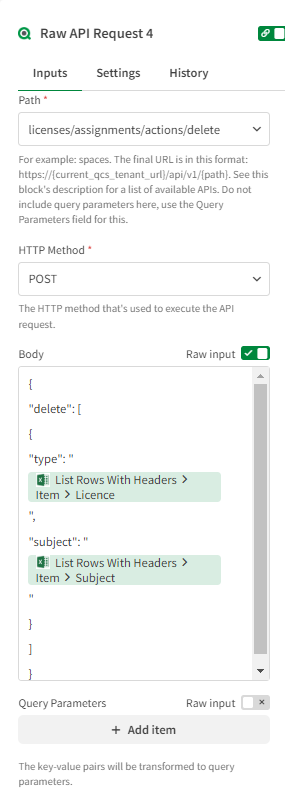

- If the condition outcome evaluates to true, add the Raw API Request block to deallocate the user's license. Configure the input parameters as shown below.

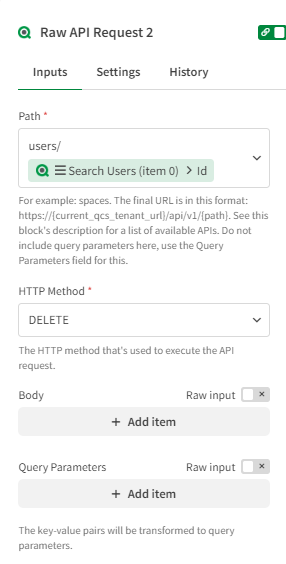

- Add the Raw API Request block to delete the user. Configure the input parameters as shown below.

- Add the UsersDeletedCounter variable and increment it by 1.

- The last part of this automation is to check the mode and update the automation's run title to display how many users have been created or deleted.
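The branching described in the steps above can be summarized in a few lines of plain Python. This is only a sketch of the control flow, not the automation itself: it makes no API calls, the helper names and demo rows are hypothetical, users are looked up by email as an assumption, and it assumes a user is created only when no matching user already exists.

```python
def explode_roles(cell: str) -> list[str]:
    """Split a comma-separated Roles cell into a clean list, mirroring the explode formula."""
    return [role.strip() for role in cell.split(",") if role.strip()]


def plan_action(mode: str, flag: str, user_exists: bool) -> str:
    """Decide what to do with one sheet row: 'create', 'delete' or 'skip'."""
    mode, flag = mode.strip().lower(), flag.strip().lower()
    if mode == "create" and flag == "create" and not user_exists:
        return "create"
    if mode == "delete" and flag == "delete" and user_exists:
        return "delete"
    return "skip"


# Hypothetical demo data standing in for the Excel rows and the Search Users result.
rows = [
    {"Email": "ada@example.com", "Roles": "Developer, Analytics Admin", "Flag": "create"},
    {"Email": "bob@example.com", "Roles": "", "Flag": "delete"},
    {"Email": "eve@example.com", "Roles": "Developer", "Flag": "create"},
]
existing_users = {"bob@example.com", "eve@example.com"}
mode = "Create"

users_created = 0
users_deleted = 0
planned_roles = {}
for row in rows:
    action = plan_action(mode, row["Flag"], row["Email"] in existing_users)
    if action == "create":
        planned_roles[row["Email"]] = explode_roles(row["Roles"])
        users_created += 1
    elif action == "delete":
        users_deleted += 1

# Only one counter is relevant per run, depending on the mode.
if mode.lower() == "create":
    run_title = f"Users created: {users_created}"
else:
    run_title = f"Users deleted: {users_deleted}"
print(run_title)
```

Keeping the decision in one small function makes it easy to see that a row is skipped whenever the mode, the Flag value, and the user's existence do not all line up.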

Running the Automation

When running the automation, you must provide input, including the name of the worksheet to read data from. You also need to specify the first and last cell to read data from, as well as whether users are to be created or deleted. Example:

| Input | Value |
| --- | --- |
| Worksheet Name | Users |
| Excel Start Cell | A1 |
| Excel End Cell | G5 |
| Mode | Create |

Considerations

- A multitenant scenario can be supported by utilizing the Qlik Platform Operations connector instead of the Qlik Cloud Services connector.

- Google Sheets could be used instead of Microsoft Excel.

The information in this article is provided as-is and to be used at own discretion. Depending on tool(s) used, customization(s), and/or other factors ongoing support on the solution below may not be provided by Qlik Support.

Related Content

How to manage space membership (users)

Environment

How To: Configure Qlik Sense Enterprise SaaS to use Azure AD as an IdP. Now with...

This article provides step-by-step instructions for implementing Azure AD as an identity provider for Qlik Cloud. We cover configuring an App registration in Azure AD and configuring group support using MS Graph permissions.

It guides the reader through adding the necessary application configuration in Azure AD and Qlik Sense Enterprise SaaS identity provider configuration so that Qlik Sense Enterprise SaaS users may log into a tenant using their Azure AD credentials.

Content:

- Prerequisites

- Helpful vocabulary

- Considerations when using Azure AD with Qlik Sense Enterprise SaaS

- Configure Azure AD

- Create the app registration

- Create the client secret

- Add claims to the token configuration

- Add group claim

- Collect Azure AD configuration information

- Configure Qlik Sense Enterprise SaaS IdP

- Recap

- Addendum

- Related Content (VIDEO)

Prerequisites

- A Microsoft Azure account

- A Microsoft Azure Active Directory instance

- A Qlik Sense Enterprise SaaS tenant

- The BYOIDP feature in your Qlik license is set to YES. Contact customer support to find out if you are entitled to bring your own identity provider to your tenant.

Helpful vocabulary

Throughout this tutorial, some words will be used interchangeably.

- Qlik Sense Enterprise SaaS: Qlik Sense hosted in Qlik’s public cloud

- Microsoft Azure Active Directory: Azure AD

- Tenant: Qlik Sense Enterprise SaaS tenant or instance

- Instance: Microsoft Azure AD

- OIDC: OpenID Connect

- IdP: Identity Provider

Considerations when using Azure AD with Qlik Sense Enterprise SaaS

- Qlik Sense Enterprise SaaS allows customers to bring their own identity provider to provide authentication to the tenant using the OpenID Connect (OIDC) specification (https://openid.net/connect/).

- Given that OIDC is a specification and not a standard, vendors (e.g. Microsoft) may implement the capability in ways that are outside of the core specification. In this case, Microsoft Azure AD OIDC configurations do not send standard OIDC claims like email_verified. The Azure AD configuration in Qlik Sense Enterprise SaaS includes an advanced option to set email_verified to true for all users who log into the tenant.

- The Azure AD configuration in Qlik Sense Enterprise SaaS includes special logic for contacting Microsoft Graph API to obtain friendly group names. Whether those groups originate from an on-premises instance of Active Directory and sync to Azure AD through Azure AD Connect or from creation within Azure AD, the friendly group name will be returned from the Graph API and added to Qlik Sense Enterprise SaaS.

Configure Azure AD

Create the app registration

- Log into Microsoft Azure by going to https://portal.azure.com.

- Click on the Azure Active Directory icon in the browser, or search for "Azure Active Directory" in the search bar at the top. The overview page for the active directory will appear.

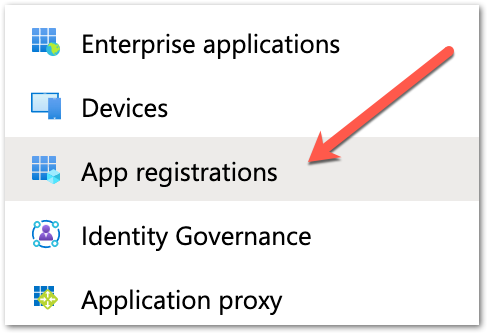

- Click the App registrations item in the menu to the left.

- Click the New registration button at the top of the detail window. The application registration page appears.

- Add a name in the Name section to identify the application. In this example, the name of the hostname of the tenant is entered along with the word OIDC.

- The next section contains radio buttons for selecting the Supported account types. In this example, the default – Accounts in this organizational directory only – is selected.

- The last section is for entering the redirect URI. From the dropdown list on the left, select “web” and then enter the callback URL from the tenant: https://<tenant hostname>/login/callback.

The tenant hostname required in this context is the original hostname provided to the Qlik Sense Enterprise SaaS tenant.

Using the Alias hostname will cause the IdP handshake to fail.

- Complete the registration by clicking the Register button at the bottom of the page.

- Click on the Authentication menu item on the left side of the screen.

- In the middle of the page, the reference to the callback URI appears. There is no additional configuration required on this page.
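The redirect URI used in the registration steps above follows a fixed pattern, so it can be derived from the tenant hostname. The following is a minimal sketch; the function name and the hostname `example.us.qlikcloud.com` are placeholders chosen for illustration, not values from this article.

```python
from urllib.parse import urlunsplit


def callback_uri(tenant_hostname: str) -> str:
    """Build https://<tenant hostname>/login/callback from a bare hostname."""
    host = tenant_hostname.strip().lower()
    # Reject anything that is not a bare hostname (scheme, port, or path included).
    if not host or "/" in host or ":" in host:
        raise ValueError("expected a bare hostname, for example 'example.us.qlikcloud.com'")
    return urlunsplit(("https", host, "/login/callback", "", ""))


# Hypothetical tenant hostname; use the original hostname, not the alias.
print(callback_uri("example.us.qlikcloud.com"))
```

Passing the original tenant hostname rather than the alias matters here, since the alias causes the IdP handshake to fail.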

Create the client secret

- Click on the Certificates and secrets menu item on the left side of the screen.

- In the center of the Certificates and secrets page, there is a section labeled Client secrets with a button labeled New client secret. Click the button.

- In the dialog that appears, enter a description for the client secret and select an expiration time. Click the Add button after entering the information.

- Once a client secret is added, it will appear in the Client secrets section of the page.

Copy the value of the client secret and paste it somewhere safe.

After saving the configuration, the value will become hidden and unavailable.

Add claims to the token configuration

- Click on the Token configuration menu item on the left side of the screen.

- The Optional claims window appears with two buttons. One for adding optional claims, and another for adding group claims. Click on the Add optional claim button.

- For optional claims, select the ID token type, and then select the claims to include in the token that will be sent to the Qlik Sense Enterprise SaaS tenant. In this example, ctry, email, tenant_ctry, upn, and verified_primary_email are checked. None of these optional claims are required for the tenant identity provider to work properly, however, they are used later on in this tutorial.

- Some optional claims may require adding OpenID Connect scopes from Microsoft Graph to the application configuration. Click the check mark to enable and click Add.

- The claims will appear in the window.

Add group claim

- Click on the API permissions menu item on the left side of the screen.

- Observe the configured permissions that were set while adding optional claims.

- Click the Add a permission button and select the Microsoft Graph option in the Request API permissions box that appears. Click on the Microsoft Graph banner.

- Click on Delegated permissions. The Select permissions search and the OpenID permissions list appear.

In the OpenID permissions section, check email, openid, and profile. In the Users section, check User.Read.

- In the Select permissions search, enter the word group. Expand the GroupMember option and select GroupMember.Read.All. This allows users logging into Qlik Sense Enterprise SaaS through Azure AD to read the group memberships they are assigned.

- After making the selection, click the Add permissions button.

- The added permissions will appear in the list. However, the GroupMember.Read.All permission requires admin consent to work with the app registration. Click the Grant button and accept the message that appears.

Failing to grant consent to GroupMember.Read.All may result in errors authenticating to Qlik using Azure AD. Make sure to complete this step before moving on.

Collect Azure AD configuration information

- Click on the Overview menu item to return to the main App registration screen for the new app. Copy the Application (client) ID unique identifier. This value is needed for the tenant’s IdP configuration.

- Click on the Endpoints button in the horizontal menu of the overview.

- Copy the OpenID Connect metadata document endpoint URI. This is needed for the tenant’s IdP configuration.
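Before pasting the metadata endpoint into the tenant, it can be useful to confirm that the document it returns looks like an OIDC discovery document. The sketch below checks a few fields defined by OpenID Connect Discovery in a document that has already been downloaded. The sample document and its `login.example.test` URLs are invented placeholders, not real Azure AD values.

```python
import json

# A few fields that OpenID Connect Discovery defines for provider metadata.
EXPECTED_FIELDS = ("issuer", "authorization_endpoint", "token_endpoint", "jwks_uri")


def missing_discovery_fields(metadata: dict) -> list:
    """Return the expected discovery fields that are absent or empty."""
    return [field for field in EXPECTED_FIELDS if not metadata.get(field)]


# Hypothetical metadata document, saved from the endpoint URI copied above.
sample = json.loads("""
{
  "issuer": "https://login.example.test/tenant/v2.0",
  "authorization_endpoint": "https://login.example.test/tenant/oauth2/v2.0/authorize",
  "token_endpoint": "https://login.example.test/tenant/oauth2/v2.0/token"
}
""")

print(missing_discovery_fields(sample))
```

An empty list suggests the copied URI points at a usable metadata document; a non-empty list usually means a different endpoint was copied.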

Configure Qlik Sense Enterprise SaaS IdP

- With the configuration complete and required information in hand, open the tenant’s management console and click on the Identity provider menu item on the left side of the screen.

- Click the Create new button on the upper right side of the main panel.

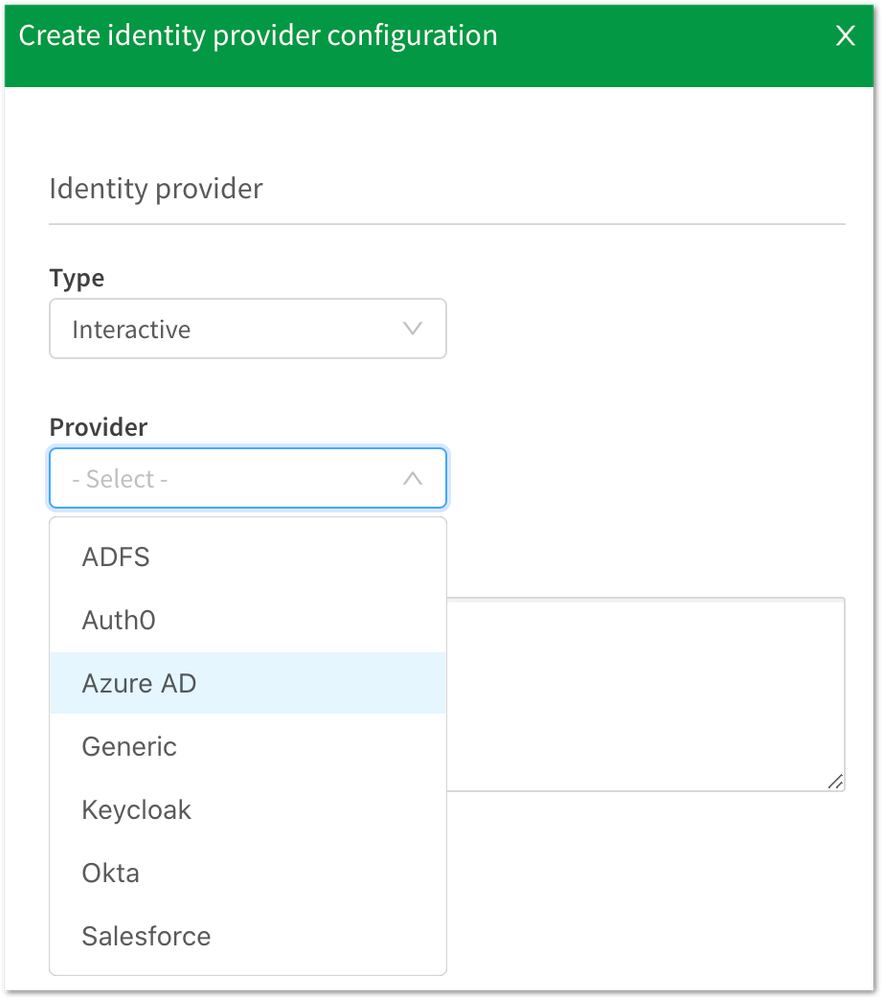

- Select Interactive from the Type drop-down menu item, and select 'AZURE AD' from the Provider drop-down menu item.

- Scroll down to the Application credentials section of the configuration panel and enter the following information:

- ADFS discovery URL: This is the endpoint URI copied from Azure AD.

- Client ID: This is the application (client) id copied from Azure AD.

- Client secret: This is the value copied and pasted to a safe location from the Certificates & secrets section in Azure AD.

- The Realm is an optional value used if you want to enter what is commonly referred to as the Active Directory domain name.

- Scroll down to the Claims mapping section of the configuration panel. There are five textboxes to confirm or alter.

- The sub field is the subject of the token sent from Azure AD. This is normally a unique identifier and will represent the UserID of the user in the tenant. In this example, the value “sub” is left and appid is removed. To use a different claim from the token, replace the default value with the name of the desired attribute value.

- The name field is the “friendly” name of the user to be displayed in the tenant. For Azure AD, change the attribute name from the default value to “name”.

- In this example, the groups, email, and client_id attributes are configured properly, therefore, they do not need to be altered.

In this example, I had to change the email claim to upn to obtain the user's email address from Azure AD. Your results may vary.

- Scroll down to the Advanced options and expand the menu. Slide the Email verified override option ON to ensure Azure AD validation works. Scope does not have to be supplied.

- The Post logout redirect URI is not required for Azure AD because upon logging out the user will be sent to the Azure log out page.

- Click the Save button at the bottom of the configuration to save the configuration. A message will appear confirming intent to create the identity provider. Click the Save button again to start the validation process.

- The validation procedure begins by redirecting the person configuring the IdP to the login page for the IdP.

- After successful authentication, Azure AD will confirm that permission should be granted for this user to the tenant. Click the Accept button.

- If the validation fails, the validation procedure will return a window like the following.

- If the validation succeeds, the validation procedure will return a mapped claims window. If the validation states it cannot map the user's email address, it is most likely because the email_verified switch has not been turned on. Go ahead and confirm, move through the remaining steps, and update the configuration as per the previous step. Re-run the validation to map the email.

- After confirming the information is correct, the account used to validate the IdP may be elevated to a TenantAdmin role. It is strongly recommended to make sure the box is checked before clicking continue.

- The next to last screen in the configuration will ask to activate the IdP. By activating the Azure AD IdP in the tenant, any other identity providers configured in the tenant will be disabled.

- Success.

- Log out of the tenant and re-authenticate using the new identity provider connection. Once logged in, change the URL in the address bar to point to https://<tenanthostname>/api/v1/diagnose-claims. This will return the JSON of the claims information Azure AD sent to the tenant. Here is a slightly redacted example.

- Verify groups resolve properly by creating a space and adding members. You should see friendly group names to choose from.
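The claims mapping configured earlier can be pictured as a lookup from tenant fields to token claim names. The sketch below illustrates the idea only; it is not how the tenant implements the mapping. The `upn` mapping for email follows the change described in this example. The claim names used for groups and client_id, and all sample values, are assumptions made for illustration.

```python
def apply_claims_mapping(token_claims: dict, mapping: dict) -> dict:
    """Look up each tenant field in the token using the configured claim name."""
    return {field: token_claims.get(claim) for field, claim in mapping.items()}


def unmapped_fields(mapped: dict) -> list:
    """Return the tenant fields that received no value from the token."""
    return sorted(field for field, value in mapped.items() if value in (None, "", []))


# Tenant field -> claim name. "groups" and "client_id" names are assumed defaults.
mapping = {"sub": "sub", "name": "name", "email": "upn",
           "groups": "groups", "client_id": "client_id"}

# Hypothetical claims, for example copied by hand from the diagnose-claims output.
token_claims = {"sub": "abc123", "name": "Ada Example",
                "upn": "ada@example.test", "groups": ["Finance"]}

mapped = apply_claims_mapping(token_claims, mapping)
print(mapped["email"], unmapped_fields(mapped))
```

A field that shows up as unmapped points at the claim name to revisit in the Claims mapping section.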

Recap

While not hard, configuring Azure AD to work with Qlik Sense Enterprise SaaS is not trivial. Most of the legwork to make this authentication scheme work is on the Azure side. However, it's important to note that without making some small tweaks to the IdP configuration in Qlik Sense you may receive a failure or two during the validation process.

Addendum

For many of you, adding Azure AD means you potentially have a bunch of clean up you need to do to remove legacy groups. Unfortunately, there is no way to do this in the UI but there is an API endpoint for deleting groups. See Deleting guid group values from Qlik Sense Enterprise SaaS for a guide on how to delete groups from a Qlik Sense Enterprise SaaS tenant.

Related Content (VIDEO)

Qlik Cloud: Configure Azure Active Directory as an IdP

-

Qlik Cloud Monitoring Apps Workflow Guide

Installing, upgrading, and managing the Qlik Cloud Monitoring Apps has just gotten a whole lot easier! With two new Qlik Application Automation templates coupled with Qlik Data Alerts, you can now:

- Install/update the apps with a fully guided, click-through installer using an out-of-the-box Qlik Application Automation template.

- Programmatically rotate the API key required for the data connection on a schedule using an out-of-the-box Qlik Application Automation template. This ensures the data connection is always operational.

- Get alerted whenever a new version of a monitoring app is available using Qlik Data Alerts. Repeat Step 1 to complete the update.

The above allows you to deploy the monitoring apps to your tenant with a hands-off approach. Dive into the individual components below.

Content:

- Part One: 'Qlik Cloud Monitoring Apps Installer' template overview

- Part Two: 'Qlik Cloud Monitoring Apps API Key Rotator' template overview

- Part Three: How to setup a Qlik Alert to know when an app is out of date

- A Qlik Cloud Monitoring Apps Workflow Demo (Video)

- FAQ

Part One: 'Qlik Cloud Monitoring Apps Installer' template overview

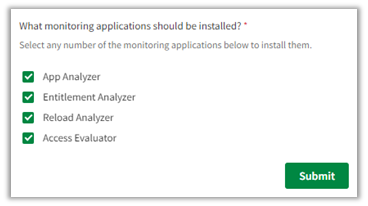

This automation template is a fully guided installer/updater for the Qlik Cloud Monitoring Applications, including but not limited to the App Analyzer, Entitlement Analyzer, Reload Analyzer, and Access Evaluator applications. Leverage this automation template to quickly and easily install and update these or a subset of these applications with all their dependencies. The applications themselves are community-supported. They are provided through Qlik's Open-Source Software GitHub and are therefore subject to Qlik's open-source guidelines and policies.

For more information, refer to the GitHub repository.

Features

- Can install/upgrade all or select apps.

- Can create or leverage existing spaces.

- Programmatically handles pre-requisite settings, roles, and entitlements.

- Installs latest versions from Qlik’s OSS GitHub.

- Creates required API key.

- Creates required analytics data connection.

- Can create custom reload schedules.

- Can reload post-install.

- Tags apps appropriately to track which are installed and their respective versions.

- Supports both user and capacity-based subscriptions

Click-through installer:

Flow:

Note that if the monitoring applications have been installed manually (i.e., not through this automation), they will not be detected as existing, and the automation will install new copies side-by-side. Any subsequent executions of the automation will detect the newly installed monitoring applications and check their versions. This is because the applications are tagged with "QCMA - {appName}" and "QCMA - {version}" during the installation process through the automation. Manually installed applications do not have these tags and therefore are not detected.
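The tag-based detection described above can be sketched as follows. This is an illustration of the idea, not the automation's actual logic. The exact text of the version tag (shown here as `QCMA - 5.1.3`) is an assumption, and the function name is invented.

```python
TAG_PREFIX = "QCMA - "


def installed_version(tags, app_name):
    """Return the version tag value if the app carries the QCMA name tag, else None."""
    values = [tag[len(TAG_PREFIX):] for tag in tags if tag.startswith(TAG_PREFIX)]
    if app_name not in values:
        return None  # no name tag: treated as not installed by the automation
    others = [value for value in values if value != app_name]
    return others[0] if others else None


# An app installed through the automation carries both tags.
print(installed_version(["QCMA - App Analyzer", "QCMA - 5.1.3"], "App Analyzer"))
# A manually installed copy has no QCMA tags and is not detected.
print(installed_version(["monitoring"], "App Analyzer"))
```

This also shows why a manually installed copy ends up next to a second, tagged copy: without the name tag there is nothing for the automation to match on.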

Part Two: 'Qlik Cloud Monitoring Apps API Key Rotator' template overview

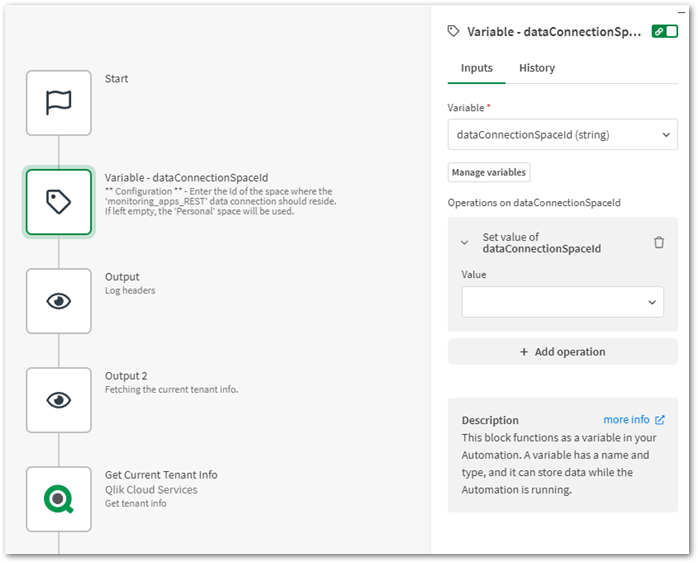

This template is intended to be used alongside the Qlik Cloud Monitoring Apps for user-based subscriptions template. This automation provides the ability to keep the API key and associated data connection used for the Qlik Cloud Monitoring Apps up to date on a scheduled basis. Simply input the space Id where the monitoring_apps_REST data connection should reside, and the automation will recreate both the API key and data connection regularly. Ensure that the cadence of the automation’s schedule is less than the expiry of the API key.

Configuration

Enter in the Id of the space where the monitoring_apps_REST data connection should reside.

Ensure that this automation is run off-hours from your scheduled monitoring application reloads so it does not disrupt the reload process.

Part Three: How to setup a Qlik Alert to know when an app is out of date

Each Qlik Cloud Monitoring App has the following two variables:

- vLatestVersion: the latest version of the app released on Qlik’s OSS GitHub.

- vIsLatestVersion: a Boolean of whether the app’s current version is equal to the latest version of the app released on Qlik’s OSS GitHub (1 if true, 0 if false).

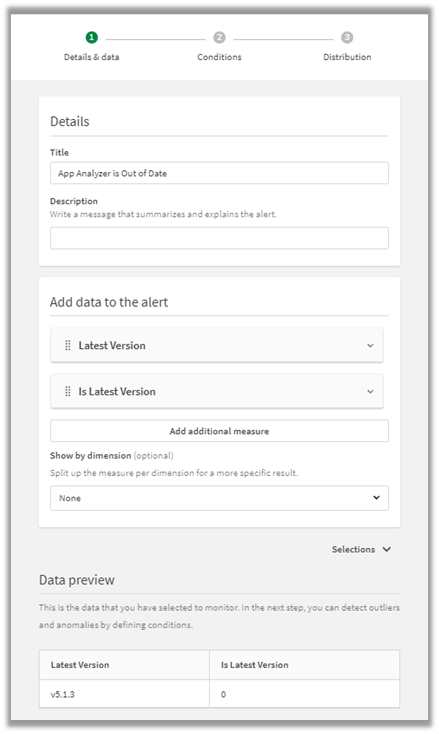

With these variables, we can create a new Qlik Data Alert on a per-app basis. For each monitoring app that you want to be notified on if it falls out of date:

- Right-click on a chart in the app, select Alerts and then Create new alert.

- Clear out the defaults and provide the alert with a name such as <Monitoring App Name> is Out of Date.

- Select Add measure and select the fx icon to enter a custom expression. Enter the expression '$(vLatestVersion)'. Ensure it is wrapped in single quotes, then provide the label for the expression Latest Version.

- Select Add measure and select the fx icon to enter a custom expression. Enter the expression $(vIsLatestVersion), then provide the label for the expression Is Latest Version.

- Confirm the Details & data tab resembles the following:

- Navigate to the next tab, Conditions.

- Set the Measure to Is Latest Version, the Operator to Equal to, and the Value to 0. This way, if the app is not the latest version (0), it will trigger the alert.

- Confirm that the Conditions tab resembles the following:

- Navigate to the Distribution tab and confirm that the alert is evaluated When data is refreshed.

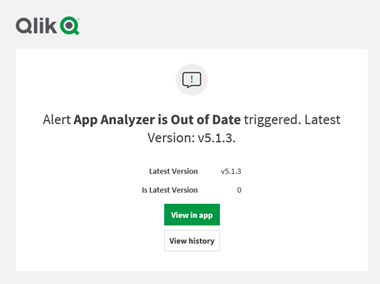

Here is an example of an alert received for the App Analyzer, showing that at this point in time, the latest version of the application is 5.1.3 and that the app is out of date:

A Qlik Cloud Monitoring Apps Workflow Demo (Video)

FAQ

Q: Can I re-run the installer to check if any of the monitoring applications are able to be upgraded to a later version?

A: Yes. Run the installer, select which applications should be checked and select the space that they reside in. If any of the selected applications are not installed or are upgradeable, a prompt will appear to continue to install/upgrade for the relevant applications.

Q: What if multiple people install monitoring applications in different spaces?

A: The template scopes the applications install process to a “target” space, i.e., a shared space (if not published) or a managed space. It will scope the API key name to `QCMA – {spaceId}` of that target space. This allows the template to install/update the monitoring applications across spaces and across users. If one user installs an application to “Space A” and then another user installs a different monitoring application to “Space A”, the template will see that a data connection and associated API key (in this case from another user) exists for that space already and it will install the application leveraging those pre-existing assets.

Q: What if a new monitoring application is released? Will the template provide the ability to install that application as well?

A: Yes. The template receives the list of applications dynamically from GitHub. If a new monitoring application is released, it will become available immediately through the template.

Q: I would like to be notified whenever a new version of a monitoring application is released. Can this template do that?

A: As per the article above, the automation templates are not responsible for notifications of whether the applications are out of date. This is achieved using Qlik Alerting on a per-application basis as described in Part 3.

Q: I have updated my application, but I noticed that it did not preserve the history. Why is that?

A: The history is preserved in the prior versions of the application’s QVDs, so the data is never deleted and can be loaded into the older version. Each upgrade will generate a new set of QVDs as the data models for the applications sometimes change due to bug fixes, updates, new features, etc. If you want to preserve the history when updating, the application can be upgraded with the “Publish side-by-side” method so that the older version of the application will remain as an archival application. However, note that the Qlik Alert (from Part 3) will need to be recreated and any community content that was created on the older application will not be transferred to the new application.

-

Qlik Sense Engine - How the memory hard max limit works

The Qlik Sense Engine allows for a hard max limit to be set on memory consumption.

Environment:

Qlik Sense Enterprise on Windows, all versions

Resolution:

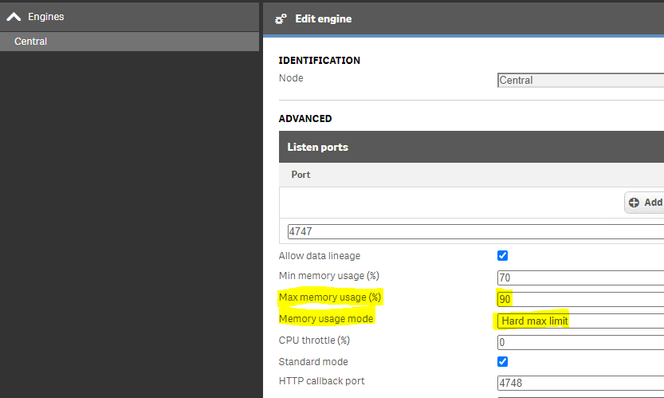

The setting is located in the Qlik Sense Management Console > Engine > Advanced and can be configured as an option in the setting Memory usage mode.

See Editing an engine - Qlik Sense for administrators for details on Engine settings.

- In the drop-down list, you can choose Hard Max Limit, which prevents the Engine from using more than a defined limit.

- This limit is defined by the Max memory usage (%) parameter, which is set as a percentage.

Even with the hard limit set, it may still be possible for the host operating system to report memory spikes above the Max memory usage (%).

This is because the Qlik Sense Engine memory limit is calculated from the total memory of the server, not from the memory that is free at that moment.

Example:

- A server has 10 GB of memory in total and the Max memory usage (%) is set to 90%.

- This allows the engine.exe process to use 9 GB of memory.

- Another application or process may at that point already be consuming 5 GB of memory, which will overload the system if the engine goes on to use its full 9 GB.

- The engine.exe process does not take the memory needs of other services into account.
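The arithmetic in the example above can be written out as a short sketch. The function names are illustrative only. The calculation assumes, as the example does, that the limit is a simple percentage of total memory.

```python
def engine_memory_limit_gb(total_gb: float, max_usage_pct: float) -> float:
    """Memory the engine may use: a percentage of TOTAL memory, not of free memory."""
    return total_gb * max_usage_pct / 100.0


def overcommit_gb(total_gb: float, max_usage_pct: float, other_processes_gb: float) -> float:
    """Demand beyond physical memory if the engine reaches its limit."""
    demand = engine_memory_limit_gb(total_gb, max_usage_pct) + other_processes_gb
    return max(0.0, demand - total_gb)


# Values from the example: 10 GB server, 90% limit, 5 GB used by other processes.
print(engine_memory_limit_gb(10, 90))   # engine may use 9.0 GB
print(overcommit_gb(10, 90, 5))         # 4.0 GB more than the server has
```

With these numbers, the engine stays within its own limit and the server as a whole is still asked for 4 GB more than it has.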

The memory working set limit is not a hard limit on the engine. It determines how much memory the engine allocates and how far it may go before it starts raising alarms about the working set exceeding its parameters.

Internal Investigation IDs:

- QLIK-96872

-

Qlik Talend Data Integration: How to use the Bulk Copy API for batch insert oper...

To take advantage of the performance boost that Batch Size provides on SQL Server, make sure you are using Microsoft JDBC Driver for SQL Server version 9.2 or later.

Microsoft JDBC Driver for SQL Server version 9.2 and above supports using the Bulk Copy API for batch insert operations. This feature allows users to enable the driver to do Bulk Copy operations underneath when executing batch insert operations. The driver aims to achieve improvement in performance while inserting the same data as the driver would have with regular batch insert operation. The driver parses the user's SQL Query, using the Bulk Copy API instead of the usual batch insert operation. Below are various ways to enable the Bulk Copy API for batch insert feature and lists its limitations. This page also contains a small sample code that demonstrates a usage and the performance increase as well.

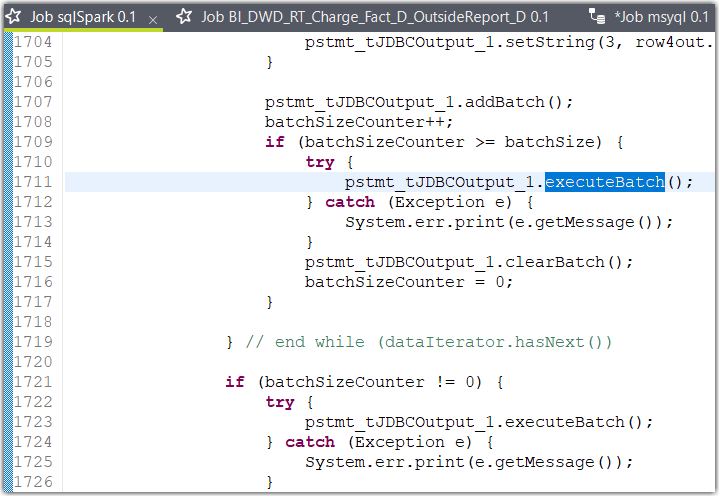
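One way to enable the feature is the driver's `useBulkCopyForBatchInsert` connection property, which can be set in the JDBC URL. The sketch below only shows how such a URL could be assembled. The helper name, host, and database name are placeholders, and where the URL is entered in a given Talend component is not covered here.

```python
def with_bulk_copy(jdbc_url: str) -> str:
    """Append useBulkCopyForBatchInsert=true unless the property is already set."""
    if "usebulkcopyforbatchinsert=" in jdbc_url.lower():
        return jdbc_url  # leave an explicit setting untouched
    separator = "" if jdbc_url.endswith(";") else ";"
    return f"{jdbc_url}{separator}useBulkCopyForBatchInsert=true;"


# Hypothetical server and database names.
print(with_bulk_copy("jdbc:sqlserver://dbhost:1433;databaseName=sales"))
```

The property only has an effect together with batch execution, so batching still needs to be enabled in the component.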

This feature is only applicable to the executeBatch() and executeLargeBatch() APIs of PreparedStatement and CallableStatement. The "Use Batch" option of tJDBCOutput takes advantage of executeBatch().

Related Content

Using bulk copy API for batch insert operation

Environment

-

QMC Reload Failure Despite Successful Script in Qlik Sense Nov 2023 and above

Reload fails in QMC even though the script part is successful in Qlik Sense Enterprise on Windows November 2023 and above.

When you are using NetApp-based storage, you might see an error when trying to publish and replace or reload a published app. In the QMC, you will see that the script load itself finished successfully, but the task failed after that.

ERROR QlikServer1 System.Engine.Engine 228 43384f67-ce24-47b1-8d12-810fca589657

Domain\serviceuser QF: CopyRename exception:

Rename from \\fileserver\share\Apps\e8d5b2d8-cf7d-4406-903e-a249528b160c.new

to \\fileserver\share\Apps\ae763791-8131-4118-b8df-35650f29e6f6

failed: RenameFile failed in CopyRenameExtendedException: Type '9010' thrown in file

'C:\Jws\engine-common-ws\src\ServerPlugin\Plugins\PluginApiSupport\PluginHelpers.cpp'

in function 'ServerPlugin::PluginHelpers::ConvertAndThrow'

on line '149'. Message: 'Unknown error' and additional debug info:

'Could not replace collection

[\\fileserver\share\Apps\8fa5536b-f45f-4262-842a-884936cf119c] with

[\\fileserver\share\Apps\Transactions\Qlikserver1\829A26D1-49D2-413B-AFB1-739261AA1A5E],

(genericException)'

<<< {"jsonrpc":"2.0","id":1578431,"error":{"code":9010,"parameter":

"Object move failed.","message":"Unknown error"}}

ERROR Qlikserver1 06c3ab76-226a-4e25-990f-6655a965c8f3

20240218T040613.891-0500 12.1581.19.0

Command=Doc::DoSave;Result=9010;ResultText=Error: Unknown error

0 0 298317 INTERNAL sa_scheduler b3712cae-ff20-4443-b15b-c3e4d33ec7b4

9c1f1450-3341-4deb-bc9b-92bf9b6861cf Taskname Engine Not available

Doc::DoSave Doc::DoSave 9010 Object move failed.

06c3ab76-226a-4e25-990f-6655a965c8f3

Resolution

Potential workarounds

- Roll back your upgrade to a version earlier than November 2023

- Change the storage to a file share on a Windows server
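Before applying a workaround, it may help to confirm that a failed reload matches the log signature shown earlier. The sketch below parses the semicolon-separated `Command=...;Result=...;ResultText=...` portion of an engine log line and checks for result code 9010. The function names are illustrative, and other failures may report the same code, so a match is a hint rather than proof.

```python
def parse_engine_result(line: str) -> dict:
    """Split 'Key=Value;Key=Value' into a dictionary, ignoring parts without '='."""
    fields = {}
    for part in line.strip().split(";"):
        key, separator, value = part.partition("=")
        if separator:
            fields[key.strip()] = value.strip()
    return fields


def is_save_failure_9010(line: str) -> bool:
    """True if the line reports Doc::DoSave ending with result code 9010."""
    fields = parse_engine_result(line)
    return fields.get("Command") == "Doc::DoSave" and fields.get("Result") == "9010"


line = "Command=Doc::DoSave;Result=9010;ResultText=Error: Unknown error"
print(parse_engine_result(line))
print(is_save_failure_9010(line))
```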

Cause

The most plausible cause currently is that the specific engine version has issues releasing File Lock operations. We are actively investigating the root cause, but there is no fix available yet.

An update will be provided as soon as there is more information to share.

Internal Investigation ID(s)

QB-25096

QB-26125

Environment

- Qlik Sense Enterprise on Windows November 2023 and above

-

Tool to troubleshoot Talend Studio connection to Talend Management Console

The TestConnectivity.jar command line tool assists in troubleshooting Studio connections to the Talend Management Console (TMC). It provides output of connection information from simple to detailed through debug logging.

Content:

Example Usage

java <Optional parameter(s)> -jar TestConnectivity.jar <TMC location or URL> <Optional URL>

Example: java -Djava.security.debug=certpath -Djavax.net.debug=all -jar TestConnectivity.jar US |& tee -a testconnectivity.out
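The usage pattern above (optional JVM parameters, then `-jar TestConnectivity.jar`, then the tool arguments) can be assembled programmatically when the test needs to be repeated with different settings. This is a minimal sketch; `build_command` is a helper invented here, and it only builds the argument list without running anything.

```python
import shlex


def build_command(tool_args, jvm_options=(), jar="TestConnectivity.jar"):
    """Return the argument list: java <JVM options> -jar <jar> <tool arguments>."""
    return ["java", *jvm_options, "-jar", jar, *tool_args]


command = build_command(
    ["US"],
    jvm_options=["-Djava.security.debug=certpath", "-Djavax.net.debug=all"],
)
# Print a shell-safe rendering of the command for review before running it.
print(shlex.join(command))
```

The resulting list can be passed to `subprocess.run(command)` once Java and the jar are available in the working directory.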

Sample Commands

java -jar TestConnectivity.jar HELP

java -jar TestConnectivity.jar DEBUG

java -jar TestConnectivity.jar US

java -jar TestConnectivity.jar EU

java -jar TestConnectivity.jar AP

java -jar TestConnectivity.jar URL http://mywebserver.com/

java -jar TestConnectivity.jar DEBUG URL http://mywebserver.com/ ftp://speedtest.tele2.net/

Java Debug Settings

-Djava.security.debug=certpath

-Djavax.net.debug=ssl

-Djavax.net.debug=all (Use this for default truststore information)

Additional Parameters

-Dhttps.proxySet=true

-Dhttps.proxyHost=MYPROXYHOST

-Dhttps.proxyPort=9090

-Dhttps.proxyUser=ME

-Dhttps.proxyPassword=MYPASSWORD

-Dhttps.nonProxyHosts=127.0.0.1|localhost|*.MYCOMPANY.com

-Djavax.net.ssl.trustStoreType=JKS

-Djavax.net.ssl.trustStore="C:/certs/cacerts"

-Djavax.net.ssl.trustStorePassword="changeit"

-Djavax.net.ssl.keyStoreType=JKS

-Djavax.net.ssl.keyStore="C:/certs/cacerts"

-Djavax.net.ssl.keyStorePassword="changeit"

Environment

- Talend Studio 7.3

- Talend Studio 8.0.1

-

Talend Data Integration: Unable to build Talend jobs using Azure DevOps

Azure DevOps pipelines fail with the following error when building Talend Jobs.

[ERROR] Failed to execute goal on project job_AladdinToBusiness_000020_profitAndLossFileChild: Could not resolve dependencies for project org.example.tcorp_talend_ims.job:job_AladdinToBusiness_000020_profitAndLossFileChild:jar:0.2.0: Failed to collect dependencies at org.talend.components.lib:talend-proxy:jar:1.0.0:

Cause

Maven has blocked external HTTP repositories by default since version 3.8.1. For more details, see Release Notes – Maven 3.8.1.

Resolution

Open the Maven settings file settings.xml located in ${maven.home}/conf/ or ${user.home}/.m2/, add a dummy mirror with the id maven-default-http-blocker to override the existing one. This disables HTTP blocking for all repositories.

<settings>
  <mirrors>
    <mirror>
      <id>maven-default-http-blocker</id>
      <mirrorOf>dummy</mirrorOf>
      <name>Dummy mirror to override default blocking mirror that blocks http</name>
      <url>http://0.0.0.0/</url>
    </mirror>
  </mirrors>
</settings>

Related Content

Disable maven blocking external HTTP repositories

Environment

-

Add data files preview is not using the whole model to preview data in Microsoft...

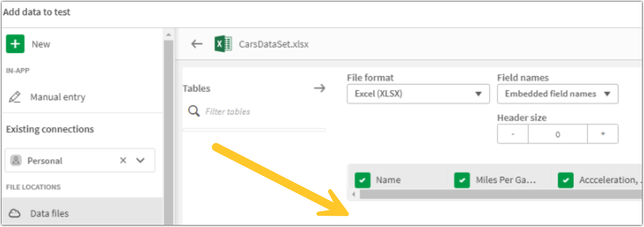

When attempting to add data files, the preview does not utilize the entire modal to display data in Microsoft Edge or Chrome browsers (latest releases) on both Qlik Sense Enterprise on Windows and Qlik Cloud.

When selecting a source data file, the preview of the data is limited to showing only one row, and the whole modal space is not being utilized.

Qlik Sense Enterprise preview:

Qlik Cloud preview:

Environment

Affected versions are Qlik Sense Enterprise on Windows:

- May 2023

- August 2023

- November 2023

- February 2024

Resolution

This behavior is caused by defect QB-25217.

Fix Version:

Fixed in Qlik Cloud Services as of the 28th of February 2024.

Fixed for Qlik Sense Enterprise on Windows on the following versions:

- May 2023 Patch 15

- August 2023 Patch 13

- November 2023 Patch 7

- February 2024 Patch 2

Workaround

Use non-Chromium-based browsers such as Firefox or Safari.

Internal Investigation ID(s)

QB-25217

Information provided on this defect is given as is at the time of documenting. For up-to-date information, please review the most recent Release Notes, or contact support with the QB-25217 for reference.

Related Content

No scroll bar in the data files preview in Microsoft Edge or Chrome Browsers

Table is not rendered properly in Chrome/Edge while selecting data from the Data Source

-

Qlik Replicate and SAP ODP Endpoint: row counts do not match between Qlik Replic...

When comparing the number of rows between the backend SAP ODP environment and the Qlik Replicate Full Load task, Qlik Replicate does not show the correct value.

Example:

Qlik Replicate shows 357,462 rows loaded, while the SAP ODP (ODQMON) job log shows 1.5 million rows.

Resolution

Qlik is investigating this as RECOB-8294. No workaround is currently available.

Information provided on this defect is given as is at the time of documenting. For up-to-date information, please review the most recent Release Notes, or contact support with the RECOB-8294 for reference.

Cause

Product Defect ID: RECOB-8294

Environment

-

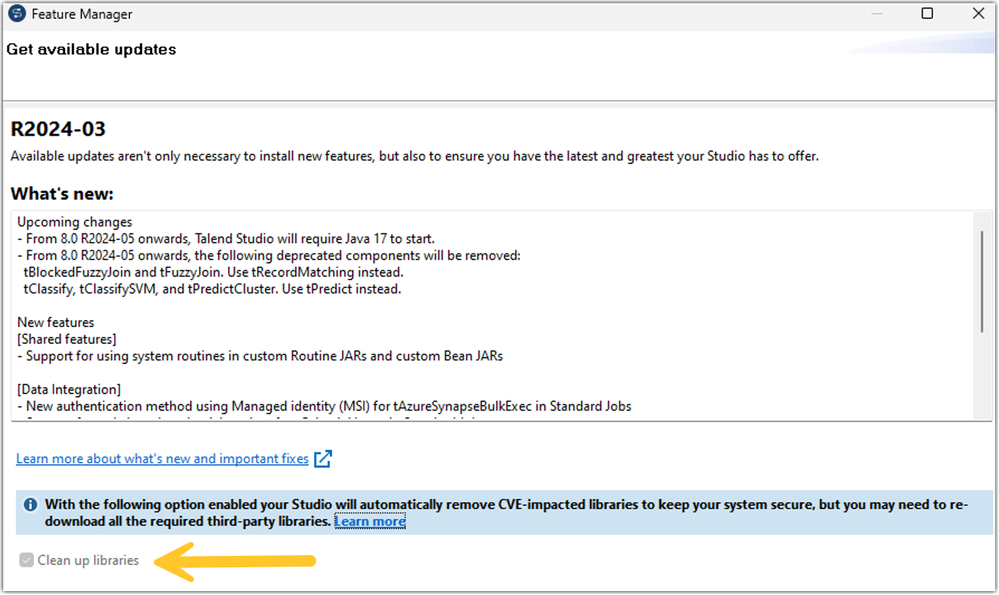

Qlik Talend Studio: "Clean up Libraries" check box greyed out when patching fro...

When patching Studio from the Feature manager, the Clean up libraries option is greyed out:

Resolution

- Navigate to <Talend Studio Home>\studio\

- Backup and edit Talend-Studio-win-x86_64.ini (Win OS) or Talend-Studio-macosx-cocoa.ini (Mac OS)

- Remove the following parameter: -Dtalend.studio.m2.clean=true

- Save and restart Studio
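The backup-and-edit steps above can be scripted when several installations need the same change. The sketch below assumes the parameter sits on its own line in the .ini file, as is usual for Eclipse-style launcher files. The function name and the `.bak` backup suffix are choices made here, not part of the documented procedure.

```python
import shutil
from pathlib import Path

PARAMETER = "-Dtalend.studio.m2.clean=true"


def remove_clean_flag(ini_path: Path) -> bool:
    """Back up the .ini file and drop the parameter line. Return True if changed."""
    lines = ini_path.read_text(encoding="utf-8").splitlines(keepends=True)
    kept = [line for line in lines if line.strip() != PARAMETER]
    if len(kept) == len(lines):
        return False  # parameter not present, nothing to do
    # Keep a copy next to the original before modifying it.
    shutil.copy2(ini_path, ini_path.with_name(ini_path.name + ".bak"))
    ini_path.write_text("".join(kept), encoding="utf-8")
    return True
```

A call such as `remove_clean_flag(Path(r"<Talend Studio Home>\studio\Talend-Studio-win-x86_64.ini"))`, with the real installation path substituted, would be followed by restarting Studio.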

Cause

The -Dtalend.studio.m2.clean=true property ensures Studio removes obsolete jars during patching.

Related Content

For more information, please review the Studio installation guide.

Environment

Talend Studio 8.0.1

-

Qlik Enterprise Manager fails to reconnect with Qlik Replicate server: SYS-E-SES...

After upgrading Qlik Enterprise Manager to 2022.11, increased connection errors to the Qlik Replicate server can be observed, with the connection terminating approximately every 30 minutes.

The following error is logged:

3 2023-12-19 06:09:06 [ServerDto ] [ERROR] Failed to get monitoring data for server: 172.29.73.29. Message:'SYS-E-SESS_LOST, The client is not connected to the Server. Please connect and then try again.'''.

Resolution

This is a known defect. The fix will be included in the next possible Qlik Enterprise Manager release (tentatively 2023.11 SP03).

Fix Description: Added auto-reconnect flag where it was missing to allow QEM to better manage the connection drops and attempt to re-establish the EM<-->QR communication, especially in high-load situations.

Workaround:

The following are test patches that address this issue. Please contact support to obtain the test patch.

- QEM: QlikEnterpriseManager_2022.11.0.919_X64

- QEM: QlikEnterpriseManager_2023.5.0.689_X64

- QEM: QlikEnterpriseManager_2023.11.0.368_X64

Environment

Qlik Enterprise Manager 2022.11.0.634

-

Qlik Replicate SAP ODP Log Stream Source error SDK endpoint encountered a recove...

A Qlik Replicate Task reading from Log Stream SAP ODP Source endpoint task fails with:

Failed during unload

SDK endpoint encountered a recoverable error [1024719]

Resolution

This error is currently under investigation as QB-26198. However, no resolution is available, as the SAP ODP Source Endpoint does not support the Log Stream Staging Task setup.

Information provided on this defect is given as is at the time of documenting. For up-to-date information, please review the most recent Release Notes, or contact support with the QB-26198 for reference.

Cause

Product Defect ID: QB-26198

Environment

-

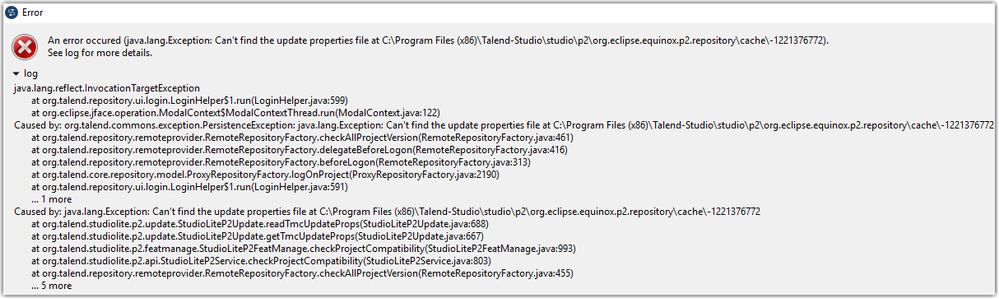

Qlik Talend Cloud: Cannot find update properties file

The user is unable to open the remote project. A pop-up error is displayed, reading:

An error occurred (java.lang.Exception: Can't find the update properties file at: C:\Program Files (x86)\Talend-Studio\studio\p2\org.eclipse.equinox.p2.repository\cache\-xxxx)

Resolution

- Run studio in debug mode:

Talend-Studio-win-x86_64.exe --talendDebug > debugLog.txt

- Review the resulting debugLog.txt for the root cause of the error, such as:

!SUBENTRY 1 org.eclipse.equinox.p2.transport.ecf 4 1002 2023-12-27 11:20:24.857

!MESSAGE HTTP Proxy Authentication Required: https://update.talend.com/Studio/8/base/content.xml

!STACK 1

org.eclipse.ecf.filetransfer.BrowseFileTransferException: Proxy Authentication Required

In this example, the proxy password had been changed but was not updated at the same time in the proxy settings of Talend Studio. The issue could be fixed by manually updating the proxy user credentials in studio -> preferences -> proxy setting.
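The log review in the second step can be partly automated. The following is a minimal sketch in Python, assuming the debug output was redirected to debugLog.txt as in the command above. The function name `find_log_messages` and its default keyword are illustrative and are not part of Talend Studio.

```python
from typing import Iterable, List


def find_log_messages(
    lines: Iterable[str],
    keyword: str = "Proxy Authentication Required",
) -> List[str]:
    """Return the text of every '!MESSAGE' log line that contains the keyword."""
    matches = []
    for line in lines:
        stripped = line.strip()
        # In the excerpt above, the human-readable error text sits on '!MESSAGE' lines.
        if stripped.startswith("!MESSAGE") and keyword.lower() in stripped.lower():
            matches.append(stripped[len("!MESSAGE"):].strip())
    return matches


# Sample lines copied from the log excerpt above.
sample = [
    "!SUBENTRY 1 org.eclipse.equinox.p2.transport.ecf 4 1002 2023-12-27 11:20:24.857",
    "!MESSAGE HTTP Proxy Authentication Required: https://update.talend.com/Studio/8/base/content.xml",
    "!STACK 1",
    "org.eclipse.ecf.filetransfer.BrowseFileTransferException: Proxy Authentication Required",
]
print(find_log_messages(sample))
```

To scan a real log, pass an open file instead of the sample list, for example `find_log_messages(open("debugLog.txt", encoding="utf-8", errors="replace"))`. Other keywords can be supplied when the root cause is not proxy related.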

Environment

-

Qlik Sense Utility - Functions and Features

The QlikSenseUtil comes bundled with Qlik Sense Enterprise on Windows. Its executable is by default stored in:

%Program Files%\Qlik\Sense\Repository\Util\QlikSenseUtil\QlikSenseUtil.exe

Qlik Sense Util is no longer supported as a backup and restore tool. For the officially supported backup and restore process, see Backup and Restore.

It serves as a:

- Qlik Sense Port Checker: Pings every active port Qlik Sense is using for every node. Note that some ports can be for internal use and might not be exposed to the node you are pinging from.

- Data structure checker: Mostly applicable for a synchronized persistence deployment. Checks that the data references are not broken.

- Duplicate server nodes: Mostly applicable for a synchronized persistence deployment. Checks there are no duplicate server nodes in the database.

- Remove old app binaries: Remove deleted app binaries from the selected folder.

- Get system configuration: Returns the system configuration from the database.

- Connection string editor: A tool for editing the connection string.

- Service Cluster: Functionality for updating the service cluster in the database.

- Log Fetcher: A tool for fetching logs from the system. If the time span is shorter than 24h, all logs are summarized in a single file. This makes troubleshooting easier if you are looking for events that occurred within a short time frame.

- Fetching logs with a time span larger than 24h will copy the logs and paste them in their logical structure. The filter functionality selects every row with the specified keyword and creates log files based on rows selected.

Note: Qlik Support prefers using the Log Collector described in How To Collect Qlik Sense Log Files.

- Service type checker: Mostly applicable for a synchronized persistence deployment. Checks for duplicated service types in the database.

-

Qlik Sense PostgreSQL connector cut off strings containing more than 255 characte...

The native Qlik PostgreSQL connector (ODBC package) does not import strings with more than 255 characters. Such strings are cut off, and the characters beyond the limit are not shown in the applications. No warnings are thrown during script execution.

The problem affects the Qlik connector but not all DSN drivers.

Environment

Qlik Sense February 2023 and higher versions

Qlik Cloud

Resolution

When setting up the PostgreSQL connection, set the parameter TextAsLongVarchar with value 1 in the connector Advanced settings.

When TextAsLongVarchar is set and the Max String Length is set to 4096, up to 4096 characters are loaded.

Note that this functionality still has limitations related to the data type used in the database. Data types such as text[] are currently not supported by Simba and remain subject to the 255-character limit even when the TextAsLongVarchar parameter is applied.

Qlik has opened an improvement request to Simba to support them.

As a workaround, it is possible to test a custom connector using a DSN driver to handle these data types.
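Because no warning is raised during script execution, truncation can go unnoticed. One heuristic is to look for loaded values whose length is exactly the limit. The following Python sketch assumes the values have already been exported from the application as a list of strings. `suspect_truncated` is a hypothetical helper, not a Qlik function, and a genuine value that happens to be exactly 255 characters long would also be flagged.

```python
from typing import Iterable, List, Optional


def suspect_truncated(values: Iterable[Optional[str]], limit: int = 255) -> List[int]:
    """Return the positions of values whose length equals the limit exactly."""
    # None stands for a missing (NULL) value and is skipped.
    return [i for i, v in enumerate(values) if v is not None and len(v) == limit]


# Illustrative data only: one short value, one at the limit, one missing, one longer.
rows = ["short", "x" * 255, None, "y" * 300]
print(suspect_truncated(rows))
```

Flagged rows can then be compared against the source database to confirm whether the original value was longer.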

Internal Investigation ID

QB-21497

-

Qlik Replicate DB2 Z/OS Source Endpoint Date column not loading to Target

Loading a table with date columns from a DB2 Z/OS Source Endpoint results in the date values arriving blank. The source data has valid dates, but they are not loaded to the target in the Qlik Replicate Task.

Resolution

The DB2 Z/OS Source Endpoint requires version 11.5.6 or 11.5.8 of the ODBC driver to be installed on the Qlik Replicate Server.

Cause

ODBC driver 11.5.9 or another version is installed.
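The version requirement stated in the Resolution can be expressed as a simple check. This is a sketch in Python, assuming the installed driver version string has already been obtained by other means. `SUPPORTED_VERSIONS` and `is_supported_driver` are illustrative names, and the comparison looks only at the first three version components.

```python
# Versions named in the Resolution of this article.
SUPPORTED_VERSIONS = {(11, 5, 6), (11, 5, 8)}


def is_supported_driver(version: str) -> bool:
    """Return True if the version string starts with a supported major.minor.patch."""
    parts = version.strip().split(".")
    try:
        key = tuple(int(p) for p in parts[:3])
    except ValueError:
        # Non-numeric components cannot be matched against the supported list.
        return False
    return key in SUPPORTED_VERSIONS


for candidate in ("11.5.6", "11.5.8.0", "11.5.9"):
    print(candidate, is_supported_driver(candidate))
```

Consult the z/OS Prerequisites page in the Qlik Replicate Help for the authoritative list before relying on a hard-coded set like this one.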

Related Content

z/OS Prerequisites | Qlik Replicate Help

Environment

Qlik Replicate 2023.5