

Featured Content

-

How to contact Qlik Support

Qlik offers a wide range of channels to assist you in troubleshooting, answering frequently asked questions, and getting in touch with our technical experts. In this article, we guide you through all available avenues to secure your best possible experience.

For details on our terms and conditions, review the Qlik Support Policy.

Index:

- Support and Professional Services; who to contact when.

- Qlik Support: How to access the support you need

- 1. Qlik Community, Forums & Knowledge Base

- The Knowledge Base

- Blogs

- Our Support programs:

- The Qlik Forums

- Ideation

- How to create a Qlik ID

- 2. Chat

- 3. Qlik Support Case Portal

- Escalate a Support Case

- Resources

Support and Professional Services; who to contact when.

We're happy to help! Here's a breakdown of resources for each type of need.

Support

Reactively fixes technical issues as well as answers narrowly defined specific questions. Handles administrative issues to keep the product up-to-date and functioning.

- Error messages

- Task crashes

- Latency issues (due to errors or 1-1 mode)

- Performance degradation without config changes

- Specific questions

- Licensing requests

- Bug Report / Hotfixes

- Not functioning as designed or documented

- Software regression

Professional Services (*)

Proactively accelerates projects, reduces risk, and achieves optimal configurations. Delivers expert help for training, planning, implementation, and performance improvement.

- Deployment Implementation

- Setting up new endpoints

- Performance Tuning

- Architecture design or optimization

- Automation

- Customization

- Environment Migration

- Health Check

- New functionality walkthrough

- Realtime upgrade assistance

(*) reach out to your Account Manager or Customer Success Manager

Qlik Support: How to access the support you need

1. Qlik Community, Forums & Knowledge Base

Your first line of support: https://community.qlik.com/

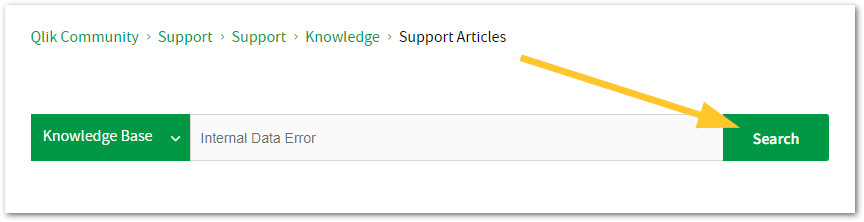

Looking for content? Type your question into our global search bar:

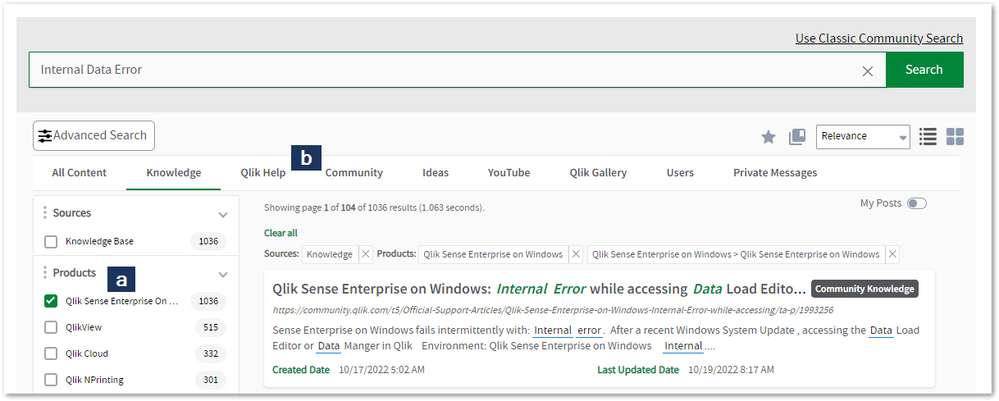

The Knowledge Base

Leverage the enhanced and continuously updated Knowledge Base to find solutions to your questions and best practice guides. Bookmark this page for quick access!

- Go to the Official Support Articles Knowledge base

- Type your question into our Search Engine

- Need more filters?

- Filter by Product

- Or switch tabs to browse content in the global community, on our Help Site, or even on our YouTube channel

Blogs

Subscribe to maximize your Qlik experience!

The Support Updates Blog

The Support Updates blog delivers important and useful Qlik Support information about end-of-product support, new service releases, and general support topics.

The Qlik Design Blog

The Design blog is all about product and Qlik solutions, such as scripting, data modelling, visual design, extensions, best practices, and more!

The Product Innovation Blog

By reading the Product Innovation blog, you will learn about what's new across all of the products in our growing Qlik product portfolio.

Our Support programs:

Q&A with Qlik

Live sessions with Qlik Experts in which we focus on your questions.

Techspert Talks

Techspert Talks is a free monthly webinar that facilitates knowledge sharing.

Technical Adoption Workshops

Our in-depth, hands-on workshops allow new Qlik Cloud Admins to build alongside Qlik Experts.

Qlik Fix

Qlik Fix is a series of short videos with helpful solutions for Qlik customers and partners.

The Qlik Forums

- Quick, convenient, 24/7 availability

- Monitored by Qlik Experts

- New releases publicly announced within Qlik Community forums

- Local language groups available

Ideation

Suggest an idea, and influence the next generation of Qlik features!

Search & Submit Ideas

Ideation Guidelines

How to create a Qlik ID

Get the full value of the community.

Register a Qlik ID:

- Go to: qlikid.qlik.com/register

- You must enter your company name exactly as it appears on your license or there will be significant delays in getting access.

- You will receive a system-generated email with an activation link for your new account. Note: this link expires after 24 hours.

If you need additional details, see: Additional guidance on registering for a Qlik account

If you encounter problems with your Qlik ID, contact us through Live Chat!

2. Chat

Incidents are supported through our Chat, by clicking Chat Now on any Support Page across Qlik Community.

To raise a new issue, all you need to do is chat with us. With this, we can:

- Answer common questions instantly through our chatbot

- Have a live agent troubleshoot in real time

- For items that need further investigation, we will create a case on your behalf using step-by-step intake questions.

3. Qlik Support Case Portal

Log in to manage and track your active cases in Manage Cases.

Please note: to create a new case, it is easiest to do so via our chat (see above). Our chat will log your case through a series of guided intake questions.

Your advantages:

- Self-service access to all incidents so that you can track progress

- Option to upload documentation and troubleshooting files

- Option to include additional stakeholders and watchers to view active cases

- Follow-up conversations

When creating a case, you will be prompted to enter problem type and issue level. Definitions shared below:

Problem Type

Select Account Related for issues with your account, licenses, downloads, or payment.

Select Product Related for technical issues with Qlik products and platforms.

Priority

If your issue is account related, you will be asked to select a Priority level:

Select Medium/Low if the system is accessible, but there are some functional limitations that are not critical in the daily operation.

Select High if there are significant impacts on normal work or performance.

Select Urgent if there are major impacts on business-critical work or performance.

Severity

If your issue is product related, you will be asked to select a Severity level:

Severity 1: Qlik production software is down or not available, but not because of scheduled maintenance and/or upgrades.

Severity 2: Major functionality is not working in accordance with the technical specifications in documentation or significant performance degradation is experienced so that critical business operations cannot be performed.

Severity 3: Any error that is not a Severity 1 or Severity 2 issue. For more information, visit our Qlik Support Policy.

Escalate a Support Case

If you require a support case escalation, you have two options:

- Request to escalate within the case, mentioning the business reasons.

To escalate a support incident successfully, mention your intention to escalate in the open support case. This will begin the escalation process.

- Contact your Regional Support Manager

If more attention is required, contact your regional support manager. You can find a full list of regional support managers in the How to escalate a support case article.

Resources

A collection of useful links.

Qlik Cloud Status Page

Keep up to date with Qlik Cloud's status.

Support Policy

Review our Service Level Agreements and License Agreements.

Live Chat and Case Portal

Your one stop to contact us.

Recent Documents

-

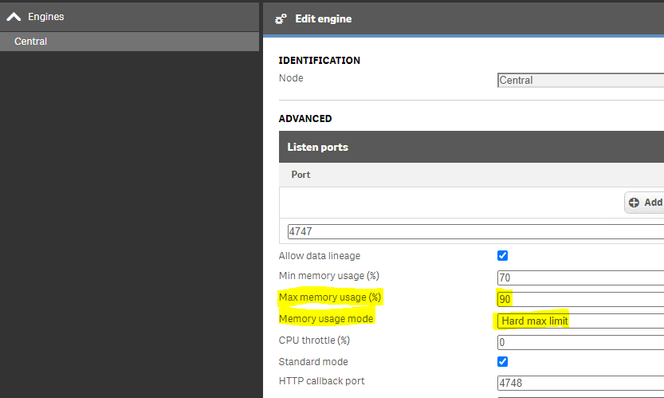

Qlik Sense Engine Enterprise on Windows: How the memory hard max limit works

The Qlik Sense Engine allows a hard max limit to be set on memory consumption. If this is also enabled at the Operating System level, the engine will make a best effort to remain below the set limit.

The setting is located in the Qlik Sense Management Console > Engine > Advanced and can be configured as an option in the setting Memory usage mode.

See Editing an engine - Qlik Sense for administrators for details on Engine settings.

- In the drop-down list, you can choose Hard Max Limit, which prevents the Engine from using more than a defined limit.

- This limit is defined as a percentage by the parameter Max memory usage (%).

This setting requires that the Operating System is configured to support this, as described in the SetProcessWorkingSetSizeEx documentation (QUOTA_LIMITS_HARDWS_MAX_ENABLE parameter).

Even with the hard limit set, it may still be possible for the host operating system to report memory spikes above the Max memory usage (%).

This is because the Qlik Sense Engine memory limit is calculated from the total memory of the server, not from the memory that is still free.

Example:

- A server has 10 GB memory in total and the Max memory usage (%) is set to 90%.

- This will allow the process engine.exe to use 9 GB memory.

- Another application or process may at that point already be consuming 5 GB memory, and this will cause an overload of the system if the engine is set to use 9 GB.

- engine.exe does not take the memory used by other services into account.
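The arithmetic behind this example can be checked with a short Python sketch. The function name `engine_memory_budget` and its parameters are illustrative only and are not part of Qlik Sense. The point it makes is that the percentage is applied to total RAM, so memory already held by other processes is not subtracted from the engine's allowance.

```python
def engine_memory_budget(total_gb: float, max_usage_pct: float,
                         other_processes_gb: float) -> tuple[float, float, float]:
    """Illustrative only: compare the engine limit with the RAM left for it.

    Returns (engine_limit_gb, free_for_engine_gb, shortfall_gb).
    """
    # Max memory usage (%) is applied to the server's total memory ...
    engine_limit_gb = total_gb * max_usage_pct / 100
    # ... but other processes may already hold part of that memory.
    free_for_engine_gb = total_gb - other_processes_gb
    # How far the engine's allowance exceeds what is actually free.
    shortfall_gb = max(0.0, engine_limit_gb - free_for_engine_gb)
    return engine_limit_gb, free_for_engine_gb, shortfall_gb


limit, free, shortfall = engine_memory_budget(10, 90, 5)
print(f"engine may use {limit} GB, {free} GB free, over by {shortfall} GB")
```

With the example's figures, the engine is allowed 9 GB while only 5 GB is still free, so the combined demand exceeds physical memory by 4 GB, which is the overload described above.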

The memory working set limit is not an absolute hard limit on the engine. It determines how much memory is allocated and how far usage is allowed to go before the engine starts raising alarms that the working set is beyond its parameters.

Internal Investigation IDs:

QLIK-96872

-

Handling Duplicate Rows when doing a Full Load in Replicate

This is a problem which, at first impression, should not (and, you would think, logically cannot) happen. It is therefore important to understand why it does, and what can be done to resolve it when it does.

The situation is that Replicate is doing a Full Load for a table (individually or as part of a task full loading many tables). The source and target tables have identical unique primary keys. There are no uppercasing or other character set issues relating to any of the columns that make up the key, which can sometimes cause duplication problems. Yet as the Full Load for the table progresses, probably nearing the end, you get a message indicating that Replicate has failed to insert a row into the target because of a duplicate. That is, there is already a row in the target table with the unique key of the row it is trying to insert. The Full Load for that table is terminated (often after several hours), and if you try again, the same error, perhaps for a different row, will often occur.

Logically this shouldn’t happen, but it does. The likelihood of it doing so depends on the source DBMS type, the type of columns in the source table, and you will find it is always for a table that is being updated (SQL UPDATEs) as Replicate copies it. The higher the update rate and the bigger the table, the more likely it is to happen.

Note: This article discusses problems related to duplicates in TARGET_LOAD, not in TARGET_APPLY; that is, duplicates that occur during Full Load, before the cached changes are applied.

Analysis:

To understand the fix we first need to understand why the problem occurs, and this involves understanding some of the internal workings of most conventional Relational Database Management Systems.

RDBMSs tend to employ different terminology for things that exist in all of them. I’m going to use DB2 terminology and explain each term the first time I use it. With a different RDBMS the terminology may differ, but the concepts are generally the same.

The first concept to introduce is the Tablespace. That’s what it’s called in DB2, but it exists for all databases and is the physical area where the rows that make up the table are stored. Logically it can be considered as a single contiguous data area, split up into blocks, numbered in ascending order.

This is where your database puts the row data when you INSERT rows into the table. What’s also important is that it tries to update the existing data for a row in place when you do an UPDATE, but may not always be able to do so. If that is the case then it will move the updated row to another place in the tablespace, usually at what is then the highest used (the endpoint) block in the tablespace area.

The next point concerns how the DBMS decides to access data from the tablespace in resolving your SQL calls. Each RDBMS has an optimiser, or something similar that makes these decisions. The role of indexes with a relational database is somewhat strange. They are not really part of the standard Relational Database model, although in practice they are used to guarantee uniqueness and support referential integrity. Other than for these roles, they exist only to help the optimiser come up with faster ways of retrieving rows that satisfy your SELECT (database read) statements.

When any piece of SQL (we’ll focus on simple SELECT statements here) is presented to the optimiser, it decides on what method to use to search for and retrieve any matching rows from the tablespace. The default method is to search through all the rows directly in the tablespace, looking for rows that match any selection criteria; this is known as a Tablespace Scan.

A Tablespace Scan may be the best way to access rows from a table, particularly if it is likely that many or most of the rows in the table will match the selection criteria. For other SELECTs though that are more specific about what row(s) are required, a suitable matching index may be used (if one exists) to go directly to the row(s) in the tablespace.

The sort of SQL that Replicate generates to execute against the source table when it is doing a Full Load is of the form SELECT * FROM, or SELECT col1, col2, … FROM. Neither of these has any row specific selection criteria, and in fact this is to be expected as a Full Load is in general intended to select all rows from the source table.

As a result the database optimiser is not likely to choose to use an index (even if a unique index on the table exists) to resolve this type of SELECT statement, and instead a Tablespace Scan of the whole tablespace area will take place. This, as you will see later, can be inconvenient to us but is in fact the fastest way of processing all the rows in the table.

When we do a Full Load copy for a table that is ‘live’ (being updated as we copy it), the result we end up with when the SELECT against the source has been completed and we have inserted all the rows into the target is not likely to be consistent with what is then in the source table. The extent of the differences is dependent on the rate of updates and how long the Full Load for that table takes. For high update rates on big tables that take many hours for a Full Load the extent of the differences can be quite considerable.

This all sounds very worrying, but it is not, because the CDC (Change Data Capture) part of Replicate takes care of it. CDC is mainly known for replicating changes from source to target after the initial Full Load has been taken, keeping the target copies up to date and in line with the changing source tables. However, CDC processing has an equally important role to play in the Full Load process itself, especially when this is being done on ‘live’ tables subject to updates as the Full Load is being processed.

In fact, CDC processing doesn’t start when the Full Load finishes, but before the Full Load starts. This is so that it can collect details of changes that occur at the source while the Full Load (and its associated SELECT statement) is taking place. The changes collected during this period are known as the ‘cached changes’, and they are applied to the newly populated target table before switching into normal ongoing CDC mode to capture all subsequent changes.

This takes care of and fixes all of the table row data inconsistencies that are likely to occur during a table Full Load, but there is one particular situation that can occur and catch us out before the Full Load completes and the cached changes can be applied. This results in Replicate trying to insert details for the same row more than once in the target table; triggering the duplicates error that we are talking about here.

Consider this situation:

- We obtain a copy of a row with a particular key as the Tablespace Scan satisfying our SELECT statement passes its location in the source tablespace.

- Subsequently, that row is updated in the source table. After the update it no longer fits into its original place in the tablespace, so the row for that key is moved to an empty block at the current high point of the tablespace.

- As the Tablespace Scan continues, it comes across the new copy of the row for that same key and returns it as part of the result set for the SELECT statement. Now we have two rows for the same key that we are going to try to insert into the target table.

That is how the problem occurs. Variable-length columns and binary object columns in the source table make this movement of a row to a new location in the tablespace, and therefore the duplicate insert problem, much more likely.
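The sequence above can be reproduced with a deliberately simplified Python model. It is a toy: the tablespace is a list of blocks holding one key each (None marks a freed slot), and the "unique index" is a dict from key to block position. No real RDBMS is this simple, and the function names are hypothetical. It shows why a physical-order scan can return a moved row twice, while reading in index order returns each key once, which is the idea behind the resolution that follows.

```python
from typing import Optional


def tablespace_scan(blocks: list[Optional[int]], update_after: int,
                    moved_key: int) -> list[int]:
    """Toy Tablespace Scan: read blocks in physical order.

    After the block at position `update_after` has been read, a concurrent
    UPDATE makes the row for `moved_key` too big for its slot, so it is
    moved to a new block at the current high point of the tablespace.
    """
    keys = []
    pos = 0
    while pos < len(blocks):  # the high point can grow during the scan
        if blocks[pos] is not None:
            keys.append(blocks[pos])
        if pos == update_after:
            blocks[blocks.index(moved_key)] = None  # old slot is freed
            blocks.append(moved_key)                # row rewritten at the end
        pos += 1
    return keys


def index_ordered_read(blocks: list[Optional[int]], update_after: int,
                       moved_key: int) -> list[int]:
    """Toy ORDER BY <unique key>: follow a unique index, one entry per key."""
    index = {key: pos for pos, key in enumerate(blocks) if key is not None}
    keys = []
    for n, key in enumerate(sorted(index)):
        keys.append(blocks[index[key]])
        if n == update_after:
            blocks[index[moved_key]] = None
            blocks.append(moved_key)
            index[moved_key] = len(blocks) - 1  # index entry is repointed
    return keys


print(tablespace_scan([1, 2, 3, 4, 5], update_after=2, moved_key=2))
print(index_ordered_read([1, 2, 3, 4, 5], update_after=2, moved_key=2))
```

In the scan, key 2 is read, moved to the end, and read again; in the index-ordered read the same update happens, but key 2 is never revisited. Whether the copy read is the latest version does not matter, because the cached changes are applied afterwards.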

Resolution:

So how do we fix this, or at least stop it from happening?

The solution is to persuade the optimiser in the source database to use the unique index on the table to access the rows in the table’s tablespace rather than scanning sequentially through it. The index (which is unique) will only provide one row to read for each key as the execution of our SELECT statement progresses. We don’t have to worry about whether it is the ‘latest’ version of the row or not because that will be taken care of later by the application of the cached changes.

The optimiser can (generally) be persuaded to use the unique index on the source table if the SELECT statement requires the rows in the result set to be returned in the order given by that index. This requires a SELECT statement with an ORDER BY clause matching the columns in the unique index, something of the form SELECT * FROM ORDER BY col1, col2, col3, etc., where col1, col2, col3, etc. are the columns that make up the table’s unique primary index.

But how can we do this? Replicate has an undocumented facility that allows the user to configure extra text to be added to the end of the generated SQL for a particular table during Full Load processing, specifically to add a WHERE clause that determines which rows are included and excluded during a Full Load extract.

This is not exactly what we want to do (we want to include all rows), but this ‘FILTER’ facility also provides the option to extend the content of the SELECT statement that is generated after the WHERE part of the statement has been added. So we can use it to add the ORDER BY part of the statement that we require.

Here is the format of the FILTER statement that you need to add.

--FILTER: 1=1) ORDER BY col1, col2, coln --

This is inserted in the ‘Record Selection Condition’ box on the individual table filter screen when configuring the Replicate task. If you want to do this for multiple tables in the Replicate task then you need to set up a FILTER for each table individually.

To explain, the --FILTER: keyword indicates the beginning of filter information that is expected to begin with a WHERE clause (which is generated automatically).

The 1=1) component completes that WHERE clause in a way that selects all rows (you could put in something to limit the rows selected if required, but that’s not what we are trying to achieve here).

It is then possible to add other clauses and parameters before terminating the additional text with the final --.

In this case an ORDER BY clause is added that guarantees that rows are returned in the order specified. This causes the unique index on the table to be used to retrieve rows at the source, assuming that you code col1, col2, etc. to match the columns and their order in the index. If the index has some columns in descending order (rather than ascending), make sure that is coded in the ORDER BY clause as well.
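To avoid typing mistakes when a table's unique index has many columns, the Record Selection Condition can be generated. The Python sketch below is hypothetical tooling, not a Replicate feature, and the table and column names are made up. `build_order_by_filter` produces text in the format shown above, written with plain double hyphens. `preview_select` only approximates the final statement and rests on an assumption inferred from the 1=1) component: that Replicate wraps the condition as WHERE (<condition>). Under that assumption, the trailing -- turns the closing parenthesis Replicate appends into an SQL comment. Check the real generated SELECT in the log rather than relying on the preview.

```python
from typing import Sequence, Tuple


def build_order_by_filter(index_columns: Sequence[Tuple[str, bool]]) -> str:
    """Return a Record Selection Condition that adds ORDER BY <unique index>.

    index_columns: (column_name, is_descending) pairs, in index order.
    """
    if not index_columns:
        raise ValueError("the unique index needs at least one column")
    order_by = ", ".join(
        f"{name} DESC" if descending else name
        for name, descending in index_columns
    )
    # "1=1)" closes the auto-generated WHERE clause without filtering rows.
    return f"--FILTER: 1=1) ORDER BY {order_by} --"


def preview_select(table: str, condition: str) -> str:
    """ASSUMPTION: approximates the SQL, supposing the text after the
    --FILTER: keyword is wrapped as WHERE (<condition>)."""
    rest = condition.removeprefix("--FILTER:").strip()
    return f"SELECT * FROM {table} WHERE ({rest})"


cond = build_order_by_filter([("ORDER_ID", False), ("LINE_NO", True)])
print(cond)
print(preview_select("SALES.ORDER_LINES", cond))
```

The condition is entered per table, so the helper would be called once for each table that needs it.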

If you code things incorrectly, the generated SELECT statement will fail, and you will be able to see and debug this through the log.

-

How to implement "soft" deletes on replicated table

In general, "soft delete" is a term used when a delete operation is performed on a row of a table and you do not want that the row will be physicall...

-

How to perform a partial reload in Qlik Sense

Partial reloads can be performed in Qlik Sense via the in-app Button object. More information is available under Partial Reload - Qlik Sense on Windows.

For older versions of Qlik Sense, a third party extension is available which can be used to achieve Partial Reload. Note that this third party extension is not covered by Qlik Support. Please contact the extension vendor for assistance.

Download: Qlik Branch Project: Qlik Sense Reload Button

Or: GitHub repository for the Qlik Sense Reload Button.

Documentation on how to load new and updated records with incremental load can be found here: Loading new and updated records with incremental load

-

QMC Reload Failure Despite Successful Script in Qlik Sense Nov 2023 and above

Reload fails in the QMC even though the script part is successful in Qlik Sense Enterprise on Windows November 2023 and above.

When you are using NetApp-based storage, you might see an error when publishing and replacing an app, or when reloading a published app. In the QMC you will see that the script load itself finished successfully, but the task failed after that.

ERROR QlikServer1 System.Engine.Engine 228 43384f67-ce24-47b1-8d12-810fca589657

Domain\serviceuser QF: CopyRename exception:

Rename from \\fileserver\share\Apps\e8d5b2d8-cf7d-4406-903e-a249528b160c.new

to \\fileserver\share\Apps\ae763791-8131-4118-b8df-35650f29e6f6

failed: RenameFile failed in CopyRenameExtendedException: Type '9010' thrown in file

'C:\Jws\engine-common-ws\src\ServerPlugin\Plugins\PluginApiSupport\PluginHelpers.cpp'

in function 'ServerPlugin::PluginHelpers::ConvertAndThrow'

on line '149'. Message: 'Unknown error' and additional debug info:

'Could not replace collection

\\fileserver\share\Apps\8fa5536b-f45f-4262-842a-884936cf119c] with

[\\fileserver\share\Apps\Transactions\Qlikserver1\829A26D1-49D2-413B-AFB1-739261AA1A5E],

(genericException)'

<<< {"jsonrpc":"2.0","id":1578431,"error":{"code":9010,"parameter":"Object move failed.","message":"Unknown error"}}

ERROR Qlikserver1 06c3ab76-226a-4e25-990f-6655a965c8f3

20240218T040613.891-0500 12.1581.19.0

Command=Doc::DoSave;Result=9010;ResultText=Error: Unknown error

0 0 298317 INTERNAL sa_scheduler b3712cae-ff20-4443-b15b-c3e4d33ec7b4

9c1f1450-3341-4deb-bc9b-92bf9b6861cf Taskname Engine Not available

Doc::DoSave Doc::DoSave 9010 Object move failed.

06c3ab76-226a-4e25-990f-6655a965c8f3

Resolution

Potential workarounds

- Roll back your upgrade to a version earlier than November 2023

- Change the storage to a file share on a Windows server

Cause

The most plausible cause currently is that the engine in these versions has issues releasing file locks. We are actively investigating the root cause, but there is no fix available yet.

An update will be provided as soon as there is more information to share.

Internal Investigation ID(s)

QB-25096

QB-26125

Environment

- Qlik Sense Enterprise on Windows November 2023 and above

-

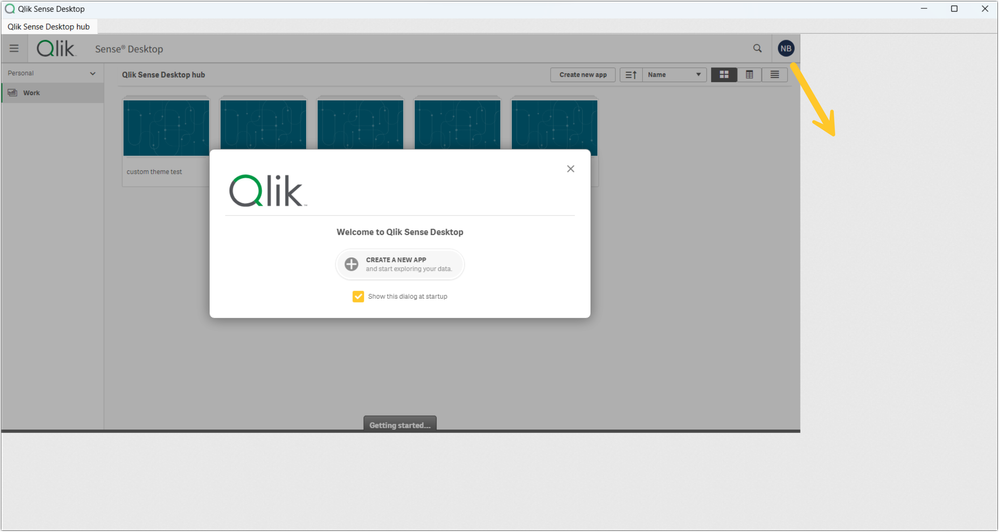

Qlik Sense Desktop: Display scaling issue with zoom higher than 100%

After installing Qlik Sense Desktop February 2024 or upgrading from a previous version, the display scaling no longer works as expected.

Blank space is shown to the right and bottom of the Qlik Sense client:

Resolution

This issue is caused by QB-25016. A fix will be deployed with the Qlik Sense Desktop May 2024 release.

Workaround:

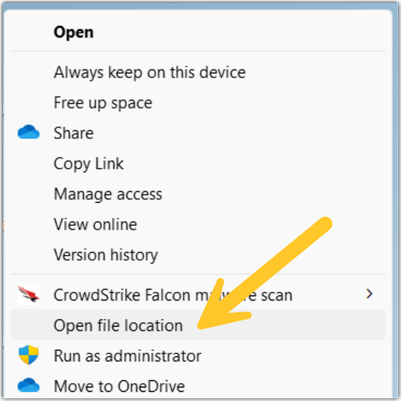

- Close Qlik Sense Desktop Feb 2024

- Right-click the Qlik Sense Desktop icon, and select Open file location

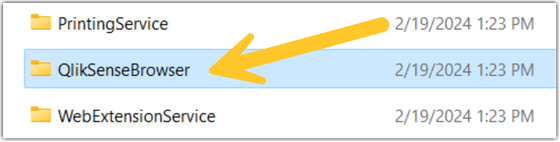

- Open the QlikSenseBrowser directory

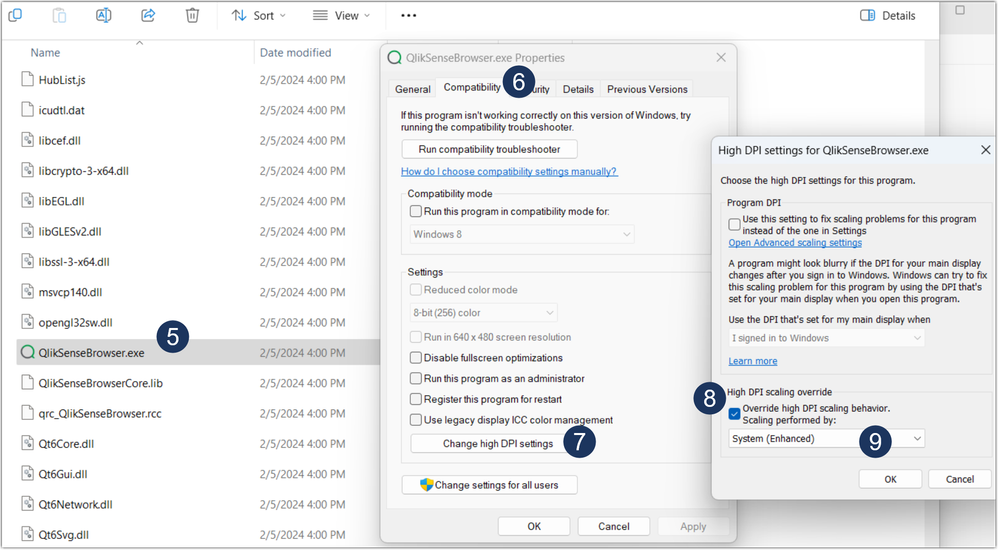

- In the QlikSenseBrowser directory, find QlikSenseBrowser.exe

- Right-click QlikSenseBrowser.exe and open Properties

- Go to the Compatibility tab

- Click Change high DPI settings

- Check Override high DPI scaling behavior

- From the dropdown list, select System (Enhanced)

- Apply the change, and open Qlik Sense Desktop

Fix Version:

Qlik Sense Desktop May 2024

Cause

Product Defect ID: QB-25016

Environment

- Qlik Sense Desktop Feb 2024

-

AAALR is registered in the Qlik Sense logs

The following messages will appear in the engine trace system logs:

QVGeneral: when AAALR(63.312046) is greater than 1.000000, we suggest using new row applicator to improve time and mem effeciency.

QVGeneral: - aggregating on 'RecruiterStats'(%DepartmentID) with Cardinal(87), for Object: in Doc: ffe8a825-b52e-4ceb-aea2-30de0f2c3306

There have also been reports of end users seeing the message "Internal Engine error" when opening apps while the messages above are present.

For QlikView, see also the article SE_LOG: when AAALR(1072.471418) is greater than 1.000000, we suggest using new row applicator to improve time and mem efficiency.

Environment:

- Qlik Sense Enterprise on Windows, all versions

"AAALR" is a very low level concept deep in the engine. Generally speaking it means the average length of aggregation array. The longer this array is, the more memory and CPU power are to be used by the Engine to get aggregation results for every hypercube node.

When AAALR is greater than 1.0, normally the customer has a large data set and suffers slow responses and high memory usage in their app. In this case, Qlik Sense has a setting called DisableNewRowApplicator (default value is 1).

By setting this parameter to “0”, Qlik Sense will use a new algorithm which is optimized for large data set to do the aggregation, and will use much less memory and CPU power.

For customers seeing AAALR warnings, changing this setting has resulted in drastic performance increases.
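To check whether an app's engine logs contain these warnings before changing the setting, the AAALR value can be pulled out of the log text. The Python sketch below assumes the message format shown above (AAALR(<number>)); the function names are hypothetical, and locating and reading the trace log files is left out.

```python
import re
from typing import Iterable, Iterator

# Matches the value in lines such as
# "QVGeneral: when AAALR(63.312046) is greater than 1.000000, ..."
AAALR_PATTERN = re.compile(r"AAALR\((?P<value>\d+(?:\.\d+)?)\)")


def aaalr_values(log_lines: Iterable[str]) -> Iterator[float]:
    """Yield every AAALR value found in the given log lines."""
    for line in log_lines:
        match = AAALR_PATTERN.search(line)
        if match:
            yield float(match.group("value"))


def has_aaalr_warning(log_lines: Iterable[str], threshold: float = 1.0) -> bool:
    """True if any logged AAALR value is greater than the threshold."""
    return any(value > threshold for value in aaalr_values(log_lines))


sample = ["QVGeneral: when AAALR(63.312046) is greater than 1.000000, "
          "we suggest using new row applicator to improve time and mem effeciency."]
print(list(aaalr_values(sample)), has_aaalr_warning(sample))
```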

Possible setting values for DisableNewRowApplicator:

1 - Use Engine default

0 - Use new row applicator where Engine seems suitable

-1 - Force new row applicator all the time

Resolution:

- Stop all Qlik Sense services from the Windows Control Panel / Services

- Locate the “C:\ProgramData\Qlik\Sense\Engine\Settings.ini”

- Add this portion to the Engine Settings.ini file (press <enter> at the end of DisableNewRowApplicator=0 so the cursor rests on a blank line below it)

[Settings 7]

DisableNewRowApplicator=0

<---- the cursor should be here when saving the file

- Restart all Qlik Sense services

- (If multinode) Complete the above steps on every node.
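If the change has to be repeated on every node, the edit itself can be scripted. The Python sketch below is a hypothetical helper, not a Qlik tool. It works on the file's text only (stopping the services and reading and writing Settings.ini are left to you), sets DisableNewRowApplicator under [Settings 7] without duplicating an existing entry, and always ends the text with a newline so that the last entry is followed by an empty line, as the steps above require.

```python
SECTION = "[Settings 7]"
KEY = "DisableNewRowApplicator"


def set_engine_setting(text: str, value: str = "0") -> str:
    """Return Settings.ini text with DisableNewRowApplicator under [Settings 7].

    An existing entry in that section is replaced rather than duplicated,
    and the result always ends with a newline.
    """
    out: list[str] = []
    in_section = False
    written = False
    for line in text.splitlines():
        stripped = line.strip()
        if stripped.startswith("[") and stripped.endswith("]"):
            # Leaving [Settings 7] without having seen the key: add it here.
            if in_section and not written:
                out.append(f"{KEY}={value}")
                written = True
            in_section = stripped == SECTION
        elif in_section and stripped.split("=", 1)[0].strip() == KEY:
            # Replace the old entry (once) instead of keeping both.
            if not written:
                out.append(f"{KEY}={value}")
                written = True
            continue
        out.append(line)
    if in_section and not written:
        out.append(f"{KEY}={value}")  # [Settings 7] was the last section
        written = True
    if not written:
        out += [SECTION, f"{KEY}={value}"]  # section did not exist yet
    return "\n".join(out) + "\n"


print(set_engine_setting("[Settings 7]\nDisableNewRowApplicator=1"), end="")
```

The file's encoding is not covered by this article, so check it before writing the result back to C:\ProgramData\Qlik\Sense\Engine\Settings.ini.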

Related Content:

-

Qlik Talend Studio: NoClassDefFoundError for SAP DestinationDataProvider

When executing a Talend Studio job using an SAP Connection, the job may fail with the following error:

Connection failure. You must change the SAP Settings.

java.lang.NoClassDefFoundError: com/sap/conn/jco/ext/DestinationDataProvider

at org.talend.repository.sap.SAPClientManager.init(SAPClientManager.java:121)

at org.talend.repository.sap.SAPClientManager.init(SAPClientManager.java:95)

at org.talend.repository.sap.ui.wizards.SAPConnectionForm$10.run(SAPConnectionForm.java:374)

at org.eclipse.jface.operation.ModalContext$ModalContextThread.run(ModalContext.java:122)

Caused by: java.lang.ClassNotFoundException: com.sap.conn.jco.ext.DestinationDataProvider cannot be found by org.talend.libraries.sap_7.3.1.20211105_0234-patch

at org.eclipse.osgi.internal.loader.BundleLoader.findClassInternal(BundleLoader.java:511)

at org.eclipse.osgi.internal.loader.BundleLoader.findClass(BundleLoader.java:422)

at org.eclipse.osgi.internal.loader.BundleLoader.findClass(BundleLoader.java:414)

at org.eclipse.osgi.internal.loader.ModuleClassLoader.loadClass(ModuleClassLoader.java:153)

at java.base/java.lang.ClassLoader.loadClass(ClassLoader.java:521)

... 4 more

Resolution

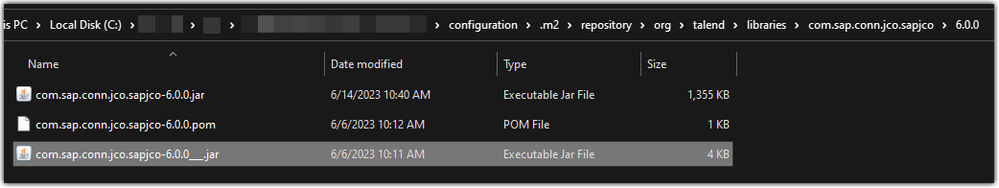

The root cause is that Studio was initialised with the wrong version of the JAR, which needs to be replaced with the official sapjco3.jar file from SAP.

To resolve:

- Browse to your Talend Studio's installation folder

- Go to <Studio installation folder>\configuration\.m2\repository\org\talend\libraries\com.sap.conn.jco.sapjco

- Remove com.sap.conn.jco.sapjco

- Reinstall sapjco3.jar
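Assuming the Remove step means deleting the cached com.sap.conn.jco.sapjco folder at the path above, that step can be sketched in Python as follows. The function name is hypothetical, the Studio installation folder is whatever you pass in, and Talend Studio should be closed first. Reinstalling sapjco3.jar afterwards is still done through Studio.

```python
import shutil
from pathlib import Path

# Location of the cached library, relative to the Studio installation folder.
SAPJCO_CACHE = Path("configuration", ".m2", "repository", "org", "talend",
                    "libraries", "com.sap.conn.jco.sapjco")


def remove_cached_sapjco(studio_dir: Path) -> bool:
    """Delete the cached com.sap.conn.jco.sapjco folder, if present.

    Returns True when a folder was removed and False when there was
    nothing to remove.
    """
    cached = Path(studio_dir) / SAPJCO_CACHE
    if not cached.is_dir():
        return False
    shutil.rmtree(cached)
    return True
```

For example, remove_cached_sapjco(Path(r"C:\Talend\Studio")), where the path is a placeholder for your own installation folder.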

Related Content

For further investigation on installing external modules to Talend Studio, please refer to Installing external modules

Environment

-

Using Kafka with Qlik Replicate

This Techspert Talks session covers:

- How Replicate works with Kafka

- Kafka Terminology

- Configuration best practices

Chapters:

- 01:06 - Kafka Archit...

-

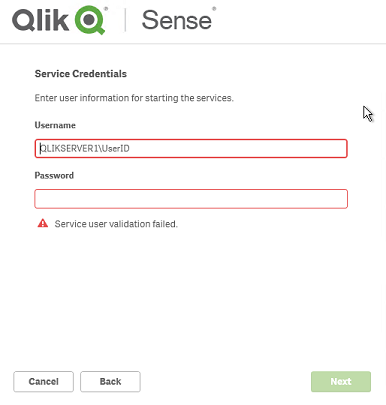

Qlik Sense setup or upgrade fails during install on invalid Service Credentials


During an installation or upgrade of Qlik Sense, the administrator encounters an error indicating that the validation of the Qlik Sense service account has failed.

Environment:

Qlik Sense Enterprise on Windows, all versions

Resolution:

The most common reason for this is that the credentials entered are not valid. Make sure that these credentials work elsewhere, for example by launching an application such as Notepad.exe using these credentials.

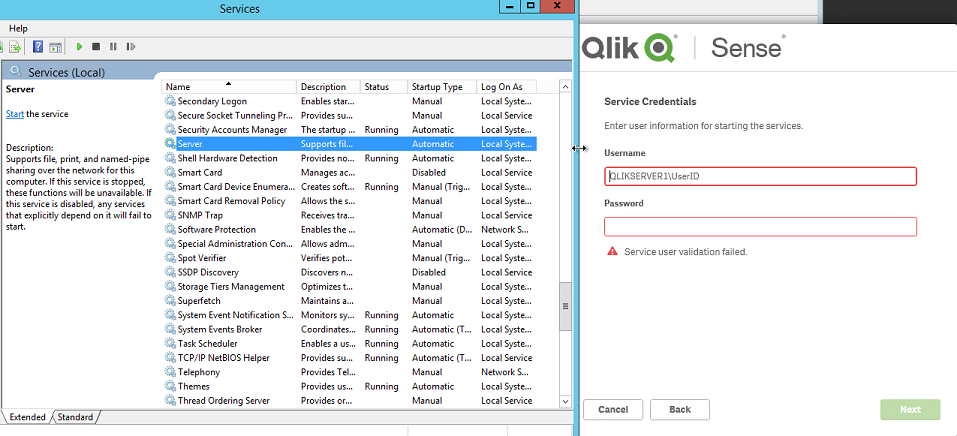

Other potential causes:

- (1) Server service not running (when using a local account)

- (2) Service user is entered in the Kerberos form user@fqdn.ad.domain.name instead of NetBiosDomainName\User, as reported here.

- (3) Generalized timeout validating the credentials

For (1):

- Start > Administrative Tools > Services

- Navigate to the Server service:

- Start the Server service

- Re-enter the credentials

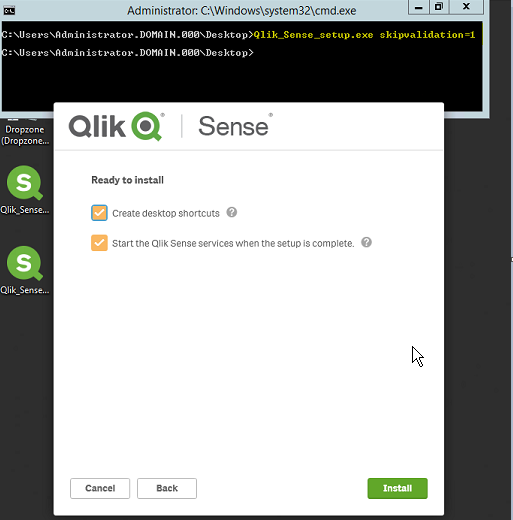

For (2):

- Cancel the installer

- Start > Command Prompt

- Change the directory to the directory where the installer is present

- Execute the installer using the skipvalidation parameter

- Before 3.1.2: -skipvalidation

- 3.1.2 or higher: skipvalidation=1

- Example: Qlik_Sense_setup.exe skipvalidation=1

- Continue through the installer

- Note: When using this configuration flag the installer will do no validation of the credentials entered. If bad credentials are entered, then the administrator will encounter a failure of the services to start due to these incorrect credentials.
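The version rule for the skipvalidation parameter fits in a small Python helper. The function names are hypothetical and the version is given as a tuple of integers, such as (3, 1, 2). The list returned by installer_command could be passed to subprocess.run from the installer's directory, but that is not shown, and the note above still applies: the installer then does no credential validation at all.

```python
def skip_validation_arg(version: tuple[int, ...]) -> str:
    """Installer argument that skips service-credential validation.

    version: Qlik Sense version as a tuple of integers, e.g. (3, 1, 2).
    """
    # Tuples compare element by element, so (3, 1) < (3, 1, 2) < (3, 2).
    return "-skipvalidation" if version < (3, 1, 2) else "skipvalidation=1"


def installer_command(version: tuple[int, ...],
                      installer: str = "Qlik_Sense_setup.exe") -> list[str]:
    """Argument list for launching the installer without credential checks."""
    return [installer, skip_validation_arg(version)]


print(" ".join(installer_command((3, 1, 2))))
```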

-

The Qlik Sense Monitoring Applications for Cloud and On Premise

Qlik Sense offers a range of Monitoring Applications that come pre-installed with the product. This article aims to provide information on where to find information about them or where to download them.

Content:

- Qlik Cloud

- Entitlement Analyzer for Qlik Cloud

- App Analyzer for Qlik Cloud

- Reload Analyzer for Qlik Cloud

- Access Evaluator for Qlik Cloud

- How to automate your Qlik Cloud Monitoring Apps

- Other Qlik Cloud Monitoring Apps

- Qlik Application Automation Monitoring App

- OEM Dashboard for Qlik Cloud

- Monitoring Apps for Qlik Sense Enterprise on Windows

- Operations Monitor and License Monitor

- App Metadata Analyzer

- The Monitoring & Administration Topic Group

- Other Apps

Qlik Cloud

Entitlement Analyzer for Qlik Cloud

The Entitlement Analyzer is a Qlik Sense application built for Qlik Cloud that provides an overview of entitlement usage in your Qlik Cloud tenant.

The app provides:

- Which users are accessing which apps

- Consumption of Professional, Analyzer and Analyzer Capacity entitlements

- Whether you have the correct entitlements assigned to each of your users

- Where your Analyzer Capacity entitlements are being consumed, and forecasted usage

For more information and to download the app and usage instructions, see The Entitlement Analyzer for Qlik Cloud.

App Analyzer for Qlik Cloud

The App Analyzer is a Qlik Sense application built for Qlik Cloud, which helps you to analyze and monitor Qlik Sense applications in your tenant.

The app provides:

- App, Table and Field memory footprints

- Synthetic keys and island tables to help improve app development

- Threshold analysis for fields, tables, rows and more

- Reload times and peak RAM utilization by app

For more information and to download the app and usage instructions, see Qlik Cloud App Analyzer.

Reload Analyzer for Qlik Cloud

The Reload Analyzer is a Qlik Sense application built for Qlik Cloud, which provides an overview of data refreshes for your Qlik Cloud tenant.

The app provides:

- The number of reloads by type (Scheduled, Hub, In App, API) and by user

- Data connections and used files of each app’s most recent reload

- Reload concurrency and peak reload RAM

- Reload tasks and their respective statuses

For more information and to download the app and usage instructions, see Qlik Cloud Reload Analyzer.

Access Evaluator for Qlik Cloud

The Access Evaluator is a Qlik Sense application built for Qlik Cloud, which helps you to analyze user roles, access, and permissions across a tenant.

The app provides:

- User and group access to spaces

- User, group, and share access to apps

- User roles and associated role permissions

- Group assignments to roles

For more information and to download the app and usage instructions, see Qlik Cloud Access Evaluator.

How to automate your Qlik Cloud Monitoring Apps

Do you want to automate the installation, upgrade, and management of your Qlik Cloud Monitoring apps? With the Qlik Cloud Monitoring Apps Workflow, made possible through Qlik's Application Automation, you can:

- Install/update the apps with a fully guided, click-through installer using an out-of-the-box Qlik Application Automation template.

- Programmatically rotate the API key that is required for the data connection on a schedule using an out-of-the-box Qlik Application Automation template. This ensures that the data connection is always operational.

- Get alerted whenever a new version of a monitoring app is available using Qlik Data Alerts.

For more information and usage instructions, see Qlik Cloud Monitoring Apps Workflow Guide.

Other Qlik Cloud Monitoring Apps

Qlik Application Automation Monitoring App

This app monitors Qlik Application Automation usage statistics for a Qlik Cloud tenant. The linked article explains how to set up its load script and how to use the app.

For more information and to download the app and usage instructions, see Qlik Application Automation monitoring app.

OEM Dashboard for Qlik Cloud

The OEM Dashboard is a Qlik Sense application for Qlik Cloud designed for OEM partners to centrally monitor usage data across their customers’ tenants. It provides a single pane to review numerous dimensions and measures, compare trends, and quickly spot issues across many different areas.

Although this dashboard is designed for OEMs, it can also be used by partners and customers who manage more than one tenant in Qlik Cloud.

For more information and to download the app and usage instructions, see Qlik Cloud OEM Dashboard & Console Settings Collector.

The Qlik Cloud monitoring applications are provided as-is and are not supported by Qlik. Over time, the APIs and metrics used by the apps may change, so it is advised to monitor each repository for updates and to update the apps promptly when new versions are available.

If you have issues while using these apps, support is provided on a best-efforts basis by contributors to the repositories on GitHub.

Monitoring Apps for Qlik Sense Enterprise on Windows

Operations Monitor and License Monitor

The Operations Monitor loads service logs to populate charts covering performance history of hardware utilization, active users, app sessions, results of reload tasks, and errors and warnings. It also tracks changes made in the QMC that affect the Operations Monitor.

The License Monitor loads service logs to populate charts and tables covering token allocation, usage of login and user passes, and errors and warnings.

For a more detailed description of the sheets and visualizations in both apps, visit the story About the License Monitor or About the Operations Monitor that is available from the app overview page, under Stories.

Basic information can be found here:

The License Monitor

The Operations Monitor

Both apps come pre-installed with Qlik Sense.

If a direct download is required: Sense License Monitor | Sense Operations Monitor. Note that Support can only be provided for Apps pre-installed with your latest version of Qlik Sense Enterprise on Windows.

App Metadata Analyzer

The App Metadata Analyzer app provides a dashboard to analyze Qlik Sense application metadata across your Qlik Sense Enterprise deployment. It gives you a holistic view of all your Qlik Sense apps, including granular level detail of an app's data model and its resource utilization.

Basic information can be found here:

App Metadata Analyzer (help.qlik.com)

For more details and best practices, see:

App Metadata Analyzer (Admin Playbook)

The app comes pre-installed with Qlik Sense.

The Monitoring & Administration Topic Group

Looking to discuss the Monitoring Applications? Here we share key versions of the Sense Monitor Apps and the latest QV Governance Dashboard as well as discuss best practices, post video tutorials, and ask questions.

Other Apps

LogAnalysis App: The Qlik Sense app for troubleshooting Qlik Sense Enterprise on Windows logs

Sessions Monitor, Reloads-Monitor, Log-Monitor

Connectors Log Analyzer

All other apps are provided as-is, and no ongoing support will be provided by Qlik Support.

-

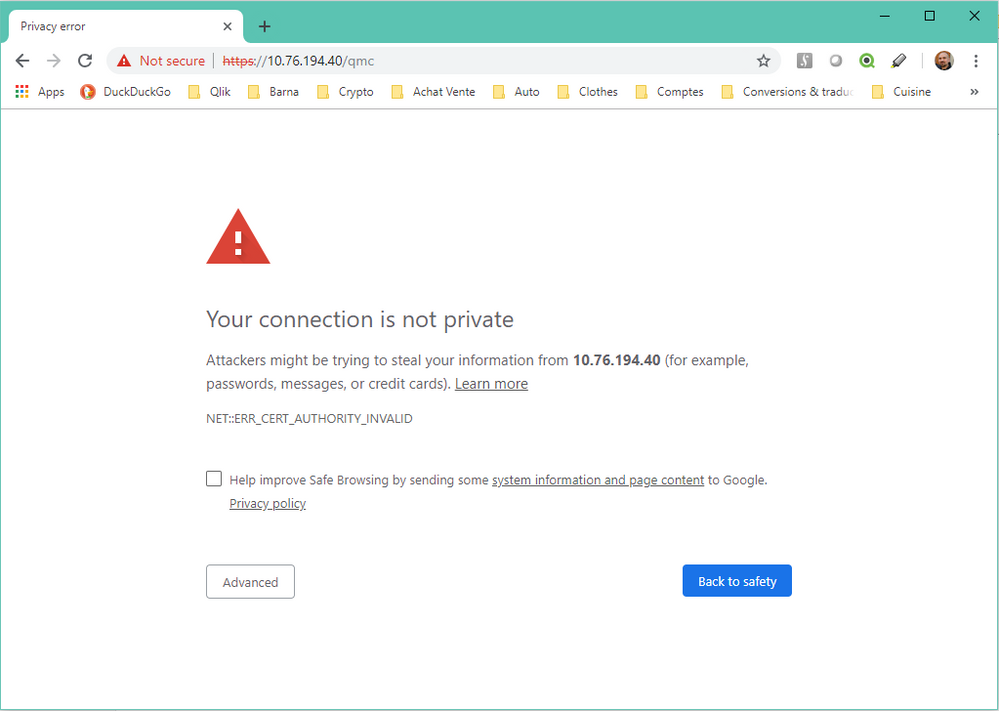

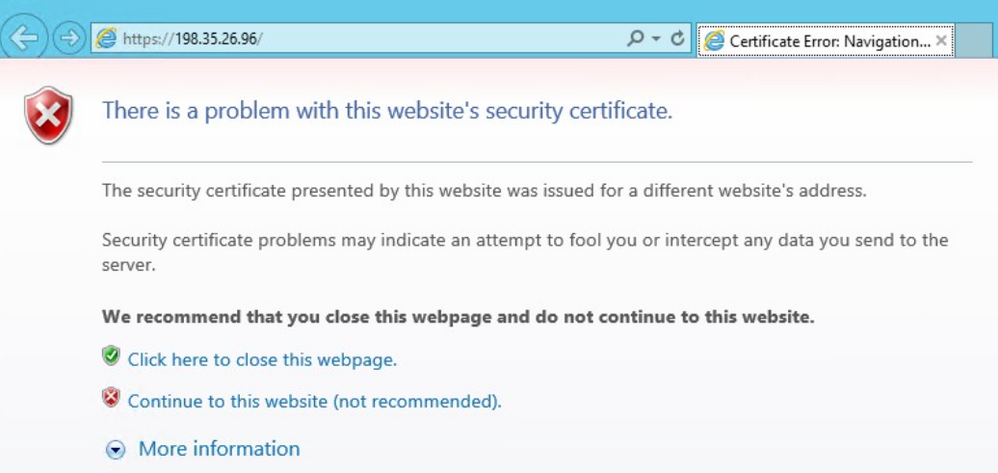

Qlik Sense: Problem with Security Certificate, Connection is untrusted

Accessing the Qlik Sense Enterprise on Windows hub or Management Console with HTTPS leads to a number of different browser errors (depending on browser vendor):

There is a problem with this website's security certificate.

Problem with security certificate or connection is untrusted.

Your connection is not private.

NET::ERR_CERT_AUTHORITY_INVALID

After selecting Continue, the page is displayed with a red cross in the URL bar and the error:

Certificate error

Environment:

Qlik Sense Enterprise on Windows

Cause

The client's web browser does not consider the certificate provided by the server to be trusted.

By default, Qlik Sense Enterprise on Windows is installed with a self-signed server certificate. Self-signed certificates are not trusted by clients, so this error is expected.

Resolution

Use the correct URL

- Do not use the IP address of the server. The certificate is assigned to the hostname.

- If you are using an alias of the server name, use the hostname the certificate is issued to instead.

Proceed without action

- You can proceed without taking further action (not recommended)

Obtain and install a trusted certificate

- Obtain a valid Signed Server Certificate matching the Proxy node URL, from a trusted Certificate Authority (such as VeriSign, GlobalSign or trusted Enterprise CA) or your corporate certificate authority. See Qlik Sense: Compatibility information for third-party SSL certificates to use with HUB/QMC for requirements.

- Install the certificate as documented in Qlik Sense Hub and QMC with a custom SSL certificate.

Note: This still requires that the root certificate is installed locally on all clients. With trusted Certificate Authorities, this is usually the case by default.

Import the root certificate on all local clients.

Don't have a certificate from a trusted Certificate Authority? You will need to import the root certificate on all local clients.

This can be done with a Group Policy; see Distribute Certificates to Client Computers by Using Group Policy.
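To see which of these cases applies on a given client, a quick check can help. The Python sketch below is an illustration, not a Qlik tool: hostname_matches compares the name typed in the browser against a certificate's subjectAltName entries (in the dict layout returned by Python's ssl getpeercert()), and check_trust attempts a verified TLS handshake using the default trusted roots Python loads on that client, returning the verification error (for example a self-signed or hostname-mismatch message) or None when the certificate is trusted. Port 443 and the helper names are assumptions; use the hostname of your proxy node.

```python
import ipaddress
import socket
import ssl
from typing import Optional


def _same_ip(value: str, ip) -> bool:
    try:
        return ipaddress.ip_address(value.strip()) == ip
    except ValueError:
        return False


def hostname_matches(host: str, cert: dict) -> bool:
    """Check a host name or IP against a certificate's subjectAltName entries."""
    sans = cert.get("subjectAltName", ())
    try:
        ip = ipaddress.ip_address(host)
    except ValueError:
        ip = None
    if ip is not None:
        # An IP only matches an explicit "IP Address" entry, which is why
        # browsing to the server's IP fails for a hostname certificate.
        return any(kind == "IP Address" and _same_ip(value, ip) for kind, value in sans)
    host = host.lower().rstrip(".")
    for kind, value in sans:
        if kind != "DNS":
            continue
        pattern = value.lower().rstrip(".")
        if pattern.startswith("*."):
            # A wildcard covers exactly one left-most label.
            label, _, rest = host.partition(".")
            if label and rest == pattern[2:]:
                return True
        elif host == pattern:
            return True
    return False


def check_trust(host: str, port: int = 443, timeout: float = 5.0) -> Optional[str]:
    """Try a verified TLS handshake; return None if trusted, else the reason."""
    context = ssl.create_default_context()  # this client's default trusted roots
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            with context.wrap_socket(sock, server_hostname=host):
                return None
    except ssl.SSLCertVerificationError as exc:
        return exc.verify_message
```

For example, check_trust("qlikserver.corp.example.com") (a placeholder name) returns None once the certificate and its root are trusted on that client; running it with the server's IP address instead typically reports a hostname mismatch.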

Related Content

Identifying Qlik Sense root CA and server certificates in certificate store

Qlik Sense: Compatibility information for third-party SSL certificates to use with HUB/QMC

Qlik Sense Mobile on iOS: cannot open apps on the HUB

Qlik Sense: Trust a self-signed certificate on the client

How to: automation monitoring app for tenant admins with Qlik Application Automa...

This article shows how to use the Qlik Application Automation Monitoring App. It explains how to set up the load script and how to use the app for monitoring Qlik Application Automation usage statistics for a cloud tenant.

Index:

- How to configure the load script

- Qlik Application Automation Monitoring app content

- Loading data

- Limitations

The app included is an example and not an official app. The app is provided as-is.

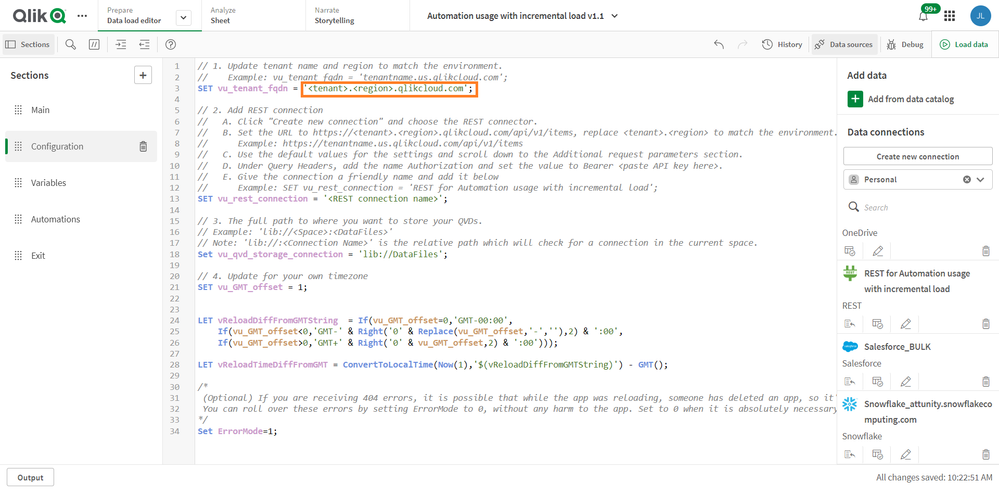

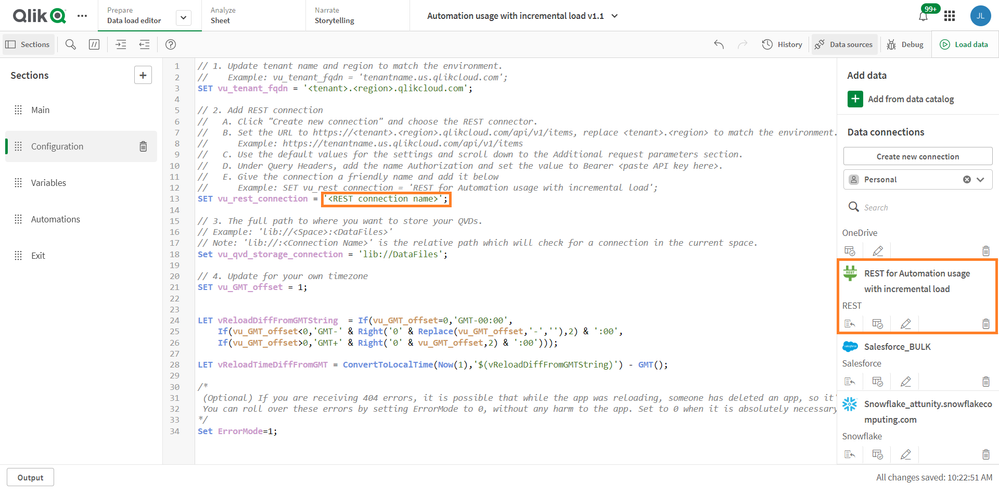

How to configure the load script

There are four steps for the configuration of the load script:

- Configure your tenant here by typing the name of your tenant and region here:

- Configure your data connection by typing the name of an existing data connection. The data connection must be configured in the pane to the right, see picture. If you do not have one, you must create one.

- Configure your data folder location here:

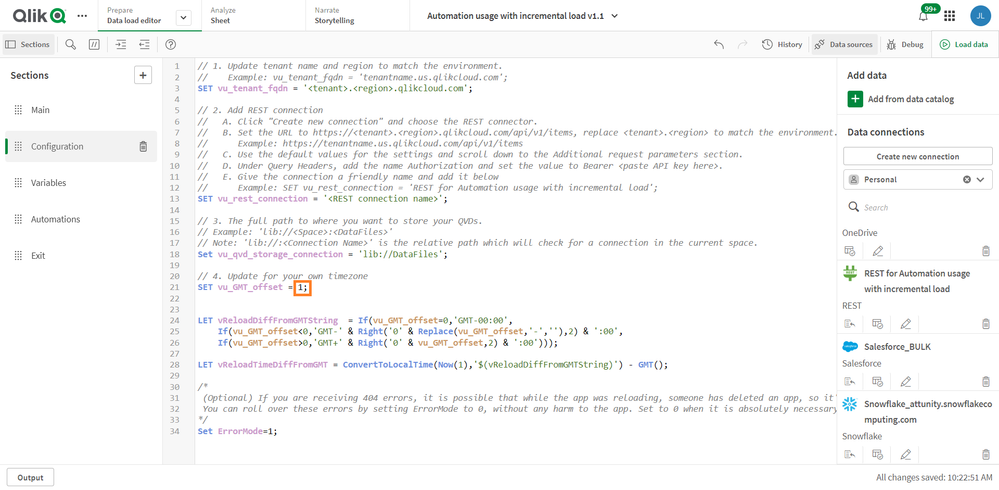

- Configure your time zone here, using deviation from GMT, for example, Central European time +1:
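The time zone setting is a fixed offset from GMT, and it determines which hour each run falls into in the app's hourly charts. The Python sketch below illustrates that conversion; it assumes run timestamps are ISO 8601 strings in UTC with a trailing Z (an assumption, not confirmed by this article), and to_local is a hypothetical helper, not part of the app's load script.

```python
from datetime import datetime, timedelta, timezone


def to_local(utc_text: str, gmt_offset_hours: float) -> datetime:
    """Convert an ISO 8601 UTC timestamp to a fixed offset from GMT."""
    ts = datetime.fromisoformat(utc_text.replace("Z", "+00:00"))
    # A fixed offset: no daylight saving time adjustment is applied.
    return ts.astimezone(timezone(timedelta(hours=gmt_offset_hours)))


if __name__ == "__main__":
    run = "2024-03-01T23:30:00Z"
    print(to_local(run, 1))  # counted in the 00:00 hour with +1
```

Because the offset is fixed, +1 for Central European Time is one hour off during summer time, when CEST is GMT+2.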

Qlik Application Automation Monitoring app content

The monitoring app includes four sheets that present various information on the Qlik Application Automation usage in the current tenant.

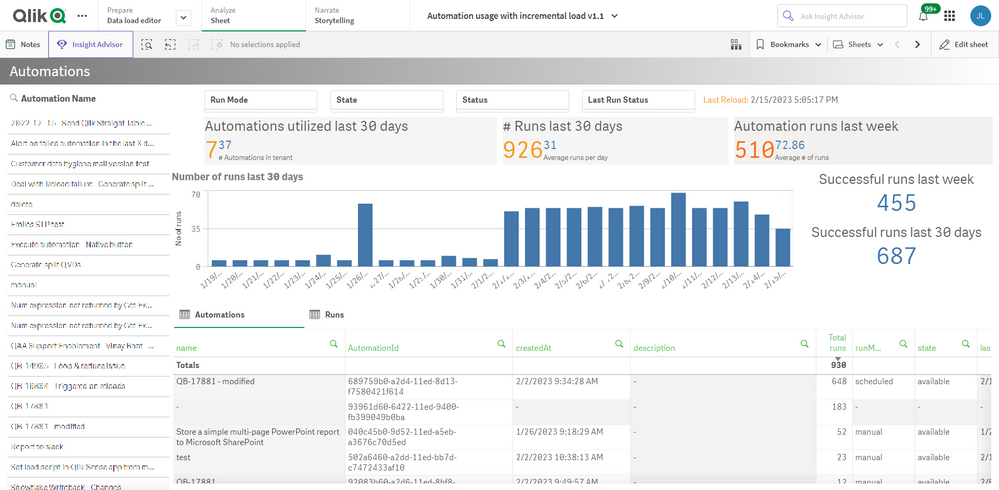

Automations

Filtering is available based on Automation Name, Last Run Status, Run Mode, State & Status.

- # Runs last 30 days

- Automation runs last week

- Automations in tenant

- Automations utilized last 30 days

- Average # of runs

- Average runs per day

- Successful runs last 30 days

- Successful runs last week

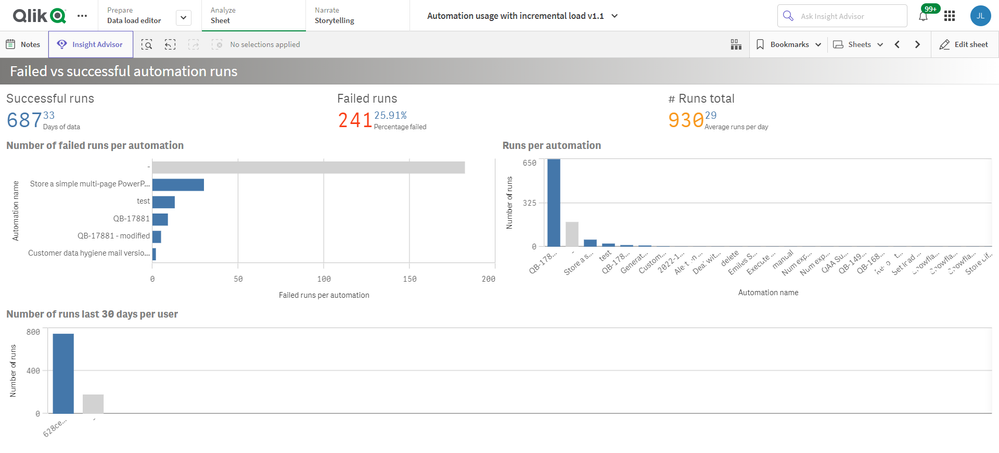

Failed vs successful automation runs

- # Runs total

- Average runs per day

- Days of data

- Failed runs

- Number of failed runs per automation

- Number of runs last 30 days per user

- Percentage failed

- Runs per automation

- Successful runs

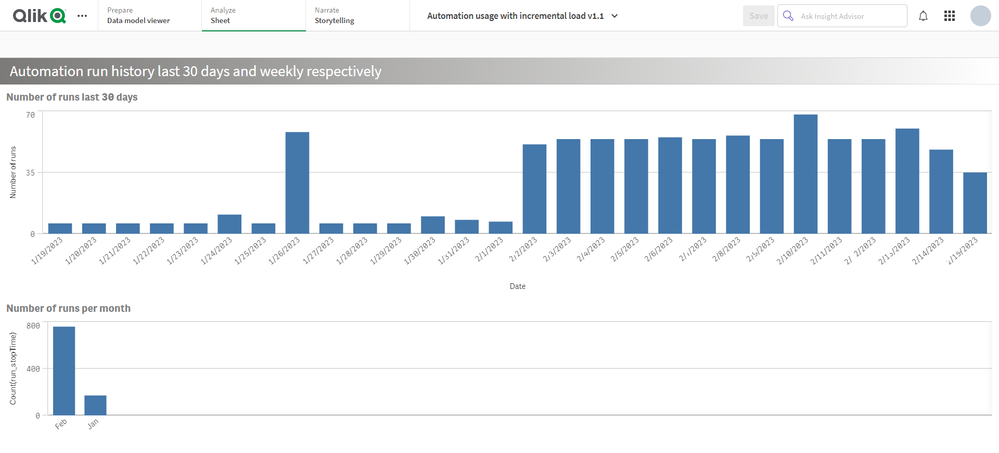

Automation run history last 30 days and weekly respectively

- Number of runs last 30 days

- Number of runs per month

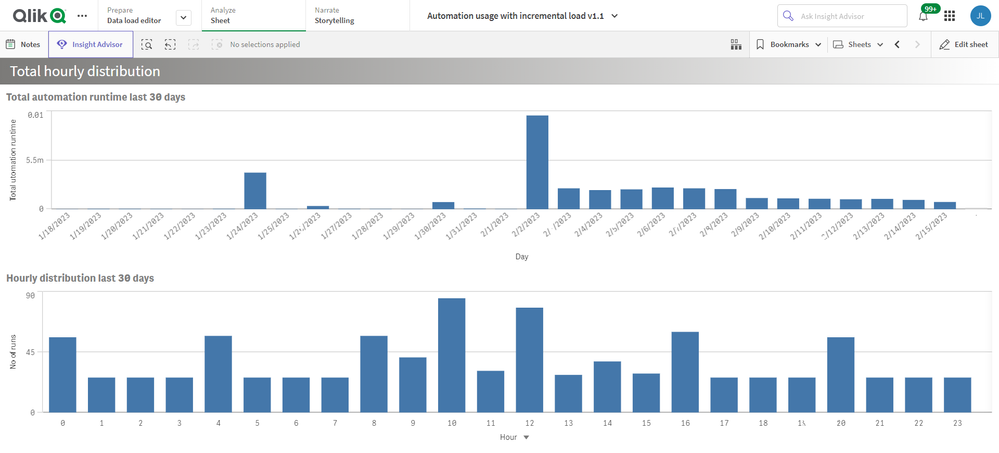

Total hourly distribution

- Hourly distribution last 30 days

- Total automation runtime last 30 days

Loading data

The Qlik Application Automation Monitoring app supports incremental loading: only data added since the previous reload is loaded into the app.

Important: Qlik Application Automation runs are only stored for 30 days. The first time data is loaded into the app, only the last 30 days of history are available. After that initial load, the incremental load keeps older data in the app. As long as the app is reloaded at least once every 30 days, the data in the app remains continuous.
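The incremental load described above amounts to merging each reload's results into the stored history and checking the reload gap against the 30-day retention. This Python sketch illustrates that logic only; it is not the app's load script, and the run fields id and status are assumed names.

```python
from datetime import datetime, timedelta

# Qlik Application Automation keeps run history for 30 days.
RETENTION = timedelta(days=30)


def merge_runs(history: dict, fetched: list) -> dict:
    """Keep stored runs, add new ones, and refresh re-fetched ones by id.

    A run fetched again (for example one still running at the previous
    reload) replaces the stored copy; runs that have aged out of the API
    stay in the history.
    """
    merged = dict(history)
    for run in fetched:
        merged[run["id"]] = run
    return merged


def has_gap(last_reload: datetime, now: datetime) -> bool:
    """True if more than 30 days passed, so some runs may never be loaded."""
    return now - last_reload > RETENTION
```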

Limitations

- At the initial load, only 30 days of history are loaded. This is because Qlik Application Automation history is only stored for 30 days.

- For continuous data in the app, data must be loaded at least every 30 days.

- The API can only return the last 5000 runs for every automation. Please keep this in mind when scheduling the reloads of this app.
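The 5000-run limit and the 30-day retention together bound how far apart reloads can be. A quick estimate for your busiest automation, as a Python sketch (max_reload_interval_days is a hypothetical helper, and the rate should be your own observed peak runs per day):

```python
def max_reload_interval_days(peak_runs_per_day: float,
                             api_run_limit: int = 5000,
                             retention_days: int = 30) -> float:
    """Longest gap between reloads that should not lose runs of one automation.

    Two limits apply: the API returns at most api_run_limit runs per
    automation, and run history is kept for retention_days days.
    """
    if peak_runs_per_day <= 0:
        return float(retention_days)
    return float(min(retention_days, api_run_limit / peak_runs_per_day))
```

For example, an automation triggered every minute runs 1440 times a day, and 5000 / 1440 ≈ 3.5, so the app would need to reload at least every three days rather than every 30.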

Environment

Qlik Cloud

Qlik Application Automation

The information in this article is provided as-is and is to be used at your own discretion. Depending on tool(s) used, customization(s), and/or other factors, ongoing support on the solution below may not be provided by Qlik Support.

-

Qlik Sense Desktop hub/app view not in full screen

When Qlik Sense Desktop runs on Windows 10 on a high-DPI machine with display scaling set to 150%, the hub and apps do not fit the full screen.

Cause

A compatibility issue between Qlik Sense Desktop and the display scaling of the monitor(s).

Resolution

- Option 1: Change the Windows display scaling to either 100% or 125%.

- Option 2: Run Qlik Sense Desktop in Windows 8 compatibility mode:

- Right-click the Qlik Sense Desktop icon

- Go to Properties

- Go to the Compatibility tab

- In the Compatibility mode section, check "Run this program in compatibility mode for:" and select "Windows 8".

- Click OK

- Option 3: Override the high DPI scaling behavior:

- Ensure Windows is set to use 150% scaling (or the preferred scaling level).

- Right-click the Qlik Sense Desktop icon

- Choose Properties.

- Select the Compatibility tab.

- Click the Change high DPI settings button.

- In the Qlik Sense Properties window, go to the "High DPI scaling override" section.

- Check the box for "Override high DPI scaling behavior."

- Choose the option "Scaling performed by: System".

- This makes the application use the system scaling applied in the Windows 10 display settings.

Note: If Qlik Sense Desktop is already running, close it before following the DPI scaling steps.

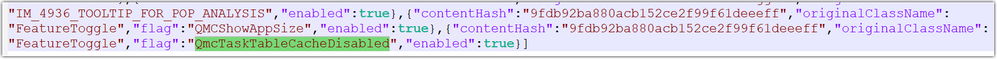

Tasks in the Qlik Sense Management Console don't update to show the correct stat...

Executing or modifying tasks (for example, changing the owner or renaming an app) in the Qlik Sense Management Console and refreshing the page does not show the correct task status. The issue affects the Content Admin and Deployment Admin roles.

The behaviour began after an upgrade of Qlik Sense Enterprise on Windows.

Fix version:

This issue can be mitigated beginning with August 2021 by enabling the QMCCachingSupport Security Rule.

Solution for August 2023 and above:

Enable QmcTaskTableCacheDisabled.

To do so:

- Navigate to C:\Program Files\Qlik\Sense\CapabilityService\

- Locate and open the capabilities.json

- Modify or add the QmcTaskTableCacheDisabled flag and set it to true:

{"contentHash":"CONTENTHASHHERE","originalClassName":"FeatureToggle","flag":"QmcTaskTableCacheDisabled","enabled":true}

where CONTENTHASHHERE is the same contentHash value used by all other features listed in capabilities.json.

This disables caching on the tasks table only, leaving the overall QMC cache intact for performance. If you previously set QmcCacheEnabled, QmcDirtyChecking, and QmcExtendedCaching to false, set them back to true.

- Restart the Qlik Sense services

Workaround for earlier versions:

Upgrade to the latest Service Release and disable the caching functionality:

To do so:

- Navigate to C:\Program Files\Qlik\Sense\CapabilityService\

- Locate and open the capabilities.json

- Set the following flags to false:

- QmcCacheEnabled

- QmcDirtyChecking

- QmcExtendedCaching

- Restart the Qlik Sense services

NOTE: Make sure to use lower case when setting values to true or false, as the capabilities.json file is case-sensitive.
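Both the August 2023 solution and the earlier workaround come down to editing flags in capabilities.json. The Python sketch below automates that edit under these assumptions: capabilities.json is a JSON array of objects shaped like the line shown above, the first entry's contentHash is the value shared by all features, and set_flags / update_file are hypothetical helpers. It keeps a .bak copy of the original file, and json.dumps writes true and false in lower case, which satisfies the note above.

```python
import json
from pathlib import Path

# Default path from this article.
CAPABILITIES = Path(r"C:\Program Files\Qlik\Sense\CapabilityService\capabilities.json")


def set_flags(entries: list, flags: dict) -> list:
    """Set FeatureToggle flags in the parsed capabilities.json array.

    Existing entries are updated; missing flags are appended with the
    contentHash used by the other entries (assumed to be shared by all).
    """
    content_hash = entries[0].get("contentHash", "") if entries else ""
    seen = set()
    for entry in entries:
        flag = entry.get("flag")
        if flag in flags:
            entry["enabled"] = flags[flag]
            seen.add(flag)
    for flag, enabled in flags.items():
        if flag not in seen:
            entries.append({
                "contentHash": content_hash,
                "originalClassName": "FeatureToggle",
                "flag": flag,
                "enabled": enabled,
            })
    return entries


def update_file(path: Path, flags: dict) -> None:
    """Apply set_flags to a capabilities.json file, keeping a .bak copy."""
    original = path.read_text(encoding="utf-8-sig")
    path.with_name(path.name + ".bak").write_text(original, encoding="utf-8")
    entries = set_flags(json.loads(original), flags)
    # json.dumps writes Python True/False as lowercase true/false.
    path.write_text(json.dumps(entries, indent=2), encoding="utf-8")
```

For example, on August 2023 or later: update_file(CAPABILITIES, {"QmcTaskTableCacheDisabled": True}). For the earlier workaround: update_file(CAPABILITIES, {"QmcCacheEnabled": False, "QmcDirtyChecking": False, "QmcExtendedCaching": False}). The Qlik Sense services still need to be restarted afterwards.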

Should the issue persist after applying the workaround/fix, contact Qlik Support.

Internal Investigation ID(s):

Environment

-

QlikView: Disable individual items in Ajax toolbar

This article aims to document how to create a customized CSS file to disable individual items in the Ajax toolbar, for example, More or Add Object.

This approach has two steps: first, create a custom CSS file that hides individual Ajax toolbar items; second, reference that file from a copy of opendoc.htm.

Content:

- Creating customized files

- Changes in QlikView Server to use the new opendoc.htm file

- Available toolbar items

- Related Content

Creating customized files

- Create a custom .css file to hide, for example, the More menu. Add the following to the file:

.ctx-menu-action-FOLDOUTDOWN

{

display: none !important;

}

- Create a copy of opendoc.htm located in C:\Program Files\QlikView\Server\QlikViewClients\QlikViewAjax

Do not alter the original opendoc.htm!

- Rename the copy and edit it, adding a reference to the custom .css file you created.

In this example, the custom.css file is located in the /htc folder:

<link rel="stylesheet" type="text/css" media="screen" href="/QvAjaxZfc/htc/default.css" />

<link rel="stylesheet" type="text/css" media="screen" href="/QvAjaxZfc/htc/custom.css" />

<link rel="apple-touch-icon" href="/QvAjaxZfc/htc/Images/Touch/touch-icon.png" />
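The opendoc.htm edit can also be scripted. The following Python sketch copies opendoc.htm to a new file and inserts the custom.css link directly after the default.css link, leaving the original untouched. It assumes the default.css link tag looks like the one shown above and that the file is UTF-8 encoded; make_custom_opendoc and add_custom_css are hypothetical helpers.

```python
import re
from pathlib import Path

CUSTOM_LINK = ('<link rel="stylesheet" type="text/css" media="screen" '
               'href="/QvAjaxZfc/htc/custom.css" />')
DEFAULT_CSS = re.compile(r'<link[^>]+href="/QvAjaxZfc/htc/default\.css"[^>]*>')


def add_custom_css(html: str) -> str:
    """Insert the custom.css link right after the default.css link (idempotent)."""
    if "/QvAjaxZfc/htc/custom.css" in html:
        return html
    match = DEFAULT_CSS.search(html)
    if match is None:
        raise ValueError("default.css link not found; edit the copy by hand")
    # Loading custom.css after default.css lets its rules take precedence.
    return html[:match.end()] + "\n" + CUSTOM_LINK + html[match.end():]


def make_custom_opendoc(ajax_dir: Path, new_name: str = "opendoc_new.htm") -> Path:
    """Write a patched copy of opendoc.htm; the original file is only read."""
    source = ajax_dir / "opendoc.htm"
    target = ajax_dir / new_name
    target.write_text(add_custom_css(source.read_text(encoding="utf-8")), encoding="utf-8")
    return target
```

For example, make_custom_opendoc(Path(r"C:\Program Files\QlikView\Server\QlikViewClients\QlikViewAjax")) creates opendoc_new.htm next to the original.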

Changes in QlikView Server to use the new opendoc.htm file

- Open the QlikView Management Console

- Navigate to System > Setup > QlikView Web Servers

- Open your QlikView WebServer

- Open the AccessPoint tab

- In the AccessPoint Settings section, change the Client Paths for Full Browser and Small Devices to the path and name of your opendoc.htm copy, such as opendoc_new.htm:

/QvAjaxZfc/opendoc_new.htm

From here on, the customized opendoc_new.htm will be used when opening any document in the Ajax client, and the customized CSS will be applied.

If you want to apply the custom behavior only to specific documents:

- Open the QlikView Management Console

- Navigate to Documents > User Documents

- Choose your document

- Open the Server tab

- In the Availability section, check Full Browser and Small Device Version and set the Url to

/QvAjaxZfc/opendoc_new.htm

Available toolbar items

These are the available toolbar items:

- ctx-menu-action-CLEARSTATE

- ctx-menu-action-BCK

- ctx-menu-action-FWD

- ctx-menu-action-UNDO

- ctx-menu-action-REDO

- ctx-menu-action-LS (lock all selections)

- ctx-menu-action-US (unlock all selections)

- ctx-menu-action-CS (current selections)

- ctx-menu-action-TOGGLENOTES

- ctx-menu-action-REPOSITORY

- ctx-menu-action-NEWSHEETOBJ

- ctx-menu-action-SHOWFIELDS

- ctx-menu-action-ADDBM

- ctx-menu-action-REMBM

- ctx-menu-action-BookMarks

- ctx-menu-action-Reports

- ctx-menu-action-FOLDOUTDOWN

- ctx-menu-action-SENDBACKTOACCESSPOINT
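Hiding several toolbar items means repeating the same rule for each class. The Python sketch below generates that CSS from item names taken from the list above; hide_toolbar_css is a hypothetical helper, and its output is meant to be saved as, or pasted into, the custom.css file referenced from the opendoc copy.

```python
def hide_toolbar_css(items: list) -> str:
    """Build CSS rules that hide the given Ajax toolbar items.

    Items may be given as "FOLDOUTDOWN", "ctx-menu-action-FOLDOUTDOWN",
    or ".ctx-menu-action-FOLDOUTDOWN".
    """
    prefix = "ctx-menu-action-"
    rules = []
    for item in items:
        name = item.lstrip(".")
        if not name.startswith(prefix):
            name = prefix + name
        rules.append(f".{name}\n{{\n    display: none !important;\n}}\n")
    return "\n".join(rules)


if __name__ == "__main__":
    print(hide_toolbar_css(["FOLDOUTDOWN", "NEWSHEETOBJ"]))
```

FOLDOUTDOWN is the More menu, as shown earlier; that NEWSHEETOBJ corresponds to Add Object is an assumption based on the class name.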

The information in this article is provided as-is and will be used at your discretion. Depending on the tool(s) used, customization(s), and/or other factors, ongoing support on the solution below may not be provided by Qlik Support.

Environment

Related Content

-

How to Install PostgreSQL ODBC client on Linux for PostgreSQL Source Endpoint

This article explains how to install the PostgreSQL ODBC client on Linux for a PostgreSQL source endpoint.

Overview

- Download the PostgreSQL ODBC client software.

- Upload the downloaded files to your Replicate Linux Server.

- Install the RPM files in the specified order.

- Take note of considerations or setup instructions during the installation process.

Steps

- Download the PostgreSQL ODBC client software

Please choose the appropriate version of the PostgreSQL client software and the corresponding folder for your Linux operating system. In this article, we are installing PostgreSQL ODBC Client version 13.2 on Linux 8.5.

- Upload the downloaded files to a temporary folder in your Qlik Replicate Linux Server

- Install the RPM files in the specified order

rpm -ivh postgresql13-libs-13.2-1PGDG.rhel8.x86_64.rpm

rpm -ivh postgresql13-odbc-13.02.0000-1PGDG.rhel8.x86_64.rpm

- Take note of considerations or setup instructions during the installation process.

- Note the installation folder (default: "/usr/pgsql-13/lib")

- Open site_arep_login.sh in /opt/attunity/replicate/bin/ and add the installation folder to LD_LIBRARY_PATH:

export LD_LIBRARY_PATH=/usr/pgsql-13/lib:$LD_LIBRARY_PATH

- Save the site_arep_login.sh file and restart the Replicate services.

- unixODBC is a prerequisite. If it's not already present on your Linux Server, make sure to install it before PostgreSQL ODBC client software installation:

rpm -ivh unixODBC-2.3.7-1.el8.x86_64.rpm

- "/etc/odbcinst.ini" and "/etc/odbc.ini" are optional and typically required only when troubleshooting connectivity issues with "isql".

- "psql" is optional and typically required only when troubleshooting connectivity issues with psql. It can be installed from the following package:

rpm -ivh postgresql13-13.2-1PGDG.rhel8.x86_64.rpm
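After the installation steps, a quick pre-flight check on the Replicate server can confirm the pieces are in place. This Python sketch assumes the default paths from this article (/usr/pgsql-13/lib and /opt/attunity/replicate/bin/site_arep_login.sh) and uses the presence of the odbcinst command as a proxy for unixODBC being installed; script_has_export and preflight are hypothetical helpers, and passing the check does not prove the endpoint can connect.

```python
import shutil
from pathlib import Path

# Default locations from this article; adjust for your installation.
PG_LIB = "/usr/pgsql-13/lib"
LOGIN_SCRIPT = Path("/opt/attunity/replicate/bin/site_arep_login.sh")
EXPORT_LINE = f"export LD_LIBRARY_PATH={PG_LIB}:$LD_LIBRARY_PATH"


def script_has_export(script_text: str, lib_dir: str = PG_LIB) -> bool:
    """True if an uncommented export puts lib_dir on LD_LIBRARY_PATH."""
    for raw in script_text.splitlines():
        line = raw.strip()
        if line.startswith("#") or not line.startswith("export LD_LIBRARY_PATH="):
            continue
        value = line.split("=", 1)[1].strip("\"'")
        if lib_dir in value.split(":"):
            return True
    return False


def preflight() -> list:
    """Return a list of problems found on this server (empty if none)."""
    problems = []
    if not Path(PG_LIB).is_dir():
        problems.append(f"{PG_LIB} not found: is the PostgreSQL ODBC RPM installed?")
    if shutil.which("odbcinst") is None:
        problems.append("odbcinst not on PATH: unixODBC may be missing")
    if not LOGIN_SCRIPT.is_file() or not script_has_export(LOGIN_SCRIPT.read_text()):
        problems.append(f"add to {LOGIN_SCRIPT}: {EXPORT_LINE}")
    return problems


if __name__ == "__main__":
    for problem in preflight() or ["no problems found"]:
        print(problem)
```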

Environment

- Qlik Replicate all versions

- PostgreSQL Server all versions

- PostgreSQL Client version 13.2

-

Qlik Talend Studio: cMessagingEndPoint ResolveEndpointFailedException: Failed to...

After patching Qlik Talend Studio to R2023-07, an old salesforce:event/xxx message pull will fail with:

org.apache.camel.ResolveEndpointFailedException: Failed to resolve endpoint: salesforce://event/Customer_Details_New__e?rawPayload=true&replayId=-1 due to: event/Customer_Details_New__e

Resolution

Since Camel 3.19.0, operationName is mandatory in the salesforce URI. See camel-salesforce - Endpoint syntax should have mandatory operationName and CAMEL-18317: camel-salesforce: Add subscribe operation for details.

To fix this issue, change the old salesforce:event/xxx URI to salesforce:subscribe:event/xxx.
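If several jobs still use the old URI form, the change can be applied mechanically. The Python sketch below is a hypothetical helper (migrate_salesforce_uri is not part of Talend or Camel) that rewrites salesforce:event/xxx, and the salesforce://event/xxx form seen in the error message, to salesforce:subscribe:event/xxx while keeping the query options. URIs that already name an operation are left unchanged.

```python
import re

# Pre-Camel-3.19 consumer form, with or without "//" after the scheme.
_OLD_EVENT_URI = re.compile(r"^salesforce:(?://)?event/")


def migrate_salesforce_uri(uri: str) -> str:
    """Rewrite salesforce:event/X to salesforce:subscribe:event/X, keeping options."""
    return _OLD_EVENT_URI.sub("salesforce:subscribe:event/", uri, count=1)
```

For example, salesforce:event/Customer_Details_New__e?rawPayload=true&replayId=-1 becomes salesforce:subscribe:event/Customer_Details_New__e?rawPayload=true&replayId=-1.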

Environment

Talend Studio v 7.3.1; v 8.0

-

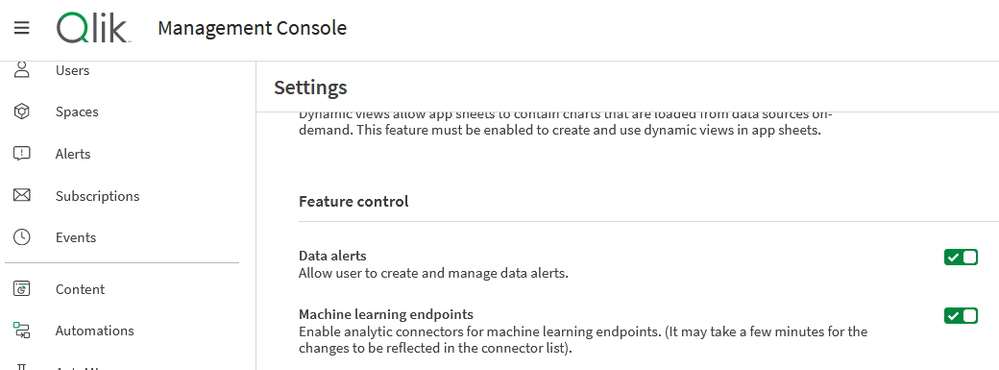

DataRobot Connector unavailable in Qlik Cloud data connections

When attempting to create a DataRobot connection in the Qlik Cloud tenant hub, the connection cannot be found.

Navigating to Add New > Data connection > and searching for DataRobot does not find the data connection option.

Resolution

- In the Management Console (TMC), go to Settings

- Scroll down to the Feature control section

- Enable the following switch: Machine learning endpoints

- Return to the tenant hub

- Now go to Add New

- Click Data connection

- Type DataRobot and select it to continue with creating the DataRobot connection

Related Content

Environment

Qlik Cloud

The information in this article is provided as-is and will be used at your discretion. Depending on the tool(s) used, customization(s), and/or other factors, ongoing support on the solution below may not be provided by Qlik Support.

-

License Monitor Task fails with message Error: HTTP protocol error 403 (Forbidde...

Issue reported:

- License Monitor Task fails with message Error: HTTP protocol error 403 (Forbidden)

Diagnosis:

- Open Tasks in the QMC and click the information icon in the Status column of the failed task

- Download the Script log file

- The downloaded Script Log file for the License Monitor ends with:

2019-12-10 21:11:49 Error: HTTP protocol error 403 (Forbidden):

2019-12-10 21:11:49

2019-12-10 21:11:49 The server refused to fulfill the request.

2019-12-10 21:11:49 Execution Failed

2019-12-10 21:11:49 Execution finished.
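When many task logs need checking, the diagnosis above can be scripted. This Python sketch scans script-log text for the HTTP protocol error line shown above; it assumes each line starts with a "YYYY-MM-DD hh:mm:ss" timestamp as in this example, and find_http_errors / is_forbidden_failure are hypothetical helpers.

```python
import re

# "Error: HTTP protocol error 403 (Forbidden):" as in the log excerpt above.
_HTTP_ERROR = re.compile(r"Error: HTTP protocol error (\d{3}) \(([^)]*)\)")


def find_http_errors(log_text: str) -> list:
    """Return (timestamp, status, reason) for each HTTP protocol error line."""
    found = []
    for line in log_text.splitlines():
        match = _HTTP_ERROR.search(line)
        if match:
            timestamp = line[:19]  # "YYYY-MM-DD hh:mm:ss" prefix (assumed format)
            found.append((timestamp, int(match.group(1)), match.group(2)))
    return found


def is_forbidden_failure(log_text: str) -> bool:
    """True if the log contains a 403 error and the reload failed."""
    statuses = [status for _, status, _ in find_http_errors(log_text)]
    return 403 in statuses and "Execution Failed" in log_text
```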

Cause(s):

The License Monitor acquires license data from the Qlik Sense Repository Service (QRS) through QRS API calls. Requests to these endpoints are by default made by the user running the Qlik Sense services, but the related data connections may have been configured to run as a custom user.

The user executing the API calls must fulfill two requirements:

- User is not blocked in Qlik Sense

- User is assigned RootAdmin role

Resolution:

Assign the RootAdmin role to the user running the Qlik Sense services

This is most often the only required action, since the License Monitor app by default relies on the user running the Qlik Sense services.

- In the QMC, navigate to 'Users'

- On the Users page, find the row for the service account in the User ID column

- Edit that service account row

- Click the Add role button

- Hover over the new field and select RootAdmin in the list

- Reload the License Monitor app

Run the monitor app connections with a custom RootAdmin account

NOTE: This step is only required if a custom data connection user is required to comply with local security policies.

Use a custom RootAdmin account for the Data Connections that start with monitor_apps_REST_*

- In the QMC, in the left panel under Manage Content, select Data Connections

- Under the 'Name' column are the connections starting with monitor_apps_REST_*

- Edit each of these data connections. Each one has a User ID and a Password field

- Enter a user into the User ID field (domain\username format)

- Enter a password in the Password field

- The user must be assigned the RootAdmin role:

- In the QMC, navigate to Users

- On the Users page, find the row for the desired user account in the User ID column

- Edit that user's row

- Click the Add role button

- Hover over the new field and select RootAdmin in the list

- Reload the License Monitor app

Confirm that the UDC filter includes the account used for the data connections

- Confirm that the user account used for the data connections is not marked as Blocked in the QMC Users section

- If the user is blocked, adjust the UDC filter to include the user account used for the data connections

- Sync the UDC and reload the License Monitor app