Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- All Forums

- :

- QlikView App Dev

- :

- Re: Interval match and performace

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Interval match and performace

Hi Guys,

I have the following scenario:

There are different Master data Tables like "Station, Measuringbar, Variable" and the keyfigure table with "HourlyData"

Now each of these master data tables have the following fields, ID, valid from, valid to etc. I made a calendar for two Years which are needed for reporting. My Datamodel looks like this normally without considering the Validity of the dates from valid from, valid to fields.

Station:

Load

Station_Id as %Key_Station_Id,

Valid_From_Station, //YYMMDDHH, Date with Hour Format 2012122106 where 06 is 06 Hrs

Valid_To_Station, //YYMMDDHH, Date with Hour Format

Station_Art_ID,

Station_Art_Txt

;

Variable:

Load

Station_Id & '_' & Measuringbar_Id as %Key_ID_Station_Measuringbar ,

Station_Id as %Key_Station_Id, // Connection to Station Table

Measuringbar_Id,

Upstream,

Downstream,

Valid_From_Variable, //YYMMDDHH, Date with Hour Format

Valid_To_Variable //YYMMDDHH, Date with Hour Format

;

MeasuringBar:

Load

Station_Id & '_' & Measuringbar_Id as %Key_ID_Station_Measuringbar ,

Valid_From_MeasuringBar, //YYMMDDHH, Date with Hour Format

Valid_To_MeasuringBar //YYMMDDHH, Date with Hour Format

;

HourlyData:

Station_Id & '_' & Measuringbar_Id as %Key_ID_Station_Measuringbar ,

Date & Hour as Date_Key,

Hour,

Status,

Volume,

Energy

;

Calendar:

Date & Hour as Date_Key, // Hrs 1 to 24 generated automatically with loop

Year,

Month,

Week,

Day

...

;

So my task is to build the datamodel taking in to count the Interval match validity when I select a Date (Year, Month, Day) of the calendar. Only the valid data from master tables at that particular point of time should be considered when I select a year, month, day etc...

For this I have done the following:

I built a table KeyFigures.

Qualify *;

Unqualify '%KEY*',DATE_VALID_FROM,DATUM_VALID_TO;

KeyFigures:

Load

'#STATION#'& %Key_Station_Id as %KEY_ID_OBJEKT,

'#STATION#' & Valid_From_Station &'#'& Valid_To_Station as %KEY_ID_OBJEKT_VALID_FROM_VALID_TO,

%Key_Station_Id

Valid_From_Station as DATE_VALID_FROM,

Valid_To_Station as DATUM_VALID_TO

from Station

Join (KeyFigures)

Load

'#MeasuringBar#'& Measuringbar_Id as %KEY_ID_WGOBJEKT,

'#MeasuringBar#'& %Measuringbar &'#'& Valid_From_MeasuringBar &'#'& Valid_To_MeasuringBar as %KEY_ID_OBJEKT_VALID_FROM_VALID_TO,

Valid_From_MeasuringBar as DATE_VALID_FROM,

Valid_To_MeasuringBar as DATUM_VALID_TO

from MeasuringBar;

Join (KeyFigures)

Load

'#Variable#'& %Key_ID_Station_Measuringbar as %KEY_ID_WGOBJEKT,

'#Variable#'&Valid_From_Variable &'#'& Valid_To_Variable as %KEY_ID_OBJEKT_VALID_FROM_VALID_TO,

Valid_From_Variable as DATE_VALID_FROM,

Valid_To_Variable as DATE_VALID_TO

from Variable;

UNQUALIFY *;

KeyFigures_Detail:

Load Distinct

%KEY_ID_WGOBJEKT,

%KEY_ID_WGOBJEKT_GUELTIG_VON_GUELTIG_BIS,

DATE_VALID_FROM,

DATE_VALID_TO

Resident KeyFigures;

inner Join IntervalMatch(Date_Key)

Load DATE_VALID_FROM,

DATE_VALID_TO

Resident KeyFigures_Detail;

Join(Variable)

Load Distinct

%KEY_ID_WGOBJEKT,

%KEY_ID_WGOBJEKT_GUELTIG_VON_GUELTIG_BIS,

Date_Key

Resident KeyFigures_Detail;

drop Table KeyFigures_Detail;

Drop Field %KEY_ID_WGOBJEKT from KeyFigures; // to remove the synthetic keys

Variable_MS: //Final Variable_MS as a Facttable

Load *,

%Key_ID_Station_Measuringbar &'#'& Date_Key as %KEY_ID_STATION_MSCHIENE_Date_Key

Resident Variable;

Drop Table Variable;

Drop Field Date_Key from HourlyData;

Now I get a Starschema Datamodel with the correct data and links but I could load only one month. For that the Table "Variable_MS" contains 800 Million datasets which is too much. The data is getting multiplicated due to interval match.

How can I change my Datamodel to be performant using Interval match and still get the 2 Years data.

I hope I will get some positive answers from profis..

Thanks in advance

Sravan

- « Previous Replies

-

- 1

- 2

- Next Replies »

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I would not put times in the master calendar. I my suggested data model above, I have split it into two master tables: One master calendar (one record per date) and a master time table. The reason is exactly the one you have encountered now - the calendar otherwise becomes too big.

If you separate the dates from the times by

date(floor(timestamp#(TimeStampField,'YYYYMMDDhh'))) as Date,

time(frac(timestamp#(TimeStampField,'YYYYMMDDhh'))) as Time,

then you can still populate the TagesWerte with dummy records, but just one record per day. The intervalmatch can still be made with the TimeStampField (YYYYMMDDhh).

HIC

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Henric,

Thanks for your input. I have solved other issues but still trying to solve the Dimension Table "Variable_MS".

This table contains a lot of Attributes with ValidFrom and ValidTo Dates. After spending two days, I am approaching you.

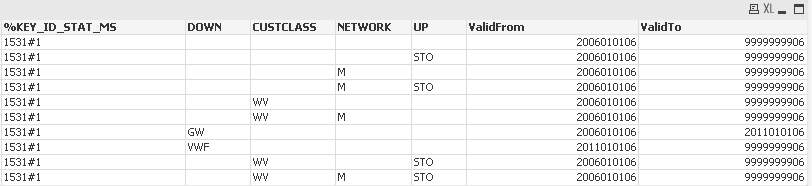

For example the Raw/Source Table as one example looks like this in the following figure. It contains a lot of Attributes like Down, Customerclass, Network, Up etc with seperate ValidFrom and ValidTo for each attribute.

Now what I have done in script previously was to load each attribute with separate table and outerjoin them together to get a table with datasetentries all in one entry of table for Reporting. But the join does not give correct results, when the ValidFrom and ValidTo is split like with Down =GW and Down= VWF in the above example. I dont get a outer join correct to get the attributes correct. I think I need to use a Interval match at this place before joining the individual tables. I tried but without success. Now Interval Match must be done between two tables with each table havinf a ValidFrom and ValidTo Dates. Looks a bit tricky...

Any Suggestions?

I am attaching the example with my problem..

Thanks a lot....

sravan

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Not sure I understand everything you want to do...

But, I think you need to ask yourself "what is the primary key for these attributes?" Is it %KEY_ID_KEY_MS and Interval? Then you should do a GROUP BY [%KEY_ID_KEY_MS], [ValidFrom], [ValidTo] when you load the attributes. Once loaded, you do an Intervalmatch to get a bridge table that links the table with your other data.

HIC

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Henric,

sorry for the confusion. I will make it simple to understand. The user selects a "ReportType" in a sheet and then he can see the Report based on the preselected data [If condition in the script]. Ideal example [Ideal.qvw] is as attached. If the ValidFrom and ValidTo is same in all the Attributes, they join together in one Report. This Works great. But this is not the case everytime.

The problem arises,If certain attributes have a different ValidFrom and ValidTo state. This is normally the case. Attached is one example [Example_test.qvw]

The attributes which have a different ValidFrom and ValidTo like DOWN = GF and VWF does not get selected when the "ReportType" is selected. I just need these also in my selection. Thats what I need.

I tried with "Group by" but it does not serve the purpose. May be you can help me.

Thanks a lot

sravan

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

hi Henric,

solved this by building a Table for each Attribute and by doing an Intervalmatch to get a bridge table that links the table with your other data.. Though the complete Appikation is Approx.2,6 GB, it works.

Thanks

Sravan

- « Previous Replies

-

- 1

- 2

- Next Replies »