Large volume data?

I have 2 tables: one containing a person_key and person details, and one containing the person_key and a mailshot_ID, plus mailshot details.

The person_key is a unique six-digit number.

There are roughly 750,000 persons, and each one might receive 5 mailshots a year.

I have simply linked the tables on person_key. This does not seem like a huge volume of data to me, but the resulting QlikView model seems large and slow.
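For scale, a rough upper estimate of the mailshot table's size, assuming every person receives all five mailshots in a year:

$$750{,}000 \text{ persons} \times 5 \text{ mailshots per year} = 3{,}750{,}000 \text{ mailshot rows per year}$$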

I have tried using AUTONUMBER on person_key, but since the key is already well structured, this makes little difference.
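Conceptually, AutoNumber replaces each distinct key value with a sequential integer (1, 2, 3, ...) in the order the values are first encountered during the reload, using one counter so that the same key gets the same integer in every table that uses it. The Python sketch below only illustrates that idea; it is not Qlik's implementation, and the key values and mailshot labels are made up.

```python
class AutoNumber:
    """Illustrative stand-in for AutoNumber: one shared counter per key field."""

    def __init__(self):
        self._ids = {}

    def __call__(self, value):
        # Assign the next integer (starting at 1) the first time a value is seen.
        if value not in self._ids:
            self._ids[value] = len(self._ids) + 1
        return self._ids[value]


person_an = AutoNumber()

# Hypothetical person_key values from the person table, in load order.
persons = [104233, 998101, 500017]
# Hypothetical (person_key, mailshot) rows from the mailshot table.
mailshots = [(998101, "M1"), (104233, "M2"), (998101, "M3")]

person_ids = [person_an(k) for k in persons]
mailshot_rows = [(person_an(k), m) for k, m in mailshots]

print(person_ids)     # [1, 2, 3]
print(mailshot_rows)  # [(2, 'M1'), (1, 'M2'), (2, 'M3')]
```

Because the counter is shared, the link between the two tables survives the renumbering. When the original key is already a compact integer, as a six-digit number is, there is little for this substitution to shrink.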

Does anyone have any hints or tips about the approach?

Sorry if this is a simple query, but I'm puzzled by the apparent poor performance.

Thanks

Do you have a screenshot of your data model? Is it just two tables? Which element of the dashboard seems slow?

Hi

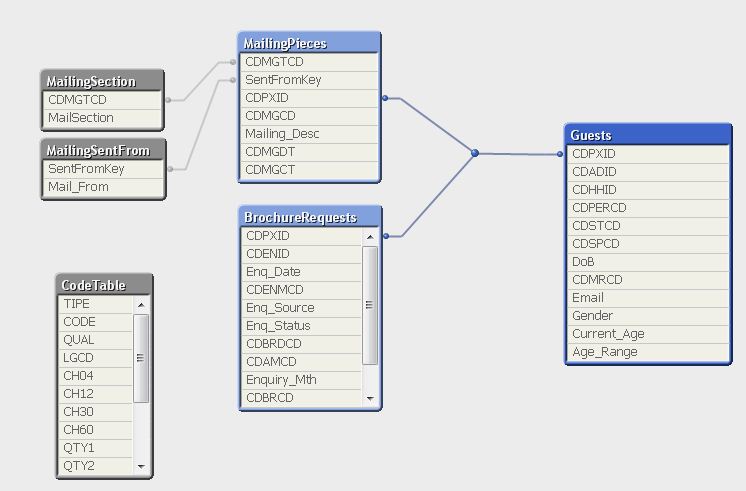

Well, as you'd expect, my description was a little cut down, but this is the model; the BrochureRequests table is (relatively) small. I hope you can follow it. The person_key is CDPXID.

Once loaded, performance is average, but the data is slow to load (30-40 minutes) and seems to produce a large QVW file. Ultimately, load time is not important because the reload will run overnight. I'm just concerned that my structure is poor.

Regards

Maybe the slowness comes from the expressions or calculated dimensions; it is probably not caused by the data model. 750,000 persons is not considered a "large volume".

Are all of those fields being used in your user interface? Loading can be slow if you are not performing an incremental refresh. I would suggest minimising the fields you load, then going to Settings > Document Properties > Sheets to review the memory usage and calculation time of your charts.
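An incremental refresh, in outline, keeps the rows saved by the previous reload (in QlikView this is commonly a QVD file) and reads only the rows added since then from the source, appending them to the stored rows. The Python sketch below shows only the simplest insert-only variant, under loudly stated assumptions: mailshot rows are never updated or deleted, and a hypothetical, ever-increasing `mailshot_id` identifies new rows. Sources with updates or deletes need additional steps.

```python
from typing import List, NamedTuple, Tuple


class Mailshot(NamedTuple):
    mailshot_id: int  # hypothetical: assumed to increase for every new row
    person_key: int
    detail: str


def incremental_refresh(stored: List[Mailshot],
                        source: List[Mailshot]) -> Tuple[List[Mailshot], int]:
    """Return the stored rows plus only the source rows newer than the last stored ID."""
    last_id = max((r.mailshot_id for r in stored), default=0)
    # In a real reload this filter would be a WHERE clause on the source query,
    # so only the new rows are transferred; here it filters an in-memory list.
    new_rows = [r for r in source if r.mailshot_id > last_id]
    return stored + new_rows, len(new_rows)


# Hypothetical data: two rows already stored, one new row in the source.
stored = [Mailshot(1, 104233, "spring"), Mailshot(2, 998101, "spring")]
source = stored + [Mailshot(3, 104233, "summer")]

rows, added = incremental_refresh(stored, source)
print(added, len(rows))  # 1 3
```

The point of the design is that the nightly cost grows with the number of new mailshots rather than with the full history.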