Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- Forums

- :

- Forums by Product

- :

- Products (A-Z)

- :

- Qlik Sense

- :

- Documents

- :

- Delta Tags – A new mechanism for efficiently handl...

- Edit Document

- Move Document

- Delete Document

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Delta Tags – A new mechanism for efficiently handling incremental reloads in QlikView and Qlik Sense

- Move Document

- Delete Document

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Delta Tags – A new mechanism for efficiently handling incremental reloads in QlikView and Qlik Sense

When working with large datasets in QlikView and Qlik Sense, it’s important to ensure the backend data processing jobs (Extracts and Transforms) perform efficiently, by following best practices to reduce execution time and keep resource usage at a minimum.

One of the best practices recommended for efficient data processing is implementing an incremental load strategy, at least in the Extract Layer. Whenever possible, you should also implement incremental data processing on the Transform layer.

One essential aspect of any delta load strategy, is implementing a mechanism for keeping track of when the QVDs were last updated, in order to determine the starting point of the next refresh cycle.

In this post, I am introducing a new technique that I’ve developed for keeping track of incremental loads in QlikView and Qlik Sense: Delta Tags.

In a nutshell, Delta Tags is a framework to keep track of delta reload jobs in QlikView and Qlik Sense, in which the delta timestamps are stored in the QVD file headers along with the rest of the data.

Avantages

There are three major advantages to implementing Delta Tags:

- Reliability: It makes the delta process highly reliable, since the actual data being processed is no longer separated from the metadata used in the incremental logic (i.e. the “last refreshed timestamp”).

- Consistency: QVD files can be moved around, restored from backups, etc. This framework guarantees that, when a QVD is restored to a previous version or changed by an external process, the load script will automatically detect the delta timestamps on the restored file and reprocess the data accordingly.

- Efficiency: the Delta Tags approach also makes the incremental loads highly efficient since the metadata in QVDs can be quickly read from the load script and with minimal resource usage.

How Delta Tags work

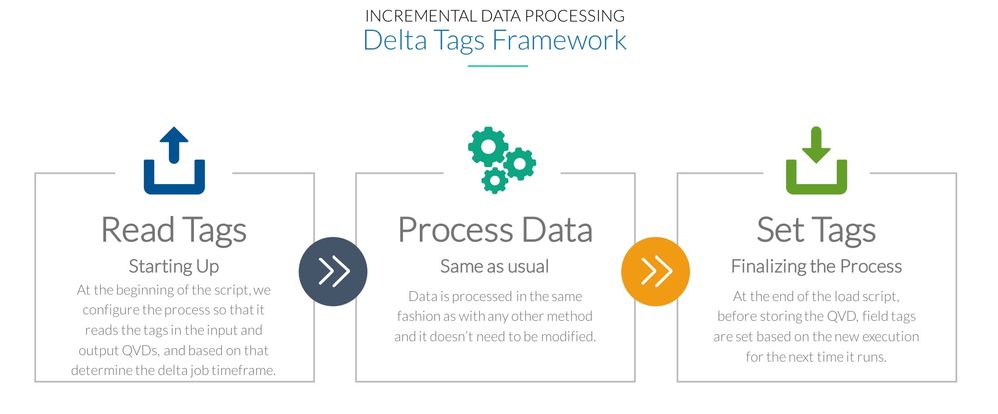

Here is a quick overview of how the process works:

- First, at the beginning of the script, we configure the process so that it reads the tags in the input and output QVDs, and based on that determine the delta job timeframe.

- The second step is to process the data based on the calculated timeframe. This is done similar to any other method and it doesn’t need to be modified.

- Finally, at the end of the load script, before storing the QVD, field tags are set based on the new successful execution, so that they can be used the next time it runs.

As you’ll see in the next example, this framework is very easy to implement, and it only takes a few lines of code at the start and at the end of the Load Script.

Delta Tags Example

I’ll use a very simplified example to demonstrate how to implement Delta Tags. This example consists of a script in the Extract layer that loads data from a database into a single QVD.

We can split the entire process into 6 steps.

Step 1: Include

Since the Delta Tags framework is packaged as an external Qlik script, the first thing we need to do is add it to the load script with an include statement:

$(include=e:\path\to\deltatags.qvs);Step 2: Configure

Next, we add a few lines of code with the configuration of our delta logic, including:

- What are the input QVDs, if any

- What are the output QVDs

- The “Last Check” type, which can be:

- RunTime: used to set the starting point timestamp based on the last time that the script ran successfully. This is primarily used for scripts on the Extract layer.

- Inputs: used to define the starting point based on when the input QVDs where last updated at the time we last ran the script, not on the actual time at which it ran. This is used for scripts on the Transform layer and I will share an example of this in a later blog post.

Additionally, there are optional configuration parameters that can be set at this point. For example, we can specify if we want to manually force a full reload.

In our example, we’ll configure the job as having one single output QVD, named Table.QVD, and we’ll set the field tags on the Id field. Additionally, we want to use the RunTime last check type since it’s an Extract script and there are no input QVDs.

CALL DeltaTags_RegisterQVD('Output', 'Table.QVD', 'Id'); Set vDeltaTags_Config_LastCheckType = 'RunTime'; Set vDeltaTags_Config_FullLoad = 0;

Step 3: Read Tags

Reading existing delta tags from the QVDs is simply done by using a CALL statement to execute a built-in function in the Delta Tags script.

CALL StartUpDeltaTags;

Trace Querying database starting on $(vDeltaTags_Config_StartingPoint); = 0;

As a result of this operation, we will now have two new script variables available for the rest of the script:

- vDeltaTags_Config_StartingPoint This is the calculated “starting point” that defines the delta timeframe. This value takes into account a buffer time that is set globally and can also be overridden locally on each script, and is used to extend the delta timeframe by a predefined time interval in order to account for late-arriving data and protect against data loss. For instance, if we last refreshed the data at 2:30pm, and we have a buffer time of 10 minutes, the script will use 2:20pm as the starting point. This buffer time is completely optional, and can also be set to zero.

- vDeltaTags_Config_FullLoad This is a flag set by the Delta Tags script the indicates whether or not the script will run in full mode, rather than in delta mode. This not only takes into account the value we set before the CALL statement, but also whether or not the QVDs exist on the specified path and whether any tags were found on the QVDs. These last two scenarios would cause a full load since the data needs to be reprocessed.

Step 4: Process Data

Based on the variables output by the Delta Tags script, we can process the data as we normally would. If, for instance, we’re querying a database, we would use the following:

Table: LOAD Id, field1,

field2,

UpdatedTimestamp;

SQL SELECT

Id,

field1,

field2,

UpdatedTimestamp;

FROM Table WHERE UpdatedTimestamp >= '$(vDeltaTags_Config_StartingPoint)';

After this, we would typically concatenate the new data with the historical data from the local QVD:

Concatenate(Table) LOAD *

FROM Table.QVD (qvd)

WHERE Not Exists (Id);

Step 5: Set Tags

Once we have processed the data and before storing it back to disk, we’ll call another Delta Tags function to set the tags and remove the old timestamps.

CALL SetDeltaTags;

Step 6: Store QVD(s)

Finally, we can store the QVDs and the tags will also be updated so we can use them in the next reload.

STORE Table into Table.QVD (qvd);

To verify that the tags are being set correctly, you can load the metadata in the QVDs into QlikView or Qlik Sense and you should see them associated with the specified field (Id, in our example above).

As you can see, using Delta Tags is quite simple to implement, as it only takes a few lines of code at the start and end of the load script, and results in a much more efficient and reliable process when compared with other techniques.

Download and Try It Yourself

You can download the Delta Tags script below and start using it in your own projects. The attached package also includes a script you can use to inspect the QVD file metadata.

VIDEO Example: Incremental Data Extracts with Qlik using Delta Tags and QVD Segmentation

Here is a 14-min video showing how the Delta Tags technique easily replaces another commonly used approach to incremental loads (based on variables), and demonstrates why the Delta tags approach makes the process more reliable while still being highly efficient and configurable.

Learn More

Learn more about this and other techniques at my blog: https://aftersync.com/blog

Also, if you're interested in learning advanced techniques for building optimized data flows in QlikView and Qlik Sense, I just released an online course that covers various important aspects of data processing in Qlik: The Lean Data Processing Master Class. Join here: https://www.leandataprocessing.com/lean-data-processing-masterclass