Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- Forums

- :

- Analytics

- :

- New to Qlik Analytics

- :

- Storing new csv's in existing qvd

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Storing new csv's in existing qvd

hi,

im pulling in multiple csvs into one qvd. however i want the script to only read in new csvs and store it into the existing qvd, i dont want it to reread every csv file every time as it will be quite slow due to large amount of csvs.

im having a few problems,

firstly when it runs it doesn't update the qvd if i put a new csv in a folder,

second, i want to be able to identify a specific string in all the files, but i only know how to do it for each field, is it possible to search the entire file instead of a specific field?

my code:

SUB DoDir(Root)

FOR each File in filelist( Root & '\*.csv')

test:

LOAD *, RowNo() as rows, filename() as filename,

if(wildmatch([Sector 27],'*¡MM')=1, [Sector 27]) as Sector27MM_Fails,

if(wildmatch([Sector 27],'*¡MM*')+wildmatch([Sector 27],'*¡NR*')+

wildmatch([Sector 27],'*¡WL*')+ wildmatch([Sector 27],'*¡BG*')+ wildmatch([Sector 27],'*¡FG*')=1, [Sector 27]) as Sector27AllFails

FROM

[$(File)]

(txt, codepage is 28591, embedded labels, delimiter is ',', header is 1 lines);

STORE test INTO [lib://........completeQvd.qvd] (qvd);

NEXT File

FOR each Dir in Dirlist (Root&'\*')

CALL DoDir(Dir)

NEXT Dir

END SUB

CALL DoDir('[lib://..../LVS')

Accepted Solutions

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You should not need a filelist. The pattern above works for me in testing. I see your error when my filepath is bad. I can't see your filepath -- I understand you've necessarily obfuscated it.

FWIW here's a test script that runs fine on my server.

Sub DoDir(Root)

Data:

BUFFER

LOAD *

FROM

[$(Root)\*.xlsx]

(ooxml, embedded labels, table is Sheet1);

FOR each Dir in Dirlist (Root&'\*')

CALL DoDir(Dir)

NEXT Dir

EndSub

Call DoDir('lib://qlikview (robxps15_rob)/qlikcommunity/MultiExcel')

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm not totally following what you are doing with the wildmatch, so I'll skip that in my example below. You can ditch the subroutine and instead use the "Buffer (incremental)" Load prefix. Buffer incremental with a wildcard filename will load only new files and automatically merge with the old files already loaded.

test:

Buffer (incremental)

LOAD *, RowNo() as rows, filename() as filename,

etc...

FROM [lib://..../LVS/*.csv]

(txt, codepage is 28591, embedded labels, delimiter is ',', header is 1 lines);

STORE test INTO [lib://........completeQvd.qvd] (qvd);

-Rob

http://www.easyqlik.com

http://masterssummit.com

http://qlikviewcookbook.com

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Regarding to your second question it's not possible in the script to detect certain values within a whole table else you need to do it for each field.

This might be done within another loop which runs with field-, table- and field-value-functions over all fields.

Another approach could be to load your files twice - one time normal like you already do and another time by loading the file with a fixed length (it's an option within the table-wizard) which could load the entire record as a single field.

The mentioned loop-logic isn't very complicated and also quite performant but to match and merge these results with your origin load is probably not trivial. Easier in this regard seems the loading twice approach then here you could use recno() as record-key and a counting- and/or subfield/textbetween-logic in which field it happens and both information could be used to match the loadings again.

Beside this it's quite common that such csv-files contain data within a crosstable-structure and your provide fieldname indicates in this direction. Within the most scenarios it's not sensible to remain by it else to transform it per crosstable-statement into a "normal" data-structure. If your data are a crosstable and your mentioned value-check isn't really aimed for each field else only the measure-fields you could transform the data and applying then your check on the remaining single-field for the measures. Even if you need to look in each field you may use a crosstable with an additionally 'dummy' field to get a single-field for the raw-data but then you will need some extra efforts to revert this logic completely/partly which is of course also possible with a generic-statement.

- Marcus

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

thanks @rwunderlich for that.

can the Buffer (incremental) load be used when the csv files are within subfolders? when i tried your suggestion, it works when i direct it to a folder that has csvs but doesn't work when i point it to my parent folder that has sub folders that store the csvs.

that is why i was using the subroutine and for each loop.

any ideas?

thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I don't know if buffer is capable to handle advanced incremental scenarios whereby I never used this feature. Personally I would probably just store which file was already loaded and to check the new ones against them to load or to skip them. This might be done with something like:

LoadedFiles: load 'dummy' as File autogenerate 0;

// LoadedFiles: load File from LoadedFiles.qvd (qvd);

for each file in filelist()

if fieldindex('File', '$(file)') = 0 then

your load-statements

concatenate(LoadedFiles) load '$(File)' as File autogenerate 1;

end if

next

store LoadedFiles into LoadedFiles.qvd (qvd);

By the first run you could comment the qvd-load from LoadedFiles and afterwards the dummy-load - of course you may design this with more efforts - but the logic what is meant should be clear.

- Marcus

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You can use buffer and wildcard load with subdirectories like:

Sub DoDir(Root)

test:

Buffer (incremental)

LOAD *, RowNo() as rows, filename() as filename,

etc...

FROM [$(Root)/*.csv]

(txt, codepage is 28591, embedded labels, delimiter is ',', header is 1 lines);

FOR each Dir in Dirlist (Root&'\*')

CALL DoDir(Dir)

NEXT Dir

EndSub

CALL DoDir('[lib://..../LVS')

STORE test INTO [lib://........completeQvd.qvd] (qvd);

-Rob

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

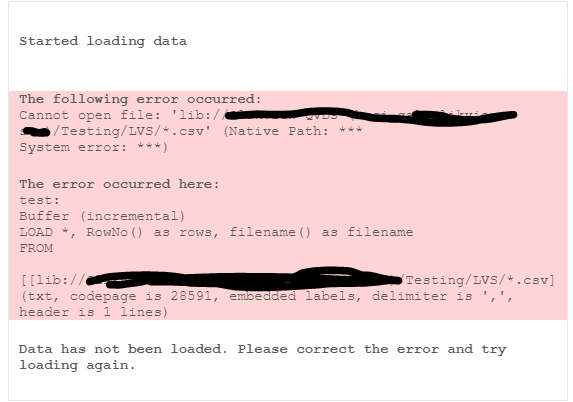

i tried your update, however its still throwing me an error saying it cannot connect to my LVS folder:

my code:

test:

Buffer (incremental)

LOAD *, RowNo() as rows, filename() as filename

FROM

[$(Root)/*.csv]

(txt, codepage is 28591, embedded labels, delimiter is ',', header is 1 lines);

FOR each Dir in Dirlist (Root&'\*')

CALL DoDir(Dir)

NEXT Dir

EndSub

CALL DoDir('[lib://...../Testing/LVS')

STORE test INTO [lib:/...../Testing/LVS\completeQvd.qvd] (qvd);

do i need to put in a for each filelist to grab the files?

ive verified that the filepath is correct but its looking like it is having trouble going through ths sub folders in my LVS folder to grab the csvs?

thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You should not need a filelist. The pattern above works for me in testing. I see your error when my filepath is bad. I can't see your filepath -- I understand you've necessarily obfuscated it.

FWIW here's a test script that runs fine on my server.

Sub DoDir(Root)

Data:

BUFFER

LOAD *

FROM

[$(Root)\*.xlsx]

(ooxml, embedded labels, table is Sheet1);

FOR each Dir in Dirlist (Root&'\*')

CALL DoDir(Dir)

NEXT Dir

EndSub

Call DoDir('lib://qlikview (robxps15_rob)/qlikcommunity/MultiExcel')