Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- All Forums

- :

- QlikView App Dev

- :

- Re: Slow KPI Table using Value List

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Slow KPI Table using Value List

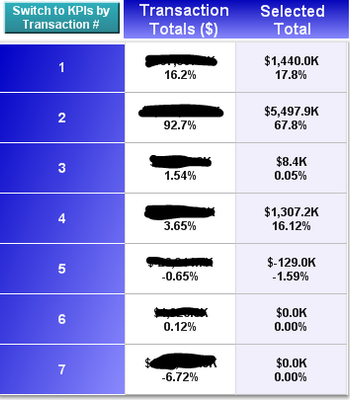

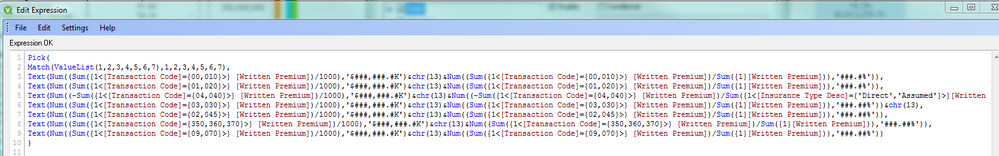

I'm building a table with about 15 KPI measures. These 15 measures are repeated, one column is for totals and one is for selection. It's performing relatively quickly if I store everything in a text box, but inside of the table using ValueList() and Pick(Match()) it is very slow. .. Like 50x longer calc time than the expressions in a single text box.

Is there any way to improve the performance here? It's much easier to keep this in a table rather than break it out into multiple text boxes. Note that in the pictures below I changed my text strings to numbers. It didn't help performance much.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

can you aggregate the KPI in script in a table.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Maybe it' the valuelist() which delayed the calculation. You could replace it with a real (dummy) dimension, for example:

Dummy: load recno() es ExpressionList autogenerate 7;

and then:

pick(ExpressionList, expr1, expr2, ...)

- Marcus

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for the suggestion Marcus. Probably should have considered that myself. It's still slower than the text box, but it's at least 50% faster depending on the selections. Have no clue yet what the performance difference will be working with 4 million rows on my PC vs 50 million on the server.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Because of the fact that your calculations have no dimensional context else they are global and have a more or less redundancy you could transfer all or some calculations to a variable. I think at least by your rate-calculation the part of: sum({1} [Written Premium]) you should notice a benefit if it's calculated only once.

I'm not sure if it's worth to create for all of the parts own variables because beside the efforts to create them they have the disadvantage to be calculated each time if any selection changed in the application and within the table the calculations are only applied if the object is visible.

Another possibility (from the calculation point of view - in regard to the layout it may have disadvantages) could be to split your value- and rate-calculation into separate rows. I assume it would in general perform better and you could avoid the formatting-expressions and apply the formatting in the number-tab - and you could try to use the caching-capabilities from Qlik by accessing the previous calculated value with interrecord-functions like above() or below().

Beside this you could also look witin the datamodel if it could be optimized in some way, for example if it not used a star-scheme.

- Marcus

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

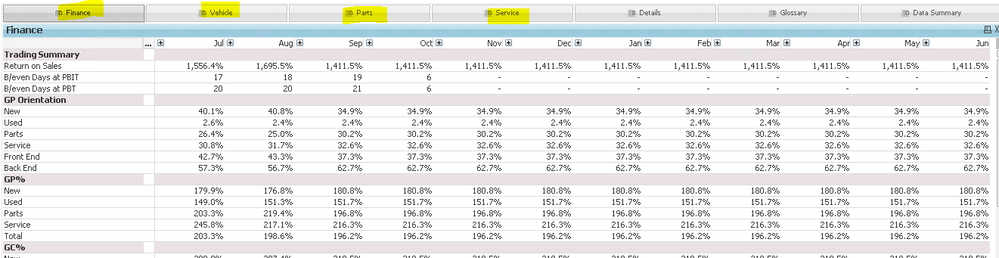

I am in the same situation as above.

My dashboard looks like this:

In each of the tab, there will be another set of KPIs with different set of logics.

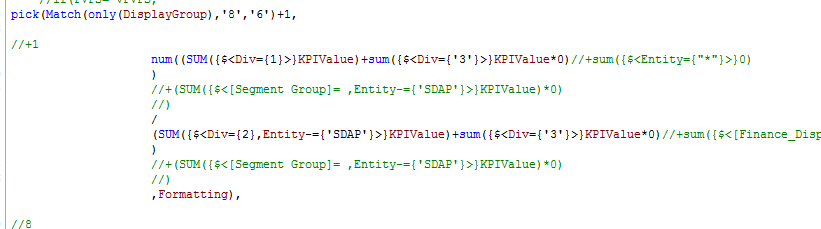

At first i built these KPIs line by line in the expression tab, but i managed to transform these KPIs logics into different sets KPI groupings :

I have 30+ over KPIs in each tabs and managed to transform these into 3-10 KPIs groupings.

This is possible if u layered out all dimensions that is used in set analysis (Transaction Code,Written Premium) into an Excel File.

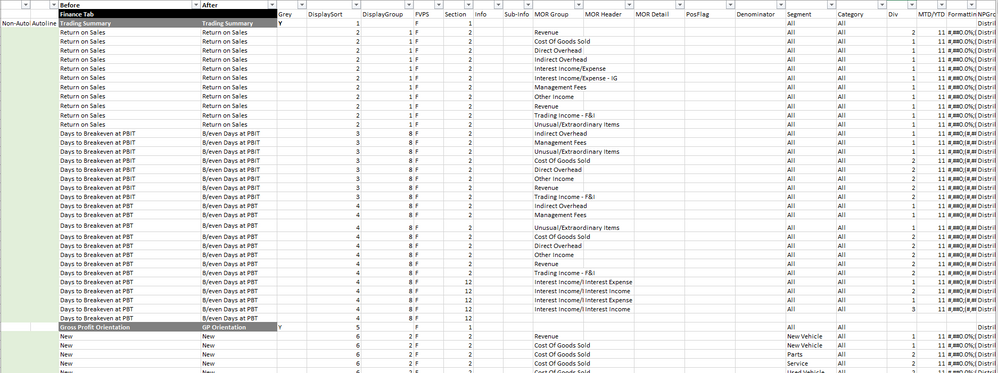

For me, my Excel File looks like this:

Once you have your Master Excel file ready, link it to your Link table or Fact table with all the dimensions listed in the Master Excel file.

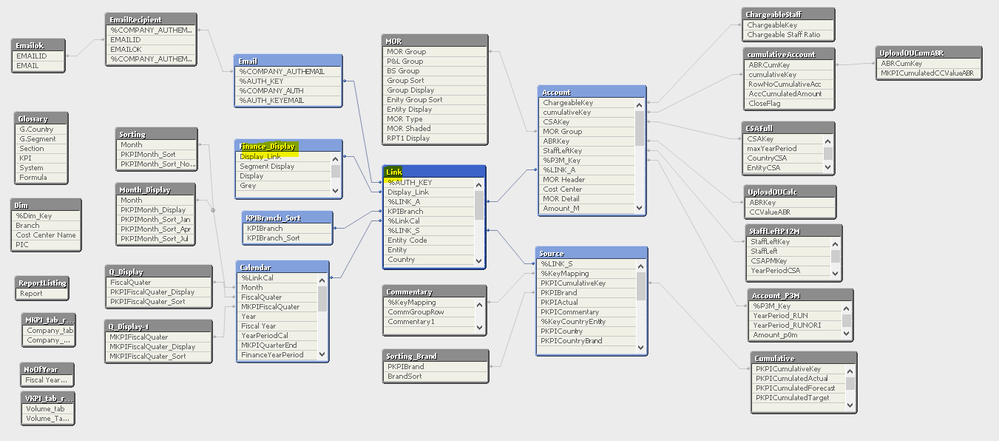

For my scenario, I link it through the Link table:

Sorry my Data Model is a bit messy as I have a lot of KPI logics that is written in script.

This will also help in front end visualization performance as calculating values in script will not consume additional memory space when user is making selections.

Please spend some time on designing the Excel file as i would prefer this way to do KPI reports, rather than relying on set analysis to filter out data for each KPIs.

Thanks and regards,

Arthur Fong