How to troubleshoot Hadoop connectivity issues

Sep 30, 2022 9:38:17 AM

This article walks through the basic steps for troubleshooting Hadoop endpoint connectivity issues in Qlik Replicate.

These steps will focus on investigating two connections:

- HDFS connectivity

- Hive connectivity

The Scenario

We have deliberately introduced two faults: port 50070 is blocked, and the Hive ODBC connection uses a wrong parameter. The troubleshooting steps below show how to identify each of them.

In the Replicate UI, go to Server tab --> Logging --> Server Logging Levels and set the COMMUNICATION and SERVER logging components to Trace for the duration of the troubleshooting.

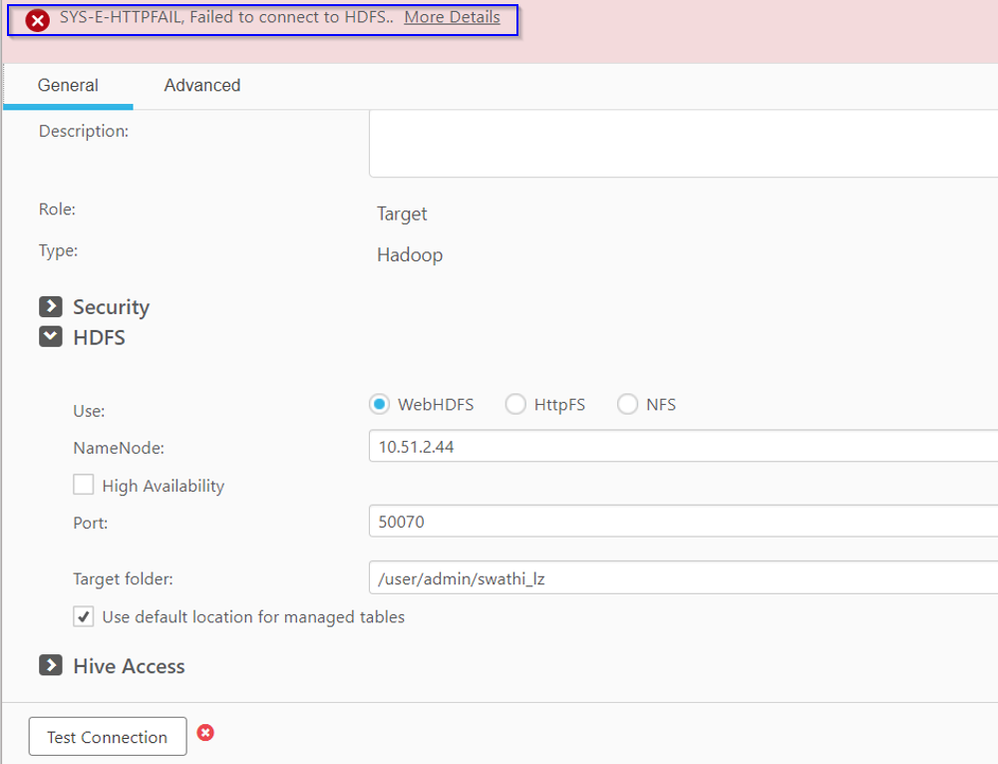

As we begin, we can already see that the connection test fails when we click Test Connection. It fails with SYS-E-HTTPFAIL, Failed to connect to HDFS...

To view the server logs, go to Replicate UI --> Server tab --> Logging --> Server Logging Levels --> View Logs and open the server log. It shows the following connection failure messages:

00011144: 2022-09-25T00:43:40:393877 [COMMUNICATION ]T: requested URL <http://10.51.2.44:50070/webhdfs/v1/?user.name=admin&op=GETFILESTATUS>, action is GET (at_curl_http_client.c:504)

00011144: 2022-09-25T00:43:40:393877 [COMMUNICATION ]T: Clearing (if there is) curl old custom request (at_curl_http_client.c:950)

00011144: 2022-09-25T00:43:40:393877 [COMMUNICATION ]T: calling user pre submit callback (at_curl_http_client.c:1960)

00011144: 2022-09-25T00:43:40:393877 [COMMUNICATION ]T: user pre submit callback returned with status 0 (at_curl_http_client.c:1964)

00011144: 2022-09-25T00:43:40:393877 [COMMUNICATION ]T: preparing response structure; body will be written as dynamic string (at_curl_http_client.c:1428)

00011144: 2022-09-25T00:43:40:395850 [COMMUNICATION ]T: AT_CURL->at_curl_easy_perform: curl_action_status == CURLE_OK failed (at_curl.c:883)

00011144: 2022-09-25T00:43:40:395850 [COMMUNICATION ]T: Failed to curl_easy_perform: curl status = 7, Couldn't connect to server (Failed to connect to 10.51.2.44 port 50070: Bad access) [1000143] (at_curl.c:883)

The error "Couldn't connect to server" in the logs and "Failed to connect" in the UI both indicate a connectivity issue, so we begin investigating there.
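When the server log is long, the failure line can be picked out programmatically. The following is a minimal sketch, not part of Qlik Replicate: the function name `find_curl_failures` and the regular expression are assumptions based only on the log format shown above. In libcurl, status 7 is `CURLE_COULDNT_CONNECT`, which matches the "Couldn't connect to server" text.

```python
import re

# Matches the curl failure line that appears in the Trace-level log above, e.g.
# "... Failed to curl_easy_perform: curl status = 7, Couldn't connect to server
# (Failed to connect to 10.51.2.44 port 50070: Bad access) ..."
CURL_FAILURE = re.compile(
    r"Failed to curl_easy_perform: curl status = (?P<status>\d+), "
    r"(?P<reason>[^(]+?) \(Failed to connect to (?P<host>\S+) port (?P<port>\d+)"
)


def find_curl_failures(log_lines):
    """Return one dict per curl connection failure found in the log lines."""
    failures = []
    for line in log_lines:
        match = CURL_FAILURE.search(line)
        if match:
            failures.append(
                {
                    "status": int(match.group("status")),
                    "reason": match.group("reason"),
                    "host": match.group("host"),
                    "port": int(match.group("port")),
                }
            )
    return failures


# Sample line copied from the log excerpt in this article.
sample = [
    "00011144: 2022-09-25T00:43:40:395850 [COMMUNICATION ]T: Failed to "
    "curl_easy_perform: curl status = 7, Couldn't connect to server "
    "(Failed to connect to 10.51.2.44 port 50070: Bad access) [1000143] "
    "(at_curl.c:883)",
]
print(find_curl_failures(sample))
```

The extracted host and port (10.51.2.44 and 50070 here) are the values to use in the network tests that follow.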

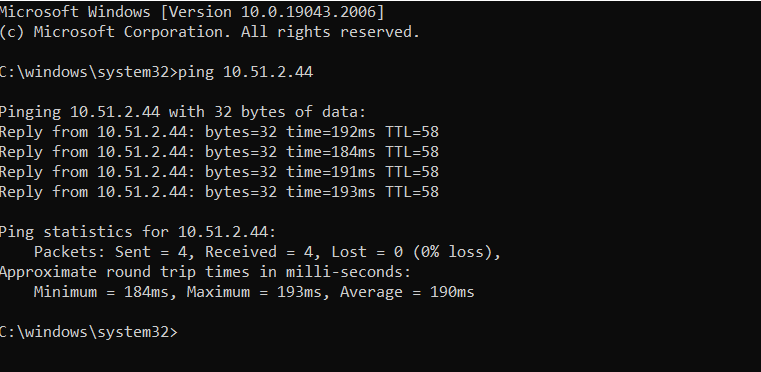

1. Ping Test

This is a quick and common first check for both Hive and HDFS. It verifies only that the destination host is reachable over the network; it does not test any specific port.

In a command line, we execute:

ping 10.51.2.44

Where 10.51.2.44 is the IP address of the destination server.

Result: PASS
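If the ping check needs to be repeated from a script, it can be wrapped as shown below. This is a sketch under stated assumptions: `ping_command` and `host_responds` are hypothetical helper names, the `ping` executable is assumed to be on the PATH, and the exit code is treated as a coarse pass/fail signal only.

```python
import platform
import subprocess


def ping_command(host, count=4, system=None):
    """Build the ping command line for the current (or given) platform."""
    system = system or platform.system()
    # Windows ping uses -n for the echo count; Linux and macOS use -c.
    count_flag = "-n" if system == "Windows" else "-c"
    return ["ping", count_flag, str(count), host]


def host_responds(host, count=4):
    """Run ping and return True if it exits with status 0."""
    completed = subprocess.run(ping_command(host, count))
    return completed.returncode == 0
```

Calling `host_responds("10.51.2.44")` runs the same check as the command above and leaves the ping output visible, so the replies can still be read directly.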

2. Telnet Test

The port differs depending on whether we are testing Hive or HDFS. This test verifies that the specified port is reachable and that a service is listening on it.

In a command line, we execute:

telnet 10.51.2.44 50070

Where 10.51.2.44 is the IP address of the destination server.

Result: FAIL

This indicates that port 50070 may not be available (blocked by a firewall, etc).
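On servers where the telnet client is not installed, the same check can be made with a plain TCP connection attempt. The sketch below uses only the Python standard library; `port_is_open` is a hypothetical helper name and the 5-second timeout is an arbitrary assumption.

```python
import socket


def port_is_open(host, port, timeout=5.0):
    """Return True if a TCP connection to host:port can be established."""
    try:
        # create_connection resolves the host and completes the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

For the scenario in this article, `port_is_open("10.51.2.44", 50070)` corresponds to the HDFS check and `port_is_open("10.51.2.44", 10000)` to the Hive check. A `False` result does not say why the port is unavailable; a firewall block and a stopped service look the same from the client side.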

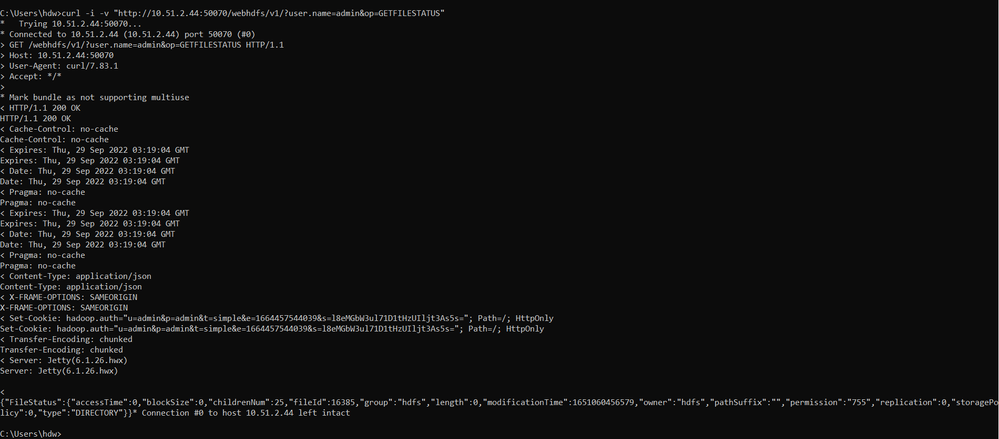

3. CURL Test

This test is only required for HDFS. Depending on how the cluster is secured, CURL may need different authentication options (for example, username/password, Kerberos with a keytab, or Kerberos with AD).

We must use the exact URL found in the Qlik Replicate log file.

In a command line, we execute:

curl -i -v "http://10.51.2.44:50070/webhdfs/v1/user/admin/USER?user.name=admin&op=GETFILESTATUS"

Where 10.51.2.44 is the IP address of the destination server.

Result: FAIL

Since both the CURL and Telnet tests failed, we look into port 50070 and find that it is blocked by a firewall. After the port is opened, repeating the tests shows both Telnet and CURL succeeding.
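The WebHDFS request can also be reproduced without CURL. The sketch below assumes simple authentication through the `user.name` query parameter, as in the logged URL; it does not cover Kerberos. The helper names `webhdfs_status_url` and `check_webhdfs` are hypothetical.

```python
import urllib.error
import urllib.request
from urllib.parse import urlencode


def webhdfs_status_url(host, port, path="/", user="admin"):
    """Build a WebHDFS GETFILESTATUS URL like the one in the Replicate log."""
    query = urlencode({"user.name": user, "op": "GETFILESTATUS"})
    return f"http://{host}:{port}/webhdfs/v1{path}?{query}"


def check_webhdfs(url, timeout=10.0):
    """Return the HTTP status code, or a short error description."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # The server answered, so the port is reachable; the code says why it refused.
        return err.code
    except (urllib.error.URLError, OSError) as err:
        # No HTTP answer at all: blocked port, wrong host, timeout, and so on.
        return f"connection failed: {err}"


# Rebuilds the URL that appears in the server log excerpt.
print(webhdfs_status_url("10.51.2.44", 50070))
```

Passing the printed URL to `check_webhdfs` returns 200 when HDFS answers normally, which mirrors the "http response code is 200" line that Replicate logs on success.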

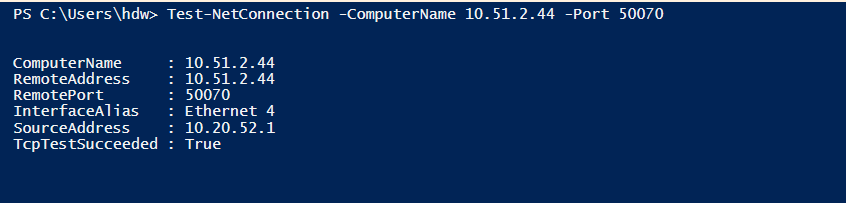

We can also verify the port using PowerShell and the following command:

Test-NetConnection -ComputerName 10.51.2.44 -Port 50070

This is an example of a successful CURL test:

However, when we test the connection in the UI, the connection still fails.

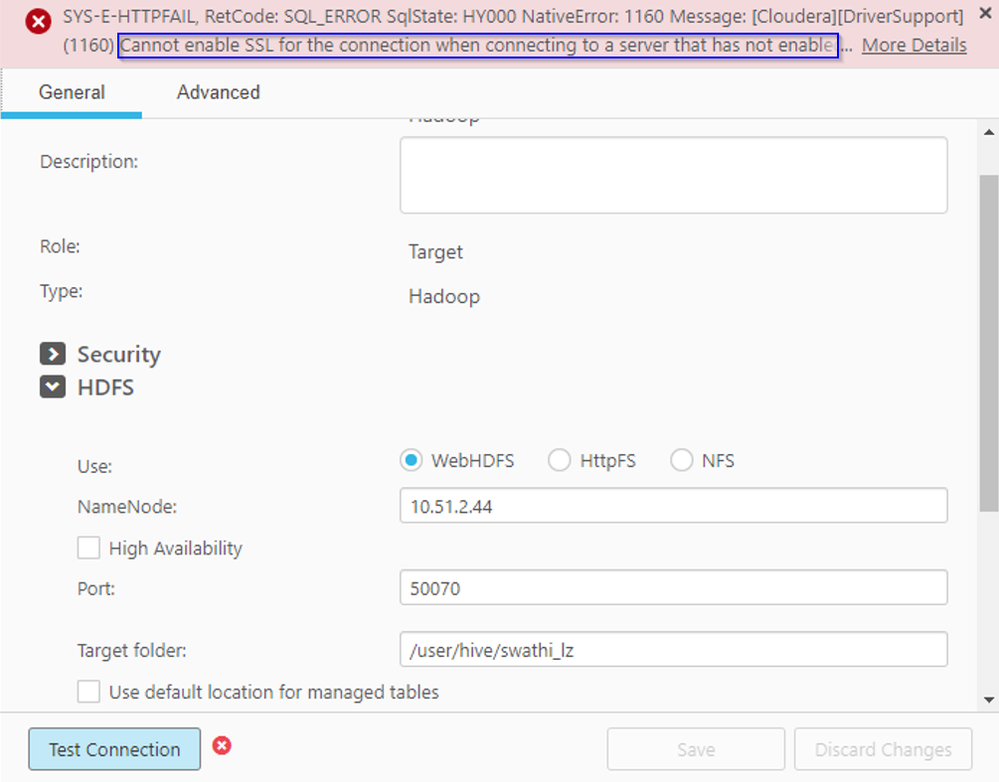

This time it displays a different error:

Cannot enable SSL for the connection when connecting to a server that has not enabled...

To view the server logs, go to Replicate UI --> Server tab --> Logging --> Server Logging Levels --> View Logs and open the server log. It shows the following connection failure messages:

00028952: 2022-09-25T01:02:09:511445 [COMMUNICATION ]T: requested url <http://10.51.2.44:50070/webhdfs/v1/?user.name=admin&op=GETFILESTATUS>, action is GET (at_curl_http_client.c:504)

00028952: 2022-09-25T01:02:09:511445 [COMMUNICATION ]T: Clearing (if there is) curl old custom request (at_curl_http_client.c:950)

00028952: 2022-09-25T01:02:09:511445 [COMMUNICATION ]T: calling user pre submit callback (at_curl_http_client.c:1960)

00028952: 2022-09-25T01:02:09:511445 [COMMUNICATION ]T: user pre submit callback returned with status 0 (at_curl_http_client.c:1964)

00028952: 2022-09-25T01:02:09:511445 [COMMUNICATION ]T: preparing response structure; body will be written as dynamic string (at_curl_http_client.c:1428)

00028952: 2022-09-25T01:02:09:912486 [COMMUNICATION ]T: http response code is 200 (at_curl_http_client.c:1186)

00028952: 2022-09-25T01:02:10:127510 [SERVER ]T: going to connect using connection string <ssl=1;HiveServerType=2;Driver={Cloudera ODBC Driver for Apache Hive};HOST=10.51.2.44;PORT=10000;Schema=hive_lz;AuthMech=3;UID=hive;PWD={****};> (hive_odbc_client.c:374)

00028952: 2022-09-25T01:02:10:546553 [INFRASTRUCTURE ]T: AR_ODBC_DRIVER_FUNC->ar_SQLDriverConnect returned -1 (ar_odbc_func.c:1117)

00028952: 2022-09-25T01:02:10:546553 [SERVER ]T: RetCode: SQL_ERROR SqlState: HY000 NativeError: 1160 Message: [Cloudera][DriverSupport] (1160) Cannot enable SSL for the connection when connecting to a server that has not enabled SSL. If the server has SSL enabled, please check if it has been configured to use a SSL protocol version that is lower than what is allowed for the connection. The minimum SSL protocol version allowed for the connection is TLS 1.2. [1022502] (ar_odbc_conn.c:584)

The logs confirm that the HDFS connection worked this time (HTTP response code 200), but the Hive connection failed.
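The ODBC failure line packs several fields into one message. As a sketch, and assuming the line layout shown in the log above, the fields can be separated like this; `parse_odbc_error` is a hypothetical helper, not a Qlik Replicate function.

```python
import re

# Layout assumed from the log excerpt:
# "RetCode: <code> SqlState: <state> NativeError: <n> Message: <text> [<id>] (<file>:<line>)"
ODBC_ERROR = re.compile(
    r"RetCode: (?P<retcode>\S+)\s+SqlState: (?P<sqlstate>\S+)\s+"
    r"NativeError: (?P<native>\d+)\s+Message: (?P<message>.*?) \[\d+\] \("
)


def parse_odbc_error(line):
    """Return the ODBC error fields from a log line, or None if absent."""
    match = ODBC_ERROR.search(line)
    if not match:
        return None
    return {
        "retcode": match.group("retcode"),
        "sqlstate": match.group("sqlstate"),
        "native_error": int(match.group("native")),
        "message": match.group("message"),
    }


# Sample line copied from the log excerpt in this article.
sample_line = (
    "00028952: 2022-09-25T01:02:10:546553 [SERVER ]T: RetCode: SQL_ERROR "
    "SqlState: HY000 NativeError: 1160 Message: [Cloudera][DriverSupport] "
    "(1160) Cannot enable SSL for the connection when connecting to a server "
    "that has not enabled SSL. If the server has SSL enabled, please check if "
    "it has been configured to use a SSL protocol version that is lower than "
    "what is allowed for the connection. The minimum SSL protocol version "
    "allowed for the connection is TLS 1.2. [1022502] (ar_odbc_conn.c:584)"
)
error = parse_odbc_error(sample_line)
print(error["sqlstate"], error["native_error"])
print(error["message"])
```

Here the message text itself points at the SSL setting, which is what the remaining steps narrow down.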

We verify the Hive connection following the same method as before.

1. Ping Test

In a command line, we execute:

ping 10.51.2.44

Where 10.51.2.44 is the IP address of the destination server.

Result: PASS

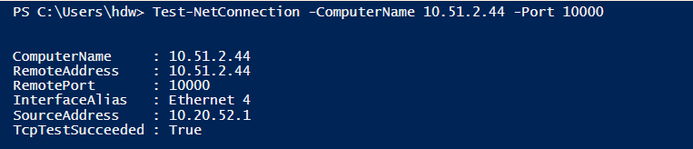

2. Port Test

This time we use PowerShell, but you can use telnet as well.

We will use port 10000.

In PowerShell, we execute:

Test-NetConnection -ComputerName 10.51.2.44 -Port 10000

Where 10.51.2.44 is the IP address of the destination server.

Result: PASS

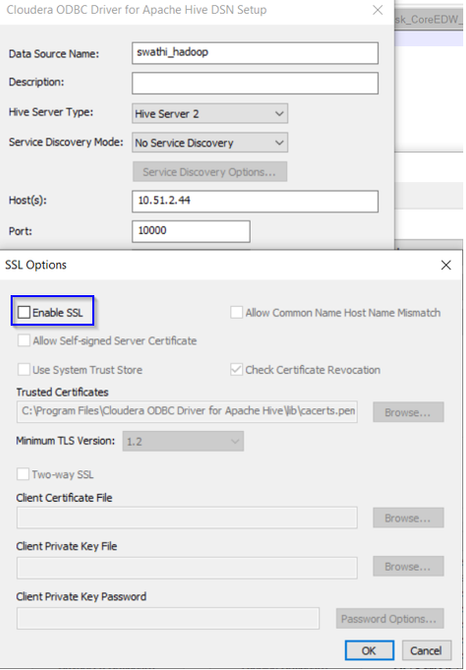

3. ODBC Test

We will test the ODBC connection from the Qlik Replicate server:

Result: PASS

4. Review the Hive connection string

Locate the Hive connection string in the Qlik Replicate log and compare it with the DSN setup.

ssl=1;HiveServerType=2;Driver={Cloudera ODBC Driver for Apache Hive};HOST=10.51.2.44;PORT=10000;Schema=hive_lz;AuthMech=3;UID=hive;PWD={****};

The log indicates that we are using SSL.

However, when we review the Hive DSN setup, we can see that SSL is unchecked:

Result: Everything matches except ssl=1.
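Comparing the logged connection string with the DSN by eye is error-prone when there are many parameters. The sketch below splits the string into key/value pairs and lists the differences. The helper names are hypothetical, the DSN values are assumed to have been copied by hand from the DSN setup dialog (with `ssl` set to `0` standing in for the unchecked SSL box), and the simple split on `;` assumes no value contains a semicolon.

```python
def parse_connection_string(conn_str):
    """Split an ODBC-style 'key=value;' string into a dict with lower-case keys."""
    settings = {}
    for part in conn_str.split(";"):
        part = part.strip()
        if not part:
            continue
        key, _, value = part.partition("=")
        settings[key.strip().lower()] = value.strip()
    return settings


def diff_settings(from_log, from_dsn):
    """Return {key: (log value, DSN value)} for every key whose values differ."""
    keys = sorted(set(from_log) | set(from_dsn))
    return {
        key: (from_log.get(key), from_dsn.get(key))
        for key in keys
        if from_log.get(key) != from_dsn.get(key)
    }


# Connection string as it appears in the server log excerpt.
log_settings = parse_connection_string(
    "ssl=1;HiveServerType=2;Driver={Cloudera ODBC Driver for Apache Hive};"
    "HOST=10.51.2.44;PORT=10000;Schema=hive_lz;AuthMech=3;UID=hive;PWD={****};"
)
# Assumption: the DSN matches the log except that SSL is unchecked.
dsn_settings = dict(log_settings, ssl="0")

print(diff_settings(log_settings, dsn_settings))
```

Keys are lower-cased before comparison because the logged string mixes cases (`ssl`, `HOST`, `Schema`).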

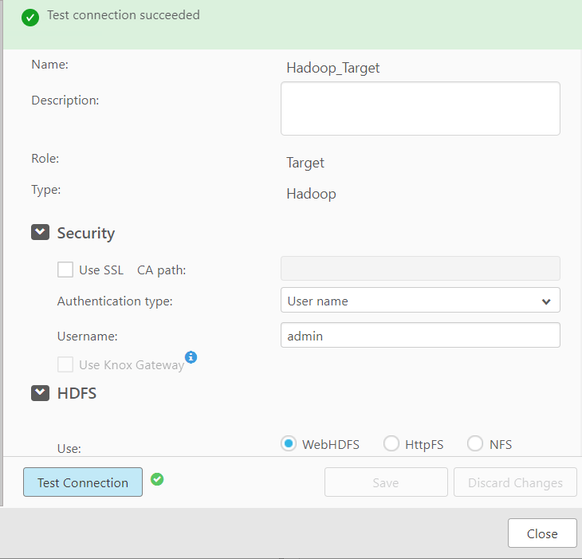

To fix this, we review the endpoint settings in Qlik Replicate, remove the internal parameter ssl=1, and run Test Connection again.

But what if we receive different error messages?

Driver issues are a common cause of Hive connection failures. If you find a different error in the UI or the log files, research it using either Google or our knowledge base here in the Community, as a fix may already exist.

Environment:

Qlik Replicate

Hadoop Target