Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- Forums

- :

- Data Integration

- :

- Qlik

- :

- Qlik Cloud Data Integration

- :

- Snowflake storage assets & QVD

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Snowflake storage assets & QVD

I have seen that you can use Snowflake as a landing zone and also as a source to create data storage assets.

Currently we follow the 3-tier approach:

- Extract app generating Extract QVD's as 1-1 copy from source

- Transform app generating Transform QVD that transforms (mapping, joining, aggregation, renaming) data from extract qvd's

- Final App using the Transform qvd's.

With the use of Storage data assets, could we:

- skip step 1 (extract),

- perform step 2 & store on Snowflake

- Use the creates tables/views directly in the final app

Would as a result, data storage assets via Snowflake partly replace the need for QVD's?

Is the only benefit of QVD's than mainly for optimized load?

Thanks already for the feedback!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

GREAT QUESTION.

Layer 1 QVD's that are just raw data from your source are perfect to utilize for INCREMENTAL loads. Meaning if you have millions/billions of records, once you've pulled them all you need to do is pull the changes which will save you a TON of money and time. You can utilize the standard incremental load strategy that has been around for a long while, or you take advantage of the Qlik MERGE function. If you have time travel turned on for your Snowflake tenant you can follow the advice in my post about it. https://dataonthe.rocks/merging-with-time-travel/

If you use, or will soon be using Qlik Cloud Data Integration then you can take advantage of the Change Data Capture tables which store the changes. https://community.qlik.com/t5/Official-Support-Articles/Merging-the-Hard-to-Imagine-with-Simplicity/...

Layer 2 QVD's as you indicate typically do just what you've indicated, but once joined/mapped/denormalized/concatenating field keys/create DUAL datatype fields etc, can't work with an incremental strategy. Those things are ncessary and while you can certainly fully qualify field names as you desire them to be, there are things like the DUAL datatype that you simply can't mimic. That doesn't mean that you can try and model your tables as close as possible to what is needed so you do minimal work in your Layer 2 transformations.

Final applications should do everything that they can to load the QVD's in an OPTIMIZED format with very few additional changes. I can tell you from experience lately building out our next generation of SAP Accelerator applicators that I absolutely follow my own advice.

In my case the layering adds an extra benefit in that our partner engineering team needs to go through the process for Microsoft, Snowflake, AWS, Google Big Query and Databricks. By isolating the raw data from the transformations and from the visual application I insulate the impact of field changes any one vendor might require. The extract always pulls raw data. The application load always pulls known Qlik Sense field model names. Thus, my only changes are limited to the transform layer and those are very minimal.

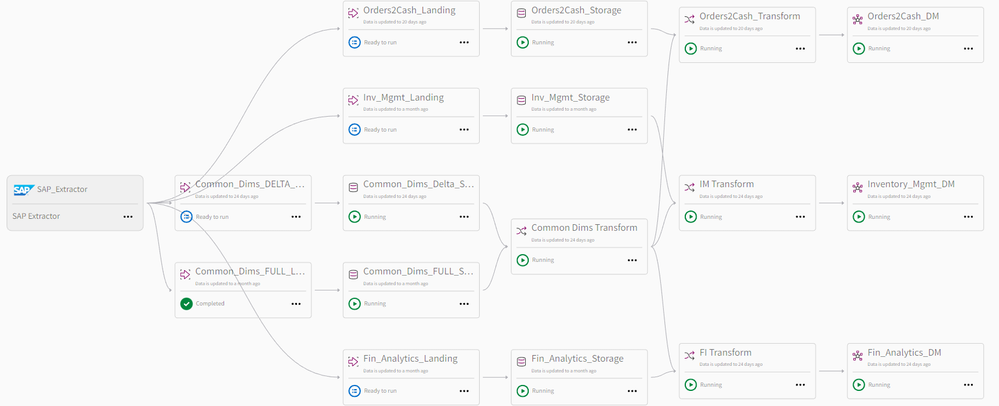

The following is the Qlik Cloud Data Integration flow that pulls data all the way from SAP for Orders to Cash, Inventory Management and Financial Analytics. We have definitely done all we can within the flow to ensure that the tables in Snowflake are as clean as possible so we only need to do the essential Qlik things like concatening keys so fact tables from different marts can be associated inside of Qlik Sense. If you know you want to transform data inside of Snowflake this is definitely the way to go and all work is pushed down to Snowflake. As such, you can even take advantage of Snowpark by simply selecting from your user defined functions.