Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- Forums

- :

- Data Integration

- :

- Qlik

- :

- Qlik Compose for Data Warehouses

- :

- Re: Continuing an ETL set ignoring the errored Ent...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Continuing an ETL set ignoring the errored Entity

Is there any way to continue the load for rest of the entities in an ETL set even if one of them fails for like cases like Primary key violation or even for a deadlocked query by just using a single ETL set and not using multiple ETL set in an parallel workflow?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Not sure I exactly understand the use case but in workflows you can create a path for behavior on task errors. See https://help.qlik.com/en-US/compose/May2021/Content/ComposeDWDL/Main/DW/Tasks%20and%20Workflows/cont...

Does that help?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I wouldn't need to have another task.

Consider the example below.

I have two entities one is the orders table and another product_code table. Order table have relation to product_code table using cd_name column. The product_code base table has the key as it primary key. Due to an issue in the application, there were duplicate cd_name inserted with different keys. But in compose dwh the primary key is cd_name and handle duplicate was not selected. Now due to this duplicates, primary key violation has occured and the whole etl set got errored out but i want compose to ignore that entity and complete the load for orders table. Is there a way to do so?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Well yes of course 😂.

But why I asked this is, when the whole ETL breaks I have to restart from scratch. Loading every entity again. If just was only errored and rest was fine, alot of time should be saved.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @aj96 , Currently there is no way to have an ETL Task / ETL Set ignore a PK error / violation.

When Compose processes data into the central DW model, it is ensuring there is integrity in the data and that all entities can be inner joined (from left to right as you view the default model display). If you review the code steps, you'll see that all TSTG tables are loaded first and PK violations confirmed / checked as part of the initial process. So while you may need to reload staging tables, the majority of the ETL has not executed when the PK violations are checked.

Having said that. If this occurs frequently with a few specific tables there are ways to configure Compose to handle these issues more "gracefully". For ANY DW / ETL process (IMO) its always best to handle these duplicate key issues in the ETL processes instead of ignoring them.

Option 1 would be to configure "Handle Duplicates". The benefit of this is there is nothing to code and Compose will simply handle duplicate records, selecting 1 of the duplicate key values for you. The downside is of course that Compose is picking 1 record "at random" .

Option 2 would be to create a validation rule to REJECT and REPORT duplicate keys. This would ensure no duplicates enter the DW, and that the values are recorded to an ERRORMART where you can investigate the issues and potentially determine why you are getting duplicates and take corrective action on the Landing area.

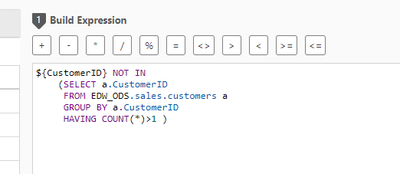

Here is an example rule to check for Duplicate CustomerID values. <Note the rule describes GOOD data - hence the NOT IN 🙂 )

Option 3 is to use a Single-Table ETL for the specific TSTG table where you would remove 1 of the "DUPLICATE" rows with some custom code. The benefit here is you take direct control over the duplicates. However this does require writing some additional SQL code as part of the ETL process.

My personal preference is Option 2 - as de-duplication is not performed "blindly" and it also provides me with a log of the issue so I can determine if this is systemic or every once in a while.

Of course this all means you need to know where you are going to have PK issues and code for that. Typically you have a good understanding of this by testing and knowing your source data.

Hope this helps.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks @TimGarrod for the detailed solution :).

I more thinking like if it doesn't come through staging then it should insert the missing references. And you are right I should probably identify these issue and add data quality. The reason why I asked this, the pk issue was an application error and should be fixed but it cost us 7hrs of load time as all those data needed to be processed again. If Compose handled this, it would have saved us 7hrs of load time😊