Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- Forums

- :

- Data Integration

- :

- Qlik

- :

- Qlik Replicate

- :

- Latency increasing at snowflake target end

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Latency increasing at snowflake target end

Hi Team,

Latency is increasing at target endpoint, the changes are applying slowly. By design the task was set as full load + cdc . The source table doesn't have primary key. But even then cdc is set for it. Previously it ran well, but from past 2 days , its showing latency of 79 hours at target end.

Without primary key, task is working in transaction apply mode(one by one) hence executing update and inserts query in about half a second individually.

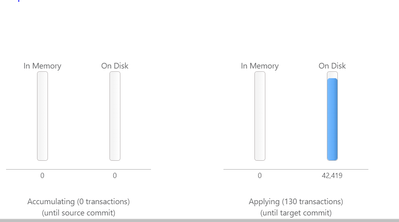

Changes are sitting on disk and not getting committed at the target side.

Tuning

Task : Full load + cdc, Source doesn't have primary key.

Source : Oracle

Target : Snowflake

Please suggest what are the possible solution for this . Please let me know if any other information is needed.

Thanks,

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @suvbin ,

Thanks for reaching out!

While working with Snowflake target endpoint please take note the limitaion:

• The Transactional apply Change Processing mode is not supported

Even if the task running well (no error no warning) however the performance is bad , and it may cause cost as well.

I'd like to suggest you open support ticket (even PS team involved) to solve the problem completely.

Hope this helps.

Regards,

John.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

As @john_wang indicated you are trying something which is not supported even though it may appear to work for small tests. Tell your customer it isn't going to work. What is the expected incoming change rate? If it is 1/sec or more it will 'never' catch up. They either need to add an (artificial) PK or move (isolate) that table and all like it to a full-load task.

>> inserts query in about half a second individually.

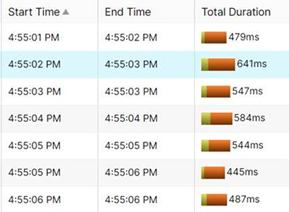

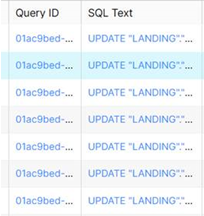

HOW do you know? TARGET_APPLY VERBOSE log for a few minutes? In my experience a singleton snowflake update can take 2 seconds. Show some timestamped insert lines?

fwiw, Hein.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Note: From yesterday onwards latency has been reduced for this task . But it may increase at anytime , if incoming changes are more and more over ... there is no pk for this. And there is no possibility of adding PK for this (as per client).

Target is: Snowflake on AWS

Changed the size from Large to Medium

When noticed before 2 days there is high latency and changes were around 1 million and then decreased to 20 thousand . Even then the latency didn't reduce, it was keep on increasing.

>> inserts query in about half a second individually.

checked in the snowflake end.

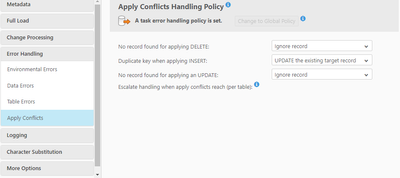

and also one more option is enabled

One of the option i have seen in some blog is

-->Change into “Store Changes” from “Apply Changes”, where it can keep insert the records instead of update. In snowflake write a process to update the record.

Could you please brief what is the advantage of store changes for this scenario . and also how can we write a process to update the record at snowflake end.

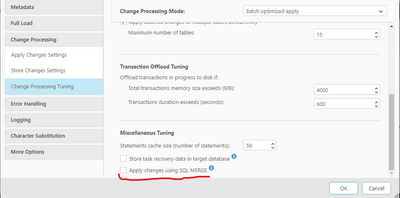

And also will "Apply Changes using SQL Merge" helps in this situation? Please suggest.

Thanks.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @suvbin ,

Could you please brief what is the advantage of store changes for this scenario . and also how can we write a process to update the record at snowflake end.

Store Changes are much faster than Apply Changes as it's appended straightly in the Store Changes Tables.

Qlik Compose is the best product to merge the Store Changes to target tables.

And also will "Apply Changes using SQL Merge" helps in this situation?

This option improves target apply performance because of :

- It reduces the number of SQL statements run per table from three to one.

- The target database only needs to scan the Replicate net changes table once, significantly reducing I/O

More details please check Apply changes using SQL MERGE.

Regards,

John.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@suvbin "checked in the snowflake end. "

Good work. You may also want to check as the replicate sending side by enabling TARGET_APPLY VERBOSE for a minute or two. Now look at the interval between individual requests being send to get the overall round-trip timing

Actually, judging by the Snowflake query start times I expect just under 1 second per update with most falling in their own second shown and once 2 in the same second. More than the 1/2 second indicated, but less than 2 seconds I have seen at times .

A separate task with Store-changes for this table as @john_wang suggest could be an interesting workaround. Changes will come in as a bulk and your DBA's (or Compose) can figure out how to apply them to be base table(s).

Hein

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

So is the latency at snowflake end is common without the primary key ?

or in general ( i mean the behaviour of snowflake) the latency part at snowflake is normal ?

Because i see the latency is again increasing. and the task is stuck for long time. the changes are not getting applied.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Am confused .. 🙂

Because i see the target latency is again increasing. and the task is stuck for long time. the changes are not getting applied.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

>> So is the latency at snowflake end is common without the primary key ? or in general ( i mean the behaviour of snowflake) the latency part at snowflake is normal ?

It is more that ANY query to Snowflake will take a second. Whether it impacts a single row, thousand rows or 100,000 rows. You may only see it taking significantly longer (end-to-end) when hitting millions. The snowflake internal execution time may increase earlier, but the target row count for the query is often a minor component.

You may want to drill deeper into the Snowflake query and find a full table-scan 'all the time'.

OLTP databases like Oracle, SQLserver can do thousands of singleton changes/second.

DW databases like Snowflake can do millions of changes in a second as long as they come in a single query. They prime the engine for a bulk all the time - only to be disappointed when there are only a few or a singleton change to be made.

The issue with the lack of PK is that Replicate in the current design has no choice other then executing single-ton updates. Now your DBA/Data Analyst likely understands the data better than Replicate does and they may well now how to do a bulk update taking seconds for all rows instead of hours doing one at a time. For that the __CT table may be easiest.

Maybe you can TRY to declare a fake PK on the target and deal with potential multi-row updates/deletes. Snowflake does not really do PK's anyway - not like an Oracle or SQLserver.

>> Because i see the latency is again increasing. and the task is stuck for long time. the changes are not getting applied.

Set TARGET APPLY logging to VERBOSE for a few minutes - Is it really not performing any or is it going so slow that the reporting of the changes is not happening yet. Of course you can (and should ) verify this on the Snowflake side also - are there queries from Replicate when you filter by its connectionID?

Hein.