group-by over 200 million rows

Hi everybody,

I have a problem with the reload time of one of my apps I created.

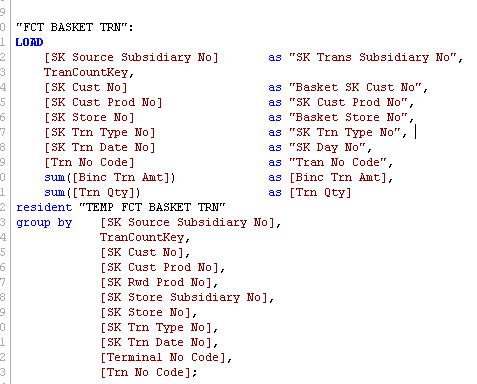

it looks as follow:

the table consist of just over 200 million rows, and the reload time takes around 4 hours to complete.

Is there any way of reducing the reload time of this? If so, please let me know ![]()

Thanks

Stefan

Accepted Solutions

Hi Stefan,

Since you're aggregating by transaction day, I'm not sure whether you have 200 million transactions per day or in total. If that's the total table, you could surely cut the reload time by using incremental loading.

That is, instead of aggregating everything every day, you store the aggregated data in a QVD, load only the new records each day, aggregate them, and replace the aggregated QVD.

The incremental load scenario will of course depend on whether you're only adding records or whether existing transactions are also being modified or deleted, in which case you'd need to re-aggregate the affected days as well. This can all be scripted, though, and if you search for "Incremental Load Scenarios" on the community you'll find a good baseline PDF with examples.
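As a rough, language-neutral sketch of the incremental idea (written in Python rather than QlikView script): the `(day, amt, qty)` row layout, the sample values, and all function names below are assumptions for illustration, not taken from Stefan's script. The stored dictionary stands in for the aggregated QVD.

```python
from collections import defaultdict


def aggregate_by_day(rows):
    """Sum amt and qty per day, i.e. Sum(Amt), Sum(Qty) grouped by day."""
    totals = defaultdict(lambda: [0, 0])
    for day, amt, qty in rows:
        totals[day][0] += amt
        totals[day][1] += qty
    return {day: (amt, qty) for day, (amt, qty) in totals.items()}


def incremental_update(stored, new_rows):
    """Append-only case: aggregate only the new rows and merge them
    into the previously stored aggregate."""
    merged = dict(stored)
    for day, (amt, qty) in aggregate_by_day(new_rows).items():
        old_amt, old_qty = merged.get(day, (0, 0))
        merged[day] = (old_amt + amt, old_qty + qty)
    return merged


def reaggregate_days(stored, all_rows, changed_days):
    """Modification/deletion case: recompute only the affected days
    from the current rows and keep every other day as stored."""
    merged = {day: v for day, v in stored.items() if day not in changed_days}
    merged.update(aggregate_by_day(r for r in all_rows if r[0] in changed_days))
    return merged


# Hypothetical data: yesterday's stored aggregate plus today's new rows.
history = [("2014-01-01", 10, 1), ("2014-01-01", 5, 2), ("2014-01-02", 7, 1)]
new_rows = [("2014-01-02", 3, 1), ("2014-01-03", 4, 4)]

stored = aggregate_by_day(history)              # done once, then saved
updated = incremental_update(stored, new_rows)  # daily run touches new rows only
```

The point of the design is that the daily run only scans the new records, so its cost depends on the daily volume rather than on the full 200 million rows. The merged result should match a full re-aggregation as long as the append-only assumption holds.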

Hi Stefan,

Did you try storing your "TEMP FCT BASKET TRN" table into a QVD, and then doing the Sum and Group By from the QVD source?

Increasing hardware capacity is surely an option. Apart from that, I would try reloading from a QVD rather than using a resident load. If your hardware is insufficient, a resident load may suffer a bit.

Is there any specific reason for doing the Group By in the script?

Hi,

Instead of doing a Group By, why not use Sum(Amt) and Sum(Qty) directly wherever you need them? If the reload is taking too long, try it without the Group By and handle the aggregation with Sum() in the front end.

Hope this helps you.

Regards,

jagan.

@Johannes - Excellent suggestion. This is a good example of where incremental loading can help with large-scale applications.