Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- All Forums

- :

- QlikView App Dev

- :

- Re: Left Join Multiple Files TXT

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Left Join Multiple Files TXT

Hello, I would like to request help from you.

I have several TXT files and would like to open a table by downloading all the columns and once they have been replicated in a new column where the key is the name of the file. I need to get the due date for each file.

If I do a left join, it works, but it will have two tables and I want to consolidate all the content into just one table. When I use only LOAD, it brings information only from the first file it finds.

Can someone help me?

[PRELOAD1]: LOAD FileBaseName() as PK_FileBaseName, [A], [B], [C] FROM [lib://AMIL/DM_*.txt] (txt, codepage is 28591, embedded labels, delimiter is '#', msq, header is 5 lines) ; ///////////////////////////////////////////////////////////////////////////////////////////// [PRELOAD2]: LOAD DISTINCT FileBaseName() as PK_FileBaseName, date_pay, date_shipping FROM [lib://AMIL/DM_*.txt] (txt, codepage is 28591, no labels, comment is TESTE, delimiter is '#', no quotes, header is 2 lines, no eof) ;

I tried to use something described below in the PRELOAD2 table, but the result allowed the same.

vPath = 'lib://AMIL/';

For each File in FileList('$(vPath)/DM*.txt')

Left join

[PRELOAD2]:

LOAD DISTINCT

FileBaseName() as PK_FileBaseName,

date_pay,

date_shipping

FROM $(File)

(txt, codepage is 28591, no labels, comment is TESTE, delimiter is '#', no quotes, header is 2 lines, no eof)

Where WildMatch([@3],'Emissão*')

;

Next File;

PK_FileBaseName | A | B | C | date_pay | date_shipping

1

2

3

4

5

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

*reply test*

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello edugallowk ,

A couple issues here:

1st issue:

Syntax of the load statements. It looks like you're reading from the same series of files in both the first & second statement. This implies a blending of record sets of the two load statements together into 1 file series (this would be unusual, but possible, with logic in the load script "untangling" the two subsets).

Another problem stems from the format of the second load statement attempting to load by named fields, in conjunction with the 'no labels' keyword.

In a "no labels" setting, your load statement would look more like this:

LOAD @1 AS PK_FileBaseName,

@2 AS date_pay,

@3 AS date_shipping

FROM

[DM_*.txt]

(txt, codepage is 1252, no labels, delimiter is '#', msq, header is 5 lines);

2nd Issue - sequencing

When you iterate through the second load statement, via 'For each File in FileList('$(vPath)/DM*.txt')'

The first file found is performing a Left Join on a single key field 'PK_FileBaseName'.

On the second pass, both tables now contain the fields PK_FileBaseName, date_pay, date_shipping and join mechanics have converted to a 3 field compound key.

You need to concatenate all the collected files from the second table, before finally perform the Left Join a single time.

[PRELOAD1]:

LOAD

FileBaseName() as PK_FileBaseName,

[A],

[B],

[C]

FROM [DM_*.txt]

(txt, codepage is 28591, embedded labels, delimiter is '#', msq, header is 5 lines)

;

[PRELOAD2]:

LOAD DISTINCT

FileBaseName() as PK_FileBaseName,

date_pay,

date_shipping

FROM [DM_*.txt]

(txt, codepage is 28591, embedded labels, delimiter is '#', msq, header is 5 lines)

//(txt, codepage is 28591, no labels, comment is TESTE, delimiter is '#', no quotes, header is 2 lines, no eof)

;

LEFT JOIN(PRELOAD1)

LOAD * RESIDENT PRELOAD2;

DROP TABLE PRELOAD2;

contents of Data file DM_01.txt :

I

yam

a

5 line

header

A#B#C#date_pay#date_shipping

Ayyyy#Beeee#Ceeee#Friday#$5

contents of Data file DM_02.txt:

I

yam

a

5 line

header

A#B#C#date_pay#date_shipping

Deee#Eeee#eFFFF#Tuesday#$4

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello! I'm going to test the tips now and soon return with the result.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello, thank you very much for the directions. I still find it difficult to merge the tables.

I need to merge the [PreLoad1] information with that of [Preload2] into a single table [BILLING.]

Even using the method you suggested, I still encounter the same problems of joining and bringing all of their respective data regarding the dates of Preload2.

It loads only the data of the first file that is found, ignoring the other files. Any other tips? Because they are multiple txt files, do not they have to do a Loop? If you have any other suggestions, I thank you.

PreLoad1:

LOAD

[Código],

[Beneficiário],

[Matrícula],

[CPF],

[Plano],

[Tipo],

[Idade],

if(IsNull([Dependência]) or [Dependência]='','Titular',[Dependência]) as [Dependência],

Date([Data Limite] ) AS [Data Limite],

[Data Inclusão],

Date([Data Exclusão] ) AS [Data Exclusão],

[Lotacao],

[Rubrica],

if(WildMatch(Rubrica,'Mens.*'),'1',

if(WildMatch(Rubrica,'Co-participação*'),'2',

if(WildMatch(Rubrica,'Cobrança*'),'3',

if(WildMatch(Rubrica,'Devolução*'),'4','Outros')))

) as [Rubrica_Custom],

[Co-Participacao],

[Outros],

[Mensalidade],

[Total Família],

/*Informações Adicionais*/

SubField(FileDir(),'/',3) as Operadora_Grupo,

SubField(FileBaseName(),'_',2) &REPEAT('0', 15 - LEN(SubField(FileBaseName(),'_',2))) as Subfatura_Custom,

Date(Date#('01' &'/' &SubField(FileBaseName(),'_',4) &'/' &SubField(FileBaseName(),'_',3),'DD/MM/YYYY')) as Competencia_Vencimento,

/*Informações do Arquivo*/

FileDir() as FileDir,

FileExtension() as FileExtension,

FileName() as FileName,

FilePath() as FilePath,

FileSize() as FileSize,

FileTime() as FileTime,

FileBaseName() as PK_FileBaseName

FROM [lib://AMIL/DM_*.txt]

//FROM $(File)

(txt, codepage is 28591, embedded labels, delimiter is '#', msq, header is 5 lines)

Where WildMatch([Rubrica],'Mens.*')

;I need to join the script below:

DatePayMap:

Mapping

Load

FileBaseName() as PK_FileBaseName,

date(SubField([@4],' ',-1)) AS VENCIMENTO

FROM [lib://AMIL/DM_*.txt]

(txt, codepage is 28591, no labels, comment is TESTE, delimiter is '#', no quotes, header is 2 lines, no eof)

Where WildMatch([@3],'Emissão*')

;

DateShippingMap:

Mapping

Load

FileBaseName() as PK_FileBaseName,

date(SubField([@3],' ',-1)) AS EMISSAO

FROM [lib://AMIL/DM_*.txt]

(txt, codepage is 28591, no labels, comment is TESTE, delimiter is '#', no quotes, header is 2 lines, no eof)

Where WildMatch([@3],'Emissão*')

;

PreLoad2:

Load

FileBaseName() as PK_FileBaseName,

applymap('DatePayMap',FileBaseName(),null()) as Date_Pay,

applymap('DateShippingMap',FileBaseName(),null()) as Date_Shipping

FROM [lib://AMIL/DM_*.txt]

(txt, codepage is 28591, no labels, comment is TESTE, delimiter is '#', no quotes, header is 2 lines, no eof)

Where WildMatch([@3],'Emissão*')

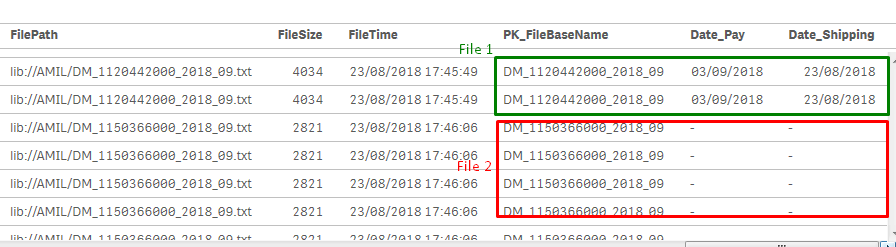

;Problem:

In file 1 it brings the data to the junction, when changing to file 2, 3, 4 and too much information is not filled in the junction. 😕

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello Edugallowk,

The wildcard load of a series of text files appears to be working fine. This is demonstrated by the fact values in the field PK_FileBaseName show multiple file names.

There's no requirement these tables be unified down to a single table to test the MAPPING fields.

Try deactivating the MAPPING keyword in DatePayMap & DateShippingMap, and let both of these two-column tables reside as leaf tables in the data model, associating to table [Preload1] on the key PK_FileBaseName.

Exit the script before [Preload2]. Then examine the table box that is supposed to report 'Date_Pay' & 'Date_Shipping'.

If you don't see values in that form, then the problem may not be the syntax of your load statement, but the alignment between the two different passes at files FROM [lib://AMIL/DM_*.txt].

The transactional rows with a 5 row header Where WildMatch([Rubrica],'Mens.*') may not be lining up with date tagging rows using 2 row header Where WildMatch([@3],'Emissão*'). (are the important dates for each file notated in header lines 3-5? you may not have to make a full pass at loading the entire file to pluck these out, reduce the row band with where RecNo() < 6 or something like that)

Temporarily commenting out the //MAPPING keyword will let you examine the health of the mapping tables you're attempting to join into the fact tables.

In a structural sense, not sure why you can't just do the two mapping tables first, APPLYMAP to [Preload1] and then skip [Preload2] in its entirety.

1. DatePayMap

2. DateShippingMap

3. Preload1

(Preload 2 is unnecessary)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @edugallowk,

Yes as @evan_kurowski suggests, In my example i provided, i am doing the mapping loads first then applying them to the first load.

Does that not work as it seemed to work in my example.

No need to do the 2nd load.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Try this..

DatePayMap:

Mapping

LOAD

FileBaseName() as PK_FileBaseName,

date(SubField([@3],' ',-1)) AS EMISSAO

FROM [lib://AMIL/DM_*.txt]

(txt, codepage is 28591, no labels, comment is TESTE, delimiter is '#', no quotes, header is 2 lines, no eof)

Where WildMatch([@3],'Emissão*') ;

DateShippingMap:

Mapping

LOAD

FileBaseName() as PK_FileBaseName,

date(SubField([@4],' ',-1)) AS VENCIMENTO

FROM [lib://AMIL/DM_*.txt]

(txt, codepage is 28591, no labels, comment is TESTE, delimiter is '#', no quotes, header is 2 lines, no eof)

Where WildMatch([@3],'Emissão*') ;

PreLoad1:

LOAD

[Código],

[Beneficiário],

[Matrícula],

[CPF],

[Plano],

[Tipo],

[Idade],

if(IsNull([Dependência]) or [Dependência]='','Titular',[Dependência]) as [Dependência],

Date([Data Limite] ) AS [Data Limite],

[Data Inclusão],

Date([Data Exclusão] ) AS [Data Exclusão],

[Lotacao],

[Rubrica],

if(WildMatch(Rubrica,'Mens.*'),'1',

if(WildMatch(Rubrica,'Co-participação*'),'2',

if(WildMatch(Rubrica,'Cobrança*'),'3',

if(WildMatch(Rubrica,'Devolução*'),'4','Outros')))

) as [Rubrica_Custom],

[Co-Participacao],

[Outros],

[Mensalidade],

[Total Família],

/*Informações Adicionais*/

SubField(FileDir(),'/',3) as Operadora_Grupo,

SubField(FileBaseName(),'_',2) &REPEAT('0', 15 - LEN(SubField(FileBaseName(),'_',2))) as Subfatura_Custom,

Date(Date#('01' &'/' &SubField(FileBaseName(),'_',4) &'/' &SubField(FileBaseName(),'_',3),'DD/MM/YYYY')) as Competencia_Vencimento,

/*Informações do Arquivo*/

FileDir() as FileDir,

FileExtension() as FileExtension,

FileName() as FileName,

FilePath() as FilePath,

FileSize() as FileSize,

FileTime() as FileTime,

FileBaseName() as PK_FileBaseName,

applymap('DatePayMap',FileBaseName(),null()) as Date_Pay,

applymap('DateShippingMap',FileBaseName(),null()) as Date_Shipping

FROM [lib://AMIL/DM_*.txt]

(txt, codepage is 28591, embedded labels, delimiter is '#', msq, header is 5 lines)

Where WildMatch([Rubrica],'Mens.*');

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ok, I'm a little irritated with the forum here.

First I haven't seen any of Hopkinsc's posts on this thread until a few moments ago, and they all showed up AFTER I posted the solution (or two solutions, as the first problem was addressed, then a new problem presented, and a second solution proposed).

But from my perspective this appeared as time-sequencing, as if Hopkinsc got to go back in time and insert his responses into the thread mid-stream, earlier in the chronological timeline.

If this kind of "time-travel" happens again, might be an inducement to curtail community usage. (not trying to blame you Hopkins, but this is how the site revealed sequencing to me).

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am finding this site a little irritating since it has been updated. sometimes not being refreshed, sometimes very very slow.

I assure you that i have not got a time machine and i am actually posting my comments correctly and at the times specified 🙂

I have had commented 5 times and @edugallowk had responded to them before you posted your first message of 'reply test'. So it does look like an issue with the site.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@hopkinsc wrote:I am finding this site a little irritating since it has been updated. sometimes not being refreshed, sometimes very very slow.

I assure you that i have not got a time machine and i am actually posting my comments correctly and at the times specified 🙂

I have had commented 5 times and @edugallowk had responded to them before you posted your first message of 'reply test'. So it does look like an issue with the site.

Yeah, none of those responses displayed on my browser-session, as far as I was aware I was first responder.

I've seen retroactive insertion on social media sites, but credit stealing was an issue with the old Community site. You could have posters marking wrong answers, skipping over multiple responses that were applicable, or just getting their query satisfied to leave the thread unanswered. 'Community' implies a bit of social responsibility, hope the new site attempts to cure any corruptions of the predecessor.