Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- All Forums

- :

- QlikView App Dev

- :

- Has this ever happened to you... trynna loop an ...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Has this ever happened to you... trynna loop an input load?

I'm a huge fan of the placeholder table and forced concatenation by explicit naming. I can speak a little to the evolution of this technique, as I'm a big iterator, and enjoy iteration, even in its most gratuitous forms.

Like many Qlik techniques it starts with the glint of inspiration, devolves into tears, but eventually emerges victorious through perseverance.

So if you're out there trying to collect different inputs in bulk and sequence your load through a series of input files, this post is for you… It may make sense if you've been through a similar history, and personally it has performed as solid defensive programming for myself.

Naturally the first thing you'll want to try is a wildcard load of fields against a wildcard set of files.

//Whee!! I'm autoconcatenatin’ the whole stock market!

LOAD *

FROM [\HISTORICAL STOCK DATA\SECURITIES_HISTORY_*.QVD](qvd);

And everything is going to work great!

Until... your upstream partner in DB land, who provides your flat file series, but doesn't have that shiny 'Certified Qlik Something or Other' certificate like you do, watches you cruising along without a care in the world, crushing 100's of millions of rows, and mutters "you thought life was gonna be that easy??!".

And then the divergence begins. Drizzling in to your source tables. "What's going on?!" March 2017 has an extra field in it called [GDATE_FLAG], files for Japan & LatAm are missing the field [RD_Code], the entire Q4 data set switched field names from [Division_Cd] to [DIVISION_CD]!!!

Suddenly it dawns on you; wildcard load is now being held hostage by the forces of cooperation.

You decide to put an end to that nonsense and think "I'll identify a finite list of required fields, and get them rock-solid named in requirements! Heh heh!"

//Better not try to mess with THIS rock-solid table structure! Field names are DOCUMENTED!

LOAD Symbol,

Date,

Open,

High,

Low,

Close,

Volume,

[Adj Close]

FROM [\HISTORICAL STOCK DATA\SECURITIES_HISTORY_*.QVD](qvd);

Are you still attempting wildcard looping? Not so fast!! "Wth! Who changed the file naming convention for the 2016 files from YYYYMMDD to MM_DD_YY?! And btw, you dropped a file into the source repository that had a completely new spreadsheet tab name, and wound up busting the load again."

Stick with a set of solid requirements though, because eventually divergence disruptions will reach that ambiguous point where they make the perceived jump from "accidental" to "careless, and possibly subversive".

But mayhem doesn't give up that easily. "You know what I need?" (says Mayhem) "I now need some piece of reasoning or request that causes a preceding load." Proud to show off your Qlik know-how, you slap on that preceding statement and say "See! Qlik can do it!!"

[DA_STOCK_MARKIT]:

LOAD *,

'Nope' As Nope;

LOAD Symbol,

Date,

Open,

High,

Low,

Close,

Volume,

[Adj Close]

FROM [\HISTORICAL STOCK DATA\SECURITIES_HISTORY_QN*.QVD](qvd);

Uh-oh.

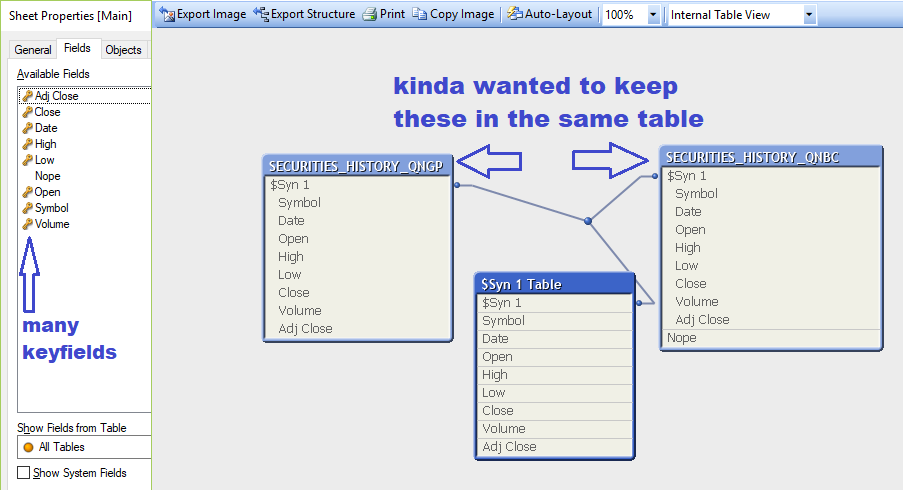

If you had happened to be working with very large tables with lots of fields and high data volumes, and didn't apply tiny row limits to test, the first time you come out of a broken auto-concatenation that results in a full-table synthetic key, let me describe the symptoms:

Your RAM is melting. It is screaming under the mind-boggling strain of forming 'Cartesian of All the Things'. The RAM fills up like the Titanic, and the machine if you're working on a desktop with limited RAM sizing will become sluggish and then unresponsive. A server with a larger headroom, maybe it will last a little longer. Then those data permutations reach virtual memory and you have to wait.. longer than Gandalf waits as he emerges from his battle with the Balrog (“Balrog”? Just "Balrog"? Or "THE Balrog"?).

You might at the end of that eternity, get responsiveness back. Whatever you do, do NOT begin making selections in the user interface once it unfreezes! Reduce data, close out! Close out!!

**extra-credit if you realized what was happening in time to save the RAM, and force-closed Qlik via task-manager, but then realized you didn't have 'Save Before Reload' set.. and lost a bunch of script changes from the hard-close**

If you're smart, you go into your script and make sure that doesn't happen again. If you're me.. you on your 5th bomb out and are as baffled as the first, muttering "I don't get it.. all it was, was a harmless little hardcode field. What's the harm?"

And that's, my fellowes of the Q, how we arrived at explicitly forced named concatenation.

Now I know what you're thinking "I don't care WHAT is in those tables, they are getting smashed together!" If your process gets fed a listing of elements, an extract from IMDB, and a table scraped from Wikipedia, it don't matter. They're all goin in the same table!

[SQUASH_IT_TOGETHER]:

LOAD '' AS PLACEHOLDER AUTOGENERATE(0);

FOR EACH vRandoFile IN FileList('TOTALLY_RANDOM_FIELD_LISTS\*.QVD')

CONCATENATE(SQUASH_IT_TOGETHER)

LOAD * FROM

[$(vRandoFile)] (qvd);

NEXT

(Mayhem) “Muauahahaa!! I’m not out of tricks! Let's blend up the file extensions!”

[SQUASH_DIFFERENT_FILE_TYPES_TOGETHER]:

LOAD '' AS PLACEHOLDER AUTOGENERATE(0);

FOR EACH vRandoFile IN FileList('TOTALLY_RANDOM_DATA_FILES\*.*')

Let vExtension = Left(Upper(Subfield('$(vRandoFile)','.',-1)),3); //hopefully you're not naming data files 'Hows_bout.txt.them.csv.apples'

SWITCH '$(vExtension)'

CASE 'QVD'

Set vFormatString = (qvd);

CASE 'CSV'

Set vFormatString = (txt, codepage is 1252, no labels, delimiter is ',', msq);

CASE 'XLS'

Call GetExcelTypeSheetandFieldSequence('$(vRandoFile)')

For each $(vSheet) in $(vSheets)

CONCATENATE(SQUASH_IT_TOGETHER)

LOAD *

FROM [$(vRandoFile)]

($(vFormatString), table is [$(vSheet)]);

Next //excel sheet

CASE 'TXT','PIP','QVO','TAB'

Call TryToLearnTheDelimiterByReadingSmallSample('$(vRandoFile)')

Call AttemptAFieldListingFromGleanedDelimiter('$(vDelimiter)')

Call TestForFileEncodingJustToBeSure('$(vRandoFile)')

CONCATENATE(SQUASH_IT_TOGETHER)

LOAD $(vFlatFileFileList)

FROM [$(vRandoFile)]

$(vFormatString);

DEFAULT

CONCATENATE(SQUASH_IT_TOGETHER)

LOAD [@1:n] AS JUST_THROW_IT_ON_THE_HEAP

FROM [$(vRandoFile)] (fix, utf8, no labels);

END SWITCH

NEXT

“At this point, you have done your due diligence. I free you from further obligations... your project is cancelled. Sincerely, Wernstrom Mayhem”

** And lastly.. for extra-credit: why NOCONCATENATE the humble 0 row placeholder table?

Because they will VANISH (if you're not paying close attention)

If you’re lucky you will discover this just after the script phase where it completed a long load of transactions in tens of millions, nee hundreds of millions of rows, attempted all in one gulp. The script gallantly cavorts onward to the [Accounts] table. CONCATENATE [Accounts]..... bzzzzzt!

[Trans]:

Load '' as ID autogenerate(0);

//I will just make my innocent placeholder table use the same key field as my Trans table, they are associated so that should be just fine.

[Accounts]:

Load '' as ID autogenerate(0);

/* script, script, script...

...

...

...

many script tabs later

*/

//Hey, time to sequence through the Account files

CONCATENATE(Accounts)

...

//Nope.

- Tags:

- qlikview_scripting

Accepted Solutions

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It's quite refreshing ![]() I suggest you put it into a document in the resource library.

I suggest you put it into a document in the resource library.

- Marcus

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It's quite refreshing ![]() I suggest you put it into a document in the resource library.

I suggest you put it into a document in the resource library.

- Marcus

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Good idea Marcus. Done! J'ever try ta loop a QlikView input load... and have this happen??