Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- All Forums

- :

- QlikView App Dev

- :

- Re: Keep only rows/operations with the latest tran...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

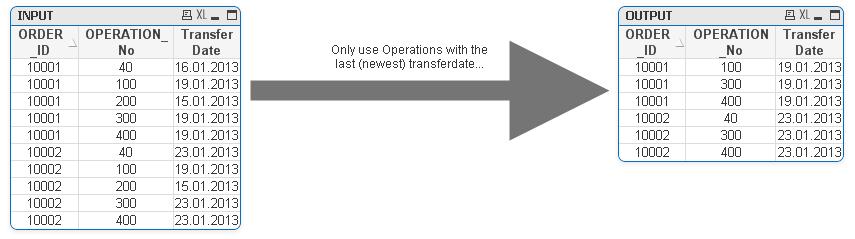

Keep only rows/operations with the latest transfer date (per order)

Hi,

I want to share my solution for this problem and ask the more experienced developers if there is a possibility to solve this more elegant. I think my solution is not the best one. But it is a solution. ![]() I just couldn't test it against a larger dataset, but this will happen shortly.

I just couldn't test it against a larger dataset, but this will happen shortly.

Situation:

Datawarehouse stores our Orders. Every ORDER can have multiple OPERATIONS. So the combination of ORDER_ID and Operation_No is unique. Every Operation has a field called "Last transfer date". If an operation is deleted (for several reasons) from the operational system it stays in the DataWarehouse with the transfer date at this point. All other operations get a new transfer date every time the orders/operations changes. The transfer happens on a daily basis.

Problem:

If I use the original table I get too much operations including deleted ones and I end up with wrong results. So I have to clean it up in my app like the picture shows.

I achieve this (at the moment) by creating a mapping table with ORDER_ID and max(Transferdate) for every order. Than I load only the orders from the original table WHERE the maxdate from the mapping table = transfer date. Please take a look at the attached files...

I found this tricky because the "GROUP BY" clause needs all fields to have an aggregation expression or to be included in the "group by" statement. This makes it impossible (for me) to get the maxdate for the Orders, since the operation_id has no aggregation expression and can't be included in the group by statement. I try to avoid joining...

My solution looks a bit awkward for me and I appreciate every suggestions for improvements. I think another way would be to flag the relevant operations, but it complicates the expressions in the designer...

Best Regards,

Peter

- « Previous Replies

-

- 1

- 2

- Next Replies »

Accepted Solutions

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

As per you source data nature the new data just append to QVD and it is Source.QVD.

Here I am creating intermediate QVD to avoid the full scan of Source qvd.

LET vNow= number(now()) ;

IF Transform.qvd exists // Loading new data from source after last reload + transfrom qvd

Transform:

LOAD *, OrderID&'-'OperationNO AS PK FROM Source.qvd Where Datefiled >= $(vLastReload );

Concatenate (Transform)

LOAD * from Transform.qvd where not exists (PK,OrderID&'-'&OperationNo);

Inner Join (Transform)

Load OrderID, Max(DateField) Resident Transform group by OrderID;

ELSE //Full scan on Source.qvd

Transform:

LOAD * FROM Source.qvd;

Inner Join (Transform)

LOAD OrderID, max(Datefiled) AS Datefield

From Source.qvd Group by OrderID ;

STORE Transform into Transform.qvd (qvd);

Let vLastReload = $(vNow);

You can tweak this idea with your example.

Please post same qvd data if you have any questions so any one from community will help better

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Peter,

There is a shorter way, but it is usable if you don't need to keep the original "INPUT" table:

INPUT:

LOAD * INLINE [

ORDER_ID, OPERATION_No, TransferDate

10001, 200, 15.01.2013

10002, 100, 19.01.2013

10001, 300, 19.01.2013

10001, 40, 16.01.2013

10001, 400, 19.01.2013

10002, 200, 15.01.2013

10002, 300, 23.01.2013

10001, 100, 19.01.2013

10002, 40, 23.01.2013

10002, 400, 23.01.2013];

INNER JOIN (INPUT) LOAD DISTINCT

ORDER_ID,

max(TransferDate) AS TransferDate

RESIDENT INPUT

GROUP BY ORDER_ID;

Regards,

Michael

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I would use the solution you have now. Find the max transfer date for each order ID, then pull records with the matching date. There are other ways to do it, but I think that's the cleanest and easiest to maintain.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Michael,

thank you for your contribution. I didn't thought of inner join... ![]()

I think a shorter way (in lines of code) than yours is not possible. ![]() At the moment there is no need to keep the original table, so it would be possible from that view. But I'm always cautious when it comes to joins, because my structure is "a bit" more complex than this test example. I will take a look which solution performs better.

At the moment there is no need to keep the original table, so it would be possible from that view. But I'm always cautious when it comes to joins, because my structure is "a bit" more complex than this test example. I will take a look which solution performs better.

In your case I have to load the complete table first and then apply the inner join. In my case I load the (small) mapping table first and then the necessary data via Where clause.(I don't have the table already loaded like in this example). I haven't the experience to tell which way is more efficient(speed / ram usage).The table is not that big at the moment it has about 20 cols and 200k rows. I hope I can measure a difference between the 2 solutions.

Anyway, its very helpful, I always asked myself in the past when(/where) I would need the inner join.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

OK. At least you have an example of inner join now ![]()

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

you can Implement the incremental loading. This will improve your performance while loading.

What is your data source ? it is QVD or DB ?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

my Datasource is QVD. I looked at incremental loading, but didn't get it how it could me with this problem. 😕

Could you please explain a bit how it could be helpful my case?

Regards Peter

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

As per you source data nature the new data just append to QVD and it is Source.QVD.

Here I am creating intermediate QVD to avoid the full scan of Source qvd.

LET vNow= number(now()) ;

IF Transform.qvd exists // Loading new data from source after last reload + transfrom qvd

Transform:

LOAD *, OrderID&'-'OperationNO AS PK FROM Source.qvd Where Datefiled >= $(vLastReload );

Concatenate (Transform)

LOAD * from Transform.qvd where not exists (PK,OrderID&'-'&OperationNo);

Inner Join (Transform)

Load OrderID, Max(DateField) Resident Transform group by OrderID;

ELSE //Full scan on Source.qvd

Transform:

LOAD * FROM Source.qvd;

Inner Join (Transform)

LOAD OrderID, max(Datefiled) AS Datefield

From Source.qvd Group by OrderID ;

STORE Transform into Transform.qvd (qvd);

Let vLastReload = $(vNow);

You can tweak this idea with your example.

Please post same qvd data if you have any questions so any one from community will help better

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi dathu,

thank you for your reply and the script. Its much more than I expected. ![]()

If I understand this script right, its more an addition to the original problem. The cleanup itself happens with the inner join like Michael suggested?

But it could be relevant if the data is growing. And it looks easier as I thought it would be..

I think instead of now(), I have to use today(). I don't have a timestamp in the source, just a date like "19.01.2013". Or is it possible to use something like if 19.01.2001 >= now() than ....

Best Regards,

Peter

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Genrally, It depends one how often you refresh the data and I was converted timestamp into number because date or timestamp stored number format in QVD.

If you are reloading once a day then use Num(today()).

I just gave basic idea and just implement your scenario.

- « Previous Replies

-

- 1

- 2

- Next Replies »