Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- All Forums

- :

- QlikView App Dev

- :

- List of Tables with Row and Null Count created in ...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

List of Tables with Row and Null Count created in script

Hi All,

We are trying to validate data density in a data warehouse project development but stuck at a point. I know there is a facility for this but we would like to get a bit more flexible and detailed solution then hover over the mouse on fields in the table viewer.

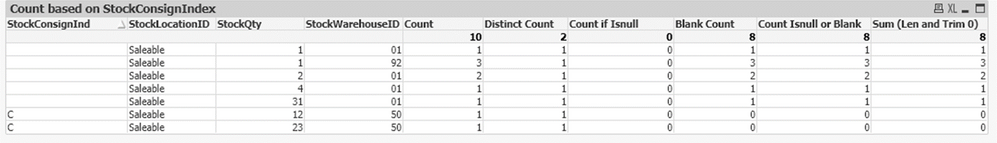

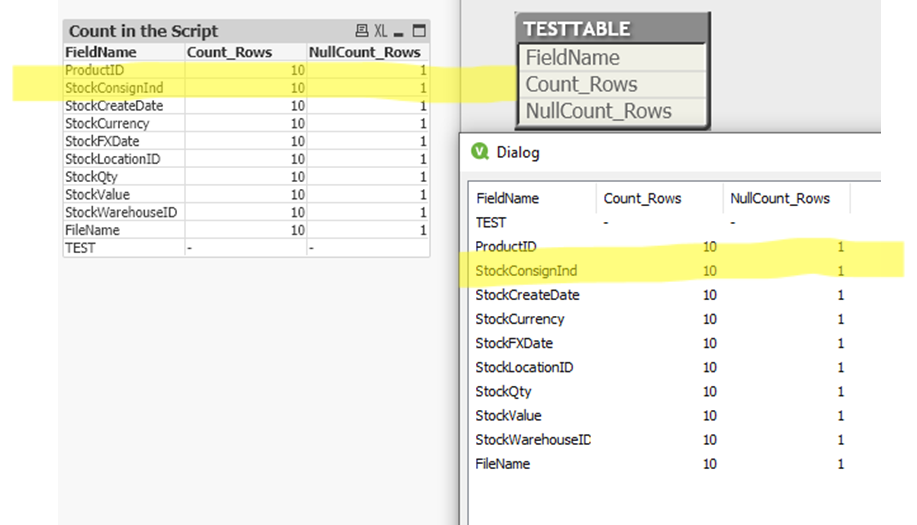

The problem is that when trying to complete the "Null" count within the script I cannot get QlikView to count the fields' value instead of the fields' name. Here is the first picture from the desired outcome but it bases on expressions on the source table. However, when using the "same" expression in the script I get different results. Also there is the outcome from list-table build and the script which build the table.

// *************** Pre-Loading all text in folder *************** //

FOR EACH vFileName in Filelist ('$(vUseinRBConfigPath)\Azure\*.txt')

LOAD *,

'$(vFileName)' as FileName

FROM [$(vFileName)]

(txt, utf8, embedded labels, delimiter is '|', msq);

NEXT vFileName

// ************** NULL COUNT STARTS ************************* //

TESTTABLE: //*** Testable pre-load for concatenation ***

LOAD * Inline [

FieldName, TEST

TEST,TEST,

];

//FOR tableIdx = NoOfTables()-1 to 0 step -1 //*** Loop through dynamic table names

//LET vTable = TableName($(tableIdx)); ***

LET vTable = 'Stock20200122040018';

FOR fieldIdx = 1 to NoOfFields('$(vTable)')

LET vNullCount = 0;

Concatenate (TESTTABLE)

LOAD

FieldName($(fieldIdx),'$(vTable)') as FieldName,

Count(FieldName($(fieldIdx),'$(vTable)')) as Count_Rows,

If(Len(Trim(FieldValue($(fieldIdx),'$(vTable)')))=0, $(vNullCount)+1, $(vNullCount)) as NullCount_Rows//$(fieldIdx)

//LOAD [ProductID"|"StockConsignInd"|"StockCreateDate"|"StockCurrency"|"StockFXDate"|"StockLocationID"|"StockQty"|"StockValue"|"StockWarehouseID]

Resident Stock20200122040018

GROUP BY FieldName($(fieldIdx),'$(vTable)')

;

NEXT fieldIdx

//NEXT tableIdx

Drop Field TEST;

Set exceptList="'TESTTABLE'";//*** Dropping all the table in the app except the created list-table

call dropTablesExcept(exceptList);// ***

Exit SCRIPT;

// ************** NULL COUNT FINISHES ************************* //

We would like to make this as dynamic as possible and we wouldn't like to keep all the source tables. It can be quite various amount of data to validate so we trying to keep the solution low size as possible. That is why we drop all the tables with a sub procedure apart from the created list-table called TESTTABLE.

Probably we missing some evident logic or function so if anyone has got an idea what is it or how to solve this, please drop a comment below. Thanks in advance.

//not sure the snapshots will be visible or not in the post but they don't in the preview still there in the editor. 😞

- Tags:

- qlikview_scripting

Accepted Solutions

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I would do it a bit differently by including the NULL counting (and maybe further data-validation) within the for each loop of the files. This means an approach like:

for each vfile in filelist()

t: load * from vfile;

for i = 1 to nooffields(t)

f: load vfile as File, noofrows(t) as Rows, fieldname(i, t) as Field, noofrows(t) - fieldvaluecount(fieldname(i, t)) as Null

autogenerate 1;

drop tables t;

next

next

store f into final.qvd (qvd);

It's just a bit simplified without a working syntax - only to show the logic. Depending on your data/requirements and your sources you may need an additional NULL handling - means mainly the use of NULL variables and/or a NULL mapping to ensure that all blank/empty/missing values are handled as NULL and to avoid that each field needs to be checked/transformed with something like: if(len(trim(Field)),Field, null()).

It's not only simpler - it has also the big advantage that you don't need resident-loads which runs through the data-tables else you could use the various field/table-functions and run through the symbol-tables. From a performance point of view this is a big difference - especially if there are further measures intended like checking the quality of the data which could be done within a load like:

c:

load len(F), isnum(F), ... ;

load fieldvalue(fieldname(i, t), recno()) as F autogenerate fieldvaluecount(fieldname(i, t));

which would be also included within the file-loop. Many more background to this topic could you find here:

Symbol-Tables-and-Bit-Stuffed-Pointers-a-deeper-look-behind-the-scenes

- Marcus

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

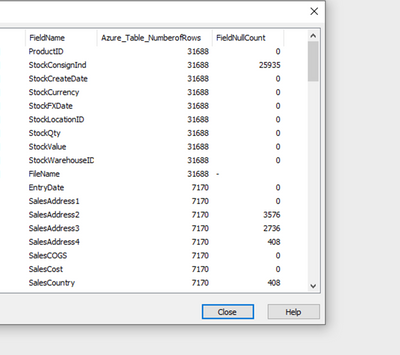

Please see the mentioned pictures in the order of the original post.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I would do it a bit differently by including the NULL counting (and maybe further data-validation) within the for each loop of the files. This means an approach like:

for each vfile in filelist()

t: load * from vfile;

for i = 1 to nooffields(t)

f: load vfile as File, noofrows(t) as Rows, fieldname(i, t) as Field, noofrows(t) - fieldvaluecount(fieldname(i, t)) as Null

autogenerate 1;

drop tables t;

next

next

store f into final.qvd (qvd);

It's just a bit simplified without a working syntax - only to show the logic. Depending on your data/requirements and your sources you may need an additional NULL handling - means mainly the use of NULL variables and/or a NULL mapping to ensure that all blank/empty/missing values are handled as NULL and to avoid that each field needs to be checked/transformed with something like: if(len(trim(Field)),Field, null()).

It's not only simpler - it has also the big advantage that you don't need resident-loads which runs through the data-tables else you could use the various field/table-functions and run through the symbol-tables. From a performance point of view this is a big difference - especially if there are further measures intended like checking the quality of the data which could be done within a load like:

c:

load len(F), isnum(F), ... ;

load fieldvalue(fieldname(i, t), recno()) as F autogenerate fieldvaluecount(fieldname(i, t));

which would be also included within the file-loop. Many more background to this topic could you find here:

Symbol-Tables-and-Bit-Stuffed-Pointers-a-deeper-look-behind-the-scenes

- Marcus

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Marcus,

Thanks for your reply. I will accept your answer as solution as it has pointed me to the right direction when I was running out of ideas. However the fieldvaluecount() hasn't given the desired outcome as it counted the distinct value of particular fields.

Just for reference to who would troubling with similar issue. I couldn't create a fully automatic solution and I had to create a pre-declared _KeyField reference table for each table to be able to go through each row in each table and look them for blank (I would do the same with null values too). Then, because it created a separate field for each column with a flag, I run a sum/group by load separately into a concatenated table with the count of flags. The trick was that after the evaluation of left join within the loop I done the concatenated load straight away. Please see the code below, also hope the picture will be visible too this time.

LET vTableNameOrigin = 'KeyFieldList';

LET vKeyFieldName = 'CustomerID';

LET vMaxRecNoCounter = NoOfRows('$(vTableNameOrigin)');

LET vRecNoCounter = 1;

DO WHILE vRecNoCounter < $(vMaxRecNoCounter)

LET vTableName = peek('Table','$(vRecNoCounter)','$(vTableNameOrigin)');

LET vFieldName = peek('Field','$(vRecNoCounter)','$(vTableNameOrigin)');

LET vSourceFile = peek('SourceName','$(vRecNoCounter)','$(vTableNameOrigin)');

LET vLinkTableField = '[$(vSourceFile)] | $(vFieldName)';

IF(peek('Key','$(vRecNoCounter)','$(vTableNameOrigin)')) = 1 THEN

LET vKeyFieldName = peek('Field','$(vRecNoCounter)','$(vTableNameOrigin)');

ENDIF;

LEFT JOIN('$(vSourceFile)')

LOAD

$(vKeyFieldName),

IF (Len(Trim($(vFieldName)))=0,1,0) as [FValue@$(vFieldName)]

Resident $(vSourceFile)

;

[Data Table Nulls]:

LOAD

'$(vLinkTableField)' as _LinkTableField,

SUM([FValue@$(vFieldName)]) as Nulls

Resident $(vSourceFile)

Group by '$(vLinkTableField)'

;

LET vRecNoCounter = vRecNoCounter+1;

LOOP

LET vRecNoCounter = Null();

LET vTableName = Null();

LET vFieldName = Null();

LET vSourceFile = Null();

LET vLinkTableField = Null();

LET vKeyFieldName = Null();