Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

Recent Documents

-

Excel-Style Spreadsheets Inside Qlik Apps: How to Add [Tutorial]

This guide walks you through building a feature-rich spreadsheet that looks and feels like Excel in Qlik Sense using the Spreadsheets extension (first... Show MoreThis guide walks you through building a feature-rich spreadsheet that looks and feels like Excel in Qlik Sense using the Spreadsheets extension (first demoed at Qlik Connect 2025).

Check it out as a video tutorial or keep reading:

Step 1: Add the Spreadsheets Extension

- Open your Qlik app and go to the Assets panel.

- Head to Custom objects > AnyChart 4, and drag AnyChart Spreadsheets onto your app's sheet.

This creates a basic spreadsheet looking as follows:

Step 2: Add Data

- Select the object, open the Properties panel, go to Data, and click Add.

- Choose the new data section, enable Create synced sheet, and click Edit data.

- Add the dimensions and measures you want to use.

This creates one more sheet, titled DataSheet1, populated with your data.

Step 3: Format Your Spreadsheet

- To keep things clean, feel free to delete the empty sheet created by default, titled Sheet1.

- On DataSheet1, select your table area, head to the INSERT tab, and click Table. Confirm with OK.

- Apply formatting as needed, all in a familiar Excel-like interface. For example:

- Resize rows and columns.

- Change alignment and font settings from the HOME tab.

Step 4: Add Charts

You can also add charts within the spreadsheet's sheets, just like in MS Excel.

- Select your data, open the INSERT tab, and choose Insert Chart.

- Select a chart type, click OK, and place the chart where you need it.

Step 5: Save Changes

- Whenever you need to save everything you have done, open the USER tab and click Save to app.

Step 6: Apply Selections & See Your Spreadsheet in Action

- Apply filters or selections in your Qlik app.

- The spreadsheet updates instantly. Charts, tables, and formulas adjust to the selected data in real time.

This works as the spreadsheet is fully connected to Qlik’s associative engine.

Optional: Export to Excel

If you want to work outside of Qlik, such as in Microsoft Excel or Google Sheets, feel free to export the entire spreadsheet as an .xlsx file:

- Go to the FILE tab, choose Export, and select Excel format.

- Click Export Excel File, enter a file name, and confirm with OK.

- The file downloads immediately, ready to open in Excel or another compatible tool.

Here's how the downloaded XLSX file looks when opened in MS Excel:

Done

You now have a reactive spreadsheet, built with live Qlik data, ready to expand, customize, or share.

Helpful Links

- Download the extension

- Explore the demo app (view live or download QVF)

- See the documentation

- Request a personalized live demo

-

Using Qlik Lineage API's with your On-Premise Environment(s)

Starting Point Before you get to excited, the Qlik Lineage API's do NOT work against your Qlik Sense Enterprise on Windows environments. But I used th... Show MoreStarting Point

Before you get to excited, the Qlik Lineage API's do NOT work against your Qlik Sense Enterprise on Windows environments. But I used that title because that is how I searched when a customer said "I really like tracking the lineage for my SasS applications, but I also need it for the Qlik Sense Applications I still have on-premise."

Don't get discouraged just yet, as I'm going to show you a way forward that will let you capture the lineage you want using a product Qlik provides that you might not be aware of ... Qlik Lineage Connectors.

In a nutshell, the Qlik Lineage Connectors collects all the lineage information for your on-premise environments and publishes the needed METADATA for them to the Cloud, where you can use the API's. No data is moved to the cloud, and you do not need to reload anything in the cloud to make it work.

Installation & Configuration

Note: You will need at least a bare bones Qlik SaaS tenant to serve as the catalog for your lineage data. Follow the instruction for the Qlik Lineage Connectors application, as the point of this post is to help you after that so you can configure and get the Qlik Lineage API's working on all of that precious on-premise lineage metadata.

The metadata can only be published to a Managed space within your Qlik SaaS Tenant. In the following screen shot you will notice I conveniently named that space "OnPremise" so there would be no doubt in my old mind what the space was when I see it a few weeks from now. 😉

The following screen shot shows what that space looked like before configuring and running the Qlik Lineage Connectors in my environment:

Executing Qlik Linage Connectors On-Premise

You can run/test the process by going to the Scheduler tab and simply pressing the run/play icon. As you will see in the image below I configured my environment to be limited to a single stream that had 22 applications in it.

As the process was going I began seeing "items" appear in my "OnPremise" space.

Output

I used the word "items" because they are a type of object called an "External link." You can click on the link and it acts just like other hyperlinks and would launch the application in your QSEoW environment. However, it also has the advantage which is driving this post ... the ability to see the Lineage Metadata for those on-premise applications. Simply right click on the "external link" and choose either "Lineage" or "Impact analysis", just like you would any of your SaaS applications.

Yeah! We are in business:

API Calls

If you checkout the Lineage APIs you will notice a pretty simple format.

https://{Qlik Tenant}/api/v1/lineage-graphs/nodes/{QRI_ID}

If you look at the online documentation or examples people have shared they all involve "applications." Which shows you that the QRI_ID involves a pattern of "qri:app:sense://{Application Id}"

Alas, these "external links" are not "applications" they are simply pointers to the on-premise applications along with the lineage metadata. So, that format won't work for us. However, we can easily get that QRI_ID value using the Qlik "items" API call.

Items API

In the documentation you will notice that one of the parameters that can be utilized for the "items" API call is something called "resourceType." That parameter allows you to filter the API to exactly what you are looking for which we need to do in this case. The "resourceType" value we want is "genericlink."

Notice that in my screenshot (from Postman) that in addition to passing "genericlink" for the "resourceType" parameter, I have also configured another parameter called "spaceId" that then limits the list to the "genericlink"(s) found in my "OnPremise" Managed Space.

If you look at the blue highligted area in the screenshot above it output exactly what we are looking for in the form of the "secureQri" value. Woohoo! We can programmatically iterate through the output from calling the items API and pass the QRI_ID values to the lineage API.

Lineage API

Two important parameters for the Lineage API are called "level" and "up." The "level" parameter lets you define what aspects of the lineage information you want. While the "up" parameter lets you declare how many values to return.

In my call, notice that I have indicated that I wish to get all values, and I want the resources.

You simply change the "level" parameter if you wish to obtain Table names or Field names and the information about them. Or set it to all and it will return all of the metadata you could dream of.

-

Link KPIs to a single sheet with different filters

I created this article with the intention of helping with a post, but also to collaborate with other similar questions that may be useful in the artic... Show MoreI created this article with the intention of helping with a post, but also to collaborate with other similar questions that may be useful in the article.

Link KPIs to a single spreadsheet with different actions:

Resolution

Step 1 - KPIs

1st - KPI

Count({<Age={">30"}>}Name)

2nd - KPI

Count({<Age={"<=30"}>}Name)

3rd ......

Step 2 - Button

*The KPI itself may already contain a link to a spreadsheet, but since we would need to use the filter actions, I also centralized this navigation in the button chart.*- Button for KPI 1

Action: Select values matching search criteria

Field: Age

Value: fx

='>30'

Add action:

Navigation (go to a Sheet)Sheet: select the folder name

- Button for KPI 2

In this scenario, it follows the same procedure as the first, only changing the value that goes in the expression. '<='

Value: fx

='<=30'

Note: If needed, you can add an additional action to clear the filter and ensure that only the current filter is filtered.

Final Step 3 - Container Layout

See how a small customization using the Layout Container chart can help maintain a cleaner look by inserting the button chart into the KPI as a simple icon.This step will allow you to improve the design of your application, as you can organize your charts in a way that better suits your needs,

among several other features. So, explore:

- Here is the qvf file for import, you can also find a sample video. I hope everyone is doing well. Best regards, MatheusEnvironment

- Qlik Sense SaaS

-

Benchmark tests for concurrent reloads using Qlik Data Gateway - Direct Access

Introduction The goal of these tests was to assess the performance and behavior of Qlik Data Gateway - Direct Access (hereafter Direct Access gateway)... Show MoreIntroduction

The goal of these tests was to assess the performance and behavior of Qlik Data Gateway - Direct Access (hereafter Direct Access gateway) when installed on machines with limited resources.

Direct Access gateway 1.7.2 was used for these tests since it was the latest version available at the time. Later versions might provide improved performance.

Test environment

We conducted these tests on two resource-constrained machines with configurations detailed in the table below. To minimize the effects of network latency, these machines, the source database, and the Qlik Cloud Services staging tenant were all located in the US East region.

Name

Windows Server

No. of CPUs

Memory (GB)

Storage (GB)

Minimal

Windows 2022

2

8

32

Small

Windows 2022

4

8

32

Database description

We used a SQL Server database called benchmark containing four tables, each with a varying number of entries. Detailed descriptions of these tables are provided in the table below.

Table name

Number of entries

sample_table_1m

1,000,000

sample_table_5m

5,000,000

sample_table_10m

10,000,000

Sample_table_48m

48,000,000

Each table contained ten columns and was created using the following SQL Query:

The data in each table was generated randomly using the following SQL Query:

Test scenarios

The following tests were executed using the Qlik SQL Server Connector.

Multiple parallel reloads

In this scenario, we ran multiple reloads in parallel to determine the maximum number of concurrent reloads each machine was able to handle.

Minimal machine results

The results for the minimal machine are presented below.

Table name

3 reloads

5 reloads

10 reloads

15 reloads

sample_table_10m

2m21s

3m11s

6m13s

2m13s

6m21s

8m1s

2m54s

3m19s

6m29s

8m26s

7m2s

8m26s

3m44s

7m3s

8m26s

7m11s

9m7s

2m54s

7m52s

7m19s

9m15s

7m44s

9m16s

8m8s

7m44s

9m24s

9m49s

14m30s

8m17s

15m11s

16m1s

16m17s

16m51s

sample_table_48m

14m38s

21m56s

26m

> 1h

> 1h

> 1h

26m

> 1h

22m21s

26m

> 1h

22m21s

27m

> 1h

19m28s

23m2s

27m

> 1h

19m28s

24m

DirectAccess-4025

> 1h

31m

> 1h

33m

> 1h

34m

> 1h

34m

DirectAccess-4025

DirectAccess-4025

DirectAccess-2009

Closing data chunk stream from client

Findings

It seems then, that the safe number of concurrent reloads for the minimal machine is 13. In some cases, a few reloads failed with the DirectAccess-4025 error. However, when reloads were performed with chunk recovery (RELOAD_CACHE_MEMORY_MB=100) enabled, they were always successful.

Small machine results

The results for the small machine are presented below.

Table name

3 reloads

5 reloads

10 reloads

15 reloads

sample_table_10m

1m48s

1m56s

4m58s

5m57s

5m23s

5m57s

2m13s

2m54s

5m32s

7m19s

5m40s

7m19s

3m27s

5m48s

7m19s

7m36s

8m1s

4m50s

3m43s

7m52s

8m17s

8m50s

8m26s

3m43s

9m6s

8m34s

8m34s

8m42s

9m6s

8m50s

9m7s

9m15s

9m15s

sample_table_48m

> 1h

> 1h

> 1h

> 1h

> 1h

> 1h

> 1h

> 1h

> 1h

> 1h

> 1h

> 1h

> 1h

> 1h

> 1h

Findings

During the testing, CPU utilization was nearly 100%. However, memory usage did not differ significantly between tests. At its highest, memory usage reached only half of the available memory.

Large reloads

In this scenario, we performed reloads with large amounts of data (1 million-10 million rows).

Minimal machine results

The results for the minimal machine are presented below.

Table name

Average fetch time

sample_table_1m

11

sample_table_5m

62

sample_table_10m

80

Small machine results

The results for the small machine are presented below.

Table name

Average fetch time

sample_table_1m

3

sample_table_5m

14

sample_table_10m

30

Conclusion

Based on the benchmark tests, Direct Access gateway can safely run a maximum of 13 concurrent reloads on a minimal Windows machine, although it will take less time to run them on a small machine.

-

DocuGen - Automatic generation of Qlik Sense app documentation thanks to Qlik Ap...

Download latest version Challenge: Many of us likely know that after developing a Qlik Sense app, documentation needs to be created to deploy our a... Show MoreDownload latest version

Challenge:

Many of us likely know that after developing a Qlik Sense app, documentation needs to be created to deploy our analysis to production. This process is time-consuming, often requiring several days to produce what feels like just a "piece of paper". Another issue is that documentation is often made using standard templates, leading to materials that aren't complete and can be hard to comprehend. Documentation can be mandatory for both regulatory and operational reasons. Following the idea of “working smarter,” I want to automate this process using DocuGen with Qlik Application Automation (QAA). This approach can save us valuable time, allow us to transfer use cases to production faster, and provide documentation that is both thorough and easy to read.

Idea:

It all started with this simple idea and a first prototype, which I built in QAA. Various Qlik Cloud Services blocks are used to extract the relevant metadata from the individual app (e.g. table definitions, sheets and visualizations, variables, measures and dimensions, etc.). This data is then stored in variables and once all the information has been collected it gets passed into a “Custom Code” block. This block uses Python 3.11 and creates a dynamic HTML file without external references. This means that the created HTML file remains transportable and does not require any external resources, as it contains all the necessary functions itself. Finally, the created HTML file is saved to MS Sharepoint.

Input parameters for the python script.

Outcome:

This approach enables us to produce standardized documentation significantly more quickly on a daily basis, reducing the time required from an estimated 3 to 5 days to approximately 30 minutes. In my day-to-day job as a BI Specialist, I am now able to transfer analysis prototypes to production much faster for my customers and deliver added value for the business departments even faster. I think many other people in similar roles like me face the same challenge. This is the reason why I created a community project out of this idea together with Dennis Jaskowiak (Qlik PreSales). We started to extend the documentation with more functionalities, generalized the design of the documentation and reworked the code. In this article, we would like to share the project and its content with the Qlik Community.

App general information overview.

Sheets & visualizations section.

Data model section.

Script section.

Content of the package:

The archive consists of only 4 files:

To import the QAA you need the DocuGen v(x).json file. This file contains the exported QAA workspace.

The "code" folder contains the code segments used if you want to customise the code for your needs.

-

custom_code_block.py: This file contains the code that we use in the

"Custom Code"blog in the automation. -

used_css.css: This file contains the CSS definition that we use in the

"Variable - vCSS"blog in the automation and imports the style description for our HTML file. The definition is not minified in this file. -

used_js.js: This file contains the Java scripts that we use in our Python script (custom_code_block.py at the very end). The JavaScript is implemented in the Python code (last

<script></script>section). It is important that'{'and'}'are replaced with'{{'and'}}'so that they are not evaluated in Python.

Installation:

- Create a new blank Qlik Application Automation

- Upload workspace (right click on canvas) à DocuGen v(x).json

- Create a connection for SharePoint a. scroll to the bottom and click on the “Create File on Microsoft SharePoint” Block that has a red border b. click on the Connection tab and create a connection to your Microsoft SharePoint c. After you receive the information

“Connection is linked”you can use it by clicking on your created connection. The connection tab should now change its colour from red to black. - (optional) If you want to switch the number format from ‘comma’ to ‘point’ as decimal separator you can switch the value of the block named

“Variable – vNumber Format”from'de'to'us'. It’s the second block of the automation. - Now you can run the automation to generate your first documentation

Running the automation:

When you click on “Run” the automation gets executed. By default, the automation has configured “Run Mode: Manual”. It requires a few inputs for processing:

- Space – where the app is located (Personal doesn’t work)

- App name

- List of Sections that need to be documented (e.g. Data Model, Measures, Dimensions etc.)

- Screenshots (yes/no)

- List for type of sheets (e.g. Public, Community, Private)

If you want to run the automation triggered (e.g. by REST call) it needs to me modified. We will post an example later to showcase this option.

Features:

This list showcases what DocuGen covers:

-

General app information:

- app name, description, Id, Resource type, type of usage, owner, published, published time, space Id, has section access, is encrypted, create date, modified date, last reload time, app memory footprint, app reload peak RAM

-

Data model:

- For every table it generates:

- title, number of rows, number of fields, number of key fields, is loose, comment, table size,

- table containing field name, number of distinct values, number of non-null values, tags, ishidden, iskey, connected tables

- For every table it generates:

-

Measures:

- For every measure it generates:

- title, label, description, Id, definition, expression, grouping, custom formatted, format type, format, color, tags

- For every measure it generates:

-

Dimensions:

- For every dimension it generates:

- title, label, description, Id, definition, grouping, tags

- For every dimension it generates:

-

Variables:

- For every variable it generates:

- title, Id, definition, comment, tags

- For every variable it generates:

-

Sheets & Visualizations:

- list of sheets

- for every sheet it generates title, type, Id, description, grid resolution, sheet mode, list of used objects

- link to sheet

- list of objects on the sheet

- for every object on a sheet, it generates screenshot, type, Id, title, subtitle, footnote, color mode, color definition, dimensions, measures, link to object

- if the object is a container (layout container, tabbed container or normal container) it creates a list of all child objects containing the following information:

- type, Id, title, subtitle, footnote, color mode, color definition, dimensions, measures, link to object

- list of sheets

-

Load Script:

- For every script section it generates:

- title, script

- For every script section it generates:

Closing:

This is it for now. I hope this community project can help you automate and reduce the time you spend on documentation. On the bottom of that article, you will find the necessary files. The current version of the files will be hosted on GitHub.

Link to GitHub : LINK

A special thanks to Emil Koslowski (Product Manager Qlik Application Automation) for his invaluable support with various Qlik Application Automation questions. Your efforts have been instrumental in shaping a robust solution. Additionally, a big shoutout to Dennis Jaskowiak (PreSales Qlik) for his genuine enthusiasm for the idea, as well as his mentorship and unwavering support throughout the development - your guidance has been truly instrumental. Thank you!

This project has been created by Prodromos Chatziagorakis (Volkswagen Financial Services) & Dennis Jaskowiak (Qlik).

-

-

Top 10 Viz tips - part IX - Qlik Connect 2025

At Qlik Connect 2025 I hosted a session called "Top 10 Visualization tips". Here's the app I used with all tips including test data. Tip titles, ... Show MoreAt Qlik Connect 2025 I hosted a session called "Top 10 Visualization tips". Here's the app I used with all tips including test data.

Tip titles, more details in app:

- Open ended buckets

- Cyclic reset

- "Hidden" columns

- Labels in different sizes

- Others by Ack%

- Highlight over threshold

- Tooltip to other things

- Bar codes revisited

- Table tooltips

- More Pivot tooltips

- Max min

- Gradients and opacity with SVG

- Cluster map layer

- Standard deviation band

- Band chart in table

- Table with scaling text

- Font size for highlight

- Labels on bars

- Renaming map labels

- "Extra" columns in Pivot

- Vertical waterfall revisited

- Trellis respond to selection

- Radar with more than one measure

- Button with two lines of text

- Currencies

- Date range sliders

- Dotted line on forecast

- Nav menu tooltips

- Blinking KPI

- Color table headers

I want to emphasize that many of the tips were discovered by others than me, I tried to credit the original author at all places when possible.

If you liked it, here's more in the same style:

- 24 days of visualization Season III, II, I

- Top 10 Tips Part IX, VIII, VII, VI, V, IV, III, II , I

- Let's make new charts with Qlik Sense

- FT Visual Vocabulary Qlik Sense version

- Similar but for Qlik GeoAnalytics : Part III, II, I

Thanks,

Patric -

How to read .json files with Qlik Sense

Before you start reading: The way to import .json with Qlik Sense script is undocumented and may still change at any time without notice. Qlik scrip... Show MoreBefore you start reading: The way to import .json with Qlik Sense script is undocumented and may still change at any time without notice.

Qlik script natively reads JSON (JavaScript Object Notation), it is a bit tricky and slower for large files - and an import wizard is missing, which would create the script blocks.

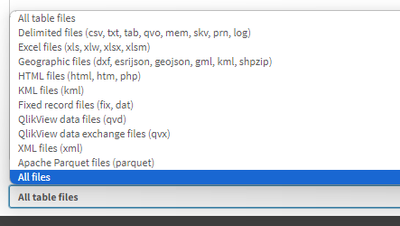

When you click on Add data in the connection pane, the file dialog for the folder will show no specific filter for .json files:

Choose "All files" and then select your .json file. Next you will see, that the wizard attempts to interpret it as csv. Pointless, but at least you get the FROM construct properly filled. Forget the rest, as we will replace it in a minute.

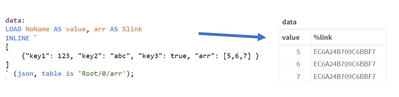

Just hit "Insert script" and then put LOAD * in front of FROM, and instead of (txt ...) put (json):

data: LOAD * FROM [lib://:Google_Drive/119UHVSR9_JjkT9o-mz2NAO1xyS1xU56f/01.json] (json);Not too complicated so far. If your .json file only has one root object (or one root array of objects) and you will already get the keys from the top level. You are already done.

Now lets learn more about the parsing!

Simple JSON Object

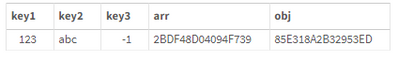

A singe json file containing only one object ...

{"key1": 123, "key2": "abc", "key3": true, "arr": [], "obj": {} }... will result in one line of data.

- Keys with simple values will be 1:1 loaded into a Qlik Sense field

- arrays and sub-objects will get a 16 char hash-value (I will explain below)

Multi-elements JSON File

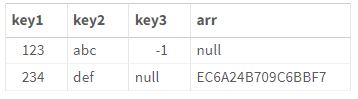

If the json file has multiple "rows" (objects in an outer array [ ... ]) you automatically will get multiple rows in Qlik.

[ {"key1": 123, "key2": "abc", "key3": true} , {"key1": 234, "key2": "def", "arr": [5,6,7]} ]The arrays can have different keys. Common keys will concatenate in the same field, whereas keys missed out in a given 'row' will read "null" (unfortunately as text, not as Null()) in the result.

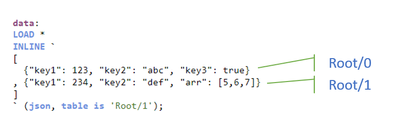

Now lets look, how to deal with sub-objects and arrays? For this we need understand the table is construct and the hash values.

- The root element has the pseudo-table name "Root"

- Every element in an array has an offset, starting with 0, even the outmost array. Hence, the first "row" above you would get with "Root/0"

- An object or an array will come back as a hash-value

- To get the object or array contents itself, alter the "table is" construct to address this key, e.g. in above example "Root/1/arr"

- If it is an array, you need to use the Pseudo key NoName to get the value and the key itself to get the hash value to link it to its parent

Importing JSON sub-arrays

That is fine for one row, but if you have a json file with many rows, you don't want to call every line with "Root/0/arr", "Root/1/arr", "Root/2/arr" ...

The good thing: You don't have to do it yourself. This article is explaining how to load .json by hand. If you want a quick solution, this one is for you: https://github.com/ChristofSchwarz/QlikScripts/tree/master/json

Assuming a .json file with this content

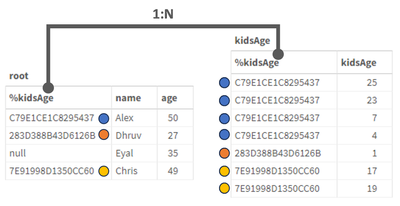

[ {"name": "Alex" , "age": 50, "kidsAge": [25,23,7,4] } , {"name": "Dhruv" , "age": 27, "kidsAge": [1] } , {"name": "Eyal" , "age": 35, "kidsAge": [] } , {"name": "Chris" , "age": 49, "kidsAge": [17,19] } ]We will introduce a FOR loop now. Note, if an array is empty [] or is null, the LOAD command inside the Loop would fail on that "row", so we set ErrorMode to 0 (=continue script upon error) and reset it to the default 1 (=fail on error) after the NEXT command

SET vFile = [lib://.../filename.json]; root: LOAD name, age, kidsAge AS %kidsAge FROM [$(vFile)] (json, table is 'Root'); SET ErrorMode = 0; FOR v = 0 TO NoOfRows('root') - 1 kidsAge: LOAD DISTINCT kidsAge AS %kidsAge, NoName AS kidsAge FROM [$(vFile)] (json, table is 'Root/$(v)/kidsAge'); NEXT v SET ErrorMode = 1;The result is a nice 1:N relation resolved.

The fieldname "kidsAge" also exists on the inner LOAD (next to "NoName") and it returns - yes - the same hashvalue as in the outer, first LOAD.

Importing Json sub-objects

Last example is for a sub-object (instead of the sub-array): Similarily, you get hashes on the parent level and the content itself when addressing it right with the "table is" position. A FOR loop will ease things again, plus some more logic.

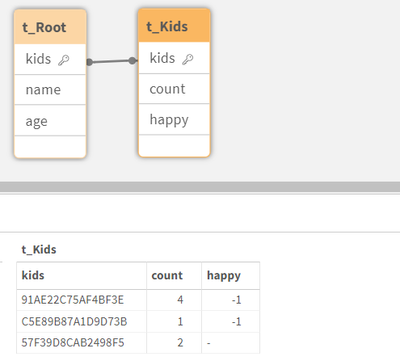

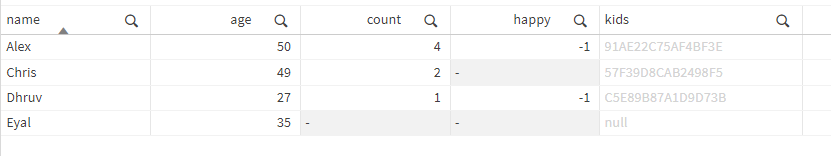

In this .json file we have one row where "kids" is null and the subobject doesn't always contain the key "happy"

[ {"name": "Alex" , "age": 50, "kids": {"count": 4, "happy": true } } , {"name": "Dhruv" , "age": 27, "kids": {"count": 1, "happy": true } } , {"name": "Eyal" , "age": 35, "kids": null} , {"name": "Chris" , "age": 49, "kids": {"count": 2 } } ]We can't count on auto-concatenate any more for the sub-objects (row 4 would end up in a different table than rows 1 and 2). Also, without error-tolerance, the 3rd row import would fail. So we go for this script, where we create an empty table for the "kids" and then explicitly concatenate into it. We have to use the LOAD * functionality, because the structure of the subobjects is not identical throughout the main array. (Assuming you set the vFile yourself)

SET vFile = [lib://..../filename.json]; t_Root: LOAD // outer load * FROM [$(vFile)] (json, table is 'Root'); SET ErrorMode = 0; t_Kids: LOAD * INLINE [kids]; // start empty table FOR v = 0 TO NoOfRows('t_Root') - 1 CONCATENATE(t_Kids) LOAD DISTINCT // inner load * FROM [$(vFile)] (json, table is 'Root/$(v)/kids'); NEXT v SET ErrorMode = 1;We will get now two tables, that are 1:1 related (or 1:1 or 0, to be precise)

Note: the inner LOAD * will return the "kids" as a field, too, containing the hash that links to the first table

The result can easily be used now in the Client

Uff, it can get tricky, but next week I will show you that all the manual steps (loop, field aliasing, concatenating) could be solved by a recursive SUB.

I hope though, that Qlik will also finish a wizard, that does that parsing on import like we have it for JSON objects received via the REST Connection or, likewise, as XML files are parsed.

Conclusion

Pros

- A surprise and already a good starting point for importing .json natively

- Everything can be imported using "table is" directive

Cons

- Quite some script logic needed to parse nested JSON objects

- Can be slow

- Still undocumented and may change in future

-

Using DeepSeek with QlikSense, prompt by input text

Before you start writing: Public articles must follow our Content Standard. See the Content Guidelines for the bare minimum standard and our Contend S... Show MoreBefore you start writing: Public articles must follow our Content Standard. See the Content Guidelines for the bare minimum standard and our Contend Standard for a detailed breakdown. Do not publish directly. Submit your article for review or for publication to have it reviewed by a subject matter expert or writer.

Configure and use deepseek with QlikSense Saas

Connector Config Rest

Request body:

{

"model": "deepseek-chat",

"messages": [

{"role": "system", "content": "You are a helpful assistant."},

{"role": "user", "content": "Hello!"}

],

"stream": false

}after Bearer you wirte your API Key generated in https://platform.deepseek.com/api_keys

Script in your app:

vG_inputData = Replace(vinputtext,chr(34),'');

vResponseBody='{"model": "deepseek-chat","messages": [{"role": "system", "content": "You are a helpful assistant."},{"role": "user", "content": "$(vG_inputData)"}],"stream": false}';vResponseBody=Replace(vResponseBody,'"',chr(34)&chr(34));LIB CONNECT TO 'deepseek'; //conn NameRestConnectorMasterTable:SQL SELECT"id","object","created","model","system_fingerprint","__KEY_root",(SELECT"index","logprobs","finish_reason","__KEY_choices","__FK_choices",(SELECT"role","content","__FK_message"FROM "message" FK "__FK_message")FROM "choices" PK "__KEY_choices" FK "__FK_choices"),(SELECT"prompt_tokens","completion_tokens","total_tokens","prompt_cache_hit_tokens","prompt_cache_miss_tokens","__KEY_usage","__FK_usage",(SELECT"cached_tokens","__FK_prompt_tokens_details"FROM "prompt_tokens_details" FK "__FK_prompt_tokens_details")FROM "usage" PK "__KEY_usage" FK "__FK_usage")FROM JSON (wrap on) "root" PK "__KEY_root"WITH CONNECTION (BODY "$(vResponseBody)");[message]:LOAD [role],[content],[__FK_message] AS [__KEY_choices]RESIDENT RestConnectorMasterTableWHERE NOT IsNull([__FK_message]);[choices]:LOAD [index],[logprobs],[finish_reason],[__KEY_choices],[__FK_choices] AS [__KEY_root]RESIDENT RestConnectorMasterTableWHERE NOT IsNull([__FK_choices]);[prompt_tokens_details]:LOAD [cached_tokens],[__FK_prompt_tokens_details] AS [__KEY_usage]RESIDENT RestConnectorMasterTableWHERE NOT IsNull([__FK_prompt_tokens_details]);[usage]:LOAD [prompt_tokens],[completion_tokens],[total_tokens],[prompt_cache_hit_tokens],[prompt_cache_miss_tokens],[__KEY_usage],[__FK_usage] AS [__KEY_root]RESIDENT RestConnectorMasterTableWHERE NOT IsNull([__FK_usage]);[root]:LOAD [id],[object],[created],[model],[system_fingerprint],[__KEY_root]RESIDENT RestConnectorMasterTableWHERE NOT IsNull([__KEY_root]);DROP TABLE RestConnectorMasterTable;Dashboard:Use variable input to modify vinputtext

-

Unlocking the Power of Talend: Common Challenges and Solutions

IntroductionTalend has emerged as a leading open-source data integration platform, enabling organizations to connect, transform, and manage their data... Show MoreIntroduction

Talend has emerged as a leading open-source data integration platform, enabling organizations to connect, transform, and manage their data seamlessly. However, like any powerful tool, it comes with its own set of challenges. In this article, we will explore some of the most common problems faced by Talend users and provide practical solutions to overcome them. Whether you are a seasoned Talend developer or a newcomer, understanding these challenges will help you maximize the potential of this robust platform.1. Performance Issues

One of the most frequently encountered problems in Talend is performance bottlenecks. As data volumes grow, jobs that once ran efficiently may start to lag.Solution:

Optimize Job Design: Break down complex jobs into smaller, reusable components. Use joblets for common tasks to improve maintainability and performance.

Use Bulk Components: For database operations, leverage bulk components like tBulkExec and tOutputBulk to minimize transaction overhead.

Parallel Execution: Utilize the tParallelize component to run multiple sub-jobs concurrently, effectively utilizing available resources.

2. Data Quality Issues

Data quality is paramount in any ETL process. Talend users often face challenges related to data inconsistencies, duplicates, and missing values.Solution:

Implement Data Validation: Use components like tFilterRow and tMap to validate and cleanse data during the ETL process. Establish rules for data quality checks.

Use Talend Data Quality Tools: Talend offers built-in data quality tools that can help identify and rectify data issues. Integrate these tools into your ETL jobs for better data governance.

3. Complex Job Management

As organizations scale, managing multiple Talend jobs can become cumbersome. Dependencies between jobs can lead to confusion and errors.Solution:

Job Repository: Utilize Talend’s repository to manage jobs and their dependencies effectively. Organize jobs into folders based on functionality or project.

Use Context Variables: Implement context variables to manage configurations dynamically. This allows for easier job management and reduces hardcoding.

4. Error Handling and Logging

Effective error handling is crucial for maintaining data integrity and ensuring smooth job execution. Talend users often struggle with tracking errors and debugging jobs.Solution:

Implement Robust Error Handling: Use components like tLogCatcher and tDie to capture and handle errors gracefully. Create a centralized error handling strategy to log errors and send notifications.

Detailed Logging: Configure logging levels in Talend to capture detailed execution logs. Use tFileOutputDelimited to write logs to files for further analysis.

5. Integration with Other Tools

Integrating Talend with other systems (e.g., databases, cloud services, APIs) can pose challenges, especially when dealing with different data formats and protocols.Solution:

Use Talend Components: Talend provides a wide range of components for various integrations. Familiarize yourself with these components to streamline the integration process.

API Integration: For RESTful services, use tRESTClient to interact with APIs. Ensure proper authentication and error handling when making API calls.

6. Learning Curve

For new users, the learning curve associated with Talend can be steep. Understanding the various components and best practices takes time.Solution:

Training and Documentation: Invest in training resources, such as Talend Academy, to enhance your skills. Leverage Talend’s extensive documentation and community forums for support.

Practice: Hands-on experience is invaluable. Start with small projects to build confidence and gradually tackle more complex integrations.

Conclusion

While Talend is a powerful tool for data integration, it is not without its challenges. By understanding common problems and implementing the solutions outlined in this article, users can unlock the full potential of Talend and drive successful data integration initiatives. Continuous learning and adaptation are key to mastering this versatile platform, ensuring that organizations can leverage their data effectively in an ever-evolving landscape.Call to Action

Have you faced challenges while using Talend? Share your experiences and solutions in the comments below! Join the Talend community to stay updated on best practices, tips, and tricks for optimizing your data integration processes. -

Qlik Sense + Nginx Reverse Proxy for Automatically Renewed, Trusted HTTPS Certif...

Tutorial: Qlik Sense + Nginx Reverse Proxy for Automatic, Free HTTPS Certificates If you’re looking for a straightforward way to get automatically re... Show MoreTutorial: Qlik Sense + Nginx Reverse Proxy for Automatic, Free HTTPS Certificates

If you’re looking for a straightforward way to get automatically renewed HTTPS certificates for your Qlik Sense Enterprise server, Let’s Encrypt + Nginx may be the way to go. This article provides configuration details on placing an Nginx reverse proxy in front of Qlik Sense Enterprise, plus automatic HTTPS certificate provisioning and renewal through Certbot.

-

Generate Complex Authorization Script in QlikView for Section Access

Hi Community,I would like to share with you something which I thing can be very useful.Few month back i work on Complex Authorization in QlikView for ... Show MoreHi Community,

I would like to share with you something which I thing can be very useful.

Few month back i work on Complex Authorization in QlikView for very complex Row Level security. During implementation it was too difficult to create various possible combinations if we have more number of columns.For example see below security combination where for user TEST2, we you want to reduce the data as per below logic i.e. ([Dim 1]=’A’ AND [Dim 2]=’C’) OR ([Dim 1]=’B’ AND [Dim 2]=’F’)

In order to archived this we need to create all possible combinations into the Security Bridge table which connect Section Access table & Main data-set on which security need to apply.

But if you have too lot of fields then creating those combinations is too hard. In order to overcome this situation i have written script in QVW which generate this all combinations based on # of fields you supply in configuration.

In Configuration Details, need to specify the parameters values. Once all parameters are pass then you need to Reload the QVW, which will automatically generate script and you can copy the script from Generated Section Access Script section. In Configuration Details all parameters are required

After copying the script, go to STEP 5 where you need to modify the details as per your details & for STEP 6 you need to enable SECTION ACCESS & SECTION APPLICATION lines.

If you have any doubts feel free to ask.

Thanks,

Akshaye -

Making Sense of QVD files

Problem I see you out there my friend. You got parachuted into an organization that has been using QlikView or Qlik Sense for many years, and you find... Show MoreProblem

I see you out there my friend. You got parachuted into an organization that has been using QlikView or Qlik Sense for many years, and you find yourself with directories and sub-directories full of QVD files.

You weren't around when the architecture was put in place.

The boss wasn't around when the architecture was put in place.

And all of you are scratching your head trying to understand what they are, and why they are there. Because after all QlikView and Qlik Sense are nothing more than #DataVisualization tools. Right?

Base Understanding

While QlikView and Qlik Sense can definitely visualize data and the Associative Engine is ideal for Analytics, the truth is both products provide much more functionality. In this article I will be helping you understand why those QVD's are there and help you see how that fit into the overall Data - to - Outcome platform. Notice I've highlighted sections in red that I will focus on for this post. Wow, nothing to do with visuals/analyze at all.

For the next few minutes I want you to change hats. If you are in an IT type role, please put on a business user hat. And if you are in business please put on an IT hat.

IT wants to ensure the data surfaced is governed and high quality. The process of doing that can take a long time without the right tools.

Business users want outcomes, and typically want the answers they need yesterday. Frankly the last thing they want to hear is "it is going to take a long time before you can get the answers you need."

Qlik Virtual Data warehouse tables

Those two personas don't have to be mutually exclusive. The purpose utilizing QVD files for QlikView and Qlik Sense was to provide a "virtual" data warehouse. For 3 reasons:

-

A Centralized data warehouse didn't exist and wasn't going to be built

-

A Centralized data warehouse was going to be built, but it was going to take time and business needed answers before it was complete

-

A Centralized data warehouse did/does exist, but other sources of data were also needed that were not part of it

Thus Qlik (Architects/Modelers/Engineers/Developers) utilize QlikView or Qlik Sense to construct a "virtual" warehouse from those sources.

Benefits of QVD files

Single Source of Truth

Compressed

Development Speed

Typically the IT and Business relationship looks like this ... "Give us all of your requirements in triplicate and signed in blood. We will then prioritize your request and deliver a finished application in 13 man months." With Qlik that can change for the good for all involved. Developers/Designers can sit down with business users, and do a rapid business intelligence session and see what is needed to get the right answers so that the right actions can be taken to drive outcomes. That is made possible by the fact that they can simply start with QVD's that already contain the single source of truth and work with, instead of against, the business users.

Delivery Flexibility

Incremental Loads

One thing we all understand about data in source systems, data warehouses and data marts is that it is ever growing. Applications need to stay current. Because nobody is going to achieve good outcomes based on data that is a year old. While we have millions/billions of fact records but the truth is that the vast majority of them are the same that they were 10 minutes ago, a day ago, a week ago. With QlikView and Qlik Sense the data architects can perform what we refer to as Incremental Loads. We talk to the source systems, data warehouses and data marts and say "Hey give me the data that has changed since the last time we talked." (That's just pseudo code not a real SQL statement.) The new data, changed data and deleted is then merged with the existing millions/billions of rows to keep the data fresh and the original QVD is overlayed. This concept may well be the highest benefit and impacts so many of the other benefits.

One complaint I hear is that "we really need to get answers from live data." Well the truth is running a query that has to return millions of rows of data, especially if joined to other tables takes many, many minutes to return. Can you really say you are dealing with "live" data when it takes 20 minutes to return the answer????? NO!!!! The answer from the live data is actually 20 minutes because tables were locked to return a consistent answer. With incremental loads you could actually ask for the data every few minutes and merge it. The visual applications can provide data that is fresh as of 5 minutes ago, not 20.

Security

Reduced impact and $$$

-

-

Exporting all variables from the Qlikview application using macro

Hi everyone!!It’s about VBScript macro which export all variables from your Qlikview application. You can call it by using a button object or using do... Show MoreHi everyone!!

It’s about VBScript macro which export all variables from your Qlikview application. You can call it by using a button object or using document event triggers (OnPostReload) located in Settings menu Document Properties option and tab Triggers, for example.

So, why am I develped it? I'll tell you why. A friend of mine was talking about variables sincronization issues between his Qlikview and Qliksense applications. Every time when he create a new variable or he changed the variable definition or even in its comment, he had to replicate this changes to another application which, sometimes, he forgot to do it.

Firstly, I’ve searched if was there some solution on the Qlik Community. Unfortunately, I didn't found nothing ready. So, that's why I developed it. Since then, my friend has been using this macro in his applications and the variables sincronization issues was solved. He just call the macro through document event triggers, setting (OnPostReload) option and then the macro load every single variable into a file (*.csv) which is created in the same folder of your Qlikview application. Once the variables was exported our Qliksense application could be load it and create each variable easily.

Just to be clear and understandable how it works, I attached as example two files (*.qvw) where one of them is the application which need to export his variables every time its reloaded and another is the application which will load data the exported file and it will create every single variable.

When you will open macro editor from the application which will export the variables you will can easily change the file name and its folder destiny. You will can change columns name as well.

So, that is it! I hope it can be useful for everyone!

You can read the scripts below:

ExportVarApp (Macro):

Sub ExportVar()

set docprop = ActiveDocument.GetProperties

wd = docprop.MyWorkingDirectory

wdEx = wd & "\VariablesApp\"

Set fileSystemObject = CreateObject("Scripting.FileSystemObject")

If Not fileSystemObject.FolderExists(wdEx) Then

fileSystemObject.CreateFolder(wdEx)

End If

vNameFile = fileSystemObject.GetBaseName(ActiveDocument.Name) 'Getting Qlikview's document name

fPath = wdEx & vNameFile & "_Variables.csv"On Error Resume Next

Err.Clear

if fileSystemObject.FileExists(fPath) Then

fileSystemObject.DeleteFile(fPath)

if Err.Number <> 0 then

' An exception occurred

msgbox "Exception:" & vbCrLf &_

" Error number: " & Err.Number & vbCrLf &_

" Error description: '" & Err.Description & vbCrLf &_

" File cannot be deleted because it still open for another application." & vbCrLf &_

" Please, close " & vNameFile & " file and try it again." & vbCrLf

exit sub

else

set fileSystemObject = NothingSet objFSO = CreateObject("Scripting.FileSystemObject")

'--------------------------------------------------------------------------------------------------------------------------------------------

' ForReading = 1 - Open a file for reading only. You can't write to this file.

' ForWriting = 2 - Open a file for writing only. Use this mode to replace an existing file with new data. You can't read from this file.

' ForAppending = 8 - Open a file and write to the end of the file. You can't read from this file.

'--------------------------------------------------------------------------------------------------------------------------------------------

Const ForAppending = 8

vSep = "|" 'Delimiter or Separator of fields'

vColumn1 = "VarName"

vColumn2 = "VarDefinition"

set MyVarsApp = ActiveDocument.GetVariableDescriptions

for i = 0 to MyVarsApp.Count - 1

set vObj = MyVarsApp.Item(i)

Set objTS = objFSO.OpenTextFile(fPath, ForAppending, true)

if i = 0 then

objTS.WriteLine(vColumn1 & vSep & vColumn2)

objTS.WriteLine(vObj.Name & vSep & vObj.RawValue)

else

objTS.WriteLine(vObj.Name & vSep & vObj.RawValue)

end if

objTS.Close()

next

msgbox("Arquivo " & vNameFile & "_Variables.csv" & " was exported with success!!")

end if

end if

End SubImportVarApp (Loading and creating variables)

//Load variables

VarTable:

LOAD VarName,

VarDefinition

FROM

VariablesApp\ExportVarApp_Variables.csv

(txt, codepage is 1252, embedded labels, delimiter is '|', msq);//Creating variables

For i = 0 to NoOfRows('VarTable') - 1Let vVarName = peek('VarName', i, 'VarTable'); // Name of the variable

Let $(vVarName) = peek('VarDefinition', i, 'VarTable'); // Definition of the variable

Next i

//Destroying variables of control

Let i = null();Let vVarName = null(); //

Drop Table VarTable;

-

Supercharge your Qlik development with metadata!

Imagine that you are an average ordinary Qlik Developer. You start to create a new application for some healthcare big cheese so you find the virtual ... Show MoreImagine that you are an average ordinary Qlik Developer. You start to create a new application for some healthcare big cheese so you find the virtual data warehouse of QVD files and all goes well. The data loads just fine and just for giggles and grins you utilize the Data Preview in the Load Script to see the values and panic hits you. What on earth is wrong with the Age field?

That Qlik Dork guy is falling apart and could be 99 for all you know. But Dan and Levi? C'mon they can't possibly be that old. Suddenly you are reminded of the importance of metadata. If you knew how that field was defined, it might help you understand those crazy values. Right?

-

How To /Missing Manual (25 articles)

How To / Missing Manual QlikView functions explainedHeader 1Header 2123456789101112131415161718192... Show More

How To / Missing Manual

QlikView functions explainedDid You like it?

-

Creating Harvey Balls Chart using SVG graphics

The 'Measure Representation as Image' option is available in some Qlik Sense charts, enabling new possibilities for creating measures that return a s... Show More -

Moving QVD Files Between Spaces in Qlik Cloud Using a Script

Moving QVD Files Between Spaces in Qlik Cloud Using a Script In Qlik Cloud, moving QVD files between different spaces is not a straightf... Show More

Moving QVD Files Between Spaces in Qlik Cloud Using a Script

Thank you for the reading.Environment

- Qlik Cloud on the 20th november 2024

-

Limitation for generic link

More detailed information about the Limitation for Generic Link Resolution Managing links from the Administration activity center You can create, uplo... Show MoreMore detailed information about the Limitation for Generic Link

Resolution

Managing links from the Administration activity center

You can create, upload, edit, move, and delete links from the Generic links page.

Creating a link

Do the following:

-

In the Administration activity center, go to Generic links.

-

Click Create new.

-

To add an image, drag and drop an image file or click Browse to select the file.

-

Enter the following link details:

-

Name: Enter a name for the link.

-

Link URL: Enter the URL in the format https://domain.com.

-

Only HTTPS URLs are allowed.

-

Domain names can contain letters (a-z), numbers (0-9), and hyphens (-), but not underscores (_) or the '@' symbol. For example, https://my-domain.com/ is valid, while https://my_domain.com/ is not.

-

-

Space: Select the space where the link will be added.

-

Description (optional): Enter a description for the link.

-

Tags (optional): Add tags to help categorize the link.

-

-

Click Create.

Internal Investigation ID(s)

HLP-17441

This will be released on the next documentation update in Helpsite

Environment

- Qlik SaaS

-

-

Compare Infoproviders between BW HANA environments using Gold Client BW

Compare Infoproviders between BW HANA environments using Gold Client BW One of the most asked and key features of Gold client BW is to provide the use... Show MoreCompare Infoproviders between BW HANA environments using Gold Client BW

One of the most asked and key features of Gold client BW is to provide the users with a feature to Compare infoproviders between two BW HANA environments. This unique feature also list’s out the differences of the object between the two environments.

The function allows the comparison of various types of Infoproviders like Info Objects, Info Cubes, DSO and ADSO’s structures and properties between the current BW system and a target BW system.

Upon entering one or multiple Info Objects, Info Cubes, DSO’s or ADSO’s the function will compare the structure and properties of the same against the Info Objects, Info Cubes, DSO’s and ADSO’s in the target system specified by the user.

Example:

Source BW Environment = A4H(BWonHANA)

Target BW Environment = BW2(BW4HANA)

infoprovider to be compared between two BW environments = 0MATERIAL

Login to the source BW system which in this example is A4H and use the tcode ZGOLDBW for Gold Client BW

Click on Compare Info provider button under the Infoprovider Equalizer section as shown in the figure below

In the next screen we need to provide the details of the info provider which we would like to compare with the Target BW system. Below are the steps to be followed

Compare Info Provider for on your target system.

- Select InfoObject radio button from the Info Provider type

- Enter InfoObject(s) in the Info Provider Selection which in this case is 0MATERIAL

- Enter the RFC destination of the BW system the Info Object is being compared to – Which in this example is BW2

- Press the Execute button

Once the execute button is pressed the user will be asked to login to the target BW system which in this example is BW2

After logging in successfully, the system will compare the infoprovider between the two BW environments and will list out the differences of the infoprovider between the environments.

In the below example of 0MAERIAL we can see that the length, output length, number of attributes and the attributes which is missing or differ from the source BW system will be displayed.

Similar to 0MATERIAL the user’s will be able to compare the below different types of infoprovider between two BW HANA and BW Non-HANA environments

- Info Object

- Info Cube

- Data store Objects

- Advance Data store objects

Environment

-

Gantt Chart in Qlik Sense: How-To Tutorial [+VIDEO]

Looking for the simplest way to create a Gantt chart in Qlik Sense to further streamline data-driven project management in your company or organizati... Show MoreLooking for the simplest way to create a Gantt chart in Qlik Sense to further streamline data-driven project management in your company or organization? We’ll show you one right now! Follow this quick tutorial and you’ll learn how to easily visualize project tasks and activities as data in a matter of minutes — on smart, interactive Gantt charts using the intuitive Gantt Chart extension for Qlik Sense by AnyChart, Qlik's Technology Partner.

Watch the video tutorial:

Or read the text-and-pictures version below.

Loading Project Data in Qlik Sense

In Qlik, everything starts with the data.

We are going to create a Gantt chart based on data from an XLSX file that looks as shown below.

The tables in the file represent the structure of a company and its project schedule. There are three departments, each of them carrying out several projects. Each task within a project is assigned to a certain employee.

First of all, we will create an app, add this data to Qlik, apply the suggested associations, and load the data.

Then we need to make sure that Qlik established proper associations between tables.

Let’s create a sheet and add three filter panes by dropping three fields on the sheet: ‘department_name’, ‘project_name’, and ‘task_name’.

If we switch from ‘Edit’ to ‘View’ and try to select a department and a project, we will see that associated tasks are displayed correctly.

Adding Gantt Chart in Qlik Sense

Now that the data is loaded and the associations between the tables are set up the right way, we can add a Gantt chart.

Go to ‘Custom objects’, find AnyGantt, and drop a Project Chart on the sheet, below the filter panes we added before.

Add dimensions

To configure the Gantt chart, we need to add dimensions representing the hierarchical structure of our data.

We will use the following dimensions: ‘department_name’, ‘project_name’, and ‘task_name.

Add measures

The next step is adding measures.

Let’s use the ‘start_date’ and ‘finish_date’ fields.

These measures do not require aggregation, so we can use either the ‘Only()’ aggregation or just the name of the field.

Map data

Then we need to make sure that AnyGantt interprets our dates correctly.

Use the ‘Map as’ drop-down menu to map the ‘start_date’ field as ‘Actual Start’ and ‘finish_date’ as ‘Actual Finish’.

As you can see, you can also map a date as ‘Baseline Start’, ‘Baseline Finish’, ‘Actual Progress’, “Baseline Progress”, or “Data Field”.

Preliminary result

After these steps, we can already see a Gantt chart:

The data grid on the left displays the hierarchy of departments, projects, and tasks.

The timeline on the right displays the duration of tasks.

Show actual progress

Let’s add one more measure, ‘progress’, and map it as ‘Actual Progress’.

Please note that you do not need to aggregate it.

Now you can see progress bars.

Result: Gantt Chart in Qlik Sense, Built with Real Ease

That is it, the Gantt chart is ready:

In the ‘View’ mode, select a department or a project in a filter pane, and the Gantt chart will display the related tasks.

It is very easy!

Helpful Links

- Download the extension

- Explore demo apps (view live or download QVF)

- See the documentation

- Request a personalized live demo

Questions?

If you have any questions on how to create a Gantt chart or any other chart type using AnyChart's Extensions for Qlik Sense, drop us a note in the comments below or contact AnyChart's Support Team directly.