
Recent Documents

-

Migrating To Qlik Cloud Analytics

This Techspert Talks session covers: Demonstration of the Qlik Cloud Analytics Migration tool Advantages of Qlik Cloud SaaS Readiness Chapters...

-

Qlik NPrinting and the CVE-2025-32433 Erlang/OTP vulnerability

Erlang/Open Telecom Platform (OTP) has disclosed a critical security vulnerability: CVE-2025-32433.

Is Qlik NPrinting affected by CVE-2025-32433?

Resolution

Qlik NPrinting installs Erlang OTP as part of the RabbitMQ installation, which is essential to the correct functioning of the Qlik NPrinting services.

RabbitMQ does not use SSH, meaning the workaround documented in Unauthenticated Remote Code Execution in Erlang/OTP SSH is already applied. Consequently, Qlik NPrinting remains unaffected by CVE-2025-32433.

All future Qlik NPrinting versions from the 20th of May 2025 and onwards will include patched versions of OTP and fully address this vulnerability.

Environment

- Qlik NPrinting

-

Qlik Cloud: Data for Analysis usage not resetting at the start of a new month

Monthly monitoring of the data volume used in Qlik Cloud (Data for Analysis) is essential when using a capacity-based subscription.

This data is accessible in the Qlik Cloud Administration Center Home section:

For an overview of how the Data for Analysis is calculated, see Understanding the subscription value meters | Data for Analysis; its calculation considers the size of all resources on each day, and the day with the maximum size is treated as the high-water mark, which is then used for billing purposes.

However, you may sometimes notice that the usage does not decrease as expected, even after reducing your app data. In such cases, it is recommended to review unused or rarely reloaded apps, as the previous app reload size may still be used for the calculation.
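As an illustration of the high-water mark described above, the following minimal sketch picks the day with the largest total size from a set of daily measurements. The function name, the dictionary layout, and the sample figures are hypothetical; the actual meter is calculated by Qlik Cloud and may take more inputs into account.

```python
from datetime import date


def high_water_mark(daily_sizes_gb):
    """Return (day, size) for the day with the largest total size.

    daily_sizes_gb maps a date to the total size of all resources on that
    day (hypothetical structure, used only for illustration).
    """
    if not daily_sizes_gb:
        raise ValueError("no daily measurements supplied")
    peak_day = max(daily_sizes_gb, key=daily_sizes_gb.get)
    return peak_day, daily_sizes_gb[peak_day]


# Hypothetical daily totals in GB for the first days of a month.
usage = {
    date(2025, 5, 1): 40.0,
    date(2025, 5, 2): 55.5,  # a large reload happened on this day
    date(2025, 5, 3): 12.0,  # app data was reduced afterwards
}

peak_day, peak_size = high_water_mark(usage)
print(f"High-water mark: {peak_size} GB on {peak_day}")
```

The point of the sketch is that reducing data later in the month does not lower the figure: the single largest day determines the value.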

Resolution

To review the detailed usage, you can use a Consumption Report.

- Distribute a consumption report; see Distributing detailed consumption reports | help.qlik.com for details on how to achieve this.

- Review your data usage on the Data for Analysis sheet.

- If you observe unexpected usage in apps, such as apps that are reloaded infrequently, it is possible that the size is carried over from the previous reload.

To prevent the previous reload size from being carried over into the following month in similar use cases (specifically for apps that are not actively in use), a possible workaround is to reload apps using small dummy data to update the previous reload size of the apps.

Note that while offloading QVD files to, for example, S3 incurs no cost, any subsequent reload of those QVDs into Qlik Cloud will be counted toward Data for Analysis. Users should carefully evaluate whether this approach is beneficial.

Cause

Example case:

- Extract external data into an app during reload.

- Store the data as QVD files, and save the files outside Qlik Cloud (such as AWS S3).

- Drop the source tables from the data model at the end of the script.

- Reload completes; the final data model contains no tables.

The app is reloaded only once a month (or even less frequently) for the purpose of creating QVD files. At the end of the script, all tables are dropped, and the final app size is empty.

In this scenario, the usage of Data for Analysis is not reset in the following month, because the calculation considers the size of the data loaded during the reload and not only the final app size. The reload size therefore continues to be charged in the next month as the previous reload size.

Environment

- Qlik Cloud Analytics

-

How the Windows Authentication Pattern in Virtual Proxy enables form-based authe...


The Qlik Sense Virtual Proxy has two different authentication options by default: Forms and Windows Authentication. The Windows Authentication Pattern setting determines which one is used.

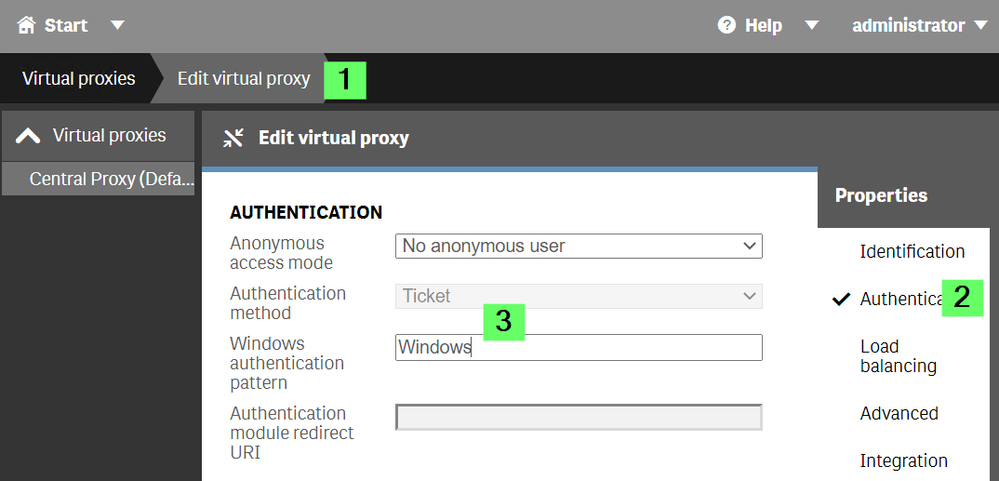

The setting is accessed through the Qlik Sense Management Console > Virtual Proxies > [Virtual Proxy] > Authentication

See Figure 1:

When a client connects to Qlik Sense, the browser sends a User-Agent string as part of the standard connection process.

The Qlik Sense Proxy service reads this string and checks for the presence of the value found in Windows Authentication Pattern. If the value is present, Windows authentication is used, and if the browser supports NTLM the user may be logged in automatically. If the automatic login fails, the user gets a pop-up asking for a username and password.

If the Windows Authentication Pattern value is not found, the proxy falls back to forms authentication, which presents a login form and always requires a username and password.

The default value for Windows Authentication Pattern is "Windows", which is present in all Windows browser user-agent strings. If you change it to, say, Chrome or Firefox, only those browsers will be offered Windows authentication. If you want to use Windows Authentication on all browsers, try setting it to another string found in all user-agents (besides Windows), for example, "Mozilla".
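The decision described above can be sketched as a simple substring check. This is only an illustration: the function name is hypothetical, and the assumption that the match is a plain, case-sensitive substring test is not confirmed by the article. The two User-Agent strings are typical examples, not values taken from Qlik Sense.

```python
def select_authentication(user_agent: str, pattern: str = "Windows") -> str:
    """Return "windows" if the pattern occurs in the User-Agent, else "forms".

    Assumption: the proxy performs a plain, case-sensitive substring match.
    """
    return "windows" if pattern in user_agent else "forms"


# Typical example User-Agent strings (illustrative only).
WINDOWS_CHROME = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
)
MAC_SAFARI = (
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Safari/605.1.15"
)

for name, agent in (("Windows/Chrome", WINDOWS_CHROME), ("macOS/Safari", MAC_SAFARI)):
    print(name, "default pattern:", select_authentication(agent))
    print(name, "pattern 'Mozilla':", select_authentication(agent, "Mozilla"))
```

With the default pattern only the Windows browser is offered Windows authentication, while a pattern of "Mozilla" matches both example strings.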

-

Qlik Sense Client Managed: Adjust the MaxHttpHeaderSize for the Micro Services r...

When using SAML or ticket authentication in Qlik Sense, some users belonging to a large number of groups see the error 431 Request header fields too large on the hub and cannot proceed further.

Resolution

The information in this article is provided as-is and to be used at own discretion. Depending on tool(s) used, customization(s), and/or other factors ongoing support on the solution below may not be provided by Qlik Support.

The default setting will still be a header size of 8192 bytes. The fix adds support for a configurable MaxHttpHeaderSize.

Steps:

- To configure, add it at the top section of the file under [globals] (C:\Program Files\Qlik\Sense\ServiceDispatcher\service.conf)

[globals]
LogPath="${ALLUSERSPROFILE}\Qlik\Sense\Log"
(...)
MaxHttpHeaderSize=16384

- Once the change is done, save the file and restart the Qlik Service Dispatcher service.

Note: The above value (16384) is an example. You may need to put more depending on the total number of characters of all the AD groups to which the user belongs. The max value is 65534.
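As a rough aid for choosing a value, the sketch below estimates a header size from the combined length of a user's group names and clamps it between the default (8192) and the maximum (65534) stated above. The function name, the fixed overhead allowance, and the idea that the header grows one byte per character of group name are assumptions made for illustration; the real header content depends on the authentication setup.

```python
DEFAULT_HEADER_SIZE = 8192   # default stated in the article
MAX_HEADER_SIZE = 65534      # maximum stated in the article


def suggest_max_http_header_size(group_names, overhead_bytes=2048):
    """Estimate a MaxHttpHeaderSize value from a user's group names.

    overhead_bytes is a guessed allowance for the other headers
    (hypothetical value, adjust to your environment).
    """
    estimated = sum(len(name.encode("utf-8")) for name in group_names)
    estimated += overhead_bytes
    # Never go below the default or above the documented maximum.
    return min(max(estimated, DEFAULT_HEADER_SIZE), MAX_HEADER_SIZE)


# Hypothetical user who belongs to 400 groups with 30-character names.
groups = ["G" * 30 for _ in range(400)]
print(suggest_max_http_header_size(groups))
```

Treat the result as a starting point only, and confirm it by testing with an affected user.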

Environment:

Qlik Sense Enterprise on Windows


-

Qlik NPrinting tasks don't finish unless the Qlik NPrinting server is restarted: ...

If Qlik NPrinting tasks do not complete and will only continue if the operating system is restarted, review the Qlik NPrinting log files for the following error:

io: read/write on closed pipe

This error indicates the Windows Operating System is damaged (after a faulty restart, a failed upgrade, or similar root causes).

Resolution

Reinstalling Qlik NPrinting will not be sufficient to resolve the error. The Operating System will need to be reinstalled.

Example solution:

- Take a backup of your Qlik NPrinting database; see Backup and restore Qlik NPrinting for details.

- Store the backup in a location off the current server

- Reinstall Windows

- Restore your Qlik NPrinting database; see Backup and restore Qlik NPrinting for details.

Environment

- Qlik NPrinting

-

How to Decrypt Qlik Talend Data Integration Verbose Task Log Files

To decrypt the Qlik Talend Data Integration (QCDI) verbose task log files:

- Open the Qlik Talend Data Integration Project in the Web UI

- Navigate to your project in the Qlik Talend Data Integration (formerly QCDI) browser interface

- Locate the Task Name

- Go to the Tasks tab

- Click on the ... (ellipsis) button next to the relevant task

- Select View task logs

- Identify the unique task name (example: TASK_aRBItFnknSU0vR5LX0AgkA)

- SSH into the Linux Gateway machine using the qlik user and log in

- Navigate to the movement/bin directory:

cd /opt/qlik/gateway/movement/bin

- Source the Qlik Talend Data Integration environment:

source arep_login.sh

- Decrypt the task log file by running the repctl dumplog command:

repctl dumplog "/opt/qlik/gateway/movement/data/logs/TASK_aRBItFnknSU0vR5LX0AgkA.log" log_key="/opt/qlik/gateway/movement/data/tasks/TASK_aRBItFnknSU0vR5LX0AgkA/log.key" > "/opt/qlik/gateway/movement/data/logs/TASK_aRBItFnknSU0vR5LX0AgkA_Decrypted.log"

In the example command, replace TASK_aRBItFnknSU0vR5LX0AgkA with your actual task name.

- (Optional) Compress and download the decrypted logs

To bundle and download the decrypted logs:

tar -czf decryptedLogs.tar.gz TASK_aRBItFnknSU0vR5LX0AgkA_Decrypted.log reptask_TASK_aRBItFnknSU0vR5LX0AgkA__250806061702_Decrypted.log

Then, download decryptedLogs.tar.gz from the Linux gateway and send it to Qlik Support.
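Because the task name appears three times in the dumplog command, it is easy to mistype. The following sketch builds the command string from a task name using the paths shown in the example above. The helper name is hypothetical, and the sketch only prints the command; it still has to be run from the movement/bin directory after sourcing arep_login.sh.

```python
import shlex

# Data directory taken from the example command in this article.
GATEWAY_DATA = "/opt/qlik/gateway/movement/data"


def build_dumplog_command(task_name: str) -> str:
    """Return the repctl dumplog command line for the given task name."""
    if not task_name or "/" in task_name or any(c.isspace() for c in task_name):
        raise ValueError(f"unexpected task name: {task_name!r}")
    log_file = f"{GATEWAY_DATA}/logs/{task_name}.log"
    key_file = f"{GATEWAY_DATA}/tasks/{task_name}/log.key"
    out_file = f"{GATEWAY_DATA}/logs/{task_name}_Decrypted.log"
    # shlex.quote adds quoting only when a path contains shell-special characters.
    return (
        f"repctl dumplog {shlex.quote(log_file)} "
        f"log_key={shlex.quote(key_file)} > {shlex.quote(out_file)}"
    )


print(build_dumplog_command("TASK_aRBItFnknSU0vR5LX0AgkA"))
```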

Related Content

How to Decrypt Qlik Replicate Verbose Task Log Files

Environment

- Qlik Talend Data Integration (QCDI)

-

How To Collect Log Files From Qlik Sense Enterprise on Windows

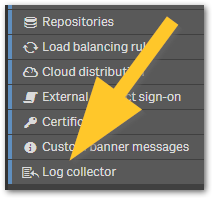

The Qlik Sense log files can be easily collected using the Log Collector.

The Log Collector is embedded in the Qlik Sense Management Console. It is the last item listed in the Configure Systems section.

For instructions on how to use the Log Collector, see Log collector (help.qlik.com).

Content

- Requirements

- Using the Log Collector

- What does the Qlik Sense Log Collector access?

- Manually collecting log files

- Older Qlik Sense Versions (3.1 and earlier)

Requirements

The user must be a root admin and have administrative permissions.

Using the Log Collector

The best way to gather these logs is to use the Qlik Sense Log Collector. If the tool is not included in your install, it can be downloaded from this article.

- Login to Windows Server on Qlik Sense node

- Open Windows File Explorer

- Navigate to Log Collector folder at C:\Program Files\Qlik\Sense\Tools\QlikSenseLogCollector

- Press Shift key and right click on QlikSenseLogCollector.exe

- Select Run as different user

- Enter Qlik Sense service account credentials.

- Configure log collection

- Date range to collect logs from (minimum of 5 days if possible)

- Support case number to include in ZIP file name

- Select options

- Include Archive logs

- Click Collect

- If Qlik Sense is not automatically accessible on the node, further options are presented.

- Qlik Sense is running and on this host: select this option to get more options on automatic log collection

- Qlik Sense is not running or accessible: this option allows you to define the path to the local Qlik Sense log folder

- Qlik Sense is located elsewhere: this option allows you to enter the FQDN of a remote Qlik Sense server

- Wait until collection is finished

- Click Close

- Open generated ZIP file in C:\Program Files\Qlik\Sense\Tools\QlikSenseLogCollector

Note: there is no need to unzip the file; simply double-click the ZIP file to browse its content.

- Confirm it contains JSON and TXT files, if system info options were enabled in Step 6

- Confirm there is one folder per node in the deployment, named in same way as the node

- Confirm there is a Log folder representing Archived Logs from persistent storage

- If ZIP content is incomplete, repeat steps above or contact support for further guidance

- If ZIP content is accurate, attach ZIP file to support case for further analysis by Qlik

What does the Qlik Sense Log Collector access?

This list provides an overview of what system information the Qlik Sense Log collector accesses and collects.

- WhoAmI (information)

C:\Windows\System32\whoami.exe

- Port status (information)

C:\Windows\System32\netstat.exe -anob

- Process Info (information)

C:\Windows\System32\tasklist.exe /v

- Firewall Info (information)

C:\Windows\System32\netsh.exe advfirewall show allprofiles

- Network Info (information)

C:\Windows\System32\ipconfig.exe /all

- IIS Status (iisreset /STATUS Display the status of all Internet services.)

C:\Windows\System32\iisreset.exe /status

- Proxy Activated (information)

C:\Windows\System32\reg.exe query "HKEY_CURRENT_USER\Software\Microsoft\Windows\CurrentVersion\Internet Settings" | find /i "ProxyEnable"

- Proxy Server (information)

C:\Windows\System32\reg.exe query "HKEY_CURRENT_USER\Software\Microsoft\Windows\CurrentVersion\Internet Settings" | find /i "proxyserver"

- Proxy AutoConfig (information)

C:\Windows\System32\reg.exe query "HKEY_CURRENT_USER\Software\Microsoft\Windows\CurrentVersion\Internet Settings" | find /i "AutoConfigURL"

- Proxy Override (information)

C:\Windows\System32\reg.exe query "HKEY_CURRENT_USER\Software\Microsoft\Windows\CurrentVersion\Internet Settings" | find /i "ProxyOverride"

- Internet Connection (information)

C:\Windows\System32\ping.exe google.com

- Drive Mappings (information)

C:\Windows\System32\net.exe use

- Drive Info (information)

C:\Windows\System32\wbem\wmic.exe /OUTPUT:STDOUT logicaldisk get size

- Localgroup Administrators (information)

C:\Windows\System32\net.exe localgroup "Administrators"

- Localgroup Sense Service Users (information)

C:\Windows\System32\net.exe localgroup "Qlik Sense Service Users"

- Localgroup Performance Monitor Users (information)

C:\Windows\System32\net.exe localgroup "Performance Monitor users"

- Localgroup Qv Administrators (information)

C:\Windows\System32\net.exe localgroup "QlikView Administrators"

- Localgroup Qv Api (information)

C:\Windows\System32\net.exe localgroup "QlikView Management API"

- System Information (information)

C:\Windows\System32\systeminfo.exe

- Program List (information)

C:\Windows\System32\wbem\wmic.exe /OUTPUT:STDOUT product get name

- Service List (information)

C:\Windows\System32\WindowsPowerShell\v1.0\powershell.exe -command "gwmi win32_service | select Started

- Group Policy (information)

C:\Windows\System32\gpresult.exe /z

- Local Policies - User Rights Assignment (information)

C:\Windows\System32\secedit.exe /export /areas USER_RIGHTS /cfg

- Local Policies - Security Options (information)

C:\Windows\System32\secedit.exe /export /areas

- Certificate - Current User(Personal) (information)

C:\Windows\System32\WindowsPowerShell\v1.0\powershell.exe -command "Get-ChildItem -Recurse Cert:\currentuser\my | Format-list"

- Certificate - Current User(Trusted Root) (information)

C:\Windows\System32\WindowsPowerShell\v1.0\powershell.exe -command "Get-ChildItem -Recurse Cert:\currentuser\Root | Format-list"

- Certificate - Local Computer(Personal) (information)

C:\Windows\System32\WindowsPowerShell\v1.0\powershell.exe -command "Get-ChildItem -Recurse Cert:\localmachine\my | Format-list"

- Certificate - Local Computer(Trusted Root) (information)

C:\Windows\System32\WindowsPowerShell\v1.0\powershell.exe -command "Get-ChildItem -Recurse Cert:\localmachine\Root | Format-list"

- HotFixes (information)

$FormatEnumerationLimit=-1;$Session = New-Object -ComObject Microsoft.Update.Session;$Searcher = $Session.CreateUpdateSearcher();$historyCount = $Searcher.GetTotalHistoryCount();$Searcher.QueryHistory(0, $historyCount) | Select-Object Title, Description, Date, @{name="Operation"; expression={switch($_.operation){1 {"Installation"}; 2 {"Uninstallation"}; 3 {"Other"}}}} | out-string -Width 1024

- UrlAclList (information)

C:\Windows\System32\netsh.exe http show urlacl

- PortCertList (information)

C:\Windows\System32\netsh.exe http show sslcert

Manually collecting log files

Versions of Qlik Sense Enterprise on Windows prior to May 2021 do not include the Log Collector.

If the Qlik Sense Log collector does not work then you can manually gather the logs.

- Default storage (before archiving): C:\ProgramData\Qlik\Sense\Log

- Default archive storage: C:\ProgramData\Qlik\Sense\Repository\Archived Logs

- Manual retrieval of Reload logs: How to find the Script (Reload) logs in Qlik Sense Enterprise

For information on when logs are archived, see How logging works in Qlik Sense Enterprise on Windows.
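When collecting logs manually, it helps to bundle only recent files. The sketch below zips every file under a log folder that was modified within the last few days. The function name and the five-day default are illustrative (the default mirrors the date range suggested for the Log Collector above); the folder to pass in is one of the default locations listed in this section.

```python
import time
import zipfile
from pathlib import Path


def zip_recent_logs(log_root, zip_path, days=5):
    """Zip files under log_root modified in the last `days` days.

    Returns the number of files added. Write zip_path outside log_root so
    the archive does not end up including itself.
    """
    log_root = Path(log_root)
    cutoff = time.time() - days * 86400
    added = 0
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for file in sorted(log_root.rglob("*")):
            if file.is_file() and file.stat().st_mtime >= cutoff:
                # Store paths relative to the log root to keep the folder layout.
                archive.write(file, file.relative_to(log_root).as_posix())
                added += 1
    return added


# Example call (paths are illustrative):
# zip_recent_logs(r"C:\ProgramData\Qlik\Sense\Log", r"C:\Temp\sense_logs.zip")
```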

Older Qlik Sense Versions (3.1 and earlier)

| Persistence Mechanism | Current Logs (Active Logs) | Archived Logs |
| --- | --- | --- |
| Shared (Sense 3.1 and newer) | C:\ProgramData\Qlik\Sense\Log | Defined in the QMC under CONFIGURE SYSTEM > Service Cluster > Archived logs root folder. Example: \\QLIKSERVER\QlikShare\ArchivedLogs |
| Synchronized (Sense 3.1 and older) | C:\ProgramData\Qlik\Sense\Log | C:\ProgramData\Qlik\Sense\Repository\Archived Logs |

Note: Depending on how long the system has been running, this folder can be very large, so you will want to include only logs from the time frame relevant to your particular issue, preferably starting a day before the issue began occurring.


-

Qlik Talend Product: When attempting to run a task containing a SQLite component...

When a task containing a SQLite component is run on a Remote Engine, it fails with the following error relating to SQLite connections:

tDBConnection_2 Error opening connection java.sql.SQLException: Error opening connection at org.sqlite.SQLiteConnection.open(SQLiteConnection.java:283) at org.sqlite.SQLiteConnection.

Caused by: org.sqlite.NativeLibraryNotFoundException: No native library found for os.name=Linux, os.arch=x86_64, paths=[/org/sqlite/native/Linux/x86_64:/usr/java/packages/lib:/usr/lib/x86_64-linux-gnu/jni:/lib/x86_64-linux-gnu:/usr/lib/x86_64-linux-gnu:/usr/lib/jni:/lib:/usr/lib]

Resolution

To resolve this, ensure that the system's temp directory has both write and execute permissions for the user running the process. This can be done by configuring the JVM to use a custom temp directory with appropriate permissions in the run profile.

For example:

-Djava.io.tmpdir=/media/local/temp

Where "/media/local/temp" has executable permissions

Cause

This issue arises because the SQLite JDBC driver (such as sqlite-jdbc) includes native libraries (like libsqlitejdbc.so) within the .jar file. At runtime, these native libraries are extracted to a temporary directory (usually /tmp or the path defined by the java.io.tmpdir system property) and loaded by the JVM to enable the connection to the SQLite database. If the temporary directory does not have the appropriate executable permissions, the driver fails to load the native libraries, leading to a connection error.
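Before changing the run profile, the permissions on a candidate temp directory can be checked with a short script such as the sketch below. The function name is hypothetical. Note that `os.access` only tests the permission bits for the current user, so run it as the user that runs the Remote Engine; it does not detect a file system mounted with the noexec option, which would also need to be checked.

```python
import os
import tempfile


def tmpdir_is_usable(path: str) -> bool:
    """Return True if path is a directory the current user can write to and enter."""
    return os.path.isdir(path) and os.access(path, os.W_OK | os.X_OK)


# Check the default temp directory of the current user; pass the directory
# configured in -Djava.io.tmpdir instead to check a custom location.
candidate = tempfile.gettempdir()
print(candidate, "usable:", tmpdir_is_usable(candidate))
```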

Environment

-

The Qlik Sense Monitoring Applications for Cloud and On Premise

Qlik Sense Enterprise Client-Managed offers a range of Monitoring Applications that come pre-installed with the product.

Qlik Cloud offers the Data Capacity Reporting App for customers on a capacity subscription, and additionally customers can opt to leverage the Qlik Cloud Monitoring apps.

This article provides information on available apps for each platform.

Content:

- Qlik Cloud

- Data Capacity Reporting App

- Access Evaluator for Qlik Cloud

- Answers Analyzer for Qlik Cloud

- App Analyzer for Qlik Cloud

- Automation Analyzer for Qlik Cloud

- Entitlement Analyzer for Qlik Cloud

- Reload Analyzer for Qlik Cloud

- Report Analyzer for Qlik Cloud

- How to automate the Qlik Cloud Monitoring Apps

- Other Qlik Cloud Monitoring Apps

- OEM Dashboard for Qlik Cloud

- Monitoring Apps for Qlik Sense Enterprise on Windows

- Operations Monitor and License Monitor

- App Metadata Analyzer

- The Monitoring & Administration Topic Group

- Other Apps

Qlik Cloud

Data Capacity Reporting App

The Data Capacity Reporting App is a Qlik Sense application built for Qlik Cloud, which helps you to monitor the capacity consumption for your license at both a consolidated and a detailed level. It is available for deployment via the administration activity center in a tenant with a capacity subscription.

The Data Capacity Reporting App is a fully supported app distributed within the product. For more information, see Qlik Help.

Access Evaluator for Qlik Cloud

The Access Evaluator is a Qlik Sense application built for Qlik Cloud, which helps you to analyze user roles, access, and permissions across a tenant.

The app provides:

- User and group access to spaces

- User, group, and share access to apps

- User roles and associated role permissions

- Group assignments to roles

For more information, see Qlik Cloud Access Evaluator.

Answers Analyzer for Qlik Cloud

The Answers Analyzer provides a comprehensive Qlik Sense dashboard to analyze Qlik Answers metadata across a Qlik Cloud tenant.

It provides the ability to:

- Track user questions across knowledgebases, assistants, and source documents

- Analyze user behavior to see what types of questions users are asking about what content

- Optimize knowledgebase sizes and increase answer accuracy by removing inaccurate, unused, and unreferenced documents

- Track and monitor page size to quota

- Ensure that data is kept up to date by monitoring knowledgebase index times

- Tie alerts into metrics (e.g. a knowledgebase hasn't been updated in over X days)

For more information, see Qlik Cloud Answers Analyzer.

App Analyzer for Qlik Cloud

The App Analyzer is a Qlik Sense application built for Qlik Cloud, which helps you to analyze and monitor Qlik Sense applications in your tenant.

The app provides:

- User sessions by app, sheets viewed

- Large App consumption monitoring

- App, Table and Field memory footprints

- Synthetic keys and island tables to help improve app development

- Threshold analysis for fields, tables, rows and more

- Reload times and peak RAM utilization by app

For more information, see Qlik Cloud App Analyzer.

Automation Analyzer for Qlik Cloud

The Automation Analyzer is a Qlik Sense application built for Qlik Cloud, which helps you to analyze and monitor Qlik Application Automation runs in your tenant.

Some of the benefits of this application are as follows:

- Track number of automations by type and by user

- Analyze concurrent automations

- Compare current month vs prior month runs

- Analyze failed runs - view all schedules and their statuses

- Tie in Qlik Alerting

For more information, see Qlik Cloud Automation Analyzer.

Entitlement Analyzer for Qlik Cloud

The Entitlement Analyzer is a Qlik Sense application built for Qlik Cloud, which provides Entitlement usage overview for your Qlik Cloud tenant for user-based subscriptions.

The app provides:

- Which users are accessing which apps

- Consumption of Professional, Analyzer and Analyzer Capacity entitlements

- Whether you have the correct entitlements assigned to each of your users

- Where your Analyzer Capacity entitlements are being consumed, and forecasted usage

For more information, see The Entitlement Analyzer.

Reload Analyzer for Qlik Cloud

The Reload Analyzer is a Qlik Sense application built for Qlik Cloud, which provides an overview of data refreshes for your Qlik Cloud tenant.

The app provides:

- The number of reloads by type (Scheduled, Hub, In App, API) and by user

- Data connections and used files of each app’s most recent reload

- Reload concurrency and peak reload RAM

- Reload tasks and their respective statuses

For more information, see Qlik Cloud Reload Analyzer.

Report Analyzer for Qlik Cloud

The Report Analyzer provides a comprehensive dashboard to analyze metered report metadata across a Qlik Cloud tenant.

The app provides:

- Current Month Reports Metric

- History of Reports Metric

- Breakdown of Reports Metric by App, Event, Executor (and time periods)

- Failed Reports

- Report Execution Duration

For more information, see Qlik Cloud Report Analyzer.

How to automate the Qlik Cloud Monitoring Apps

Do you want to automate the installation, upgrade, and management of your Qlik Cloud Monitoring apps? With the Qlik Cloud Monitoring Apps Workflow, made possible through Qlik's Application Automation, you can:

- Install/update the apps with a fully guided, click-through installer using an out-of-the-box Qlik Application Automation template.

- Programmatically rotate the API key that is required for the data connection on a schedule using an out-of-the-box Qlik Application Automation template. This ensures that the data connection is always operational.

- Get alerted whenever a new version of a monitoring app is available using Qlik Data Alerts.

For more information and usage instructions, see Qlik Cloud Monitoring Apps Workflow Guide.

Other Qlik Cloud Monitoring Apps

OEM Dashboard for Qlik Cloud

The OEM Dashboard is a Qlik Sense application for Qlik Cloud designed for OEM partners to centrally monitor usage data across their customers’ tenants. It provides a single pane to review numerous dimensions and measures, compare trends, and quickly spot issues across many different areas.

Although this dashboard is designed for OEMs, it can also be used by partners and customers who manage more than one tenant in Qlik Cloud.

For more information and to download the app and usage instructions, see Qlik Cloud OEM Dashboard & Console Settings Collector.

With the exception of the Data Capacity Reporting App, all Qlik Cloud monitoring applications are provided as-is and are not supported by Qlik. Over time, the APIs and metrics used by the apps may change, so it is advised to monitor each repository for updates and to update the apps promptly when new versions are available.

If you have issues while using these apps, support is provided on a best-efforts basis by contributors to the repositories on GitHub.

Monitoring Apps for Qlik Sense Enterprise on Windows

Operations Monitor and License Monitor

The Operations Monitor loads service logs to populate charts covering performance history of hardware utilization, active users, app sessions, results of reload tasks, and errors and warnings. It also tracks changes made in the QMC that affect the Operations Monitor.

The License Monitor loads service logs to populate charts and tables covering token allocation, usage of login and user passes, and errors and warnings.

For a more detailed description of the sheets and visualizations in both apps, visit the story About the License Monitor or About the Operations Monitor that is available from the app overview page, under Stories.

Basic information can be found here:

The License Monitor

The Operations Monitor

Both apps come pre-installed with Qlik Sense.

If a direct download is required: Sense License Monitor | Sense Operations Monitor. Note that Support can only be provided for Apps pre-installed with your latest version of Qlik Sense Enterprise on Windows.

App Metadata Analyzer

The App Metadata Analyzer app provides a dashboard to analyze Qlik Sense application metadata across your Qlik Sense Enterprise deployment. It gives you a holistic view of all your Qlik Sense apps, including granular level detail of an app's data model and its resource utilization.

Basic information can be found here:

App Metadata Analyzer (help.qlik.com)

For more details and best practices, see:

App Metadata Analyzer (Admin Playbook)

The app comes pre-installed with Qlik Sense.

The Monitoring & Administration Topic Group

Looking to discuss the Monitoring Applications? Here we share key versions of the Sense Monitor Apps and the latest QV Governance Dashboard as well as discuss best practices, post video tutorials, and ask questions.

Other Apps

LogAnalysis App: The Qlik Sense app for troubleshooting Qlik Sense Enterprise on Windows logs

Sessions Monitor, Reloads-Monitor, Log-Monitor

Connectors Log Analyzer

All Other Apps are provided as-is and no ongoing support will be provided by Qlik Support.

-

Qlik Cloud Analytics: Manual Reload results may not be displayed in the Schedule...

The reload history found in the Catalog (1) > App menu (2) > Schedule (3) > History (4) does not display results of manually initiated reloads.

Resolution

To review manual triggers:

- Go to the Catalog

- Click the App menu (...)

- Open Details

- Open Reload History

Improvements to this section of the tenant UI are planned for the future. No details or estimates can be provided as of now.

Cause

This is a current limitation. Only reloads triggered on a schedule will be displayed in this section of the tenant UI.

Environment

- Qlik Cloud Analytics

Related Content

Cannot download Qlik Cloud reload logs, option greyed out / missing

-

Qlik Cloud Analytics Basic Users are automatically promoted to Full User entitle...

Users previously assigned a Basic User entitlement have unexpectedly been promoted to Full User entitlement.

This is working as expected and will happen when a Basic User is granted any additional permissions. Source: Types of user entitlements.

An exception is the Collaboration Platform role, see Qlik Cloud Analytics: Assigning the Collaboration Platform User role to a Basic User does not promote it to full.

How to determine what elevates a user

To determine what promotes a user to a Full User:

- Log in and go to the Cloud Administration center

- Go to User Management

- Hover (a) over the user's Information icon

It is also possible to use the Access Evaluator App to have a more holistic overview over assignments.

What to remove

To avoid users being promoted to Full Users, remove any assignment, both in single spaces and at a global level.

Spaces

Verify the users are not assigned any roles other than Has restricted view.

Be careful when using the Anyone group, since assigning any other role to this group will promote all users to Full.

This includes the default data space: Default_Data_Space created on tenant setup. Remove the Anyone member:

- Go to Spaces

- Click the ellipses menu (...) and choose Manage members

- Remove Anyone by opening the ellipsis menu (...) and clicking Remove

Global

Turn off Auto assign for all Security roles in the Administration Center.

- Open the Administration Center

- Open Users

- Switch to the Permissions tab

- Switch Auto assign to Off on all Security roles:

Specific users

If a user was manually assigned a role, the role has to be removed again manually.

- Open the Administration Center

- Open Users

- Switch to the All Users tab if not already selected

- Locate the user and click the ... menu (a), then choose Edit roles (b) and remove all previously assigned roles

Environment

- Qlik Cloud Analytics

-

Qlik Sense Enterprise on Windows error when exporting apps with Qlik CLI: 400 - ...

Using Qlik-CLI to export an app without the '--exportScope all' parameter fails with the error:

Error: 400 - Bad Request - Qlik Sense

This is observed after an upgrade to Qlik Sense Enterprise on Windows November 2024 patch 10 or any later version. The error is intermittent and the export may succeed if executed several times.

Reviewing the output shows Status: 201 Created, followed by the error after retrieving the app from the temporary download path:

> qlik qrs app export create "dbb1841d-f3f6-4a49-b73f-0a24cc003b1b" --exportScope all --output-file "C:/temp/App3.qvf" --insecure --verbose

Insecure flag set, server certificate will not be verified

POST https://sense/jwt/qrs/app/dbb1841d-f3f6-4a49-b73f-0a24cc003b1b/export/e237e6c8-d367-45d3-9eff-9f191b3f1ef5?exportScope=all&xrfKey=4B21426C21AE6B85...

Status: 201 Created

{

"exportToken": "e237e6c8-d367-45d3-9eff-9f191b3f1ef5",

"appId": "dbb1841d-f3f6-4a49-b73f-0a24cc003b1b",

"downloadPath": "/tempcontent/f8deec7c-5ab4-4462-879b-38990febbd1b/Test.qvf?serverNodeId=ae6a7f27-1abd-439e-b4da-0a0b0f4a3e61",...

}

GET https://sense/jwt/tempcontent/f8deec7c-5ab4-4462-879b-38990febbd1b/Test.qvf?serverNodeId=ae6a7f27-1abd-439e-b4da-0a0b0f4a3e61&xrfKey=4B21426C21AE6B85...

Status: 400 Bad Request

400 - Bad Request - Qlik Sense

Error: 400 - Bad Request - Qlik Sense

Resolution

This is being investigated by Qlik as SUPPORT-3800.

Workaround

Delete the content of the cookie store (~/.qlik/.cookiestore) when the error occurs.

In Windows, this can be found in %USERPROFILE%\.qlik\.cookiestore

A batch job can be created to automate the deletion.
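As one way to automate the workaround, the following is a minimal sketch in Python rather than a batch file. The function name clear_cookie_store is hypothetical, and the sketch assumes the cookie store is either a folder of cookie files or a single file, since the article does not say which; it handles both cases.

```python
from pathlib import Path
import shutil


def clear_cookie_store(store: Path) -> int:
    """Delete everything inside the cookie store and return how many entries were removed."""
    if not store.exists():
        return 0  # nothing to clean up
    if store.is_file():
        # Assumption: if the store is a single file, removing the file clears it
        store.unlink()
        return 1
    removed = 0
    for entry in store.iterdir():
        if entry.is_dir() and not entry.is_symlink():
            shutil.rmtree(entry)  # remove nested folders recursively
        else:
            entry.unlink()  # remove plain files and symlinks
        removed += 1
    return removed


if __name__ == "__main__":
    # Path.home() resolves to %USERPROFILE% on Windows and ~ on other systems
    default_store = Path.home() / ".qlik" / ".cookiestore"
    count = clear_cookie_store(default_store)
    print(f"Removed {count} item(s) from {default_store}")
```

The script could be scheduled, or run just before each export, so that a stale cookie is never reused.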

Cause

This is caused by an incorrect cookie handling by Qlik-CLI. While exporting the app, the responses include the Set-Cookie header, but qlik-cli fails to update the cookie store with the new cookie.

Environment

- Qlik Sense Enterprise on Windows

-

Qlik Talend Cloud: Can't retrieve any project from Cloud, please ask for help f...

When Talend Studio connects to TMC, it cannot retrieve any project from Cloud and a warning appears stating:

"Can't retrieve any project from Cloud, please ask for help from the administrator."

Cause

The user who is connecting to TMC has not been assigned any projects.

Resolution

Assign this user to projects within TMC. For the detailed process, refer to the Assigning users to projects documentation.

Related Content

Qlik Talend Cloud: Unable to connect Talend Studio to Talend Cloud with SSO enabled

Environment

- Talend Cloud 7.3.1, 8.0.1

-

Security Rule Example: How to show data model viewer for published apps

How to grant users access to the Data Model Viewer.

With default security rules and settings, users cannot see the data model for published apps. However, this can be achieved by creating or updating security rules through the Qlik Sense Management Console.

Resolution

Option 1: Create a new Security Rule

- Go to the Qlik Sense Management Console

- Open Security Rules

- Click Create new

- Create the following rule:

- Name = DataModelViewers

- Description = A description of your choice.

- Resource filter = App_*

- Actions = "Read","Update"

- Conditions = Users of your choice or a previously defined role you will tag the users with.

- Context = Both in hub and QMC or Only in hub

- Click Apply

Option 2: Tagging users as ContentAdmins

This requires a rework of the ContentAdmin rule and will provide far more permissions to users than Option 1. See ContentAdmin for details on what a ContentAdmin is allowed to do.

- Tag the users you want to be able to see data models with the ContentAdmin role.

- Go to the Security Rules

- Locate the default ContentAdmin rule and open it

- Modify the rule by changing QMC only to Both in hub and QMC

- Click Apply

-

Security Rule Requirements for Promoting Community Sheets

In this scenario, the administrator would like to allow a user or set of users to have the needed permissions to promote community sheets to be a base sheet.

Note: The ability to promote a Community sheet to a Base sheet also comes with the ability to demote a Base sheet to a Community sheet.

Environment:

- Qlik Sense Enterprise June 2018 or higher to leverage the new functionality present in those builds

Resolution:

- From a security rule perspective, the user or set of users needs the Approve action allowed for the relevant app objects.

- For an example way to accomplish this:

- Resource Filter: App.Object_*

- Actions: Approve

- Conditions: ((user.name="Kyle Cadice" and resource.app.stream.name="Everyone"))

- Context: Hub

This rule provides a single named user the right to promote Community sheets to become base sheets for apps in the Everyone stream. Further customization can be made to restrict this right to specific apps (e.g. ((user.name="Kyle Cadice" and resource.app.id="d0c33707-0836-4ab4-b15f-46bb2e02eda4"))), to provide the right to users of a particular group (e.g. ((user.group="QlikSenseDevelopers" and resource.app.stream.name="Everyone"))), or to provide the right to a named user for all apps (e.g. ((user.name="Kyle Cadice"))).

We in Qlik Support have virtually no scope when it comes to debugging or writing custom rules for customers. That level of implementation advice needs to be handled by the folks in Professional Services or Presales. That being said, this example is provided for demonstration purposes to explain specific scenarios. No Support or maintenance is implied or provided. Further customization is expected to be necessary and it is the responsibility of the end administrator to test and implement an appropriate rule for their specific use case.

-

What version of Qlik Sense Enterprise on Windows am I running?

Where can I find the installed version or service release of my Qlik Sense Enterprise on a Windows server?

You can locate the Qlik Sense Enterprise on Windows version in four locations:

- The Qlik Sense Hub

- The Qlik Sense Management Console

- The Windows Installed Programs menu

- The Engine.exe on disk

The Qlik Sense Hub

This option is only available for versions of Qlik Sense before Qlik Sense May 2025 Patch 3.

- Open the Qlik Sense Hub

- Locate and click the Profile on the landing screen (corner on the right)

- Click the About link

- The hub will display the installed version

The Qlik Sense Management Console

- Open the Qlik Sense Management Console.

- On the start screen, look in the bottom right corner for the version number.

The Windows Installed Programs menu

- Open the Windows Uninstall or change a program menu

- Expand the window until the version is visible

The Engine.exe

- Open a Windows File explorer

- Navigate to C:\Program Files\Qlik\Sense\Engine

- Right-click the Engine.exe

- Click Properties

- Switch to the Details tab

- The File version will be listed

Qlik Cloud Analytics does not list versions as the cloud version is always identical and current across the platform.

-

How to enable Qlik Sense QIX performance logging and use the Telemetry Dashboard

As of the February 2018 release of Qlik Sense, it is possible to capture granular usage metrics from the Qlik in-memory engine based on configurable thresholds. This provides the ability to capture CPU and RAM utilization of individual chart objects, CPU and RAM utilization of reload tasks, and more.

Also see Telemetry logging for Qlik Sense Administrators in Qlik's Help site.

Enable Telemetry Logging

- In the Qlik Sense Management Console, navigate to Engines > choose an engine > Logging > QIX Performance log level. Choose a value:

- Off: No logging will occur

- Error: Activity meeting the ‘error’ threshold will be logged

- Warning: Activity meeting the ‘error’ and ‘warning’ thresholds will be logged

- Info: All activity will be logged

Note that log levels Fatal and Debug are not applicable in this scenario. Also note that the Info log level should be used only during troubleshooting as it can produce very large log files. It is recommended during normal operations to use the Error or Warning settings.

- Repeat for each engine for which telemetry should be enabled.

Set Threshold Parameters

- Edit C:\ProgramData\Qlik\Sense\Engine\Settings.ini. If the file does not exist, create it. You may need to open the file as an administrator to make changes.

- Set the values below. It is recommended to start with these threshold values and increase or decrease them as you become more aware of how your particular environment performs. Values that are too low will create very large log files.

[Settings 7]

ErrorPeakMemory=2147483648

WarningPeakMemory=1073741824

ErrorProcessTimeMs=60000

WarningProcessTimeMs=30000

- Save and close the file.

- Restart the Qlik Sense Engine Service Windows service.

- Repeat for each engine for which telemetry should be enabled.

Parameter Descriptions

- ErrorPeakMemory: Default 2147483648 bytes (2 GB). If an engine operation requires more than this value of Peak Memory, a record is logged with log level ‘error’. Peak Memory is the maximum, transient amount of RAM an operation uses.

- WarningPeakMemory: Default 1073741824 bytes (1 GB). If an engine operation requires more than this value of Peak Memory, a record is logged with log level ‘warning’. Peak Memory is the maximum, transient amount of RAM an operation uses.

- ErrorProcessTimeMs: Default 60000 milliseconds (60 seconds). If an engine operation requires more than this value of process time, a record is logged with log level ‘error’. Process Time is the end-to-end clock time of a request.

- WarningProcessTimeMs: Default 30000 milliseconds (30 seconds). If an engine operation requires more than this value of process time, a record is logged with log level ‘warning’. Process Time is the end-to-end clock time of a request.

Note that it is possible to track only process time or peak memory. It is not required to track both metrics. However, if you set ErrorPeakMemory, you must set WarningPeakMemory. If you set ErrorProcessTimeMs, you must set WarningProcessTimeMs.
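The pairing rule above can be checked before the file is written. The following is a minimal sketch, assuming Python's standard configparser module is acceptable for producing the [Settings 7] section; the function name render_settings is hypothetical and not part of any Qlik tooling.

```python
import configparser
import io

SECTION = "Settings 7"
# Each Error threshold requires its matching Warning threshold
PAIRS = (
    ("ErrorPeakMemory", "WarningPeakMemory"),
    ("ErrorProcessTimeMs", "WarningProcessTimeMs"),
)


def render_settings(thresholds: dict[str, int]) -> str:
    """Return Settings.ini text for the given thresholds, enforcing the pairing rule."""
    for error_key, warning_key in PAIRS:
        if error_key in thresholds and warning_key not in thresholds:
            raise ValueError(f"{error_key} is set, so {warning_key} must be set as well")
    parser = configparser.ConfigParser()
    parser.optionxform = str  # keep the mixed-case key names instead of lowercasing them
    parser[SECTION] = {key: str(value) for key, value in thresholds.items()}
    buffer = io.StringIO()
    parser.write(buffer, space_around_delimiters=False)  # emit key=value without spaces
    return buffer.getvalue()


if __name__ == "__main__":
    # Track peak memory only: 2 GB for errors, 1 GB for warnings
    print(render_settings({
        "ErrorPeakMemory": 2 * 1024**3,
        "WarningPeakMemory": 1024**3,
    }))
```

The returned text can then be saved as Settings.ini on each engine node, followed by the service restart described above.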

Reading the logs

- To familiarize yourself with logging basics, please see help.qlik.com

Note: Currently telemetry is only written to log files. It does not yet leverage the centralized logging to database capabilities.

- Telemetry data is logged to C:\ProgramData\Qlik\Sense\Log\Engine\Trace\<hostname>_QixPerformance_Engine.txt and rolls to the ArchiveLog folder in your ServiceCluster share.

- In addition to the common log fields, fields relevant to telemetry are:

- Level: The logging level threshold the engine operation met.

- ActiveUserId: The User ID of the user performing the operation.

- Method: The engine operation itself. See Important Engine Operations below for more.

- DocId: The ID of the Qlik application.

- ObjectId: For chart objects, the Object ID of the chart object.

- PeakRAM: The maximum RAM an engine operation used.

- NetRAM: The net RAM an engine operation used. For hypercubes that support a chart object, the Net RAM is often lower than Peak RAM as temporary RAM can be used to perform set analysis, intermediate aggregations, and other calculations.

- ProcessTime: The end-to-end clock time for a request including internal engine operations to return the result.

- WorkTime: Effectively the same as ProcessTime excluding internal engine operations to return the result. Will report very slightly shorter time than ProcessTime.

- TraverseTime: Time spent running the inference engine (i.e., the green, white, and grey selection states).

Note: for more info on the common fields found in the logs please see help.qlik.com.

Important Engine Operations

The Method column identifies each engine operation. The operations are too numerous to detail completely. The most relevant methods to investigate are listed below; they are also the most common methods to show up in the logs if a Warning or Error log entry is written.

- Global::OpenApp: Opening an application

- Doc::DoReload, Doc::DoReloadEx: Reloading an application

- Doc::DoSave: Saving an application

- GenericObject::GetLayout: Calculating a hypercube (i.e., chart object)

Comments

- For the best overall representation of the time it takes for an operation to complete, use ProcessTime.

- About ERROR and WARNING log level designations: These designations were used because they conveniently fit into the existing logging and QMC frameworks. A row of telemetry information written out as an error or warning does not mean the engine had a warning or error condition that requires investigation or remedy, unless you are interested in optimizing performance. It is simply a means of reporting on the thresholds set within the engine Settings.ini file, and it provides a means to log relevant information without generating overly verbose log files.
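For a quick look at the log without the dashboard, the fields described above can be ranked by ProcessTime. The following is a minimal sketch that assumes the QixPerformance log is tab-separated with a header row naming the fields (Method, ProcessTime, and so on) and that ProcessTime is numeric; verify both against your own log files. The function name slowest_operations is hypothetical.

```python
import csv
from typing import Iterable


def slowest_operations(lines: Iterable[str], method: str, top: int = 5) -> list[dict[str, str]]:
    """Return up to `top` rows for one engine method, slowest ProcessTime first."""
    reader = csv.DictReader(lines, delimiter="\t")  # assumption: tab-separated with header
    matches = []
    for row in reader:
        if row.get("Method") != method:
            continue
        try:
            float(row["ProcessTime"])  # skip rows without a numeric ProcessTime
        except (KeyError, TypeError, ValueError):
            continue
        matches.append(row)
    matches.sort(key=lambda r: float(r["ProcessTime"]), reverse=True)
    return matches[:top]


# Usage sketch (log_path is a placeholder for your own QixPerformance log file):
# with open(log_path, encoding="utf-8-sig", newline="") as handle:
#     for row in slowest_operations(handle, "GenericObject::GetLayout"):
#         print(row.get("DocId"), row.get("ObjectId"), row["ProcessTime"])
```

Filtering on GenericObject::GetLayout surfaces the chart objects that crossed a threshold; the same call with Doc::DoReload would surface slow reloads.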

Qlik Telemetry Dashboard:

Once the logs mentioned above are created, the Telemetry Dashboard for Qlik Sense can be downloaded and installed to read the log files and analyze the information.

The Telemetry Dashboard provides the ability to capture CPU and RAM utilization of individual chart objects, CPU and RAM utilization of reload tasks, and more. For additional information including installation and demo videos see Telemetry Dashboard - Admin Playbook

The dashboard installer can be downloaded at: https://github.com/eapowertools/qs-telemetry-dashboard/wiki. NOTE: The dashboard itself is not supported by Qlik Support directly; see the Frequently Asked Questions about the dashboard. To report issues with the dashboard, use its "issues" webpage.

1. Right-click the installer file and choose "Run as Administrator". The files will be installed at C:\Program Files\Qlik\Sense

2. Once installed, you will see 2 new tasks, 2 data connections and 1 new app in the QMC.

3. In the QMC, change the ownership of the application to yourself, or to the user you want to open the app with.

4. Click on the 'Tasks' section in the QMC, click once on 'TelemetryDashboard-1-Generate-Metadata', then click 'Start' at the bottom. This task will run, and automatically reload the app upon completion.

5. Use the application from the hub to browse the information by sheets.

-

Exporting and importing Security rules using Qlik-Cli

Scenario:

- Have Qlik-Cli installed and configured (see How to install Qlik-CLI for Qlik Sense on Windows for more insight on how to install and configure Qlik-Cli)

- Create a PowerShell script using the following code as a base:

! Note that the code is provided as is and will need to be modified to suit specific needs. For assistance, please contact the original creators of Qlik-Cli.

Connect-Qlik -computername "QlikSenseServer.company.com"

# This will export the custom security rules and output them as secrules.json

Get-QlikRule -filter "type eq 'Custom' and category eq 'security'" -full -raw | ConvertTo-Json | Out-File secrules.json

# This will import secrules.json into a Sense site

# The ideal use case would be to move the developed security rules from one environment to another, so connecting to another Sense site would likely be needed here

Get-Content -raw .\secrules.json |% {$_ -replace '.*"id":.*','' } | ConvertFrom-Json | Import-QlikObject

Environment:

Qlik Sense Enterprise on Windows, all versions

-

How Many Months Of History Should Be Available In The Operation Monitor App

How to reduce the size of the Operations Monitor App or decide how much history is being stored.

Scenario: There are only 3 months of history/data in the Operations Monitor app, even though there are enough logs in the Archived log folder to provide information for more months.

Scenario 2: There are 3 months of history/data, but less data is required because the traffic volume is too high.

This article explains how the Operations Monitor app can be configured to display more or less than 3 months of history/data.

Note: We do not recommend configuring the Operations Monitor to provide a history longer than 3 months as this amount of data will lead to long loading times and large apps.

Resolution:

- Duplicate the Operations Monitor app. You can do so from the Hub or the Qlik Sense Management Console if you have access to the app.

- Open the Qlik Sense Hub, navigate to Work and open your copy of the Operations Monitor (1)

- Open the Data load editor

- Locate line 24 in the Initialize tab (first tab)

- Line 24 will read: SET monthsOfHistory = 3

- Modify SET monthsOfHistory = 3 to your preferred value. The Operations Monitor will be able to load data as long as you have log files that go that far back.

Example:

"SET monthsOfHistory = 3" -- 3 Months

"SET monthsOfHistory = 6" -- 6 Months

"SET monthsOfHistory = 12" -- 12 Months

- Open a Windows file browser and navigate to: %PROGRAMDATA%\Qlik\Sense\Log (default install folder)

- Remove all “governance” QVDs from this folder. This step is crucial for the changes to work.

- Go to the Qlik Sense Management Console

- Open Apps

- Locate your copy of the Operations Monitor.

- Click publish

- Select the Monitoring Apps stream

- Check the Replace existing app checkbox

- Select the original Operations Monitor

- Click OK

- Go to Tasks and start the Operations Monitor task

- Your app will now show the desired number of months.

Note:

Please note that the reload time for that much data will be rather long.