
Recent Documents

-

Qlik Talend Product: How to Remove /Clean Up Kar files from Talend Runtime Serve...

For better performance of Talend Runtime Server, you can uninstall KAR files to free up storage space.

This article briefly introduces how to uninstall KAR files from Talend Runtime Server.

Prerequisite

The command used is:

kar:uninstall

It uninstalls a KAR file (identified by a name).

Uninstalling means that:

- The features previously installed by the KAR file are uninstalled

- All files previously "populated" by the KAR file are deleted from the KARAF_DATA/system repository

For instance, to uninstall the previously installed my-kar-1.0-SNAPSHOT.kar KAR file:

karaf@root()> kar:uninstall my-kar-1.0-SNAPSHOT

Talend Runtime Server

Run the following Karaf commands to clean KAR files from your repository:

#stop running artifact task

bundle:list | grep -i <artifact-name>

bundle:uninstall <artifact-name | bundle-id>

#clean task kar cache

kar:list | grep -i <artifact-name>

kar:uninstall <artifact-name>

Related Content

For more information about KAR files, please refer to the Apache content:

kar:* commands to manage KAR archives.
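The clean-up sequence above can be sketched as a small helper that prints the four Karaf commands for a given artifact. This is only an illustration: the function name `karaf_cleanup_commands` is hypothetical, and the helper merely builds the command strings shown in this article for pasting into the Karaf console; it does not connect to Talend Runtime.

```python
def karaf_cleanup_commands(artifact_name: str) -> list[str]:
    """Build the Karaf console commands from this article for one artifact.

    Hypothetical helper: it only formats strings, nothing is executed.
    """
    if not artifact_name or any(ch.isspace() for ch in artifact_name):
        raise ValueError("artifact_name must be a non-empty name without whitespace")
    return [
        # Stop the running artifact task
        f"bundle:list | grep -i {artifact_name}",
        f"bundle:uninstall {artifact_name}",
        # Clean the task KAR cache
        f"kar:list | grep -i {artifact_name}",
        f"kar:uninstall {artifact_name}",
    ]


for command in karaf_cleanup_commands("my-kar-1.0-SNAPSHOT"):
    print(command)
```

Review the output of the two list commands before running the uninstall commands, since bundle:uninstall also accepts a bundle ID in place of the name.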

Environment

-

Qlik Talend Cloud: OutOfMemory Error Occurs When Executing a job on Cloud Engine

A particular Job always fails with an OutOfMemory error when run on a Cloud Engine.

Resolution

- Check whether the Job design consumes memory close to the maximum available on a Cloud Engine. One way to check is to monitor memory usage with tools such as: Monitoring the resource consumption on Remote Engine and Showing JVM resource usage during Job or Route execution.

Cloud Engine has a maximum memory usage of 8 GB.

- Check that the VM memory specified for the Job is sufficient. For details, see: Specifying the limits of VM memory for a Job or Route.

- If only one particular Job hits the OutOfMemory error and other Jobs do not, try deleting the Job and re-creating it as a new copy, which may fix the issue.

Cause

- The job may be consuming too much memory.

- There may not be enough VM memory specified for the current job.

- There may be an unknown issue with how the Job was created.

Related Content

If you are looking for the equivalent OutOfMemory error on a Remote Engine, see the following article:

OutOfMemory Error Occurs When Executing a job on Remote Engine

Environment


-

Qlik Talend Cloud: Connection and Network Slow Issues between Talend Cloud and T...

There are connection and network slowness issues between Talend Cloud and Talend Remote Engine.

The following errors are produced in the logs:

org.apache.http.conn.ConnectTimeoutException: Connect to pair.**.cloud.talend.com:443 [pair.**.cloud.talend.com/****,

Retry processing request to {s}-> pair.**.cloud.talend.com:443

org.apache.http.conn.ConnectTimeoutException: Connect to pair.**.cloud.talend.com:443 [pair.**.cloud.talend.com/****,

pair.**.cloud.talend.com/****, pair.**.

Resolution

- Check with your network team whether there were any network issues at the time of occurrence. If you are using a proxy and the logs relate to connection issues to your proxy server, check that there are no issues connecting from the Remote Engine to the proxy server, and from the proxy server to the URL you are connecting to (in this example, Talend Cloud).

- If you see the following error or any other connection errors that relate to problems connecting to pair.ap.cloud.talend.com, please check that you can connect to the service from the remote engine server.

This service is used to communicate the status information for the Remote Engine such as its heartbeats and availability.

org.apache.http.conn.ConnectTimeoutException: Connect to pair.**.cloud.talend.com:443 [pair.**.cloud.talend.com/****,pair.**.cloud.talend.com/****, pair.**.cloud.talend.com/****] failed: Connect timed out

Retrying request to {s}->pair.**.cloud.talend.com:443

- If you see the following error or any other connection errors that relate to problems connecting to https://msg.**.cloud.talend.com:443, please check that you can connect to the service from the remote engine server.

This service is used to communicate about task runs such as receiving, deploying, or undeploying events, and sending information about task run status and metrics.

Failed to connect to [https://msg.**.cloud.talend.com:443] after: 1 attempt(s) with Failed to perform GET on: https://msg.**.cloud.talend.com:443 as response was: Connect to **** [****] failed: Connection refused: connect, continuing to retry.

- Increase the timeout in the following settings. The default value for each parameter is 300 seconds. Open the file <RemoteEngine InstallationFolder>/etc/org.talend.ipaas.rt.deployment.agent.cfg and increase the value of these two parameters from 300

# In seconds

wait.for.connection.parameters.timeout=300

flow.deployment.timeout=300

to 600

# In seconds

wait.for.connection.parameters.timeout=600

flow.deployment.timeout=600

- There may have been a Talend Cloud incident at the time of occurrence that may have caused connection issues. Please check Talend Cloud Status. For further investigation regarding Talend Cloud incidents, you can always contact our technical support team for assistance.
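The timeout change described above can also be scripted. The following is a minimal sketch that rewrites the two parameters in the text of org.talend.ipaas.rt.deployment.agent.cfg; the function name `raise_timeouts` is hypothetical, and the sketch assumes the file uses plain key=value lines as shown in this article. Back up the file before applying anything like this to a real Remote Engine.

```python
TIMEOUT_KEYS = (
    "wait.for.connection.parameters.timeout",
    "flow.deployment.timeout",
)


def raise_timeouts(cfg_text: str, seconds: int = 600) -> str:
    """Return cfg_text with both timeout parameters set to `seconds`.

    Assumes plain `key=value` lines; all other lines are kept unchanged.
    A parameter that is missing from the text is appended at the end.
    """
    updated_lines = []
    seen = set()
    for line in cfg_text.splitlines():
        key = line.split("=", 1)[0].strip()
        if key in TIMEOUT_KEYS:
            updated_lines.append(f"{key}={seconds}")
            seen.add(key)
        else:
            updated_lines.append(line)
    for key in TIMEOUT_KEYS:
        if key not in seen:
            updated_lines.append(f"{key}={seconds}")
    return "\n".join(updated_lines) + "\n"


sample = (
    "# In seconds\n"
    "wait.for.connection.parameters.timeout=300\n"
    "flow.deployment.timeout=300\n"
)
print(raise_timeouts(sample))
```

Working on the file's text rather than on the file itself keeps the change easy to review before it is written back.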

Cause

There are connection issues and network slowness between Talend Cloud and Talend Remote Engine.

Related Content

If you access the Internet via a proxy, you need to add the following URLs to your allowlist.

Adding URLs to your proxy and firewall allowlist

Environment

-

Qlik Talend Cloud: JVM Hanging Errors occur in Wrapper Log for Talend Remote Eng...

JVM hanging errors appear in wrapper.log while Jobs are running on a Talend Remote Engine server:

JVM appears hung: Timed out waiting for signal from JVM.

JVM did not exit on request, terminated

JVM exited in response to signal SIGKILL (9).

Unable to start a JVM

<-- Wrapper Stopped

When CPU usage is high for a period of time, the JVM exits and JVM hanging errors appear in the wrapper log.

This is because, when the JVM does not respond, the wrapper tries to restart the container to recover from the hung state.
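To find out when these hangs occurred, the wrapper log can be scanned for the messages quoted above. The sketch below is illustrative only: the function name `find_hang_lines` is hypothetical, and the signature list contains just the messages shown in this article, so other wrapper messages will not be matched.

```python
HANG_SIGNATURES = (
    "JVM appears hung: Timed out waiting for signal from JVM.",
    "JVM did not exit on request, terminated",
    "JVM exited in response to signal SIGKILL (9).",
    "Unable to start a JVM",
)


def find_hang_lines(log_lines):
    """Return (line_number, text) pairs for lines containing a known hang message."""
    hits = []
    for number, line in enumerate(log_lines, start=1):
        if any(signature in line for signature in HANG_SIGNATURES):
            hits.append((number, line.rstrip("\n")))
    return hits


# Example with in-memory lines; for a real log, pass an open file object instead,
# e.g. `with open("wrapper.log") as handle: find_hang_lines(handle)`.
sample_log = [
    "INFO  | wrapper | Launching a JVM...",
    "ERROR | wrapper | JVM appears hung: Timed out waiting for signal from JVM.",
    "ERROR | wrapper | JVM did not exit on request, terminated",
]
for number, text in find_hang_lines(sample_log):
    print(number, text)
```

The timestamps on the matching lines can then be compared with the CPU and memory monitoring data for the same period.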

Resolution

- Please check that the memory you have allocated to your remote engine is enough to handle the jobs that you run.

In order to allocate memory to your remote engine, please check here: Specifying Java heap size for a Remote Engine service.

To monitor the resource consumption and check whether you are consuming too much memory, please use the following tools for monitoring.

- Even when you check the resource consumption, the data may show normal activity. For further investigation, try the following:

- Check Event Logs: Review the event logs for system messages or events occurring around the time of termination for more context. (For example, for Linux, use commands like journalctl, and for Windows, Windows Event Viewer).

- Monitor System Activity: Use tools to monitor resource usage (For example, for Linux, top, htop, or performance monitor tools like atop, and for Windows, Task Manager or Performance Monitor) to track CPU, memory, and disk usage.

- Investigate External Scripts or Tools: Ensure no external scripts or tools are configured to terminate the JVM under certain conditions.

Cause

- JVM/CPU/Disk usage is very high

- Some scripts or automated system management tasks might be configured to terminate processes under specific conditions

- The OS may terminate a process if the system runs out of resources, such as memory

Environment


-

How Qlik NPrinting consumes resources when connecting to Qlik Sense Enterprise o...

This article outlines how Qlik NPrinting and Qlik Sense Enterprise on Windows consume resources.

Environment

- Qlik NPrinting

- Qlik Sense Enterprise on Windows

Resolution

Qlik NPrinting interacts with Qlik Sense when a metadata reload or a task execution is started (a report preview or an On-Demand request is essentially the same as a task execution).

For metadata reloads, Qlik NPrinting directly opens a connection with the Qlik Sense Server, and the following actions are performed on the Qlik Sense side:

- The Qlik Sense app is opened in the background

- Section access is removed (Qlik NPrinting opens the app as an admin)

- All selections, bookmarks, and reductions are removed

- Qlik Sense sends the metadata to Qlik NPrinting

This includes: all object names and types, all fields and their values, and all variables

- NPrinting collects this information and organizes it in the database

The resource consumption is mainly on the Qlik Sense side. In particular, opening the app requires RAM, and removing selections, bookmarks, and Section Access requires calculations that consume CPU.

When a task is run, Qlik NPrinting connects to the Qlik Sense Server, which performs the following:

- Open the Sense app(s)

- Remove the selections

- Apply the filters to generate the reports

Note: Cycles, Pages, and Levels are essentially filters on each field value

- Export each single table or image requested by each template with the correct selection

This is quite expensive in terms of resources, especially if many filters are present (or Cycles/Levels/Pages) because a filter is essentially a selection on the app and each selection requires calculations and, therefore, CPU usage.

Moreover, Qlik NPrinting is multi-threaded. This means that multiple instances of the same app can be opened in Qlik Sense at the same time. This enables Qlik NPrinting to execute the requests of filter applications and table exports in parallel. On the other hand, having many applications opened at the same time represents a resource cost for Qlik Sense.

The maximum number of apps that can be opened at the same time equals the number of logical cores on the Qlik NPrinting Engine machine. You have to sum them up if more engines are installed.

The situation is more complex if Section Access is used. In this case, Qlik NPrinting cannot apply all the requests on the apps in the same session. The app must be closed and re-opened for each Qlik NPrinting user, with a high consumption of RAM and CPU on the Sense side. This also prevents many of the Qlik NPrinting report-generation optimizations from being applied.

On the Qlik NPrinting side, some resources are used by the Engine service to keep in memory images, tables, and other values sent by Sense. NPrinting then needs to place these objects correctly on the template. Also, this action is performed by the Qlik NPrinting Engine service and it is more resource-consuming on the Qlik NPrinting side. Generally, we do not expect a problematic consumption in a supported NPrinting environment, unless very heavy and complex reports are generated or many tasks are run at the same time. The main resource request is on the Qlik Sense side.

Frequently Asked Questions

Does Qlik NPrinting respect the Qlik Sense load balancing rules?

Qlik NPrinting uses the same proxy to connect as an end user. Load balancing rules will be respected.

-

Qlik Talend Cloud: OutOfMemory Error Occurs When Executing a job on Talend Remot...

An out-of-memory error is found in Talend Management Console or in the Remote Engine logs when executing a Job on a Remote Engine.

Resolution

- Check that your Talend Studio job has enough VM memory to execute your job.

For information on how to specify the memory for your job, please check here: Specifying the limits of VM memory for a Job or Route.

- Check if you can change your job design in a way that will not consume large amounts of memory.

- Check that your job server (remote engine in this example) has enough memory allocated to execute the task.

For information on how to set the memory in your remote engine, please check here: Specifying Java heap size for a Remote Engine service

- Monitor the memory consumption by using a monitoring tool. Please find information regarding Talend's monitoring tools from the following:

Cause

The job is consuming a lot of memory, or the JVM memory allocated to the job or to the remote engine is not enough to execute it.

Related Content

If you are looking for how to allocate more memory, or for OutOfMemory exceptions on the Talend Studio side, please refer to these articles

Environment


-

Qlik Talend Product Q&A: Multiple IP addresses show up for Talend Cloud URLs

Question

Why are there multiple IP addresses showing up when using the nslookup command for Talend Cloud URLs like "api.ap.cloud.talend.com"?

nslookup api.ap.cloud.talend.com

Multiple IP addresses show up because Round-Robin DNS is being used. It ensures high availability and distributes traffic among multiple servers.

Related Content

What is Round-Robin DNS? Optimize Server Load
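As a rough illustration of the behaviour described above, the lookup that nslookup performs can be reproduced with Python's standard `socket.getaddrinfo`. The function name `resolve_ipv4_addresses` is hypothetical, and the sketch only lists the IPv4 addresses the local resolver returns; the addresses and their order depend on your DNS resolver and may change between calls.

```python
import socket


def resolve_ipv4_addresses(hostname: str, port: int = 443) -> list[str]:
    """Return the sorted, de-duplicated IPv4 addresses the resolver gives for hostname."""
    results = socket.getaddrinfo(
        hostname, port, family=socket.AF_INET, type=socket.SOCK_STREAM
    )
    # Each result is (family, type, proto, canonname, sockaddr); sockaddr is (ip, port).
    return sorted({sockaddr[0] for *_, sockaddr in results})


# Example call (requires network access and working DNS):
# print(resolve_ipv4_addresses("api.ap.cloud.talend.com"))
```

With Round-Robin DNS, a name of this kind is expected to resolve to more than one address, which is what the nslookup output shows.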

Environment

-

File Access and Process Monitoring: How to find locked files and the processes l...

There are multiple ways to find out exactly which process is locking a file and preventing QlikView or Qlik Sense from carrying out a specific operation. This article covers one possible option on Windows Server operating systems: Process Monitor.

For other options and third-party implementations, please contact your Windows administrator.

Common causes for locks are:

- A QVB (Reload Engine) that did not terminate correctly

- Qlik Sense Engine service holding file in error

- The QVS.exe (QlikView Server Service) holding the file in error

- AntiVirus Software during the scanning process

- Backup Software locking the file during the backup process

- Scheduled maintenance tools (Windows or Third Party)

Using the Process Monitor

- Download Windows Process Monitor and unzip it to your server (Desktop is fine, the location doesn't matter). Link: http://technet.microsoft.com/en-us/sysinternals/bb896645.aspx

- Pause capture by clicking the Capture icon

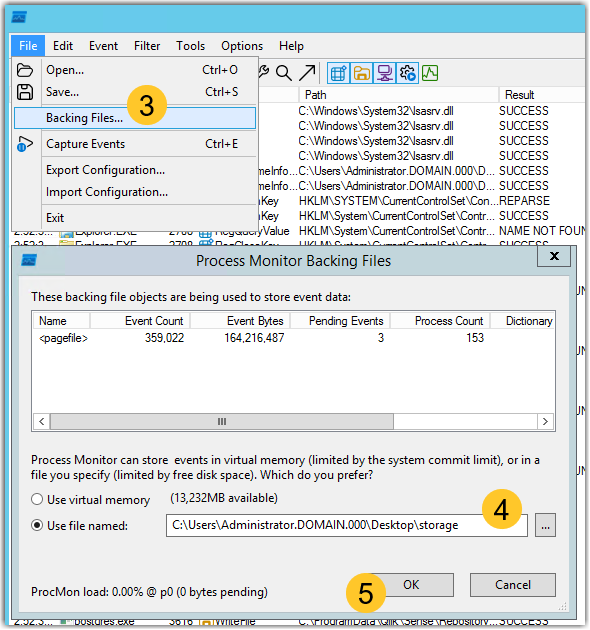

- Navigate to File > Backing Files...

- Select Use file named: and choose the storage path for the .pml file which will be created by the capture

- Click OK

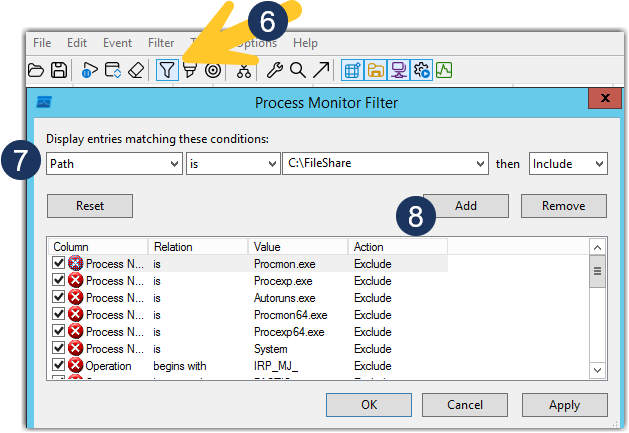

- Click Filter

- Add the File Path to the files you need to monitor

Example:

Path is C:\FileShare then Include

- Click Add

If you need to exclude files, use the same method, pointing the filter at specific files or folders and choosing Exclude.

- Click OK to close the Process Monitor Filter dialogue

- Resume the Capture

- Verify that your filter is correct, then click Filter > Drop Filtered Events to avoid creating a too-large file

- Reproduce the issue you are looking to troubleshoot

If the issue cannot be replicated on demand and Procmon needs to run until the issue occurs, "Backing files" and "History Depth" may need to be configured to store the capture in multiple files with limited size.

- Stop Capture once you have captured the issue

Note: Do not close Procmon as a means to stop the capture, as this may inadvertently remove the .pml files.

- You can now open the saved .pml file and review it to identify the processes accessing the files you are monitoring.

-

Qlik Talend Cloud: Tasks Failed to Execute Due to "No engines were available to...

The following error is shown for tasks that were hanging in the queue:

No engines were available to process this task. You can try to run the task manually now. Run this task during a time when your processors are available.

Resolution

If you are running too many tasks in parallel and tasks are timing out and failing, please consider the following.

- Set max.deployed.flows to a higher value.

This value should be chosen based on how much memory you can use for task executions.

This is the number of tasks executed in parallel.

For more information on how to set this value, please check here: Running tasks in parallel on Remote Engine.

- If it does not matter how long it takes for all tasks to complete, then consider having fewer job executions in parallel.

For example: If you have 80 plans running at the same time, consider creating 3 plans running at the same time with all the jobs included.

- Consider using remote engines in a cluster, so you have more remote engines that can be used to execute the tasks.

For more information on clusters, please check here: Creating Remote Engine clusters.

- Increase the amount of memory you have in the environment where the remote engine is placed, so you can allocate more memory to the remote engine.

- Adjust the time when tasks execute, so that timeouts do not occur.

This may be the only solution if you have a very limited amount of memory to be used.

Cause

Too many tasks were running at the same time, and the tasks waiting in the queue timed out and failed to execute.

Related Content

On a different note, please also review the steps to clean up the task logs on the Remote Engine

automatic-task-log-cleanup

Environment


-

How to disable 'Base memory size' info and 'StaticByteSize' calls in Qlik Sens E...

After upgrading to Qlik Sense Enterprise on Windows May 2023 (or later), the Qlik Sense Repository Service may cause CPU usage spikes on the central node. In addition, the central Engine node may show an increased average RAM consumption while a high volume of reloads is being executed.

The Qlik Sense System_Repository log file will read:

"API call to Engine service was successful: The retrieved 'StaticByteSize' value for app 'App-GUID' is: 'Size in bytes' bytes."

Default location of log: C:\ProgramData\Qlik\Sense\Log\Repository\Trace\[Server_Name]_System_Repository.txt

This activity is associated with the ability to see the Base memory size in the Qlik Sense Enterprise Management Console. See Show base memory size of apps in QMC.

Resolution

The feature to see Base memory size can be disabled. This may require an upgrade and will require downtime as configuration files need to be changed.

Take a backup of your environment before proceeding.

- Verify the version you are using and verify that you are at least on one of the versions (and patches) listed:

- May 2023 Patch 13

- August 2023 Patch 10

- November 2023 Patch 4

- February 2024 IR

- Back up the following files:

- C:\Program Files\Qlik\Sense\CapabilityService\capabilities.json

- C:\Program Files\Qlik\Sense\Repository\Repository.exe.config

*where C:\Program Files can be replaced by your custom installation path.

- Stop the Qlik Sense Repository Service and the Qlik Sense Dispatcher Service

- Open and edit the capabilities.json with a text editor (using elevated permissions)

- Open C:\Program Files\Qlik\Sense\CapabilityService\capabilities.json

- Locate: "enabled": true,"flag": "QMCShowAppSize"

- Change this to: "enabled": false,"flag": "QMCShowAppSize"

- Save the file

- Open and edit the repository.exe.config with a text editor (using elevated permissions)

- Open C:\Program Files\Qlik\Sense\Repository\Repository.exe.config

- Locate: </appSettings>

- Before the </appSettings> closing statement, add:

<add key="AppStaticByteSizeUpdate.Enable" value="false"/>

- Save

- Start all Qlik Sense services

- Verify you can access the Management Console

If any issues occur, revert the changes by restoring the backed-up files, then open a ticket with Support that mentions this article and includes the changed versions of repository.exe.config and capabilities.json.
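The capabilities.json edit described in the steps above can be sketched in code. The overall layout of capabilities.json is not shown in this article, so the sketch makes no assumption about it beyond the pair of keys quoted in the steps ("enabled" and "flag"): it walks the whole JSON document and switches off every entry whose "flag" is "QMCShowAppSize". The function name `disable_flag` and the `"flags"` wrapper key in the sample are hypothetical. Stop the services and back up the file first, exactly as in the manual procedure.

```python
import json


def disable_flag(node, flag_name="QMCShowAppSize"):
    """Set "enabled" to False on every object whose "flag" equals flag_name.

    Walks nested dicts and lists in place and returns how many entries changed.
    """
    changed = 0
    if isinstance(node, dict):
        if node.get("flag") == flag_name and node.get("enabled") is not False:
            node["enabled"] = False
            changed += 1
        for value in node.values():
            changed += disable_flag(value, flag_name)
    elif isinstance(node, list):
        for item in node:
            changed += disable_flag(item, flag_name)
    return changed


# Hypothetical sample; the real file may be structured differently.
sample = json.loads(
    '{"flags": [{"enabled": true, "flag": "QMCShowAppSize"},'
    ' {"enabled": true, "flag": "SomethingElse"}]}'
)
print(disable_flag(sample), json.dumps(sample, indent=2))
```

For a real file, load it with `json.load`, call `disable_flag`, and write the result back only after checking that the reported number of changes is what you expect.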

Related Content

Show base memory size of apps in QMC

Internal Investigation:

QB-22795

QB-24301

Environment

Qlik Sense Enterprise on Windows May 2023, August 2023, November 2023, February 2024.


-

How much RAM is consumed per application?

QlikView and Qlik Sense files are like ZIP files in that they are highly compressed. An across-the-board estimate of how much RAM is needed to load apps into memory (the footprint) is therefore difficult to provide. The expected memory usage of an app can also depend on how many users access the app and how heavily they use it.

Environment:

- QlikView, all versions

- Qlik Sense Enterprise on Windows, all versions

- Qlik Cloud

An estimate of the RAM needed per app can be built on the below, but for accuracy always test by loading the app into memory and using the Qlik Scalability Tools to obtain a baseline of memory usage for each app as it is accessed by the foreseeable number of users.

Telemetry logging, which allows for using the Telemetry Dashboard, is also a suitable tool.

Since the release of the February 2019 version, Sense System Performance Analyzer monitoring App can be used to determine app footprint as well. Since the release of the June 2018 version, App Metadata Analyzer monitoring App can also be used. However, these last three may not provide the same data as the Scalability Tools.

An alternative is to use a test environment and simply observe RAM usage increases as apps are first opened (footprint), and as number of users accessing the app increase, then as number of operations are performed within the app. The test environment results for observed memory and CPU usage can be recorded as a baseline for the particular app in production, which assists in determining future sizing/scaling needs.

Need direct assistance in evaluating your Qlik Sense and QlikView apps? Qlik's Professional Services are available to assist you.

RAMinitial = SizeOnDisk × FileSizeMultiplier ; this is the initial RAM footprint for any application

FileSizeMultiplier: range between 2-10 (this is a compression* ratio depending on the data contained in the app)

*Compression is based upon the data, and how much we can compress depends upon the homogeneity of the data. The more homogeneous, the more compression Qlik can achieve.

More information on one way of optimizing a document can be found on the Qlik Design Blog: Symbol Tables and Bit-Stuffed Pointers

RAMperUser = RAMinitial × userRAMratio ; this is the RAM per each incremental user

userRAMratio: range between 1% - 10%

Total RAM used per app:

TotalRAM = (RAMperUser × Number of users) + RAMinitial
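The three formulas above can be combined into one small function. This is a sketch for quick estimates only; the function and parameter names are illustrative, and the result is no substitute for measuring the app under load as recommended earlier.

```python
def estimate_total_ram_gb(size_on_disk_gb, file_size_multiplier, user_ram_ratio, number_of_users):
    """Estimate total RAM (GB) for one app using the formulas in this article.

    file_size_multiplier: typically between 2 and 10
    user_ram_ratio: fraction per additional user, typically 0.01 to 0.10
    """
    ram_initial = size_on_disk_gb * file_size_multiplier   # RAMinitial
    ram_per_user = ram_initial * user_ram_ratio            # RAMperUser
    return ram_per_user * number_of_users + ram_initial    # TotalRAM


# A 1 GB app, multiplier 6, 6% per user, 30 users
print(round(estimate_total_ram_gb(1, 6, 0.06, 30), 2))
```

Running the function with the low and high ends of both ranges gives a band rather than a single figure, which is usually a more honest input for sizing.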

Example:

SizeOnDisk = 1 GB

FileSizeMultiplier = 6 (range is from 2 to 10). This is an example value. A value can be obtained by reviewing telemetry (how far does the app expand?) or by opening the app in QlikView or Qlik Sense Desktop and measuring how large it grows in memory.

RAMinitial = 1 × 6 = 6 GB

If we take a RAM ratio per user of 6%, then userRAMratio is 0.06 (range is from 1% to 10%)

RAMperUser = 6 × 0.06 = 0.36 GB

Then the RAM required for 30 users is:

TotalRAM = (RAMperUser × No. users) + RAMinitial

TotalRAM = (0.36 × 30) + 6

TotalRAM = 16.8 GB

Related Content:

-

LogAnalysis App: The Qlik Sense app for troubleshooting Qlik Sense Enterprise on...

It is finally here: The first public iteration of the Log Analysis app. Built with love by Customer First and Support.

"With great power comes great responsibility."

Before you get started, a few notes from the author(s):

- It is a work in progress. Since it is primarily used by Support Engineers and other technical staff, usability is not the first priority. Don't judge.

- It is not a Monitoring app. It will scan through every single log file that matches the script criteria and this may be very intensive in a production scenario. The process may also take several hours, depending on how much historical data you load in. Make sure you have enough RAM 🙂

- Not optimised, still very powerful. Feel free to make it faster for your usecase.

- Do not trust chart labels; look at the math/expression if unsure. Most of the chart titles make sense, but some of them won't. This will improve in the future.

- MOD IT! If it doesn't do something you need, build it, then tell us about it! We can add it in.

- Send us your feedback/scenarios!

Chapters:

- 01:23 - Log Collector

- 02:28 - Qlik Sense Services

- 04:17 - How to load data into the app

- 05:42 - Troubleshooting poor response times

- 08:03 - Repository Service Log Level

- 08:35 - Transactions sheet

- 12:44 - Troubleshooting Engine crashes

- 14:00 - Engine Log Level

- 14:47 - QIX Performance sheets

- 17:50 - General Log Investigation

- 20:28 - Where to download the app

- 20:58 - Q&A: Can you see a log message timeline?

- 21:38 - Q&A: Is this app supported?

- 21:51 - Q&A: What apps are there for Cloud?

- 22:25 - Q&A: Are logs collected from all nodes?

- 22:45 - Q&A: Where is the latest version?

- 23:12 - Q&A: Are there NPrinting templates?

- 23:40 - Q&A: Where to download Qlik Sense Desktop?

- 24:20 - Q&A: Are log from Archived folder collected?

- 25:53 - Q&A: User app activity logging?

- 26:07 - Q&A: How to lower log file size?

- 26:42 - Q&A: How does the QRS communicate?

- 28:14 - Q&A: Can this identify a problem chart?

- 28:52 - Q&A: Will this app be in-product?

- 29:28 - Q&A: Do you have to use Desktop?

Environment

Qlik Sense Enterprise on Windows (all modern versions post-Nov 2019)

How to use the app:

- Go to the QMC and download a LogCollector archive or grab one with the LogCollector tool

- Unzip the archive in a location visible to your user profile

- Download the attached QVF file

- Import/open it in Qlik Sense

- Go to "Data Load Editor" and edit the existing "Logs" folder connection, and point to the extracted Log Collector archive path

- If you are using a Qlik Sense server, remember to change the Data Connection name back to default "Logs". Editing via Hub will add your username to the data connection when saved.

- Go to the "Initialize" script section and configure:

- Your desired date range or days to load

- Whether you want the data stored in a QVD

- Which Service logs to load (Repository, Engine, Proxy and Scheduler services are built-in right now, adding other Qlik Sense Enterprise services may cause data load errors).

- LOAD the data!

My workflow:

- I'm looking for a specific point in time where a problem was registered

- I use the time-based bar charts to find problem areas, get a general sense of workload over time

- I use the same time-based charts to narrow in on the problem timestamp

- Use the different dimensions to zoom in and out of time periods, down to a per-call granularity

- Log Details sheets to inspect activity between services and filter until the failure/error is captured

- Create and customise new charts to reveal interesting data points

- Bookmarks for everything!

Notable Sheets & requirements:

- Anything "Thread"-related for analysing Repository Service API call performance, which touches all aspects of the user and governance experience

- Requirement: Repository Trace Performance logs in DEBUG level. Otherwise, some objects may be empty or broken.

- Commands: great for visualizing Repository operations and trends between objects, users, and requests

- Transactions: Repository Service API call performance analysis.

- Requirement: Repository Trace Performance logs in DEBUG level. Otherwise, some objects may be empty or broken.

- Task Transactions: very powerful task scheduling analysis with time-based filters for exclusion.

- Log Details sheets: excellent filtering and searching through massive amounts of logs.

- Repo + Engine data: resource consumption and Thread charts for Repository and Engine services, great for correlating workloads.

*It is best used in an isolated environment or via Qlik Sense Desktop. It can be very RAM and CPU intensive.

The information in this article is provided as-is and to be used at own discretion. Depending on tool(s) used, customization(s), and/or other factors ongoing support on the solution below may not be provided by Qlik Support.

Related Content

Optimizing Performance for Qlik Sense Enterprise - Qlik Community - 1858594

-

Recommended practice on configuration for Qlik Sense

This article provides a list of the best practices for Qlik Sense configuration. It is worth implementing each item, especially for a large environment so that your database can handle the volume of requests coming from all its connected nodes.

Max Connections

For basic information, see Max Connections.

Specifies the maximum number of concurrent connections (max_connections) to the database. The default value for a single server is 100.

In a multi-node environment, this should be adjusted to the sum of all repository connection pools + 20. By default, this value is 110 per node.

Assuming two nodes and assuming the default value of 110 per node, the value would be 240.

- Stop all of the Qlik Sense services

- Open C:\ProgramData\Qlik\Sense\Repository\PostgreSQL\X.X\postgresql.conf

- Set max_connections = 110

- Save the file

- Restart all of the Qlik Sense Services

How to determine the Database Max Pool

The value of 110 above is a default example. You can further refine the value.

The connection pool for the Qlik Sense Repository is always based on the core count of the machine. To date, our advice is to take the core count of your machine and multiply it by five. This will be your max connection pool for the Repository Service for that node.

This should be a factor of CPU cores multiplied by five.

If 90 is higher than that result, leave 90 in place. Never decrease it.
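As a minimal sketch of this rule, assuming the pool size is simply the larger of 90 and five times the core count, the calculation could look like the following. The function and constant names are hypothetical.

```python
POOL_FLOOR = 90  # the article says never to go below this value


def repository_max_pool(core_count, floor=POOL_FLOOR):
    """Return cores * 5, but never less than the floor of 90."""
    return max(floor, core_count * 5)


# An 8-core node stays at 90, a 24-core node is raised to 120.
for cores in (8, 24):
    print(cores, repository_max_pool(cores))
```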

For more information about Database Max Pool Size Connection, see https://wiki.postgresql.org/wiki/Number_Of_Database_Connections

Related Content:

Optimizing Performance for Qlik Sense Enterprise

PostgreSQL: postgresql.conf and pg_hba.conf explained

Database connection max pool reached in Qlik Sense Enterprise on Windows

-

Qlik Talend Product: tMssql commit (insert, update) operation block other read (...

Multiple tasks hung for hours on read (SELECT) queries because commit operations against the same table in a SQL Server (MSSQL) database were hanging. The tasks had to be terminated to release the blockage.

Cause

DBA's perspective

The commit query was pending on a customer business table, causing blocking issues as other concurrent read queries on the same table were held up by the blocking commit.

Job design perspective

The audit table-related connection did not have the auto-commit option enabled, leading to long-running commit operations.

TMC task scheduling

Execution triggers were set too close together, causing concurrency issues where multiple tasks attempted to access the same table simultaneously, leading to resource contention.

Resolution

Job design optimizations

- Ensure the "AUTO COMMIT" option is enabled for the shared database connection used during audit write operations. This ensures commit operations complete without blocking other tasks.

- Add the WITH (NOLOCK) hint to all select queries on the audit table to prevent blocking due to read locks. This operates similarly to the READ UNCOMMITTED isolation level.

TMC scheduling optimization

Stagger task execution times so that the tasks run sequentially, reducing concurrency and avoiding race conditions when accessing the same audit table.

Database-side optimization

Set a lock timeout on the database side to prevent tasks from being held for prolonged periods by locks: SET LOCK_TIMEOUT timeout_period.
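To make the two SQL-level suggestions concrete, here is a small Python sketch that only builds the statement text. The table name dbo.audit_log and the helper names are hypothetical, and the 5000 ms timeout is an arbitrary illustration. In T-SQL, the timeout_period of SET LOCK_TIMEOUT is given in milliseconds, and -1 means wait indefinitely.

```python
def nolock_select(table, columns="*"):
    """Build a SELECT that reads the table with the NOLOCK table hint."""
    return f"SELECT {columns} FROM {table} WITH (NOLOCK);"


def lock_timeout_statement(timeout_ms):
    """Build a SET LOCK_TIMEOUT statement (milliseconds, -1 = no timeout)."""
    if timeout_ms < -1:
        raise ValueError("timeout_ms must be -1 or a non-negative number")
    return f"SET LOCK_TIMEOUT {timeout_ms};"


# Hypothetical audit table and a 5-second lock timeout.
print(lock_timeout_statement(5000))
print(nolock_select("dbo.audit_log", "task_id, status"))
```

Keep in mind that NOLOCK reads can return uncommitted data, so it is best limited to queries such as audit lookups where that is acceptable.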

Related Content

SQL Server table hints - WITH (NOLOCK) best practices (sqlshack.com)

Table Hints (Transact-SQL) - SQL Server | Microsoft Learn

Environment

-

Troubleshooting Qlik Replicate latency issues using the log files

For general advice on how to troubleshoot Qlik Replicate latency issues, see Troubleshooting Qlik Replicate Latency and Performance Issues.

If your task shows latency issues, one of the first things to do is to set the PERFORMANCE logging component to Trace, run the task you identified for five to ten minutes, and review the resulting task log.

We advise you to:

- Open the task log in a text editor (such as Notepad++),

- then search source latency

- and choose Find all in current document.

This will list all available latency information. We can now identify a trend.

Remember, Target latency = Source latency + Handling latency.
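If the task log is large, the same search can be scripted. The following is a minimal sketch that assumes the PERFORMANCE lines have the format shown in this article. The regular expression and the function name are illustrative and are not part of Qlik Replicate.

```python
import re

# Matches the three latency figures in a PERFORMANCE trace line.
LATENCY_RE = re.compile(
    r"Source latency (?P<source>\d+(?:\.\d+)?) seconds, "
    r"Target latency (?P<target>\d+(?:\.\d+)?) seconds, "
    r"Handling latency (?P<handling>\d+(?:\.\d+)?) seconds"
)


def parse_latency(line):
    """Return the latencies as floats, or None if the line does not match."""
    match = LATENCY_RE.search(line)
    if match is None:
        return None
    return {name: float(value) for name, value in match.groupdict().items()}


sample = (
    "[PERFORMANCE ]T: Source latency 7690.12 seconds, "
    "Target latency 7693.12 seconds, Handling latency 3.00 seconds "
    "(replicationtask.c:3793)"
)
row = parse_latency(sample)
print(row)
# Sanity check of the relation: target = source + handling.
print(abs(row["target"] - (row["source"] + row["handling"])) < 0.01)
```

Applying parse_latency to every line of the task log and keeping the non-None results gives the list from which a trend can be read.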

No latency

[PERFORMANCE ]T: Source latency 0.00 seconds, Target latency 0.00 seconds, Handling latency 0.00 seconds (replicationtask.c:3703)

The source, target, and handling latency are all at 0 seconds.

Source latency

[PERFORMANCE ]T: Source latency 7634.89 seconds, Target latency 7634.89 seconds, Handling latency 0.00 seconds (replicationtask.c:3793)

[PERFORMANCE ]T: Source latency 7663.00 seconds, Target latency 7663.00 seconds, Handling latency 0.00 seconds (replicationtask.c:3793)

[PERFORMANCE ]T: Source latency 7690.12 seconds, Target latency 7693.12 seconds, Handling latency 3.00 seconds (replicationtask.c:3793)

[PERFORMANCE ]T: Source latency 7710.25 seconds, Target latency 7723.25 seconds, Handling latency 13.00 seconds (replicationtask.c:3793)

The source latency is higher than the handling latency. The key point is to look at the handling latency: it must be lower than the source latency.

Cause:

- Source database busy

- Lack of network bandwidth to the source

- There are a lot of CDC changes to capture (the task has stopped for some time and only just resumed)

- There are a lot of CDC changes to capture (the source database has maintenance or a sudden influx of DML operations occurred)

If the source latency decreases during your monitoring, it is a good sign that the latency will recover; if it increases, review the causes mentioned above and resolve any outstanding source issues. You will want to consider reloading the task.

Target latency

[PERFORMANCE ]T: Source latency 2.05 seconds, Target latency 7116.05 seconds, Handling latency 7114.00 seconds (replicationtask.c:3793)

[PERFORMANCE ]T: Source latency 2.77 seconds, Target latency 7150.77 seconds, Handling latency 7148.00 seconds (replicationtask.c:3793)

[PERFORMANCE ]T: Source latency 2.16 seconds, Target latency 7182.16 seconds, Handling latency 7180.00 seconds (replicationtask.c:3793)

The target latency is higher than the source latency.

Cause:

- Target is busy

- Lack of network bandwidth to the target

- A bottleneck in the target database computing power, especially for cloud endpoints

If the target latency continues to increase, consider reloading the task.
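The comparison described in the two sections above can be expressed as a small helper. This is a sketch under the assumption that comparing source and handling latency is enough for a first triage. It cannot tell target latency from handling latency, and the function name and return labels are hypothetical.

```python
def classify_latency(source, target, handling):
    """Give a first-pass label for one PERFORMANCE sample (values in seconds)."""
    if source == 0 and target == 0 and handling == 0:
        return "none"
    if source > handling:
        # Source latency dominates: look at the source database and network.
        return "source"
    # Handling dominates: either the target or the handling side is slow.
    return "target_or_handling"


# Values taken from the log excerpts in this article.
print(classify_latency(0.00, 0.00, 0.00))
print(classify_latency(7634.89, 7634.89, 0.00))
print(classify_latency(2.05, 7116.05, 7114.00))
```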

Handling latency

Identifying whether or not you are looking at handling latency or target latency can be tricky. When the task has target latency, the queue is blocked, so the handling latency will automatically be higher as well (remember: Target latency = Source latency + Handling latency).

The key point to decide if it is handling latency is to check if there are a lot of swap files saved in the sorter folder inside the task folder of the Qlik Replicate server.

In addition, the task log may show that, when the task is resumed, the handling latency increases dramatically from 0 seconds (or a low number) to a much higher value in a very short time. This can then be clearly identified as handling latency:

2023-05-10T08:21:02:537595 [PERFORMANCE ]T: Source latency 5.54 seconds, Target latency 5.54 seconds, Handling latency 0.00 seconds (replicationtask.c:3788)

2023-05-10T08:21:32:610230 [PERFORMANCE ]T: Source latency 4.61 seconds, Target latency 55363.61 seconds, Handling latency 55359.00 seconds (replicationtask.c:3788)

This log shows handling latency increased from 0 seconds to 55359 seconds after only 30 seconds of a task's runtime. This is because Qlik Replicate will read all the swap files into memory when the task is resumed. In this situation, you need to reload the task or resume the task from a timestamp or stream position.
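A jump like this can be detected automatically by comparing the growth in handling latency with the time that elapsed between two log lines. The sketch below assumes the timestamp format shown in the excerpt above. The factor of 10 is an arbitrary threshold chosen for illustration, not a Qlik recommendation.

```python
from datetime import datetime

# Timestamp layout used in the log excerpt (colon before the microseconds).
TS_FORMAT = "%Y-%m-%dT%H:%M:%S:%f"


def is_handling_jump(prev_ts, prev_handling, curr_ts, curr_handling, factor=10.0):
    """Return True if handling latency grew far faster than wall-clock time."""
    elapsed = (
        datetime.strptime(curr_ts, TS_FORMAT) - datetime.strptime(prev_ts, TS_FORMAT)
    ).total_seconds()
    if elapsed <= 0:
        return False
    return (curr_handling - prev_handling) > factor * elapsed


# The two samples from the excerpt above, about 30 seconds apart.
print(
    is_handling_jump(
        "2023-05-10T08:21:02:537595", 0.00,
        "2023-05-10T08:21:32:610230", 55359.00,
    )
)
```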

Related Content

Environment

- Qlik Replicate

-

Qlik Sense Connection Lost errors and App open delayed due to qrsData WebSocket ...

Users experience intermittent errors where the app load is stuck in initializing for two minutes before advancing, and the Hub displays the following error:

Connection lost. Make sure that Qlik Sense is running properly. If your session has timed out due to inactivity, refresh to continue working.

When this issue occurs, the behavior is seen by all users, and a restart of the Qlik Sense Repository services restores normal operation (though in some deployments only temporarily).

A HAR file or review of the developer tools of the Hub will show the Hub attempting to establish a connection to the qrsData WebSocket and then failing with the error message on the Hub and app load resuming once the WebSocket fails.

Additionally, if the Trace > Audit logs for the Proxy service are set to Debug, the following error will be seen:

Connection <Connection_ID> has been transferred to a streaming state to send a single error message <client_IP_address> <host>:4239

Port 4239 is the port used by the qrsData WebSocket.

Environment

- Qlik Sense Enterprise on Windows

Cause

This behavior was discovered in environments running the Tenable penetration test against the Qlik Sense servers, although this could be caused by other penetration testing software or other code that meets the conditions to cause the behavior.

The error is caused by a combination of timing and error handling in which a large number of bad requests are made without certificates, such that the connection is closed before Qlik Sense finishes validating the request. In this scenario, Qlik Sense does not handle the error gracefully and requires a restart of the Repository service to recover.

The error condition does not come up under normal operations because of how quickly the validation runs, but the rapid requests from a penetration test can lead to the error condition described.

Qlik will release a fix in a future patch that will allow Qlik Sense to handle this scenario gracefully.

Workaround:

Unless specifically targeting Qlik Sense (including maintenance where applicable), exclude Qlik Sense from penetration tests.

An alternative is a scheduled restart of the Qlik Sense services following the completion of the penetration test.

Fix Version:

- May 2024 Patch 6

- February 2024 Patch 10

- November 2023 Patch 13

- August 2023 Patch 15

- May 2023 Patch 17

Internal Investigation ID(s):

QB-29033

Related Content

-

Qlik Sense Reload Jobs Stuck as Queued

Environment

- Qlik Sense On Windows

- All supported versions

Issue Reported

You have been running Qlik Sense normally for quite some time. Over time you have accumulated an abundance of reload tasks configured in your Qlik Sense QMC.

Recently however, you have noticed that Qlik Sense QMC reload tasks are in the following state:

- Running reload jobs never complete and are stuck in a 'started' triggered state

- Remaining reload jobs are in a 'queued' status

- The only way to get reload tasks completed is to restart the Qlik Sense services

Resolution

To resolve the issue, it is recommended to add an additional scheduler node (or nodes) in order to manage the ever increasing number of reload tasks in the affected Qlik Sense environment.

As time goes on, add additional scheduler nodes in proportion to the increasing number of reload tasks added/deployed in your environment.

Cause

The existing Qlik Sense scheduler nodes cannot manage the additional burden placed upon them by the ever-increasing number of reload tasks.

Related Content

Concurrent Reload Settings in Qlik Sense Enterprise

Internal Investigation ID

QB-20013

-

Qlik Replicate: Task Latency estimates fluctuating with big differences

In Qlik Replicate 2023.5.0.284, source and target latency can be miscalculated, which leads to latency estimates that fluctuate with big differences. This behavior is resolved in a new patch.

2024-06-11T09:04:24 [PERFORMANCE ]T: Source latency 184.42 seconds, Target latency 12256.62 seconds, Handling latency 12072.21 seconds (replicationtask.c:3789)

2024-06-11T09:04:54 [PERFORMANCE ]T: Source latency 214.42 seconds, Target latency 214.42 seconds, Handling latency 0.00 seconds (replicationtask.c:3789)

2024-06-11T09:09:24 [PERFORMANCE ]T: Source latency 484.43 seconds, Target latency 12480.20 seconds, Handling latency 11995.77 seconds (replicationtask.c:3789)

Resolution

This bug has been resolved in Qlik Replicate 2023.11 Service Pack 04 and in the Qlik Replicate 2024.05 General Availability release.

Internal Investigation ID(s)

QB-27442

RECOB-7562

Environment

- Qlik Replicate 2023.5.0.284

-

Reloads with Qlik Data Gateway slow down or fail after 3 hours

Reloads with the Qlik Data Gateway begin to slow randomly or sometimes run for three hours before automatically aborting.

The same reloads usually run faster and complete in the expected time when relaunched manually or automatically.

The logs do not show specific error messages.

Resolution

We recommend two actions to resolve the problem.

The first is to activate process isolation to reduce the number of reloads running at the same time. Please follow Extending the timeout for load requests.

It is possible to start with a value of 10 for the ODBC|SAPBW|SAPSQL_MAX_PROCESS_COUNT parameter and adjust it after some tests.

The second action is to add the "DISCONNECT" command after every query and to start every new query with a "LIB CONNECT".

This will force the closure and re-creation of the connection every time it is needed.

More information about the DISCONNECT statement can be found in the documentation: Disconnect.

We always recommend keeping Data Gateway on the latest version available.

Cause

Several different causes can lead to this intermittent problem.

In many cases, the system can't handle multiple connections efficiently and this can lead to severe slowness in the data connection. Activating a Process Isolation will help to avoid this.

It is also possible that there is a delay between the connection opening and the query.

A connection for a query can be opened at the beginning of a reload, then kept open for a while and recalled later for another table load in the script.

The connection may be dropped while it is not in use. This can happen if another connection to the same location is called by a concurrent reload or if a timeout automatically closes the connection.

It is possible to force Data Gateway to recreate the connection using the "DISCONNECT" statement in the script.

Environment

- Qlik Data Gateway all supported versions.

-

Qlik Gold Client Key Split Enhancement Overview

Key Split is a performance tuning option within Qlik Gold Client. Introduced in Qlik Gold Client 8.7.3, it is used to control which tables allow for splitting of table content in different Data Flows for export/import. This functionality is triggered when the configured table is a Header table in a Data Type, where it will split the table entries.

The benefit is to allow Gold Client to parallelize the import of data in the same table using multiple jobs, making the import process much faster.

Key Split is to be used carefully and for very specific export/import scenarios, especially when there is a performance issue importing tables on a target system. To get the most out of the functionality, the Parallel Processing option must be used.

At this moment, some special table type fields like GUID, RAW, STRING, XSTRING, etc. should not be used with Key Split.

There are 4 different types of Key Split options available within Qlik Gold Client:

- Alphanumeric

For a specific range of documents, it will separate the documents by the first alphanumeric character and create a Container for each of them.

A, B, C, …, 0, 1, 2, …, Special Chars.

Example: B123456789 (this entry will be grouped with all entries that start with “B”)

- Alphanumeric by Grouping (introduced in Gold Client 8.7.4 Patch 3 – 8.7.2024.05)

For a specific range of documents, it will separate the documents by the group of the first alphanumeric character and create a Container for each group.

A-C, D-F, G-I, J-L, M-O, P-R, S-U, V-X, Y-Z, 0-2, 3-5, 6-8, 9-Special Chars.

Example: B123456789 (this entry will be grouped with all entries that start with “A*”, “B*”, or “C*”)

- Numeric

For a specific range of documents, it will separate the documents by the last numeric digit and create a Container for each of them.

0, 1, 2, 3, 4, 5, 6, 7, 8, 9

Example: 123456789 (this entry will be grouped with all entries whose last digit is “*9”)

- Numeric by Grouping (introduced in Gold Client 8.7.4 Patch 3 – 8.7.2024.05)

For a specific range of documents, it will separate the documents by the group of the last two numeric digits and create a Container for each group.

Group 1: 00-04

Group 2: 05-09

Group 3: 10-14

…

Group 20: 95-99

Example: 1234567899 (this entry will be grouped with all entries whose last two digits are between “*95” and “*99”)
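The four options above can be illustrated with a short Python sketch. It only mirrors the grouping rules listed in this article and is not how Gold Client implements Key Split internally. The function names are hypothetical, keys are assumed to be non-empty, and putting all special characters in one bucket is an assumption.

```python
import string

LETTER_GROUPS = ["A-C", "D-F", "G-I", "J-L", "M-O", "P-R", "S-U", "V-X", "Y-Z"]
DIGIT_GROUPS = ["0-2", "3-5", "6-8"]


def alphanumeric_bucket(key):
    """Alphanumeric: one bucket per first character."""
    first = key[0].upper()
    if first in string.ascii_uppercase or first in string.digits:
        return first
    return "Special"  # assumption: all special characters share one bucket


def alphanumeric_group(key):
    """Alphanumeric by Grouping: first character mapped to its group."""
    first = key[0].upper()
    if first in string.ascii_uppercase:
        return LETTER_GROUPS[(ord(first) - ord("A")) // 3]
    if first in "012345678":
        return DIGIT_GROUPS[int(first) // 3]
    return "9-Special"


def numeric_bucket(key):
    """Numeric: one bucket per last digit (0 to 9)."""
    return int(key[-1])


def numeric_group(key):
    """Numeric by Grouping: last two digits mapped to Group 1 to Group 20."""
    return int(key[-2:]) // 5 + 1


# The examples used in the list above.
print(alphanumeric_bucket("B123456789"))
print(alphanumeric_group("B123456789"))
print(numeric_bucket("123456789"))
print(numeric_group("1234567899"))
```

The numeric functions assume the key ends in digits. A key with non-numeric trailing characters would raise a ValueError in this sketch.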

This diagram shows the Key Split logic for a set of Materials using the Alphanumeric and Alphanumeric by Grouping options (based on the first alphanumeric value):

This diagram shows the Key Split logic for a set of Materials using the Numeric and Numeric by Grouping options (based on the last numeric digit):

Tables using this logic are configured in the Configuration > Data Framework > Additional Tools > Key Split Config

To activate Key Split in Gold Client, it is necessary to provide the Table name, Field name, and Key split type. The Active/Inactive flag allows you to enable or disable the functionality.

It is possible to deactivate Export Engine functionality to split table exports into multiple files based on keys in the Configuration > Administration > MConfig

Example – Alphanumeric and Alphanumeric by Grouping:

Splitting the export of MARA entries based on material Number – MATNR.

In the case of exporting MM – MATERIAL MASTER using Parallel Processing, for a specific range of documents, it will separate the documents by the first digit and create Containers for each of them.

During the import process, this logic allows data to be imported into the MARA table in parallel, which makes the import faster.

Qlik Gold Client Sizing Report for table MARA Export with Key Split inactive

Qlik Gold Client Sizing Report for table MARA Export with Key Split Alphanumeric active

Qlik Gold Client Sizing Report for table MARA Export with Key Split Alphanumeric by Grouping active

Example – Numeric and Numeric by Grouping:

Splitting the export of ACDOCA entries based on document Number - BELNR.

In the case of exporting FI - FINANCE DOCUMENTS using Parallel Processing, for a specific range of documents, it will separate the documents by the last digit(s) and create Containers for each of them.

During the import process, this logic allows data to be imported into the ACDOCA table in parallel, which makes the import faster.

Qlik Gold Client Sizing Report for table ACDOCA Export with Key Split inactive

Qlik Gold Client Sizing Report for table ACDOCA Export with Key Split Numeric active

Qlik Gold Client Sizing Report for table ACDOCA Export with Key Split Numeric by Grouping active

Please refer to the Qlik Gold Client Configuration and Utilities - User Guide for more information.

Thanks to Qlik SAP Solutions Engineer Hugo Martins for drafting this content!