

How to limit the number of loaded rows in a straight table

Hi Experts,

This is probably a pure newbie question, but I need to ask it anyway.

I have to build an application for investigating serial numbers, which are by nature distinct values. There are between 250 million and 1 billion serial numbers, and they are normally alphanumeric. For the investigation, a straight table (dimensions only) is more than sufficient, but the amount of data creates the problem.

If I load the data, the application takes 30 to 80 GB of memory, and rendering the table takes very long. Filtering with the search field just doesn't work: when you finally get your results, the system does not mark them but does very strange things.

Since I learned that the rendering is done on the client computer, I want to limit the number of rows loaded into my table object to 50'0000. I thought I could do this with a max-rows parameter or by limiting the dimension of my table, but the property for limiting the dimensions is locked, so I can't use it.

Is this property locked because I don't have a KPI in my table, or am I doing something wrong?

I have seen some discussions where setting default filter criteria is suggested, but I would prefer to simply limit the number of loaded records, if that is possible.

If somebody has a suggestion on how to solve this problem, I would appreciate it very much.

Thank you very much for your help.

Best regards

Dirk


Try something like this:

if(Rank(ITEM_NUMBER) <= 100, ITEM_NUMBER)

Use this expression in your chart calculations, and look up how the Rank() function works.
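To make the idea behind a Rank()-based filter concrete, here is a minimal plain-Python model (not Qlik code): each row gets a rank, and the dimension value is returned only when the rank is within a cutoff N, otherwise null. It assumes rank 1 goes to the largest value and that tied values share the lowest rank; Qlik's Rank() has its own tie-handling modes, so treat this as a sketch of the concept only. The function names are hypothetical.

```python
def rank_desc(values):
    """Rank each value: 1 for the largest, tied values share the lowest rank.
    Simplified model; Qlik's Rank() offers several tie modes."""
    ordered = sorted(values, reverse=True)
    first_pos = {}
    for pos, v in enumerate(ordered, start=1):
        first_pos.setdefault(v, pos)  # remember the first position of each value
    return [first_pos[v] for v in values]


def limit_by_rank(values, n):
    """Model of if(Rank(x) <= n, x): values outside the cutoff become None (null)."""
    ranks = rank_desc(values)
    return [v if r <= n else None for v, r in zip(values, ranks)]


def visible_rows(values, n):
    """Assume rows whose dimension evaluates to null are hidden from the table."""
    return [v for v in limit_by_rank(values, n) if v is not None]


print(visible_rows([50, 10, 40, 30, 20], 3))  # the three highest values, in row order
print(visible_rows([5, 5, 3], 1))             # ties can push the count above n
```

Note the second call: with ties, a rank cutoff of N can show more than N rows.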

If you are developing your app and need sample data for your tables from a big data warehouse, try loading with this script prefix:

Sample 0.1 Load

The Sample prefix loads a random subset of the records from the source. 0.1 means each record is loaded with a probability of 0.1, so you get roughly 10% of the records. They are not the first records; they are chosen at random.
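The difference between sampling and taking the first rows can be shown with a small plain-Python model (not Qlik script): each record is kept independently with probability p, so the result size is only approximately p times the record count, and the kept rows are spread across the whole source. The function names are hypothetical, and keeping rows in source order is an assumption of this sketch.

```python
import random


def sample_load(records, p, seed=None):
    """Keep each record independently with probability p (0 < p <= 1).
    Models the Sample prefix as described above; rows stay in source order here."""
    if not 0 < p <= 1:
        raise ValueError("p must be in (0, 1]")
    rng = random.Random(seed)  # seeded so a test run is repeatable
    return [r for r in records if rng.random() < p]


def first_n(records, n):
    """For contrast: simply take the first n records in source order."""
    return records[:n]


rows = list(range(100_000))
subset = sample_load(rows, 0.1, seed=42)
print(len(subset))     # close to 10,000, but usually not exactly 10,000
print(first_n(rows, 5))
```

Because the sample is random, it gives a more representative development data set than the first rows, which may all come from one period or one source partition.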


Hi Dirk,

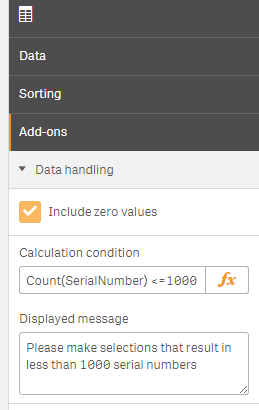

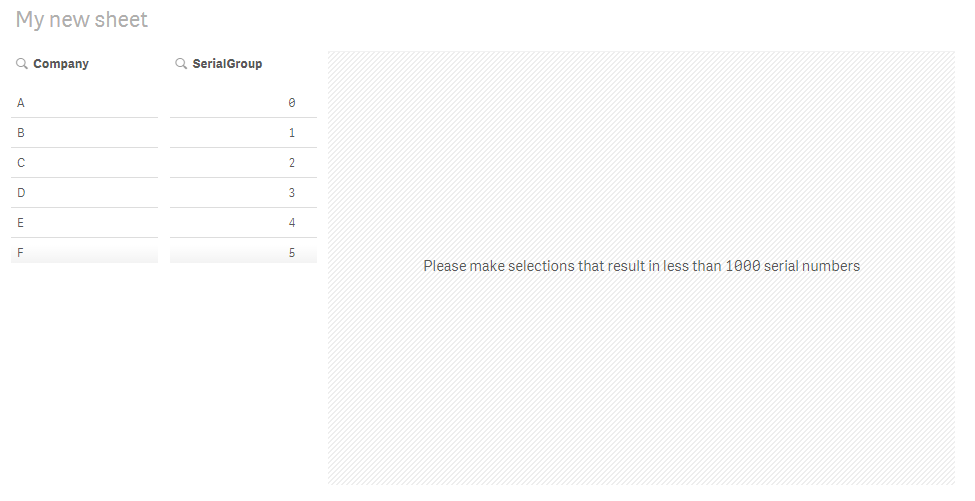

I meant using the Calculation Condition (as shown in the picture), not the Dimension Limitation. See the attached app for a working example.

You probably can't do a filter search over 250M values. I suggest using some other fields for the filtering.
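The effect of a calculation condition can be modelled in plain Python (this is not Qlik code): selections in other fields narrow the data first, and the table is only calculated when the number of distinct serial numbers left is below a limit; otherwise a message is shown instead. In Qlik the condition would be an expression along the lines of Count(DISTINCT SerialNumber) <= 500000, but that field name and limit are assumptions, as are the hypothetical `plant` field and function names below.

```python
from dataclasses import dataclass


@dataclass
class Row:
    serial: str
    plant: str  # hypothetical filter field standing in for "some other fields"


def render_table(rows, selections, max_rows):
    """Apply selections, then render only if the distinct serial count is small enough.
    selections maps a field name to the set of allowed values."""
    possible = [
        r for r in rows
        if all(getattr(r, field) in allowed for field, allowed in selections.items())
    ]
    distinct_serials = {r.serial for r in possible}
    if len(distinct_serials) > max_rows:
        # Condition not met: nothing is calculated, only a hint is shown
        return f"Too many rows ({len(distinct_serials)}); please narrow the selection."
    return sorted(distinct_serials)


rows = [Row("A001", "P1"), Row("A002", "P1"),
        Row("B001", "P2"), Row("B002", "P2"), Row("B003", "P2")]
print(render_table(rows, {}, 3))                  # too many rows, message only
print(render_table(rows, {"plant": {"P1"}}, 3))   # small enough, table is rendered
```

The point of the design is that the expensive table is never calculated for the full data set; the user must first cut it down with cheaper fields.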

-Rob


Hi Rob,

Thanks a lot for your great example. The trick of creating intervals to reduce the number of records to a manageable number is just awesome.
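A minimal plain-Python sketch of the interval idea, assuming intervals are built from the leading characters of each serial number (the attached app may define its intervals differently). A small interval field is what users select from; once an interval is selected, only its serials need to be shown. In Qlik script such a key could be derived during the load, for example with something like Left(SerialNumber, 3) AS SerialInterval, where both field names are hypothetical. The Python function names are hypothetical as well.

```python
from collections import Counter


def interval_key(serial, prefix_len=3):
    """Hypothetical interval: the first prefix_len characters of the serial number."""
    return serial[:prefix_len]


def build_intervals(serials, prefix_len=3):
    """Count the serials per interval; this small field is what users select from."""
    return Counter(interval_key(s, prefix_len) for s in serials)


def serials_in_interval(serials, key, prefix_len=3):
    """After an interval is selected, only its serials need to be displayed."""
    return [s for s in serials if interval_key(s, prefix_len) == key]


serials = ["AB1001", "AB1002", "AB2001", "CD1001", "CD1002", "CD1003"]
print(build_intervals(serials))             # interval -> number of serials
print(serials_in_interval(serials, "CD1"))  # serials shown after selecting "CD1"
```

The prefix length controls the trade-off: longer prefixes give more intervals, each holding fewer serials.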

That's exactly what I was looking for.

Thank you very much and best regards

Dirk
