How to get two tables (archive and non-archive) data to same S3 bucket and folder

Goal: move the data from two tables (archive and non-archive) into the same S3 bucket and subfolder. This article assumes that both tables share the same database schema and do not contain duplicate primary keys.
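Before choosing an option, you can check both assumptions against the source. The following is a minimal Python sketch that assumes you can read each table's column list and primary key values. It uses an in-memory SQLite database and hypothetical table names (`orders`, `orders_archive`) only so that it runs on its own. Against a real source, you would read the same information from that database's catalog instead.

```python
import sqlite3
from typing import Iterable, List, Optional, Sequence, Set, Tuple

Column = Tuple[str, str]  # (column name, declared data type)


def sqlite_columns(conn: sqlite3.Connection, table: str) -> List[Column]:
    """Read (name, type) pairs for a SQLite table, in column order."""
    # PRAGMA table_info rows are (cid, name, type, notnull, dflt_value, pk).
    # The table name is interpolated, so only pass trusted, hard-coded names.
    return [(row[1], row[2]) for row in conn.execute(f"PRAGMA table_info({table})")]


def schema_differences(a: Sequence[Column], b: Sequence[Column]) -> List[str]:
    """List position-by-position mismatches between two column lists."""
    diffs = []
    for i in range(max(len(a), len(b))):
        left: Optional[Column] = a[i] if i < len(a) else None
        right: Optional[Column] = b[i] if i < len(b) else None
        if left != right:
            diffs.append(f"column {i + 1}: {left} != {right}")
    return diffs


def overlapping_keys(a: Iterable, b: Iterable) -> Set:
    """Return primary key values that appear in both tables."""
    return set(a) & set(b)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL)")
    conn.execute("CREATE TABLE orders_archive (id INTEGER PRIMARY KEY, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 9.5), (2, 3.0)])
    conn.executemany("INSERT INTO orders_archive VALUES (?, ?)", [(2, 7.0), (3, 1.0)])

    print(schema_differences(sqlite_columns(conn, "orders"),
                             sqlite_columns(conn, "orders_archive")))  # []
    print(overlapping_keys((r[0] for r in conn.execute("SELECT id FROM orders")),
                           (r[0] for r in conn.execute("SELECT id FROM orders_archive"))))  # {2}
```

The comparison is order-sensitive on purpose. Files that end up in the same folder should have the same column layout, so two tables with the same columns in a different order are reported as different.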

Option 1:

You will need a custom script that moves or copies the files into the same subfolder (you may need to engage developers to write it). Once the script is ready, follow the steps below to configure post-upload processing on the S3 target endpoint in Qlik Replicate.

- Once you have the custom script to copy/move the files to same sub folder in S3 bucket, log into Qlik Replicate console

- Open the desired task and open the target S3 endpoint

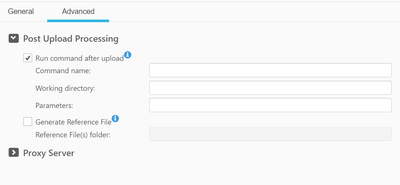

- Select Advanced tab

- Expand "Post Upload Processing", then check "Run command after upload" and provide the information to specify "Run command after upload".

Replicate runs the command after each data file is uploaded, so every file is copied or moved into the shared folder.
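The following is a minimal sketch of such a script in Python. It assumes boto3 is installed on the Replicate server and has credentials for the bucket. Everything specific in it is hypothetical:

- the bucket and folder names;
- the `<target folder>/<schema>/<table>/` layout;
- the assumption that the command receives the uploaded file's S3 key as its first argument.

Check the Replicate documentation for the parameters the command actually receives. The script also adds an `archive_` prefix to each file name as it moves the file. Files from both tables can share a name (for example, full-load files such as `LOAD00000001.csv`), so a plain move could overwrite one table's file with the other's.

```python
"""Hypothetical post-upload script: move files written for the archive table
into the non-archive table's folder in the same S3 bucket.

Assumptions (not from the Qlik documentation): the uploaded object's key is
passed as the first argument, and files are written under
<target folder>/<schema>/<table>/.
"""
import sys
from typing import Optional

# Hypothetical names; replace with your own bucket and folders.
BUCKET = "my-replicate-bucket"
SOURCE_PREFIX = "replicate/dbo/orders_archive/"
DEST_PREFIX = "replicate/dbo/orders/"


def destination_key(source_key: str) -> Optional[str]:
    """Return the new key for an archive-table file, or None to leave it alone."""
    if not source_key.startswith(SOURCE_PREFIX):
        return None  # not a file from the archive table's folder
    file_name = source_key[len(SOURCE_PREFIX):]
    if not file_name or "/" in file_name:
        return None  # skip the folder marker and anything nested deeper
    # Prefix the name so archive files cannot overwrite same-named files
    # that were written for the non-archive table.
    return DEST_PREFIX + "archive_" + file_name


def move_object(s3, bucket: str, source_key: str) -> Optional[str]:
    """Copy the object to its destination key, then delete the original."""
    dest = destination_key(source_key)
    if dest is None:
        return None
    # copy_object handles objects up to 5 GB in a single request.
    s3.copy_object(Bucket=bucket, Key=dest,
                   CopySource={"Bucket": bucket, "Key": source_key})
    s3.delete_object(Bucket=bucket, Key=source_key)
    return dest


def main() -> None:
    if len(sys.argv) < 2:
        print("usage: move_archive_file.py <s3-key>", file=sys.stderr)
        return
    import boto3  # imported here so the helpers can be tested without boto3

    moved = move_object(boto3.client("s3"), BUCKET, sys.argv[1])
    print(f"moved to {moved}" if moved else "skipped: not an archive-table file")


if __name__ == "__main__":
    main()
```

To copy the files instead of moving them, drop the `delete_object` call. Passing the S3 client into `move_object` keeps the key-mapping logic testable without touching a real bucket.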

Option 2:

If the source has archive and non-archive tables that share the same database schema, you can use a global rule to rename one table to the other. Replicate then loads both tables into the same target, merging them.

- Make sure the schema is the same for both tables

- Login to Qlik Replicate console

- Navigate to "Task Settings"

- Under Full Load set "If target table already exists:" to "Do nothing" and click ok

- From "Designer View" select the tables you want to do the full load and merge

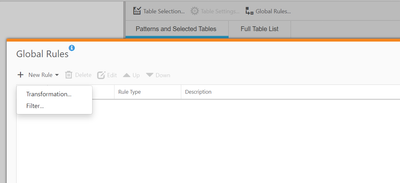

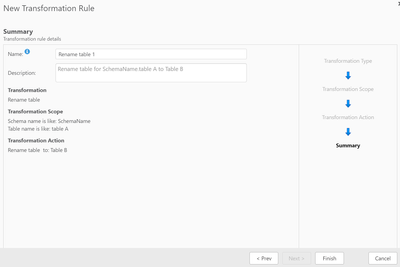

- Select "Global Rules" and choose "New Rule" and select Transformation

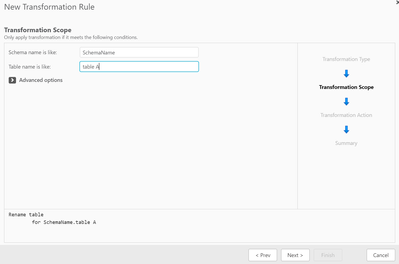

- Select Rename Table and Next

8. Enter the Schema name and Table name then select Next (if you want to merge table A with B the enter table A schema name and table name) and Next

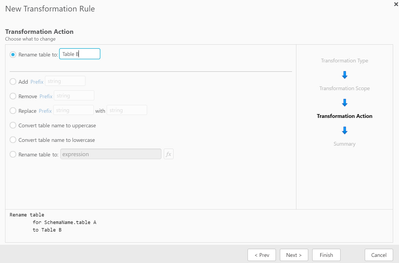

9. Select "Rename table to" and enter the destination table name (if you want to merge table A with B then enter B table name) and Next

10. Enter Name and click finish

11. Start your task
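To see why the "Do nothing" setting matters, here is a toy Python model of how the rename rule and the "If target table already exists" setting affect the target. It is a simplification under stated assumptions, not how Qlik Replicate is implemented. In the model, tables are lists of `(key, value)` rows, tables load one after another, and the table names `orders` and `orders_archive` are hypothetical.

```python
from typing import Dict, List, Tuple

Row = Tuple[int, str]  # (primary key, payload) in this toy model
POLICIES = {"drop_and_create", "truncate", "do_nothing"}


def target_name(table: str, renames: Dict[str, str]) -> str:
    """Apply a rename rule: source table name -> target table name."""
    return renames.get(table, table)


def full_load(sources: Dict[str, List[Row]], renames: Dict[str, str],
              if_exists: str) -> Dict[str, List[Row]]:
    """Load each source table, in order, into its (possibly renamed) target."""
    if if_exists not in POLICIES:
        raise ValueError(f"unknown policy: {if_exists}")
    target: Dict[str, List[Row]] = {}
    for table, rows in sources.items():
        name = target_name(table, renames)
        if name in target and if_exists == "do_nothing":
            target[name].extend(rows)  # keep existing rows, add the new ones
        else:
            target[name] = list(rows)  # new table, or existing rows discarded
    return target


sources = {"orders": [(1, "a"), (2, "b")], "orders_archive": [(3, "c")]}
renames = {"orders_archive": "orders"}  # rename table A (archive) to table B

print(full_load(sources, renames, "do_nothing"))
# {'orders': [(1, 'a'), (2, 'b'), (3, 'c')]}
print(full_load(sources, renames, "drop_and_create"))
# {'orders': [(3, 'c')]}
```

With "Do nothing", the archive rows are appended to the same target. This is also why the tables must not share primary key values: in this model, a shared key would simply appear twice. With a policy that drops or truncates, whichever table loads last replaces the other, and the load order is not something to rely on.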

Environment

Qlik Replicate 2021.5 and above