Oracle, Batch optimized apply

We are using Oracle for both source and target.

In our CDC task we need to preserve values in our target table when performing updates on the source. These columns do not exist on the source, so they are unmapped on the 'Transform' tab.

"Batch optimized apply" is our processing mode. We are observing that upon updating a source record, the target record is being deleted. The subsequent insert contains the updated value but the unmapped values are gone.

Is there a way to use "Batch optimized apply" while preserving existing, unmapped data?

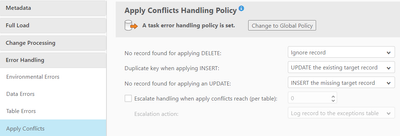

Thank you for the post to the QDI Forums. For this type of behavior can you check the Task settings for the Task under Error Handling for the Task as shown below this will have impact on the data being sent to the Target. This applies to Apply Conflicts if you are using Apply Changes and not Store Changes option

Please review these task and Error Handling settings, and also confirm that the source and target tables have matching DDL. If further investigation is needed, please open a Support case with the QDI Qlik team, who will be happy to assist you further.

Note: if you open a Support case, set the SOURCE_CAPTURE and TARGET_APPLY loggers to VERBOSE only while recreating the issue, and then set them back to Info under Loggers.

Regards,

Bill Steinagle

QDI Support

Hello @Ole_Dufour, this may well be the effect of a non-default Error Handling setting in the task, as @Bill_Steinagle implies.

For confirmation, it's best to attach the task JSON (as a .txt file): at least the task settings, and possibly the transformations for any table that is not behaving as expected. Include or omit endpoint information as you see fit.

Anyway, both 'Update existing' and 'insert when missing' are implemented as a pre-delete (if the row is present) followed by a (re)insert. Does that explain what you are seeing? You can observe this in the task log with TARGET_APPLY set to DEBUG (for prod/QA with lots of activity) or VERBOSE (for a shorter DEV/TEST run).
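To make the effect of a pre-delete plus (re)insert concrete, here is a minimal sketch that uses Python's built-in `sqlite3` module as a stand-in for the Oracle target. It is not how Replicate is actually implemented. The table `tgt`, its columns, and the helper functions are hypothetical. The sketch contrasts a plain UPDATE with a delete followed by an insert that supplies only the mapped columns. The target-only column survives the first approach and is lost in the second.

```python
import sqlite3


def make_target() -> sqlite3.Connection:
    """Hypothetical target table: 'val' is mapped from the source,
    'note' exists only on the target (unmapped)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE tgt (id INTEGER PRIMARY KEY, val TEXT, note TEXT)")
    conn.execute("INSERT INTO tgt (id, val, note) VALUES (1, 'old', 'keep me')")
    return conn


def apply_as_update(conn: sqlite3.Connection, row_id: int, new_val: str) -> None:
    # A plain UPDATE touches only the mapped column; 'note' is left alone.
    conn.execute("UPDATE tgt SET val = ? WHERE id = ?", (new_val, row_id))


def apply_as_delete_insert(conn: sqlite3.Connection, row_id: int, new_val: str) -> None:
    # Pre-delete (if present) followed by a (re)insert of the mapped columns only.
    # 'note' is not in the column list, so the new row gets its default (NULL here).
    conn.execute("DELETE FROM tgt WHERE id = ?", (row_id,))
    conn.execute("INSERT INTO tgt (id, val) VALUES (?, ?)", (row_id, new_val))


def fetch(conn: sqlite3.Connection, row_id: int):
    # Return the full row, including the target-only column.
    return conn.execute(
        "SELECT id, val, note FROM tgt WHERE id = ?", (row_id,)
    ).fetchone()


if __name__ == "__main__":
    a = make_target()
    apply_as_update(a, 1, "new")
    print(fetch(a, 1))  # (1, 'new', 'keep me')

    b = make_target()
    apply_as_delete_insert(b, 1, "new")
    print(fetch(b, 1))  # (1, 'new', None)
```

In Oracle, as in SQLite, a column left out of an INSERT column list receives its DEFAULT value if one is defined and NULL otherwise. That matches the "unmapped values are gone" symptom described in the question.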

Greetings from Eindhoven (this week),

Hein.

Thank you. We have opened a support case.