
huge transactions waiting for commit (until source commit)

Hello,

One day, our Qlik Replicate Log Stream task received a huge number of CDC changes from our source database (IBM DB2 for LUW) in just a few transactions. Unfortunately, there was a problem processing them, and they were stuck on disk until the source commit. Because of that, latency spiked and there was no way to bring it down. We tried restarting the task from a certain point in time, but those changes still could not be processed, so we had to restart the task and accept a gap in our data.

Do you know what the possible cause could be? Was it simply too much for Qlik Replicate, or must it have been an issue with our source database? Is there anything we can do on the Qlik side to be safe in the future? I've contacted the people who manage the database, but there were no infrastructure changes, failures, data cleanups, etc. at that time, just a larger than usual number of changes.

I'm attaching screenshots.

Best regards and thank you,

Adam

Topics: CDC - 1-many - Log Stream, Connectivity - Sources or Targets, Performance

Accepted Solutions


Hey @AdamWicz,

Thanks for posting on the forum. If the latency is caused by a long-running transaction on the source, your options are to wait for the task to catch up or to reload the task. Replicate will not send changes to the target until a commit is received.
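The commit-gated behavior described here can be pictured with a toy Python model. It is a hypothetical sketch, not Replicate's implementation: changes are buffered per transaction ID and released to a "target" list only when that transaction's commit arrives. The `CommitGatedBuffer` class and the event names are made up for illustration.

```python
from collections import defaultdict


class CommitGatedBuffer:
    """Toy model: hold changes per transaction until its commit is seen.

    Hypothetical illustration only; it does not reflect Replicate internals.
    """

    def __init__(self):
        self.pending = defaultdict(list)  # txn_id -> changes waiting for commit
        self.applied = []  # changes released to the "target", in commit order

    def on_event(self, txn_id, kind, payload=None):
        if kind == "change":
            self.pending[txn_id].append(payload)
        elif kind == "commit":
            # Release the whole transaction at once, then forget it.
            self.applied.extend(self.pending.pop(txn_id, []))
        elif kind == "rollback":
            # Discard everything buffered for this transaction.
            self.pending.pop(txn_id, None)

    def accumulating_changes(self):
        """Number of buffered changes still waiting for a source commit."""
        return sum(len(changes) for changes in self.pending.values())


buf = CommitGatedBuffer()
for i in range(5):
    buf.on_event("T1", "change", f"row-{i}")  # large transaction, never committed
buf.on_event("T2", "change", "other-row")
buf.on_event("T2", "commit")

print(buf.applied)                 # → ['other-row']
print(buf.accumulating_changes())  # → 5
```

In this model, a single huge open transaction (T1) keeps growing the buffer while nothing from it reaches the target, which is consistent with the latency pattern described in the question.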

If there are no errors or warnings in the logs, we recommend reaching out to our Professional Services team for help with performance tuning on the Qlik side. Additionally, I would talk to the source DBA about why these long-running transactions occurred and whether there are any recommended timeout settings.

Best,

Kelly


Further to @KellyHobson's answer, you can also confirm the uncommitted transactions by looking in the Replicate\tasks\sorter directory for the tswp (swap) files, as this is where we keep track of all open transactions between the Source and Target endpoints. As noted, if you have an influx of changes on the Source, you would see an increase in these files as well.

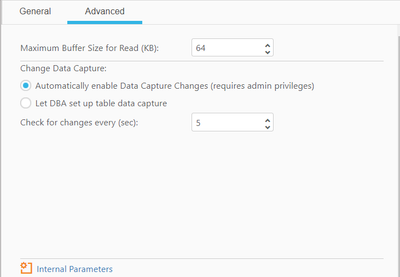
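To watch those swap files over time, a small Python script can count them in a sorter directory and sum their size. This is a sketch under two assumptions: that the swap files end in `.tswp`, and that you pass the sorter directory of your own installation as the argument (the function name `sorter_swap_stats` is hypothetical).

```python
import sys
from pathlib import Path


def sorter_swap_stats(sorter_dir):
    """Return (file_count, total_bytes) for *.tswp files directly in sorter_dir.

    Assumes the swap files use the .tswp extension; returns (0, 0) if the
    directory does not exist.
    """
    base = Path(sorter_dir)
    if not base.is_dir():
        return 0, 0
    files = [p for p in base.glob("*.tswp") if p.is_file()]
    total = sum(p.stat().st_size for p in files)
    return len(files), total


if __name__ == "__main__":
    # Pass the task's sorter directory as the first argument (defaults to ".").
    target = sys.argv[1] if len(sys.argv) > 1 else "."
    count, size = sorter_swap_stats(target)
    print(f"{count} .tswp files, {size / (1024 * 1024):.1f} MiB")
```

Running it periodically while the task is active and comparing the numbers should show whether the open-transaction swap files grow during a burst of source changes.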

As a side note, on the DB2 LUW Source endpoint you can set the internal parameter below to 0, which will dump the DB2 read speeds. You can also increase the Maximum buffer size for read on the Advanced tab.

Internal parameter to see the read speeds from the DB2 transaction logs:

| Parameter | Value |
|---|---|
|  | 0 |

Maximum buffer size for read:

Note: as per @KellyHobson's update, as long as there are no errors in the task and it is not switching to one-by-one mode, the best course of action is to work with the Professional Services team.

Thanks!

Bill


Good day, I'd like to make sure that the information from @KellyHobson and me helped with this post. Let us know.

Thanks!

Bill

"Accumulating (until source commit)" means that the transaction still needs to be committed in the source database, and until it is committed, the replication process will not read the changes from the source or transfer them to the target.

"The number displayed in 'Accumulating' indicates the number of transactions that have not yet been committed in the source database."

Am I correct on this?