Call statement issue

Hey there,

I am looking for some help with my Include/CALL statements. I have found what I think is a bug, but if anyone knows a way around it or can find a flaw in my method, I would appreciate the assistance!

I am trying to use a loop to load all my script files into the application. Each script file contains a SUB routine. The code below works if I comment out the loop for the Include statements and uncomment the single Include statement above the CALL statement, which makes me think there is an issue with SUB routines that are loaded inside a loop.

Here is my code:

```qlik
SET vRoot = '..\..\..\..\SQL Script Library';

/* Builds a list of only the custom scripts in the folder */
FOR Each File in filelist ('$(vRoot)'&'\*.qvs')
    ScriptList:
    Load
        SubField('$(File)','\',-1) AS ScriptFullName
        , SubField(SubField(SubField('$(File)','\',-1),'_',1),'.',1) AS GenericScriptName
    AUTOGENERATE 1
    Where SubField('$(File)','\',-1) like '*_BD*';
next File

/* Appends the remaining scripts, skipping any generic script where a custom script should be run instead */
FOR Each File in filelist ('$(vRoot)'&'\*.qvs')
    Concatenate (ScriptList)
    Load
        SubField('$(File)','\',-1) AS ScriptFullName
        , SubField(SubField(SubField('$(File)','\',-1),'_',1),'.',1) AS GenericScriptName
    AUTOGENERATE 1
    Where NOT EXISTS(GenericScriptName,SubField(SubField(SubField('$(File)','\',-1),'_',1),'.',1));
next File

/* Uses the list of script names created above and includes only those scripts in the application so they can be called */
For i = 0 to NoOfRows('ScriptList') -1
    Let vFileName = Peek('ScriptFullName',$(i),'ScriptList');
    $(Include=..\..\..\..\SQL Script Library\$(vFileName));
    TRACE $(vFileName) Loaded;
Next i

///* Clean up variable list */
//SET vRoot = '';
//SET File = '';
//SET i = '';
//SET vFileName = '';
//DROP TABLE ScriptList;

//$(Include=..\..\..\..\SQL Script Library\Eligibility_BD.qvs);

CALL Elig (vIncludeElig,vIncludeEmployer);
```

Accepted Solutions

Ask yourself what the scope of a SUB routine that is defined only inside a control statement will be. Will it be known outside the loop? I don't think so.

A simple example:

```qlik
LET C = 0;

FOR i = 1 TO 1
    SUB ADD(A, B)
        LET C = A + B;
    END SUB
NEXT

CALL ADD(1, 2);
```

If you run this script, the script engine won't find a definition for SUB ADD, although the SUB statement has been executed at least once. SUBs can be nested or embedded inside other SUBs or control statements, but as soon as that context is destroyed, the SUB definition is lost...
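For contrast, here is a minimal variant of the same example with the SUB block moved to the outer script level, outside any control statement. It assumes the scoping behaviour described above (a SUB defined at the outer level stays callable from every statement that follows its definition) and is a sketch, not something tested against a particular QlikView version:

```qlik
LET C = 0;

// Defined at the outer level (not inside FOR, IF or another SUB),
// so the definition stays visible to every statement that follows.
SUB ADD(A, B)
    LET C = A + B;
END SUB

FOR i = 1 TO 1
    CALL ADD(1, 2);   // ADD is in scope inside the loop
NEXT i

CALL ADD(3, 4);       // ADD is still in scope after the loop
TRACE C is now $(C);
```

Only the position of the SUB block changes; the CALL statements are the same kind as in the failing example.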

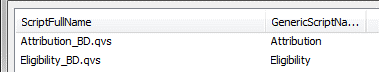

Here is what the ScriptList table looks like when loaded:

When you compare the ScriptFullName to what is being loaded with the stand alone Include statement, you see they are the same thing so I am not sure why one works and one doesn't.

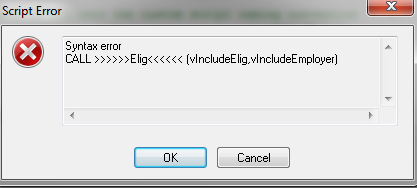

Also here is the error I get when I trying to run the code the way I had intended (with the loop for the include).

Hi Peter,

Thanks for the quick reply.

What you are saying is that the SUB will only exist as long as the FOR loop is running, and once the loop is exited it can no longer be called. When I step through this code in the debugger, I can see that each file included via the Include statement gets a new tab and just waits to be run (since my scripts are all SUBs; otherwise the code would have run at the time of the Include statement). Why, then, wouldn't these tabs be purged once the loop that created them has exited? After the code gets past the loop to the CALL statements, I can still see these tabs with my SUB routines waiting to be run.

Well (and I didn't test this, sorry), it may be that when you enter the FOR loop again, your SUBs become visible again and you could CALL them all right. But that's not what you intend to do, I guess. Once you leave the scope, the SUB definitions become invisible. While they aren't necessarily dropped and may still be there somewhere, you simply can't reach them from the point where you'd like to call them.

Apparently, the fact that they are still visible in your debugger doesn't mean a thing.

AFAIK there is only one solution: in the FOR loop, concatenate the contents of all script files into a variable, but don't execute the SUB definitions. Then, after completing the FOR loop, expand the concatenated script block inline at the outer script level, so that the SUB definitions are executed and visible to all the code that follows, and issue a series of SUB CALLs in a new FOR loop.

In general, your approach of loading the include files from a list in a loop should work. That all or most of the includes contain subroutines isn't a show-stopper per se, but you will need to be very careful with their order and any dependencies between them. As Peter mentioned, the scope of the subroutines needs to be considered. AFAIK, variables created inside the routine won't be available outside it, and there are probably further things to bear in mind.

Another point is the various syntax issues that come with constructing such a complex load approach. For example, I could imagine that you might need different kinds of quotes, dollar-sign expansion and sometimes further adjustments to the variables and parameters, maybe like:

```qlik
CALL Elig ('$(vIncludeElig)','$(vIncludeEmployer)');
```

After all, I'm not sure you will do yourself a favor with this complicated approach to creating load scripts. It's quite difficult to develop, and maintaining it will be a nightmare...

- Marcus

Thanks again Peter for the reply.

Interesting idea here.

While I don't understand why the SUBs wouldn't be available outside the loop, it would explain why my code worked outside the loop. I was never executing my SUBs within the loop previously; I just wanted a better way to load them with less code.

I suppose a better option here would be to combine the Include and CALL statements in the loop. This would eliminate the issue I had been experiencing, but since my calls need to happen in a specific order, I will need to rank my script names so that they run in that order.
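A minimal sketch of that idea keeps each Include and its CALL inside the same loop iteration, so the SUB definition is still in scope when it is called. It assumes two hypothetical additions that are not in the original script: a RunOrder field in ScriptList that ranks the scripts, and a SubName field holding the name of the SUB each file defines. It also assumes the SUBs take no parameters:

```qlik
// Hypothetical: ScriptList has been extended with RunOrder and SubName fields.
OrderedScripts:
NoConcatenate
LOAD ScriptFullName,
     SubName,
     RunOrder
RESIDENT ScriptList
ORDER BY RunOrder;

FOR i = 0 TO NoOfRows('OrderedScripts') - 1
    LET vFileName = Peek('ScriptFullName', $(i), 'OrderedScripts');
    LET vSubName  = Peek('SubName', $(i), 'OrderedScripts');

    // Include and CALL in the same iteration: the SUB is defined
    // and called inside the same control statement.
    $(Include=$(vRoot)\$(vFileName));
    CALL $(vSubName);

    TRACE $(vFileName) included and $(vSubName) called;
NEXT i

DROP TABLE OrderedScripts;
```

The ORDER BY in the RESIDENT load fixes the sequence, and Peek then reads the rows in that order.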

Do you foresee any issue with this approach in the data model layer of a three-QVW development setup (generator, data model, visual)? I assume everything the code creates should be available outside the loop once it has run.

Thanks for your input, Marcus.

You raise a good point about the complication of looping the CALL statements, since each of them passes different variables to its SUB routine. That would be a pain to maintain.

Let me ask you a question about variables in SUB routines.

If I write my SUBs without any parameters, would the application still know the variables' values when the SUB routine runs? For example, instead of specifying the path to a file in each script, we have defined a variable for it. If I don't include that variable in the SUB parameters, shouldn't the application still know where the file resides?

The reason I ask is that a lot of my SUBs have IF statements that determine whether the code should run, and those variables are stored in a file that is loaded ahead of this loop. If I just remove the parameters from the CALL statements, things suddenly get easier to code.

An alternative could be to load the content of each script file into its own variable (using a FOR loop), then rearrange the variables (to get the scripts into the correct order based on some external factor), and then simply expand the variables at the outer level, in sequence. After that, you can call the SUBs however and wherever you like.

Expanding the variables is a no-brainer. Imagine that you can have a maximum of 26 external script files, and at the moment you have only 5. The following series will then expand whatever you have. Empty or non-existent variables (from vScriptExpansionF onward, because the FOR loop stopped after five cycles) won't cause any problems.

```qlik
$(vScriptExpansionA)
$(vScriptExpansionB)
$(vScriptExpansionC)
$(vScriptExpansionD)
$(vScriptExpansionE)
$(vScriptExpansionF)
// ... and so on ...
$(vScriptExpansionZ)
```

If you are careful, I don't think this will cause any problems in a server architecture with different stages, provided you execute the series of scripts in exactly the same way every time. Also, be careful to check the scope and visibility of all variables that need to be available at the outer level.

Most of my script variables (mainly paths, file types and similar things) are loaded at the very beginning of the script with multiple Include statements in a tab called "prepare", so they are global everywhere until the script finishes and another Include statement drops all those variables again. This minimizes the need to pass many variables to the routines.
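To illustrate that point, here is a sketch of a parameterless SUB that relies on variables set earlier at the outer level. The variable vDataPath and the value assigned to vIncludeElig are hypothetical; vIncludeElig only borrows the name from the original CALL:

```qlik
// Set once at the start of the script (for example in a "prepare" tab).
SET vIncludeElig = 1;                  // hypothetical switch value
SET vDataPath = ..\..\..\..\Data;      // hypothetical path

// No parameters: the SUB reads the outer-level variables directly.
SUB Elig
    IF $(vIncludeElig) = 1 THEN
        TRACE Eligibility data would be loaded from $(vDataPath);
    END IF
END SUB

CALL Elig;
```

If vIncludeElig is not defined when the SUB runs, the IF line expands to `IF = 1 THEN` and fails, so the "prepare" step has to run before any CALL.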

Actually, I don't use many subroutines for loading anything. Earlier, I did a lot of that kind of thing to create load scripts on the fly. I think some of them were really nice from a pure coding point of view, but they were too complicated for a production environment, where things must be simple and stable.

- Marcus
