Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- Forums

- :

- Analytics & AI

- :

- Products & Topics

- :

- App Development

- :

- Re: Data Doubling on Incremental Load

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Data Doubling on Incremental Load

I am trying to load data in an incremental fashion. Initially we have created a data dump of 120 million rows after creating the dump i want to load data as it comes on a weekly basis. The data that will come will have a new time stamp i.e an increased date from the previous loaded date. I have used this article for creating an incremental load Link.

This Technique works on the same data that i have created, but as i try on main data it doubles with the new come rows.

I can share the code also if someone can guide me why data is doubling.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sharing the load script will definitely help identify what the issue is.

The most likely thing that is missing is a WHERE EXISTS on the incremental part to only load data from the persisted QVD that has not been loaded from the latest load. To use this you need a unique key on the table. If you don't have a unique key you may need to create one by concatenating a number of fields until it is unique.

I have my own article on incremental load here, but it looks like the one you found is spot on anyway.

Steve

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @stevedark,

I am writing the data load script which i have used with comments. The data on which increment load is being applied is 120 million.

[Table_Shipping]:

LOAD

distinct

number,

date,

l_date,

e_date,

exp_country_code,

exp_port,

port_type,

invoice_num,

invoice_item_sr_no,

i_code,

scheme_code,

license_no,

file_no,

uom_code,

fc_code,

cnt_code,

tot_fob_val_fc,

tot_fob_val_inr,

tot_qty

FROM [fact_all_shipping_bill_export.qvd]

(qvd);

[Last_Update_Date]:

load

max(date) as MaxDate //Max Date Extracted from Previous Data

Resident [Table_Shipping];

Let Last_Update_Date = peek('MaxDate',0,'Last_Update_Date');

drop table [Table_Shipping];

Second Part

[Incremental]:

LOAD

Distinct

number,

date,

l_date,

e_date,

exp_country_code,

exp_port,

port_type,

invoice_num,

invoice_item_sr_no,

i_code,

scheme_code,

license_no,

file_no,

uom_code,

fc_code,

cnt_code,

tot_fob_val_fc,

tot_fob_val_inr,

tot_qty

FROM [New_Data_.qvd] // Here New Data will be there

(qvd) where date > $(Last_Update_Date); //Where Condition is applied on 'date' so that we get data ahead of certain 'max date' from previous data

Concatenate

LOAD

Distinct

number,

date,

l_date,

e_date,

exp_country_code,

exp_port,

port_type,

invoice_num,

invoice_item_sr_no,

i_code,

scheme_code,

license_no,

file_no,

uom_code,

fc_code,

cnt_code,

tot_fob_val_fc,

tot_fob_val_inr,

tot_qty

FROM [fact_all_shipping_bill_export.qvd]

(qvd);

store [Incremental] into [fact_all_shipping_bill_export.qvd];

drop table [Incremental];

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Date formats mismatch could be a reason why not working here. Try to compare in numeric format. Make the following changes and try:

Let Last_Update_Date = Num(peek('MaxDate',0,'Last_Update_Date'));

where num(date) > $(Last_Update_Date);

If this doesn't work, check on the values of variable and date field - if they are coming as expected in numeric format.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@tresB is most likely onto something with the using of a numeric date, this could already be a number though, due to the use of Max. The easiest way to find out is to put in a trace after the PEEK.

TRACE $(Last_Update_Date);

Regardless of the incremental load, there are some things in your script that will make it hugely slow, and it will be worth sorting those out as well.

I don't see why you are loading the old data, getting the date, dropping the old data, loading the new and then loading the old again. This seems to be adding unnecessary steps. If it is because the CONCATENATE statement must be the next statement after the previous load, note that you can do CONCATENATE(Table_Shipping) to concatenate to a table much later on in the script if you need.

Getting the Max date from a loaded table can take ages, you might consider another way of recording what date your old table is up to. Perhaps saving this to a separate QVD that you can load it from?

The addition of the DISTINCT statement could be breaking the Optimized load from your QVD. It could also be removing valid duplicates which could lower your numbers. If you are concerned about dupes getting into your data it would be best to solve this when creating the QVD, not loading from it. In the main DISTINCT is not required though.

Getting those optimisations right might make it simpler to fix the incremental.

Personally, when doing incrementals on dates I tend to create separate QVDs for each period and then load them in a for each loop. For instance, you could create a QVD for each day in the current month, then after month end load all of the daily QVDs and store those into a monthly QVD. In your load script you could then loop for each prior month loading those QVDs and then for each day in the current month.

This approach works nice for intra-day loads, as you can keep overwriting the current day file during the day without having to go near the older files.

Hope that all makes sense?

Cheers,

Steve

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Further thought... if you have a unique key in number or invoice_item_sr_no, then loading the new data and then loading from the old data with WHERE NOT EXISTS (number) will be infinitely more efficient.

If neither of those are unique you could create a new unique key when building the QVDs, e.g.

LOAD

invoice_num & ':' & invoice_item_sr_no as UniqueID,

This can then be used for the WHERE NOT EXISTS.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @stevedark,

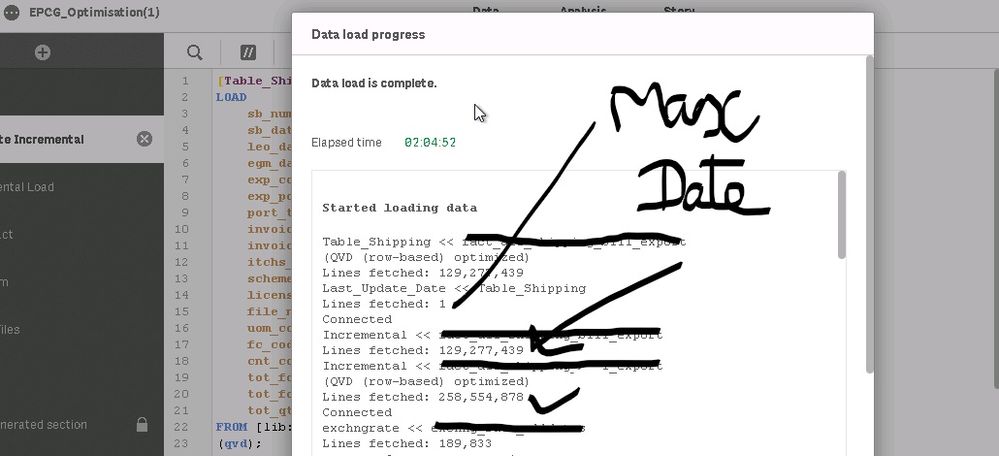

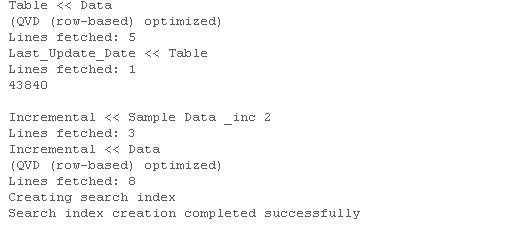

I have done poc of the method that i am implementing here. I created a file with 5 rows and 2 col. The condition that i have applied is on date. As suggested by you Trace() method was used.

In Sample data inc 2 we have 8 rows. 3 are from date ahead of previous one. Here the results are showing fine. but as soon as i get my real data. The condition is not working properly. I understand that the current procedure can be implemented in more efficient manner. But my primary concern is if the script is working fine on smaller data set why it is showing vague result in large data. Is it a limitation of qlik sense.

In this example we have previous data set and the new data which has at least 17 different rows as compared to previous data, but it does not even load a single row from NewData File.

I cannot store date in another file as it would make my data architecture very complex.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

So, it appears to be getting 44018 (6th July 2020) from the historical data okay, but not finding anything since then in the New_Data_ QVD.

Suggest that you try loading the min and max dates from the New_Data_ QVD and display them as integers. You can do this using TRACE again, or load those values into an app.

My thought is that the date field in New_Data_.qvd may not be in the correct format, perhaps it's a string not a numeric date.

Where is New_Data_.qvd being created and what data source is it coming from?

Steve

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Sanket_Sharma Date format match is important while you apply the incremental logic. So first check the source field date format on which you are doing incremental then you can set your variable value with same format as source like below

[Last_Update_Date]: load date(max(date),'YYYY-MM-DD') as MaxDate //Assuming source date format 'YYYY-MM-DD' Resident [Table_Shipping];

Let Last_Update_Date = peek('MaxDate',0,'Last_Update_Date');

Now your where condition should work as expected. Don't forget single quotes as highlighted below

where date > '$(Last_Update_Date)';

But catch is that above where condition doesn't work for all the data sources. For example for few oracle sources you may need to use to_date function like below to evaluate where condition

where date > to_date('$(Last_Update_Date)','YYYY-MM-DD');