Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- Forums

- :

- Analytics & AI

- :

- Products & Topics

- :

- App Development

- :

- Re: Help and pointers on specific Perfomance optim...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Help and pointers on specific Perfomance optimization

Hi all

My team has been handed over a Qlik application for production, but the performance doesn't yet seem mature for at publication. I've read the post by Jonny Poole with great interest (post ) and used the QS Document Analyzer for pointing out the most time consuming calculations.

I have a bunch of questions, and hope someone can guide me in the right direction in terms of optimization of this application.

1) Map-color by expression. A huge script is inserted as color-code for a map, including a ton of nested if's (including the same set-expression) which is both slow and impossible to understand. I've converted the essential expression to a variable, and made a pick/match expression.

Pick(Match(-1,

$(vMap_new) <= 1,

$(vMap_new) <= 1.1,

$(vMap_new) <= 1.2,

$(vMap_new) > 1.2),

RGB(83,167,28),

RGB(124,189,224),

RGB(0,126,197),

RGB(1,62,96))

But as far as I can understand, this is still heavy as every combination must be evaluated (due to the ranges?). As for the actual expression I'm not sure how to optimize. Only thing I could come up with was the Antal_Pakninger > 0. Moving it to the load-script and making a FLAG which could either be multiplied with the measure, or Sum( {<Antal_Pakninger_Flag={1}>} SUM_COLUMN)

((Sum({

<EK_YDER=,

EK_KLYNGE=,

[BK_Ekspeditions_Dato.autoCalendar.MånedSiden] = {">=$(vMonthAgoEnd)<$(vMonthAgoStart)"},

ANTAL_PAKNINGER={">0"},

[BK_Ekspeditions_Dato.autoCalendar.ÅrMåned]=,

KOMMUNE-={""},

NATIONAL={"1"},

KLYNGE=,

OWN_DATA=,

Dim_Yder.TilDato={"$(=$(vMaxDateYder))"}>}

SUM_COLUMN)

/

sum({<

EK_YDER=,

EK_KLYNGE=,

BK_Ekspeditions_Dato ={"$(=$(vMaxDate))"},

Dim_Yder.TilDato={"$(=$(vMaxDateYder))"},

EK_AGR_PERSON=,

Indikation=,

ATC_5=,

KOMMUNE-={""},

NATIONAL={"1"},

KLYNGE=,

OWN_DATA= >}

Antal_Sikret) * 1000)

/

((Sum({<

EK_YDER=,

EK_KLYNGE=,

[BK_Ekspeditions_Dato.autoCalendar.MånedSiden] = {">=$(vMonthAgoEnd)<$(vMonthAgoStart)"},

[OPGOERELSES_DATO.autoCalendar.ÅrMåned]= ,

REGION={'Region Hovedstaden', 'Region Midtjylland', 'Region Nordjylland', 'Region Sjælland', 'Region Syddanmark'},

ANTAL_PAKNINGER={">0"},

Dim_Yder.TilDato={"$(=$(vMaxDateYder))"},

NATIONAL={"1"},

KLYNGE=,

OWN_DATA= >}

total SUM_COLUMN)

/

sum({<

EK_YDER=,

EK_KLYNGE=,

BK_Ekspeditions_Dato ={"$(=$(vMaxDate))"},

EK_AGR_PERSON=,

Indikation=,

ATC_5=,

REGION={'Region Hovedstaden', 'Region Midtjylland', 'Region Nordjylland', 'Region Sjælland', 'Region Syddanmark'},

Dim_Yder.TilDato={"$(=$(vMaxDateYder))"},

NATIONAL={"1"},

KLYNGE=,

OWN_DATA= >}

total Antal_Sikret)) * 1000))

2) Surprisingly one of the filters were the slowest performer, and seems to be due to AGGR(ONLY()) combination.

=AGGR(ONLY({< [OPGOERELSES_DATO.autoCalendar.MånedSiden] = {"<39"} >} pText_2), pText_2)Apparently OPGOERELSES_DATO and pText_2 are in seperatate dimensions, with the OPGOERELSES_DATO having more than 15.000.000 rows. Furthermore it uses the AutoCalendar, and I'm not aware how to make flags on this in Qlik.

3) Some of the numbers will be masked, in case the value is < 5. Are there any good way to do this? The current way is nested if's:

IF(

sum({<xxxxxx>}SUM_COLUMN)

>4,

num(sum({<xxxxxx>}SUM_COLUMN),'#.##0',',','.'),

IF(

sum({<xxxxxx>}SUM_COLUMN) >0,dual(num('5','<#'),4)

,))

,'#.##0',',','.')

Thank you for your time 🙂

- Tags:

- set expression

Accepted Solutions

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

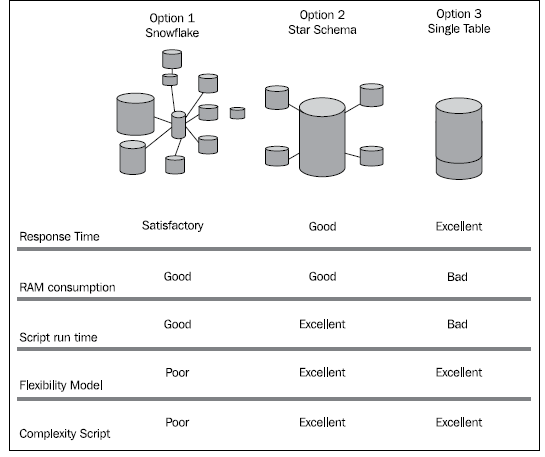

I think optimizing the UI performance should start one step before within the datamodel. It should be designed in the direction of a star-scheme and by really large datasets rather a single big table. Any link-table approaches should be avoided. Especially by complex expressions with if-loops and/or aggr-constructs the required fields should come from one table.

By checking this you should also consider if there are any UI parts which could be transferred within the script.

Further I suggest to replace the auto-calendar with a real master-calendar. Especially if the derived field comes from a big fact-table it has a negative impact on the performance.

Your pick(match()) approach is in general the same like the nested if-loops because it has multiple calculations which all compare against -1. Usually better performed an approach which calculates only ones and compared it against a fixed valuelist. Important by such method is an appropriate rounding of the result. It may look like:

pick(match(round(YourExpression, '0.1'), 0.0, 0.1, ..., 1.0, 1.1, ..., 9.9), col1, col2, ..., col11, col12, ..., col100)

- Marcus

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I think optimizing the UI performance should start one step before within the datamodel. It should be designed in the direction of a star-scheme and by really large datasets rather a single big table. Any link-table approaches should be avoided. Especially by complex expressions with if-loops and/or aggr-constructs the required fields should come from one table.

By checking this you should also consider if there are any UI parts which could be transferred within the script.

Further I suggest to replace the auto-calendar with a real master-calendar. Especially if the derived field comes from a big fact-table it has a negative impact on the performance.

Your pick(match()) approach is in general the same like the nested if-loops because it has multiple calculations which all compare against -1. Usually better performed an approach which calculates only ones and compared it against a fixed valuelist. Important by such method is an appropriate rounding of the result. It may look like:

pick(match(round(YourExpression, '0.1'), 0.0, 0.1, ..., 1.0, 1.1, ..., 9.9), col1, col2, ..., col11, col12, ..., col100)

- Marcus

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Marcus

Thank you for the inputs! I'm aware of the Star schema approach and single table based on this image, but unsure how much we are allowed to deviate from the current data-model. Trying to consolidate some of the fields would definately give some performance boost, and something we will look into.

We'll look into the rounding of values for the pick/match, and see if we can come up with a good solution 🙂

Thank you for your time!