Qlik Sense QVF app takes too long to open - size 12GB

We have a Qlik Sense app whose QVF file is 12 GB. It takes too long to open. Can anybody suggest what could make a Qlik Sense app with this much data open faster?

How long is too long? How much RAM does this app consume, and how much RAM is still available? If there is any swapping into virtual memory, it will be very slow.

Another important point: do you really need all of this data in the form in which it is loaded in the app? I could imagine that there is a lot of potential to optimize the data model. A screenshot of the table viewer, the number of records in the main tables, and some more details would be useful here.

- Marcus

This issue is happening on dev Qlik Sense Server (we don't have other environments yet). It has 96 GB RAM and is a physical server. What is "swapping into the virtual RAM"?

I have to check with the user whether the whole volume is needed. As of now, when I try to open the sheets, some of the components keep loading forever. Data is loaded from different sources (QVD, ODBC, Excel). There were many (about 10) linked tables in the data model (no circular references). I tried using left joins to merge them all into 1 table, but that only made the app open faster; the sheets still take forever to open. I will check with the user about using the 'Inner Keep' option to have multiple tables with less data. But if there is no way to reduce the data volume significantly, what other options do we have?

Swapping into virtual memory means that the OS, or Qlik, doesn't have enough RAM available, so the hard drive is used to buffer the demand.

As mentioned above, more details are needed about the number of records, the kind of data, the structure of the data model, the kind and number of objects used, and what the expressions look like.

- Marcus

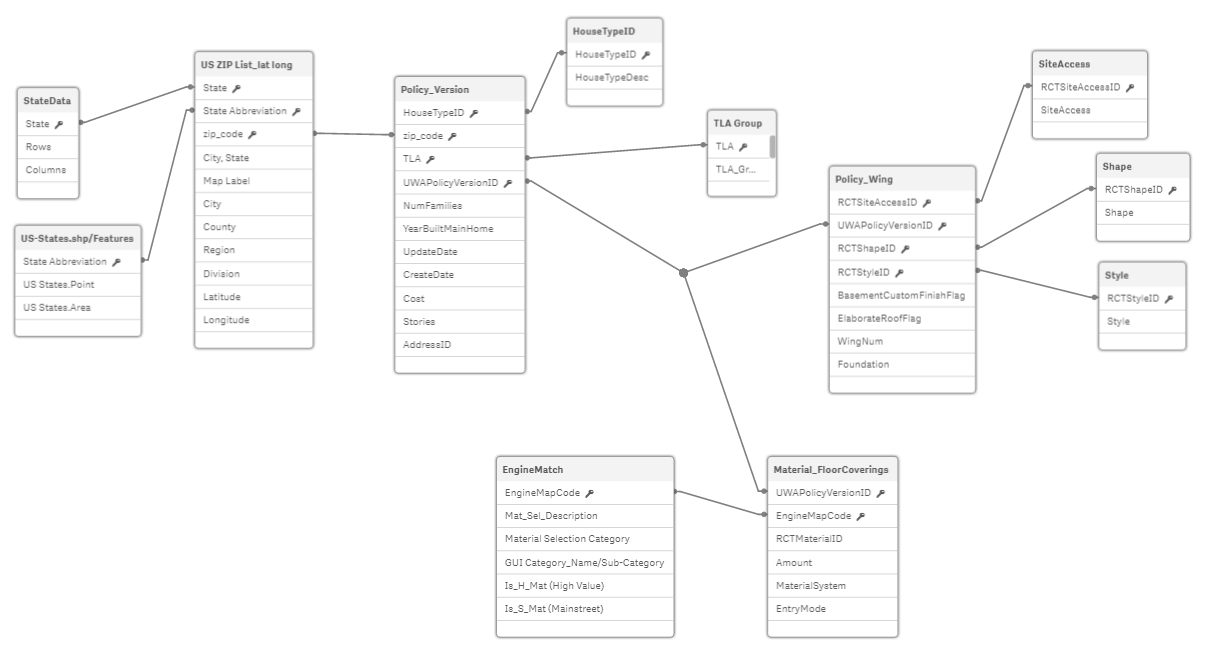

This is the data model of the 12 GB app. I tried using left joins to put everything into one single table, but that didn't help at all.

I think reducing the number of tables should have a positive effect on the GUI response times. It looks like you could quite easily reduce the model to 3 tables: Policy_Version + Policy_Wing + Material_FloorCoverings. Merging those 3 tables could be more complicated, because their relationship might not be 1:1, which means a simple join would change the number of records. In that case a mapping approach could be useful, or, if the key really is only a key, you could put it into an autonumber function.
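To illustrate why a relationship that is not 1:1 matters, here is a minimal sketch in plain Python (not Qlik script). The field names `PolicyID` and `Wing` and the sample rows are made up for illustration. It compares a naive left join with a mapping-style lookup that keeps only the first match per key, which is how I understand a mapping approach to behave.

```python
def left_join(left, right, key):
    """Naive left join: one output row per matching right-hand row."""
    out = []
    for l in left:
        matches = [r for r in right if r[key] == l[key]]
        if not matches:
            out.append(dict(l))  # keep unmatched left rows
        for r in matches:
            out.append({**l, **r})
    return out


def apply_map(left, right, key, field):
    """Mapping-style lookup: first match wins, row count never changes."""
    lookup = {}
    for r in right:
        lookup.setdefault(r[key], r[field])  # ignore later duplicates
    return [{**l, field: lookup.get(l[key])} for l in left]


# Hypothetical sample data: policy 1 has two wings, policy 2 has none
policies = [{"PolicyID": 1}, {"PolicyID": 2}]
wings = [{"PolicyID": 1, "Wing": "North"}, {"PolicyID": 1, "Wing": "South"}]

joined = left_join(policies, wings, "PolicyID")
mapped = apply_map(policies, wings, "PolicyID", "Wing")
print(len(policies), len(joined), len(mapped))
```

The join grows the table from 2 to 3 rows, while the mapping keeps it at 2 but silently drops the second wing. Which trade-off is acceptable depends on what the sheets need to show.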

Another important point is the cardinality of the fields, meaning whether fields could be split, for example a timestamp into a date and a time; see: The Importance Of Being Distinct. Furthermore, I didn't see a calendar table, which makes me assume that you are using the built-in calendar functionality. I don't have much experience with it and don't know how this feature is implemented, but with such a large application I would test it against a real calendar.

Also essential are the kinds of dimensions and expressions you use. You should avoid any complexity here: no aggr functions, no calculated dimensions, no dimension/expression groups, no nested if-statements, and quite probably some further performance killers.
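A rough way to see the effect of splitting a timestamp is to count distinct values before and after the split. The sketch below is plain Python, not Qlik script, and the sample data (one event per minute for 30 days) is purely hypothetical. It only counts distinct values and does not measure actual memory use in Qlik.

```python
from datetime import datetime, timedelta


def distinct_counts(timestamps):
    """Return (distinct timestamps, distinct dates + distinct times)."""
    whole = len(set(timestamps))
    dates = len({ts.date() for ts in timestamps})
    times = len({ts.time() for ts in timestamps})
    return whole, dates + times


# Hypothetical sample: one event per minute for 30 days
start = datetime(2024, 1, 1)
sample = [start + timedelta(minutes=i) for i in range(30 * 24 * 60)]

whole, split = distinct_counts(sample)
print(whole, split)  # 43200 distinct timestamps vs. 30 dates + 1440 times = 1470
```

In a load script the same split is usually done with something like `Floor()` for the date part and `Frac()` for the time part, but check that against your own data before relying on it.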

- Marcus

Reading this may also help -

*** 6 Weeks in to QV Development, 30 Million Records QV Document and Help Needed!!! ****