Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- Forums

- :

- Analytics & AI

- :

- Products & Topics

- :

- App Development

- :

- Re: Why is this an error in Data Model ?

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Why is this an error in Data Model ?

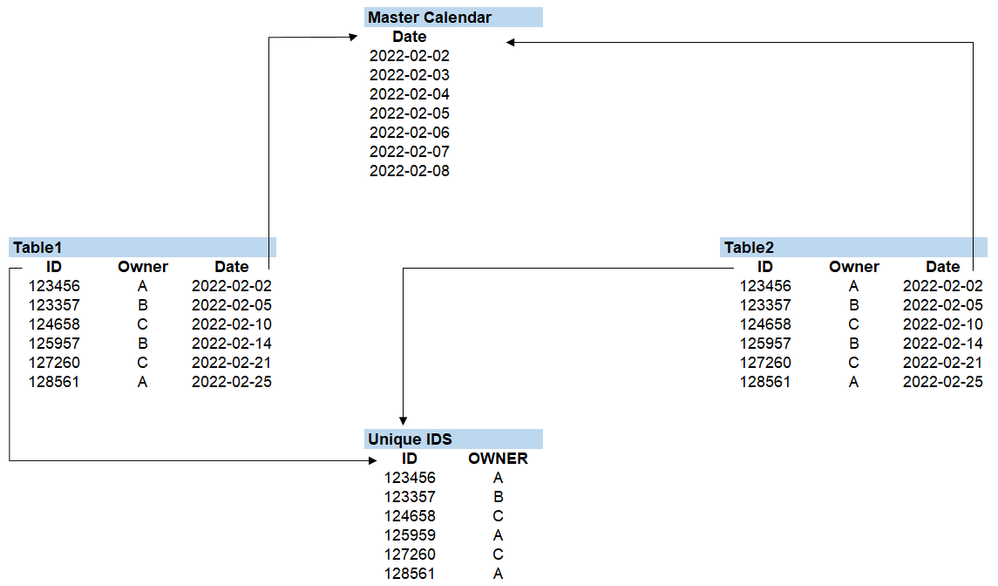

Disclaimer, I'm new to Qlik and I'm trying to figure out what's wrong with such a model below.

Qlik does not like such loop, so it seems I could either use "Master Calendar" to link table 1 and 2 OR "Unique IDS".

Now let's say I just use "Master Calendar" to link the tables, if I filter Table1 on Owner A I'm still going to get all the Owner of Table 2 matching the same date.

And vice versa if I use "Unique IDS" instead I loose the connection on date.

These are standard type of query though and I'm sure there's a way to do it in Qlik but I don't know how.

Thanks !

- « Previous Replies

-

- 1

- 2

- Next Replies »

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I found a solution to my problem:

I created a "Link" table using Unique IDs and Master Calendar on which all tables are connected.

Load Distinct

ID

resident Unique IDs;

Join

Load Distinct

DATE

Resident Master_Calendar;

And created the Key = ID & '-' DATE in the Link Table as well as Table1 and Table2.

Only issue I have with such an approach is that the Link Table size grow very quickly, especially if such a key involve more than 2 fields. So I'm still open to suggestion if there's a more efficient approach, I don't know if the Link table is stored in memory are recomputed every time it's queried.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The link-table is stored within the file and is also kept in memory and is used in all related UI objects to create the appropriate dimensional context within virtual tables on which the final aggregations are performed.

In general such approach worked but from a performance point of view it's by larger data-sets usually not the best choice. Therefore my above suggestion not to create a link-table else to concatenate the fact-tables directly.

- Marcus

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks @marcus_sommer , would you have some code examples so I understand what you mean by concatenating the tables please ?

In my simple example above Table 1 and 2 have the same 3 fields but in the real use case each table have around 30 fields each and most of them are completely different. So would concatenation still be a viable solution in this case ?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Meant was something like:

t: load F1, F2, F3, F4, F5, F6, 'X' as F10 from X;

concatenate(t) load F1, F2, F3, F7, F8, F9, 'Y' as F10 from Y;

That the table is then more or less asynchron is neither a technically problem nor must it cause performance or logically issues. How suitable it is in regard to the degree of asynchronous and/or to the size of the data-set and/or the UI requirements depends - especially compared to any alternatives and the efforts and side-effects which come with them.

The above suggestion is further only the starting point to develop a data-model and doesn't mean that no further transformations are needed or at least sensible. For example it's possible and recommended to synchronize as many as possible of the field-names and their content, like SalesDate and BudgetDate which aren't not really different information and could be just renamed as Date - the needed context could you get from the source-information. Also measures like Sales and Budget could be kept within a single field - by many measure-fields you may further consider to transform the cross-table logic of them into a data-stream which means to create two fields for them - KPI & Value.

This leads to rather long and small tables which may potentially have slight RAM and UI performance disadvantages against short and wide tables - but for us it worked very well and we use this approach nearly everywhere and we could improve our handling + performance significantly.

Beside this some of the missing/wrong field-values might be cleaned/filled/prepared and/or outsourced in further dimension-tables and/or ...

- Marcus

- « Previous Replies

-

- 1

- 2

- Next Replies »