Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- Forums

- :

- Analytics

- :

- New to Qlik Analytics

- :

- Re: Parse JSON

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Parse JSON

I am fairly new to Qlik and I am having some trouble to parse JSON data into a new table. The way I have my data now is impossible for Qlik to read.

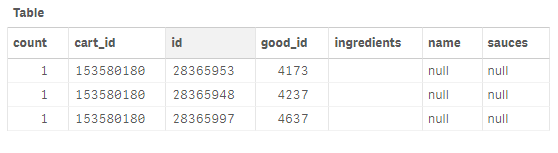

| count | cart_id | composition | id |

| 1 | 153580180 | {"good_id":4173,"ingredients":[],"name":null,"sauces":null} | 28365953 |

| 1 | 153580180 | {"good_id":4237,"ingredients":[],"name":null,"sauces":null,"old_price":null} | 28365948 |

| 1 | 153580180 | {"good_id":4637,"ingredients":[],"name":null,"sauces":null,"old_price":null} | 28365997 |

I need to parse the data inside the json file so that I would get new columns with the values good_id, ingredients, name, sauces and so on.

The result that I need to get is the following:

| Cart_id | combo_box_id | count | good_id | id | ingredients | sauces |

| 153793731 | 1 | 38797 | 26989050 | |||

| 153793731 | 1 | 38871 | 26989051 | |||

| 154116428 | 1 | 70 | 27092775 | |||

| 154116428 | 1 | 15633 | 27092776 | |||

| 154116428 | 1 | 15635 | 27092777 |

What do I have to do?

- Subscribe by Topic:

-

data extraction

-

JSON

-

new table

-

parse data

-

parse json

-

read data

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I've just played around with some string functions in the preceding load to transform the data in the Data Load editor:

Load

[count],

[cart_id],

// [composition],

[id],

Mid(good_id,9) as [good_id],

Mid(ingredients,13) as [ingredients],

Mid(name,6) as [name],

Mid(sauces,8) as [sauces]

;

LOAD

[count],

[cart_id],

[composition],

[id],

Subfield(PurgeChar([composition], '{"}'), ',', 1) AS [good_id],

Subfield(PurgeChar([composition], '{"}[]'), ',', 2) AS [ingredients],

Subfield(PurgeChar([composition], '{"}'), ',', 3) AS [name],

Subfield(PurgeChar([composition], '{"}'), ',', 4) AS [sauces];

LOAD * INLINE

[

count;cart_id;composition;id

1;153580180;{"good_id":4173,"ingredients":[]],"name":null,"sauces":null};28365953

1;153580180;{"good_id":4237,"ingredients":[]],"name":null,"sauces":null,"old_price":null};28365948

1;153580180;{"good_id":4637,"ingredients":[]],"name":null,"sauces":null,"old_price":null};28365997

](delimiter is ';');

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It should look exactly like this, but in this case, I don't have 3 elements but millions of rows. What should I do in this case?

I am sorry if I am mistaken, but as far as I understand this part of the code only convert 3 elements of the dataset

LOAD * INLINE

[

count;cart_id;composition;id

1;153580180;{"good_id":4173,"ingredients":[]],"name":null,"sauces":null};28365953

1;153580180;{"good_id":4237,"ingredients":[]],"name":null,"sauces":null,"old_price":null};28365948

1;153580180;{"good_id":4637,"ingredients":[]],"name":null,"sauces":null,"old_price":null};28365997

](delimiter is ';');

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

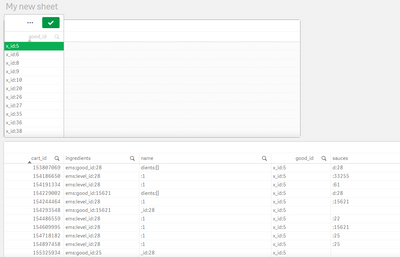

I commented these first rows of the code and almost everything went normal

// LOAD * INLINE

// [

// count;cart_id;composition;id

// 1;153580180;{"good_id":4173,"ingredients":[]],"name":null,"sauces":null};28365953

// 1;153580180;{"good_id":4237,"ingredients":[]],"name":null,"sauces":null,"old_price":null};28365948

// 1;153580180;{"good_id":4637,"ingredients":[]],"name":null,"sauces":null,"old_price":null};28365997

// ](delimiter is ';');

Picture with better resolution: https://drive.google.com/file/d/1ZiYr8Vzplh7qc3SpI6hm10RJmX9efXr5/view?usp=sharing

There were only some few elements that couldn't be read as you can see on the picture. How can I solve this problem?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

IT looks like the sample data I was using is a bit different to what you are applying it to here, can you give me a bigger sample to play with?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

this one is quite messy, question tho. how many fields do you expect inside the composition field? will it be more than 100 fields?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

| count | cart_id | composition | id |

| 1 | 153580180 | {"good_id":4173,"ingredients":[],"name":null,"sauces":null} | 28365953 |

| 1 | 153580180 | {"good_id":4237,"ingredients":[],"name":null,"sauces":null,"old_price":null} | 28365948 |

| 1 | 153580180 | {"good_id":4637,"ingredients":[],"name":null,"sauces":null,"old_price":null} | 28365997 |

| 1 | 162709961 | {"combo_box_id":5,"combo_box_items":[{"good_id":22,"ingredients":[],"level_id":28,"price":709,"count":1,"type":"good"},{"good_id":15621,"ingredients":[],"level_id":28,"price":639,"count":1,"type":"good"},{"good_id":18676,"ingredients":[],"level_id":29,"price":179,"count":1,"type":"good"},{"good_id":10393,"ingredients":[],"level_id":30,"price":429,"count":1,"type":"good"},{"good_id":18676,"ingredients":[],"level_id":29,"price":179,"count":1,"type":"good"},{"good_id":54695,"ingredients":[],"level_id":31,"price":59,"count":1,"type":"good"},{"good_id":54699,"ingredients":[],"level_id":31,"price":49,"count":1,"type":"good"},{"good_id":54685,"ingredients":[],"level_id":31,"price":49,"count":1,"type":"good"},{"good_id":54693,"ingredients":[],"level_id":31,"price":49,"count":1,"type":"good"}]} | 25600256 |

| 1 | 162709965 | {"combo_box_id":5,"combo_box_items":[{"good_id":25,"level_id":28,"count":1,"ingredients":[]},{"good_id":26,"level_id":28,"count":1,"ingredients":[]},{"good_id":18676,"level_id":29,"count":1,"ingredients":[]},{"good_id":18676,"level_id":29,"count":1,"ingredients":[]},{"good_id":10393,"level_id":30,"count":1,"ingredients":[]},{"good_id":54693,"level_id":31,"count":1,"ingredients":[]},{"good_id":54691,"level_id":31,"count":1,"ingredients":[]},{"good_id":54691,"level_id":31,"count":1,"ingredients":[]},{"good_id":54693,"level_id":31,"count":1,"ingredients":[]}]} | 51254026 |

| 1 | 162709568 | {"combo_box_id":5,"combo_box_items":[{"good_id":25,"level_id":28,"count":1,"ingredients":[]},{"good_id":26,"level_id":28,"count":1,"ingredients":[]},{"good_id":18676,"level_id":29,"count":1,"ingredients":[]},{"good_id":18676,"level_id":29,"count":1,"ingredients":[]},{"good_id":10393,"level_id":30,"count":1,"ingredients":[]},{"good_id":54699,"level_id":31,"count":1,"ingredients":[]},{"good_id":54695,"level_id":31,"count":1,"ingredients":[]},{"good_id":54691,"level_id":31,"count":1,"ingredients":[]},{"good_id":54693,"level_id":31,"count":1,"ingredients":[]}]} | 14215125 |

| 1 | 162702561 | {"combo_box_id":5,"combo_box_items":[{"good_id":68,"ingredients":[],"level_id":28,"price":589,"count":1,"type":"good"},{"good_id":61,"ingredients":[],"level_id":28,"price":709,"count":1,"type":"good"},{"good_id":18676,"ingredients":[],"level_id":29,"price":179,"count":1,"type":"good"},{"good_id":10393,"ingredients":[],"level_id":30,"price":429,"count":1,"type":"good"},{"good_id":54697,"ingredients":[],"level_id":31,"price":49,"count":1,"type":"good"},{"good_id":54695,"ingredients":[],"level_id":31,"price":59,"count":1,"type":"good"},{"good_id":54695,"ingredients":[],"level_id":31,"price":59,"count":1,"type":"good"},{"good_id":54695,"ingredients":[],"level_id":31,"price":59,"count":1,"type":"good"},{"good_id":18676,"ingredients":[],"level_id":29,"price":179,"count":1,"type":"good"}]} | 12523562 |

Here is the data that I've sent previously + the exceptions that I faced.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I don't expect to have a table in the end. I just need to parse this JSON so I can use the information inside to make the necessary calculations and visualisations. But just to clear it up, I am using the tables to check that the data was parsed correctly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This is getting quite complex, with the nested combination of fields.

Not sure it is predictable enough to do this way.

Can you extract the data another way through the rest connector ?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Until now I used a code on python to interpret and extract the data. Also, theoretically, I could work better with json data if I update the MySQL server to the 8.0 version, correct? I remember that there are some functions available for such situations. About your suggestion, I would be glad if you could explain how I can use REST in this case.