Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- Forums

- :

- Analytics

- :

- New to Qlik Analytics

- :

- Set analysis, mapping, table N-1, data model...I'm...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Set analysis, mapping, table N-1, data model...I'm confusing!

Hello everybody,

I've been facing a problem for a few days and wanted to get some advice from the community.

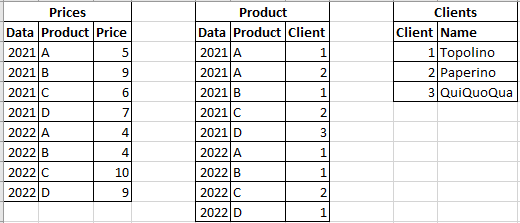

These are my tables:

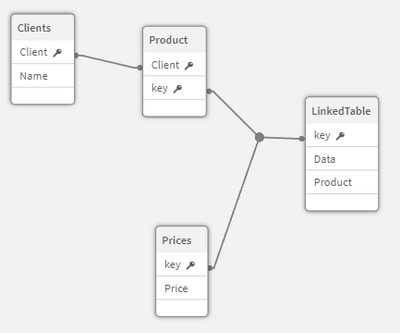

This is the data model (I created a linked table to eliminate the syntetic key)

I think the table "product" is the fact table because the tables Clients and Prices are dimension table (the columns Client and key - in Prices - are unique). Is it correct?

Question 1:

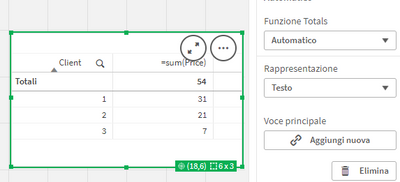

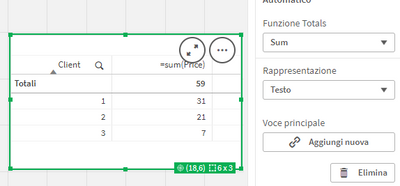

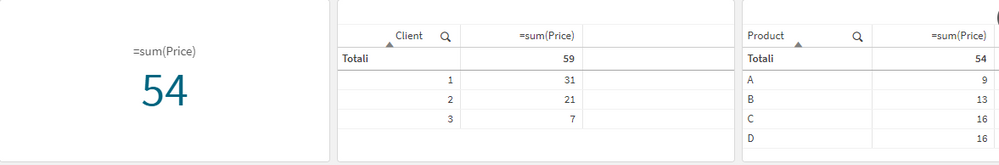

why if I selected "automatic" the sum is 54 and if I selected "sum" the value is - correctly - 59?

Question 2:

if I calculate =sum(Price) the results changed according to the dimension taken into consideration (Client o Product)...

why does this happen?

Question 3:

I googled and solved the problem with the mapping: that is, I associated to the fact table, according to the key (product-data), the correct value for each single row of the product table (fact table).

Is this method correct and does it follow the logic of correct modeling?

Question 4:

In principle, why does this happen and do we need the mapping (or join)?

Why do we have information that needs to be repeated for each row of the fact table? why doesn't the associative model do it for us?

Question 5:

In conclusion, can we say that the tables of dimensions only serve as an aggregation function of dimensions that are also present in the fact table?

I apologize for the length of the post and thank all those who want to clarify my ideas!

Alessandro

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The product table seems to be the fact-table whereby I would expect one relevant field more and this is an [Amount] information because usually a client could buy more as a single piece of everything and also a canceling of a selling should sometimes happens.

Just from looking on the post it's difficult to say exactly what went wrong but I assume it's the way how your link-table was created. IMO the link-table itself is wrongly placed - because it is created between a fact-table and a dimension-table. I don't remember of having seeing it anywhere and I doubt that there are really useful scenarios for it. Usually they were created between fact-tables.

Further link-tables are hot loved from many users but they mean mostly more efforts and complexity and a lower performance as with going the recommended way to develop a data-model which is a star-scheme (merging all facts into a single-fact-table).

If you want to comprehend which data are really loaded within your data-model you should add recno() and rowno() to all loadings by naming them unique to avoid synthetic keys and circular references and then using them together with your relevant fields within table-boxes. Now you could compare them against your raw-data.

Within charts it could become difficult to find the reasons why the results differs to your expectation because the dimension-values will be always shown distinct and the aggregation-functions return a conclusion of the underlying data but not mandatory why they are like they are.

Beside this I suggest to map the price-information to the product-table and then dropping the price-table. Further just remove the link-table because they isn't needed anyway.

- Marcus

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Alessandro,

Perhaps the problems are caused by the overly simplified data model that you have. Typically in sales analysis, you'd expect the fact table to contain the following:

- Customer

- Product

- Date

- Quantity

- Price (which could be stored in a separate table, but preferably should be in the fact)

In this case, your formula for sales would look like this:

sum(Quantity * Price)

This formula works equally well in detailed lines, and in totals, and in dimension-less KPIs.

Your formula simply summarizes Prices from the table of Prices, and therefore the result of the formula depends on the way it's calculated:

- When it's not attached to any dimensions, then each distinct price is summed up once, which is incorrect in your case.

- in a chart with Product being the dimension, a price for each Product will get summed up once.

- In a chart with Product and Customer, a price of each combination of Product and Customer will get summed up once.

Coming back to your data structure - the right way in your case is to denormalize the structure into one flat table. Then everything will work correctly.

You can use Mapping or Join for that - it's a matter of personal preference. I prefer Mapping, while my colleague at the Masters Summit for Qlik and a fellow book author, Barry Harmsen, prefers Joining. If you can join us at the Masters Summit for Qlik in September in Madrid, you will learn why, along with lots of other valuable Qlik techniques that we teach there. Check it out:

https://masterssummit.com

Cheers,

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you @marcus_sommer and @Oleg_Troyansky for the quick reply!

I clarify the model I sent: it was actually built to expose my problem.

In reality, the problem is more complex:

1. I have multiple fact tables for separate "production events". I then created a link table.

2. One of the fact tables is a standardized data flow at ministerial level: this flow provides fixed information but not the value of the service offered. To better specify: the sector is the health sector and the facts table shows the unique code for each patient and the health service provided with the DRG code. In this flow, however, the enhancement of the DRG is not present.

The DRG values are present in another table (in the simplified model it is represented by the "prices" table). My intent was to create a database of DRG values - historicized - and then associate it with the ministerial flow for business analyzes.

In this more complex case, I seem to understand that the correct way is to use the mapping or the join and move the "price" information on the fact table ... is that correct?

Is it possible to say that in principle it is useful to use mapping or joining when we have dimension tables that have values inside them (for example price in this case) that must be linked to the fact table?

thanks!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If we remain by your origin example and assume that your fact-table products contained millions of records for about 1000 different products. If now each product has a single price - the price might be kept within an own table just linked per product-ID to the fact-table.

But if the price isn't unique for a product else might be changing over the time - maybe even on a daily level - the simple link per product-ID wouldn't work else product-ID + date would be needed as link. Of course this isn't a complicated matter and easily done - but now the dimension-table might be growing to hundred thousands of records. This is not necessarily a show-stopper and will usually work fine even if it's not the most performant solution.

Going further it's not seldom that the prices are not only changing on a timely level else they are also variable against a customer-cluster, countries and the sold amount of the product and various more factors. Again it would be no big issue to extend the link for a customer + country and so on information. But now the dimension-table will be also grow to millions of records - maybe near the number of records of the fact-table because no much redundancy exists anymore. And now it's really a problem because the link-field has now many millions of distinct field-values with probably rather large string-values through the concatenating of the multiple information. Just such single field might then consume multiple GB of RAM/storage. More background could you find here:

The Importance Of Being Distinct - Qlik Community - 1466796

By developing a link-table data-model the above described risk increased and it's not seldom that a link-table has in the end a lot more records as any of the fact-tables and that the needed combined link-fields are becoming very big.

Beside this a link-table doesn't save efforts or is easier to create as merging the facts into a single table - rather the opposite will be true. In many scenarios the facts could be rather simply concatenated without much adjustments (maybe some harmonizing of the field-names or the formatting).

That this big fact-table is more or less asynchron is mostly no big issue in Qlik. If there are more needs to clean and check data and to flag them and/or to fill/populate missing values/records/information and so on - it would be needed regardless from the used data-model.

Therefore I suggest to merge all facts into a single fact-table. Even if there are in the end sensible reasons not to use a classical star-scheme else a snowflake and/or link-table and/or direct-linked facts or anything else - you should start with a star-scheme and trying to solve all challenges there because going directly to a more complex data-model and identifying and solving issues there will be much harder.

- Marcus

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes and Yes - in your case, you need to have the "value" field populated in the Fact table, using Mapping or Joining.

Generally speaking, it's one of the best practices to keep all measures (quantity and value fields that participate in calculations) in the fact table as much as possible. It produces more accurate results and helps performance.

We teach all of these principles at the Masters Summit for Qlik - I highly recommend to check out the agenda!

Cheers,