Limit Number of parquet files when writing to HDFS

I need to limit the number of Parquet files written to HDFS.

Currently, the Compose task writes a large number of small files to the provisioning area.

Is there a way to write a small number of large Parquet files instead?

Is there a configuration setting that limits the number of files in this way?

- Tags:

- compose

Accepted Solutions

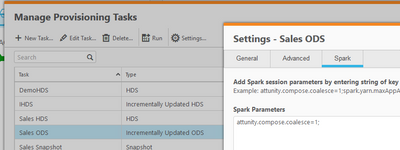

Hi - you can set this on a provisioning task by using the Spark settings. The setting can be applied to storage or provisioning tasks, and it is a run-time setting (i.e., it doesn't require generating code for storage tasks).

Highlight the task, click Settings, navigate to the Spark tab, and enter:

attunity.compose.coalesce=N;

where N is a number (not the literal "N" 🙂) that specifies the number of files.

(For example, I've set my provisioning task to create 1 file per entity.)
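If you are unsure what value to use for N, one rough way to pick it is to divide the expected data volume per entity by the file size you are aiming for. The sketch below is only a sizing heuristic of my own, not something taken from the Compose documentation; the function name `coalesce_setting` and the example sizes are hypothetical.

```python
import math


def coalesce_setting(total_bytes: int, target_file_bytes: int) -> str:
    """Build the Spark-tab entry for roughly target-sized output files.

    Assumption: one output file per coalesced partition, so N is simply
    the data volume divided by the desired file size, rounded up.
    """
    if total_bytes < 0 or target_file_bytes <= 0:
        raise ValueError("sizes must be non-negative, target must be positive")
    # Never go below 1: even an empty entity needs a valid N.
    n = max(1, math.ceil(total_bytes / target_file_bytes))
    return f"attunity.compose.coalesce={n};"


# Example: an entity of about 10 GiB, aiming for about 512 MiB per file.
print(coalesce_setting(10 * 1024**3, 512 * 1024**2))
# prints: attunity.compose.coalesce=20;
```

Because the value is applied to every table in the task, it makes sense to size N for the tables that matter most, or to group tables of similar volume into the same task.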

By default, Spark typically delivers 200 files for each process because it's a multi-threaded execution. When this setting is present, Compose applies the Spark DataFrame coalesce operation to all the tables in the task.
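To give a feel for why coalescing reduces the file count, here is a plain-Python toy model of what a DataFrame coalesce does conceptually: existing partitions are merged into at most N groups, and each group is then written as one file. This is not Spark code and not what Compose runs internally (the thread does not show that); the function `coalesce` below is a hypothetical stand-in, and real Spark chooses the groups based on data locality rather than simple contiguous slices.

```python
def coalesce(partitions, n):
    """Toy model: merge existing partitions into at most n groups.

    Like Spark's coalesce, asking for more groups than there are
    partitions leaves the partition count unchanged.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    n = min(n, len(partitions))
    if n == 0:
        return []
    size, extra = divmod(len(partitions), n)
    merged, start = [], 0
    for i in range(n):
        # The first `extra` groups take one additional partition each.
        end = start + size + (1 if i < extra else 0)
        merged.append([row for part in partitions[start:end] for row in part])
        start = end
    return merged


# 200 small partitions (one row each) would mean 200 small files.
partitions = [[i] for i in range(200)]
files = coalesce(partitions, 4)
print(len(files), [len(f) for f in files])
# prints: 4 [50, 50, 50, 50]
```

The row count is unchanged; only the number of output groups (and so the number of files) goes down.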

If you have multiple tables that require different file counts, you can define multiple provisioning tasks, each with a subset of the tables (all going to the same data lake storage bucket/filesystem/folder and the same metastore database).

Hope that helps.
