Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- Forums

- :

- Analytics & AI

- :

- Products & Topics

- :

- App Development

- :

- REST API data - Select All Fields

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

REST API data - Select All Fields

Hello,

I have a connection to one of core system's REST API set up. Everything including pagination is working fine for me.

I am using WITH CONNECTION statement to adjust the URL in order to fetch data from different tables (entities) which REST API provides connection to.

Only issue here is, that it is really tricky for me to get all the field names which those entities include. My intention is to always fetch all fields from the table but SELECT * does not seem to work.

One workaround could be to obtain those field names from API using /describe method which API offers. This call returns field names which the particular table includes. But the issue here is that the result of this call in JSON looks e. g.:

"fields": {

"company_id": {

"type": "relation",

"required": true

},

"create_ts": {

"type": "datetime",

"required": false,

"default": "now"

},

"create_user": {

"type": "char",

"allow_blank": true,

"max_length": 30,

"required": false,

"default": ""

}

Which means that each field name is returned as table name (where the table itself includes field's properties like type etc.). And I am not able to get those chidlren table names returned as values so they could be used as field names in the following call to API.

Does anyone know about any workaround/solution?

I might have been missing something very simple as I am not so familiar with SQL.

Thanks a lot. Have a great day.

- Tags:

- sql

- « Previous Replies

-

- 1

- 2

- Next Replies »

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @onmysi49

You have hit on a common problem with loading from REST connections, in that they are not SQL statements and so do not have the functionality for *.

Every REST endpoint is different, so there is no one-size-fits-all solution. The fact you have managed to pull the metadata is a good start.

If you load just the metadata what do you have in the datamodel? Is it a single table with each field of the table relating to a field in the REST endpoint?

If so, you will want to enumerate around using a FOR loop for each field using the NoOfFields function and the FieldName function.

Using this loop you will be able to concatenate a string into a variable and then inject it into your REST command using dollar sign expansion.

Good luck!

Steve

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello Steve,

thanks a lot for your reply and explanation!!!

Unfortunately it is not a single table with each field. Hopefully it is recognizable from my original post - actually each field in this metadata response has its each table with further details:

E.g.

"company_id": {

"type": "relation",

"required": true

},

So I can access for example the "type" field or "required" field. As they are treated as fields in QS. But I cannot access the field names (company_id in this example) because they are treated as table names in this parent-child hierarchy model in QS. By any chance is there any way e.g. to extract all children table names from parent table? This would do the trick I guess.

Also is there any document which would describe how exactly the REST calls & REST connector works "in the background" in QS. It would be interesting to know how exactly these SELECT queries are processed and call is sent to API etc. If you know where I am aiming.

Thanks a lot. Your help is much appreciated.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @onmysi49

The REST response may have different levels, but the load into Qlik will most likely put it into a single table. You should then be able to parse that.

Load the meta data and then store the table to a CSV file, it's worth renaming the table as the default table name is bonkers. You don't need all of the RESIDENT loads, as you can just deal with the RAW response.

The code will be something like:

LIB CONNECT TO 'MetaData';

tmpMetaData:

SQL SELECT

"Field1"

... etc. ...

FROM JSON (wrap on) "root" PK "__KEY_root";

STORE tmpMetaData INTO [lib://MyLib/MetaDataRaw.csv] (txt);

Once you have the CSV you will be able to see how it is structured and what column names it has.

When the REST connector has hierarchical data it usually has each level on it's own row of the same table, with values which are missing being null(). If the tables are in a colum you will want to do a LOAD DISTINCT from that column. You can then loop to build the string out.

tmpFields:

LOAD DISTINCT

fields

RESIDENT tmpMetaData

WHERE not IsNull(fields);

DROP TABLE tmpMetaData;

let vLoadStatement = '';

for iField = 0 to NoOfRows('tmpFields') -1

let vField = peek('fields', iField, 'tmpFields');

let vLoadStatement = vLoadStatement & '"' & vField & '",';

next

DROP TABLE tmpFields;

If the format of the tmpMetaData table is not as I guess then please post here how it looks and we can work out how to deal with it.

Regarding documentation https://help.qlik.com/ is probably the best place to start.

Cheers,

Steve

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello Steve,

once again thank you very much for your reply!!!

Unfortunately I am still not able to come up with any working SELECT statement for metadata table. Hopefully I can clarify it a bit further.

The connection to the REST API which I use is set up for the URL which returns data from one of the tables (entities) from our WMS system. The table is called ib_shipment (so the URL ends with ib_shipment as the name of the entity). Utilizing the WITH CONNECTION statement I can change the URL in order to fetch other tables or also I can add /describe at the end of the URL to get metadata response for the particular entity.

But I have had no luck so far constructing the correct SELECT statement for the /describe URL.

I still guess that the issue comes from the structure of the JSON response where only data which I can fetch is the last values in the hieararchy (e.g. fields "type" or "required") - please see the structure of the response, the script and the resulting data model.

Part of JSON response to /describe call

e.g. company_id is a field but I cannot fetch that name as it is treated as table name in QS

"fields": {

"company_id": {

"type": "relation",

"required": true

},

"create_ts": {

"type": "datetime",

"required": false,

"default": "now"

},

"create_user": {

"type": "char",

"allow_blank": true,

"max_length": 30,

"required": false,

"default": ""

},

QS Script which fetches the last level of the hierarchy

In this example it fetches the "type" field from company_id table

I would like to fetch the value 'company_id' itself as it is the name of the field which would be used in the future call to REST API

SQL SELECT

"__KEY_root",

(SELECT

"__KEY_fields",

"__FK_fields",

(SELECT

"type"

FROM "company_id" PK "__KEY_company_id" FK "__company_id")

FROM "fields" PK "__KEY_fields" FK "__FK_fields")

FROM JSON (wrap on) "root" PK "__KEY_root"

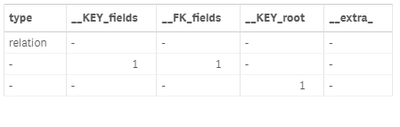

WITH CONNECTION(Url "REST API URL for the particular table/describe");Resulting table of this script above:

I must mention that I am trying to achieve this only using WITH CONNECTION as I do not have the permission to create new connection in our QS environment. So I cannot utilize the "Select data to load" window.

Thanks a lot for any inputs. Your help is highly appreciated.

Cheers,

Ondrej

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @onmysi49

I can now see your predicament! I would always use Select Data To Load on any given endpoint to just pull all of the possible columns and levels of the hierarchy to then work out what it is I actually need to load.

Can you install a copy of Sense Desktop and point this towards the endpoint? This will allow you to generate code against an API.

Even if you do that though, the fact that you can't send a wildcard to pull columns from a REST API endpoint is going to scupper you, as even with the metadata endpoint the column names are likely to only appear in columns, and therefore need to be known in advance.

Are there any other metadata endpoints in the API?

Given it is an API on one of your own systems, is it possible to get a developer to build you a new endpoint?

Sorry I don't have any easy solutions.

Steve

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello Steve,

thanks a lot for your reply.

I will install a copy of Qlik Sense Desktop and try to create the connection. I will let you know then.

Thanks a lot. Have a great day.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

In the case of the Johns Hopkins time-series C19 data, they add a column every day - this was easy to pull in via desktop, but now as it's CSV, it has to go via a GET / REST for cloud.

Is there no way to load "all" without specifying columns?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @110

The short answer is "no, there isn't".

The slightly longer answer is that some REST APIs have endpoints which return the metadata for the other endpoints. This way it is possible to populate a variable with the load script that includes all fields and then inject that variable into the code.

If you know the format of the new field (say it was Jun22, Jul22 etc.) then you could do the same thing where you build up a variable.

Hope that helps.

Steve

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks Steve , it was just reading a raw csv from github

https://github.com/CSSEGISandData/COVID-19/blob/master/csse_covid_19_data/csse_covid_19_time_series/...

then pivoting out the dates from the column headers to row.

I guess if it's possible to parameterise the column name element for the pseudo-sql select part, that's something , very frustrating though!

- « Previous Replies

-

- 1

- 2

- Next Replies »