Use database source for deciding what QVWs to execute

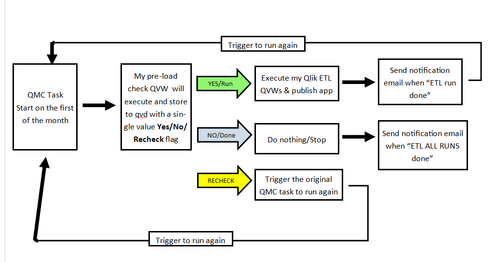

I have a complex scenario that I am trying to automate. It involves conditional ETL flows in which QMC tasks and triggers decide which QVWs, if any, run. On the first of the month, I want to start a QlikView QMC task that runs a "pre-check" QVW against a database table to see whether 1 (or more) of 3 jobs is ready for Qlik to process, meaning that all the data for the respective job exists in the database and it is safe for Qlik to process it without leaving out rows. The "pre-check" produces one of 3 results (a single value) and stores it in a QVD, which the next QVWs read to decide what to do next. The 3 possible results are Recheck (no job data is ready), Run (data for 1 or more jobs is ready), and Done (all 3 jobs have gone through Qlik ETL processing). The Qlik ETL path can run up to 3 times, but no more, depending on which jobs are ready according to the database table that gets loaded into the "pre-check" QVW.
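To make the three result values concrete, here is a minimal sketch of how the single pre-check value could be derived. It is written in Python rather than Qlik script and only illustrates the logic. The job names and the rule that an already processed job is no longer counted as ready are assumptions, not something stated above.

```python
from enum import Enum


class Result(Enum):
    RECHECK = "Recheck"  # no job data is ready
    RUN = "Run"          # data for 1 or more jobs is ready
    DONE = "Done"        # all 3 jobs have been processed


# Hypothetical names for the 3 jobs.
JOBS = frozenset({"job_a", "job_b", "job_c"})


def precheck(ready: set[str], processed: set[str]) -> Result:
    """Return the single pre-check value for the current state.

    ready:     jobs whose data is complete in the database
    processed: jobs that have already gone through the ETL path
    """
    if processed >= JOBS:
        return Result.DONE
    # Assumption: a job that was already processed is not run again.
    pending = (ready & JOBS) - processed
    return Result.RUN if pending else Result.RECHECK
```

For example, `precheck({"job_a"}, set())` gives `Result.RUN`, while `precheck({"job_a"}, {"job_a"})` gives `Result.RECHECK` because the only ready job has already been processed.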

What I don't know how to code is the script that decides what to do once the result value has been loaded from the result QVD: how do I exit the script if the condition is not met (false), but let the script continue if the condition is met (true), so that it then triggers the rest of the QVWs in my ETL?

So with my data how can I do this:

If result = Recheck, then exit the script and trigger the original QMC task to run the "pre-check" load again

If result = Run, then execute the Qlik ETL path, send a notification, and trigger the original QMC task again

If result = Done, then exit the script, send a notification, and do nothing else
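The three rules above can be sketched as a small decision table. This is Python, not Qlik script, and the action names are hypothetical labels for the steps listed in the rules. The cap of 3 ETL runs comes from the description of the scenario. How each action is actually carried out in the QMC is outside the scope of this sketch.

```python
MAX_ETL_RUNS = 3  # the ETL path may run up to 3 times, not more

# Hypothetical action labels, one list per pre-check result.
PLAN = {
    "Recheck": ["exit_script", "retrigger_precheck"],
    "Run": ["run_etl", "notify", "retrigger_precheck"],
    "Done": ["exit_script", "notify"],
}


def next_actions(result: str) -> list[str]:
    """Map a pre-check result to the actions that should follow it."""
    if result not in PLAN:
        raise ValueError(f"unexpected pre-check result: {result!r}")
    return list(PLAN[result])


def simulate(results: list[str]) -> list[str]:
    """Walk a sequence of pre-check results and log the actions taken."""
    log: list[str] = []
    etl_runs = 0
    for result in results:
        actions = next_actions(result)
        if "run_etl" in actions:
            if etl_runs == MAX_ETL_RUNS:
                break  # never run the ETL path a 4th time
            etl_runs += 1
        log.extend(actions)
        if "retrigger_precheck" not in actions:
            break  # Done: nothing else is triggered
    return log
```

Calling `simulate(["Recheck", "Run", "Done"])` walks one recheck, one ETL run, and the final Done step, after which nothing further is triggered.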

I'm not sure about the solution you are suggesting. However, have you considered using the EDX trigger functionality? I've listed some resources below that may assist you. The EDX trigger would be fired by an external program of your choice once it gets confirmation that the required job(s) have completed.

1. QlikView Triggering EDX Enabled Tasks

https://help.qlik.com/en-US/qlikview/May2022/Subsystems/Server/Content/QV_Server/QlikView-Server/QVS...).

https://community.qlik.com/t5/QlikView-Administration/External-Event-Trigger/m-p/1566651

2. QlikView APIs and SDKs

https://help.qlik.com/en-US/qlikview-developer/May2022/Content/QV_HelpSites/APIsAndSDKs.htm
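As a rough illustration of the "external program" idea, the sketch below polls a readiness check and fires a trigger once the check passes. It is Python and deliberately does not show any Qlik API: `is_ready` and `trigger_task` are hypothetical callables that you would implement yourself, the latter using whatever EDX call the resources above describe.

```python
import time
from typing import Callable


def trigger_when_ready(
    is_ready: Callable[[], bool],
    trigger_task: Callable[[], None],
    attempts: int = 3,
    delay_seconds: float = 0.0,
) -> bool:
    """Poll is_ready up to `attempts` times; fire trigger_task on success.

    Returns True if the trigger was fired, False if the data never
    became ready within the allowed number of attempts.
    """
    for attempt in range(attempts):
        if is_ready():
            trigger_task()  # placeholder for the real EDX trigger call
            return True
        if attempt < attempts - 1:
            time.sleep(delay_seconds)  # wait before the next check
    return False
```

Passing the check and the trigger in as callables keeps the polling logic independent of both the database and the way the task is actually triggered.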

I'm not sure whether an external control that triggers multiple task chains depending on certain conditions in the database really simplifies the job and is expedient in the end. Personally, I would tend to keep the entire update chain within a single system, to avoid the complexity of combining different ones.

Quite important in such cases is the data architecture within the environment, meaning how many layers are implemented and whether incremental approaches are included. Missing data and/or load errors should not automatically lead to failures or incorrect information within the report layer.

Most of the work for such tasks has to be done anyway, independent of the systems used: developing a valid and stable logic to identify, detect, and evaluate possible data errors, and deciding how to handle them in various branches.

For example, Qlik can read appropriate check tables from the database, and/or the checks can be made directly within Qlik by counting the number of records, taking the max. date, or similar things. This information can then be stored in other tables or simply in txt parameters, and/or used to branch into different actions, such as ending the script without doing anything further or generating a deliberate load error to break the task, so that the subsequent task chains react to it.
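A record-count and max-date check of the kind described above might look like the following. This is a Python sketch against SQLite, not Qlik script, and the table `job_data`, its columns `job` and `load_date` (ISO date text), and the thresholds are all assumptions made for illustration.

```python
import sqlite3
from datetime import date


def job_is_ready(
    conn: sqlite3.Connection,
    job: str,
    expected_rows: int,
    period_end: date,
) -> bool:
    """Check a job by record count and max. date.

    Assumes a hypothetical table job_data(job TEXT, load_date TEXT)
    where load_date holds ISO dates such as '2024-01-31'.
    """
    row_count, max_date = conn.execute(
        "SELECT COUNT(*), MAX(load_date) FROM job_data WHERE job = ?",
        (job,),
    ).fetchone()
    if row_count < expected_rows or max_date is None:
        return False
    # ISO date strings compare correctly as plain text.
    return max_date >= period_end.isoformat()
```

The boolean result could then be written to a small parameter file or table that the next step reads before deciding whether to continue or stop.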

Of course it's not trivial and will depend on multiple factors, like having a Publisher, the number of nodes and cores, the available time frames, the hardware resources, the data architecture mentioned above, and the way and types of notification. The last point raises a question: is it acceptable that intentional task errors serve as a valid notification, and maybe also as a trigger for further tasks?

- Marcus