Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- All Forums

- :

- QlikView App Dev

- :

- Re: How to handle incremental load ?

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

How to handle incremental load ?

Hi All,

I have to implement incremental load and I have following material master on FTP now how to handle incremental load? Here itemnumber is a primary key

Material_Master:

LOAD

MATERIAL,

COLOR,

SIZE,

MATERIAL&COLOR&SIZE as itemnumber,

[Size Group],

Description,

COMPOSITION,

BRANDCD,

BRAND,

SEASON,

SEASONDESC,

THEME,

DELYCODE,

GENDER,

IF(GENDER ='LADIES', 'WOMEN', IF(GENDER='MENS','MENS', IF(GENDER='BOYS' or GENDER='GIRLS' or GENDER='BABY','KIDS', GENDER))) as Gender_Revised,

ProductHierarchy,

MaterialGroup,

[Core/Fashion],

FIT,

PATTERN,

[Key/NonKey],

RANGE,

ORIGIN,

[Top/Bottom],

SLEEVE,

NECK,

WEATHER,

OCCASSION,

CustomDesc,

MaterialGrp4,

MGRP4DESC,

EAN,

ALTEAN,

PurchaseGroup,

PurchaseGroupDesc,

CATEGORY,

SUBCAT,

BASECOMP,

MaterialType as Promotional_1,

ValuationClass,

COST,

MRP as MRP_INR,

if (MRP <1000, '<1000', if (MRP <2000, '1000-2000',if (MRP <3000, '2000-3000',if (MRP <4000, '3000-4000',if (MRP <5000, '4000-5000',if (MRP <6000, '5000-6000', '>6000')))))) as [MRP Bucket]

FROM

(qvd)

where Exists itemnumber;

- « Previous Replies

- Next Replies »

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Depending on your requirements there could be different scenarios:

1. Insert only

2. Insert + Update + Delete

1 Scenario:

Old_table:

LOAD * FROM *.qvd (qvd);

New_table:

LOAD * FROM *.qvd (qvd)

Where not Exists(UniqueID);

2 Scenario:

New_table:

LOAD * FROM *.qvd (qvd);

Old_table:

LOAD * FROM *.qvd (qvd)

Where not Exists(UniqueID);

NOTE: not Exists statement should be applied only to Unique Identifier, otherwise you'll get result different than expected.

Id you're trying to find only entries to update, than for your case should be smth like below:

Old_table:

Load

.....

itemnumber,

....

FROM Old.qvd (qvd);

New Table:

Load

...

Where Exists(itemnumber, MATERIAL&COLOR&SIZE);

itemnumber field should exist in your data model before you use it in Exists() statement.

Hope this helps.

//Andrei

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi

I want to use a scenario where i can insert and update. I have different sources from where I am getting data.

1.store details from FTP.

2. Material Master from Ftp

3. Sales data from sql tables and

4, transaction file from FTP.

Now I want to implement incremental load. Firstly I was taking full load of Material files from FTP and now I am getting incremental files on FTP so Now please tell How to handle the incremental load in my qlikview so that the data can be inserted and overwritten?

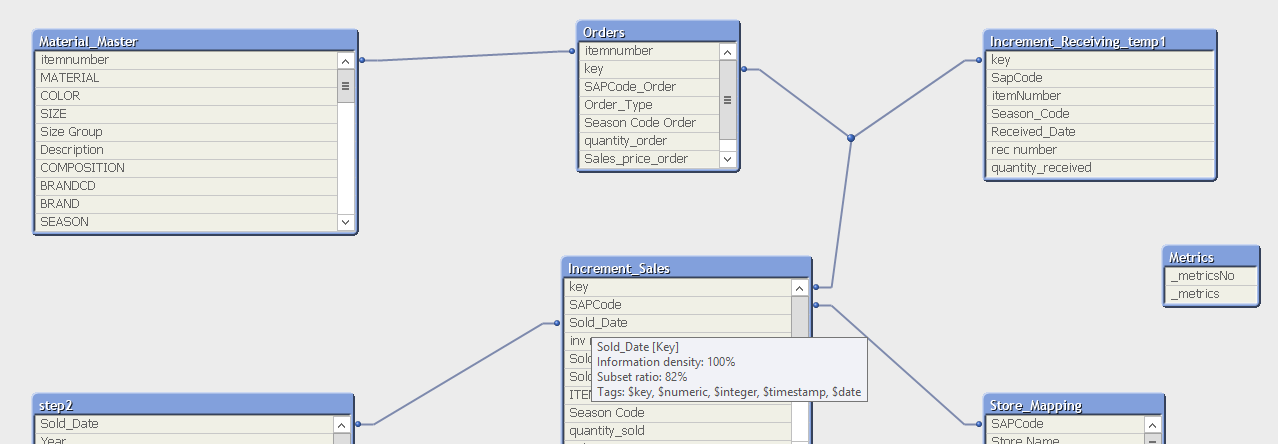

Following is the data model

i am facing problem that i am getting duplicate records in my dasboard where i am using straight table

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

To be sure I understood correctly:

You loaded full table some time ago via FTP, and now receiving only incremental parts via FTP (for example last 1-2-3 days/hours)

In this scenario we're assuming that this new FTP has the most recent values, so you need to proceed with several transform steps:

1. For first run (with full) you need to store this table into qvd file... This is something you will re-use again and again.

Ex:

Table:

LOAD

....

MATERIAL&COLOR&SIZE as itemnumber,

....

FROM ftp://<path>/file.qvd (qvd);

Store table into <local path/ place>/full_file.qvd (qvd);

//// This local file will contain your full data, which you will update in the next steps (make a backup of this from to time - will reduce you a lot of headache)

2. For the rest following runs you need to read new file first, add to this table only missing entries from full table and then rewrite full.qvd file

Ex:

IncrementSales:

Load

.....

MATERIAL&COLOR&SIZE as itemnumber,

....

FROM ftp://<path>/Incremental_file.qvd (qvd);

///Here could be Concatenate statement if you're not sure that the table structure is always the same

Load * from <local path/ place>/full_file.qvd (qvd)

Where not exists(itemnumber);

store IncrementSales into <local path/ place>/full_file.qvd (qvd);

Then you can use updated <local path/ place>/full_file.qvd in your presentation app. Anyway after above steps IncrementSales table will contain only unique updated entries.

Hope this helps.

//Andrei

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi

What u understood is absolutely correct and I have also understood your reply but i have a query as i am delaing with the business of clothing which is season based so I have Material master for different seasons present on FtP and i am following an ETL process so if I am using full load should i add in extract folder and take the backup

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Where to implement 2nd step? is it in extract folder or transform folder ?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Extract and transform folders contain qvw files, right? If so, than don't mix qvd and qvw, if not - then you can use extract folder for step 1 only once, and then everything should be in transform for step 2, therefore killing two birds with one stone - original full file always will be stored in extract folder and untouched (i.e. backup), modified file will be stored separately in transform (which also could be backed up manually or using scripting).

Anyway you can create sub-folders within QVD folder if required to follow the same procedure.

Regarding seasonality, again, do not mix everything together - extract full tables separately, for example Material_season1_2018.qvd, Material_season2_2018.qvd etc...

then in your transform apply step 2 and store modified qvds as for ex. Material_season1_2018_inc.qvd.

NOTE: You should read your initial file Material_season1_2018.qvd only once !!! otherwise you'll overwrite your changes.

Yes, you'll get a number of extra files, but it gives you flexibility to read only specific portion of data without any search (where) clauses - so always to be optimized.

Then you can use loop or * for loading multiple qvd files.

Anyway it's up to you to decide to how to proceed in your particular situation.

Good luck.

//Andrei

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have extract full load from Ftp in extract folder and have taken a backup for the same . Now I have several incremental files which are created on daily basis on Ftp and which will be overwritten so now should I load all of them to Transform(qvd Folder)????

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

No, you should copy everything from FTP to your local server ![]()

take FULL and couple INCREMENTAL files and try to do this in "Sandbox" (i.e. separately), until you feel yourself comfortable with solution.

Ultimately, it doesn't matter how you call folders and where you place QVD files - concept will work anyway.

1. Load * from your FULL qvd file and store it with different name X in the same place (you will update this copy, but not original one). (Or just copy-paste file)

2. Load inc1 file and concatenate with just created X qvd WHERE NOT EXISTS (UniqueID);

3. Store resulting table into X qvd - overwrite changes;

4. Load inc2 file and concatenate with just updated X qvd WHERE NOT EXISTS (UniqueID);

5. Store resulting table into X qvd - overwrite changes;

....

conitnue with steps 4. and 5. infinitely.

Your X qvd file will contain updated data.

Hope this helps.

Andrei

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

this where condition i have to implement in old qvd(data dump ) or new inc1 file?

- « Previous Replies

- Next Replies »