Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- All Forums

- :

- QlikView App Dev

- :

- Re: Join-Qualify, vs Join-Concatenate vs Join-Load...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Join-Qualify, vs Join-Concatenate vs Join-Load from QVD

Hi

I have the same pair of tables that have to be joined, from multiple databases (About 20).

I need to create one table with all of this tables, but I don't k now which is the best way to do this. I've tried the following:

In the real world, the pair are about 6 tables with attributed to be joined for each database.

1° Try:

-Connect to First Database

-Do a Join on each pair of tables

-Then Connect to Second Database

Concatenate and Join to First tables.

-Not working because the second Join is not working.

-I think it's the best way because I need to connect only one time to each database, and the databases will be separated in the load script. (The bad part it that it's not working ![]() )

)

-I think I will have to use qualify, but I don't now how to use here.

2° Try:

Load The Customers Table from All the Databases

Load the Income table from All the Databases

Join the Tables

-This works, but I need to connect many times to each database, and if for some reason I had troubles with one of these, I will need to edit the whole script, not only one "Tab".

3° Try

-Connect to First Database

-Do a Join on each pair of tables

-Store on a QVD.

-Drop Table.

-Connect to Second Database

-Do a Join on each pair of tables

-Join with first QVD..

-I need to connect only one time to each database, and the databases will be separated in the load script.

-The bad part it that I need to create one QVD for each table, and load to memory twice(when connecting to DB and when loading from QVD), making the script slower.

Finally, for some reason, with 2° and 3° try, I lost an ID. I think it's another trouble, but I'm not sure why I list that ID.

Hope somebody can explain

a) What is the best approach to join multiple tables without having to connect multiple times to same server and without loading multiple times the same data to memory.

b) Why one key is lost with approach 2 and 3.

Thanks!

Accepted Solutions

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Personally I wouldn't worry about the number of connections else about the number of records which are to pull and how much workload it caused. If this is significant in any way I would suggest to consider incremental load-appoaches and onyl pull new/changed data.

Quite common in such scenarios is the use of multiple stages in the ETL process, for example a 3-tier or 4-tier data-architecture with several generator-qvw's which pull the data (incremental) from the database and store them into qvd's. The second step would be to use several generator-qvw's which merge the qvd-data per join/mapping or concatenating into bigger data-files - maybe also with incremental approaches.

The third/fourth step would be to create from these and other data-files a (or several) datamodels and reports.

It sounds like much work but by bigger and/or complex tasks it's very useful to split them into multiple small parts which could be more easily developed/maintained and could be of course parallelized by doing this and by a probably automated execution.

- Marcus

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Personally I wouldn't worry about the number of connections else about the number of records which are to pull and how much workload it caused. If this is significant in any way I would suggest to consider incremental load-appoaches and onyl pull new/changed data.

Quite common in such scenarios is the use of multiple stages in the ETL process, for example a 3-tier or 4-tier data-architecture with several generator-qvw's which pull the data (incremental) from the database and store them into qvd's. The second step would be to use several generator-qvw's which merge the qvd-data per join/mapping or concatenating into bigger data-files - maybe also with incremental approaches.

The third/fourth step would be to create from these and other data-files a (or several) datamodels and reports.

It sounds like much work but by bigger and/or complex tasks it's very useful to split them into multiple small parts which could be more easily developed/maintained and could be of course parallelized by doing this and by a probably automated execution.

- Marcus

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

a) The reason why your first script does not work as expected: After your first DB access & table join, you have a resident table with fields ID, Year and salary. In your second DB access & JOIN, the already existing salary field will be used as additional key field, and this not what you want.

You would need to create a temporary table in the subsequent table joins and concatenate the temporary table to your final table:

[Customers]:

LOAD Year&ID as Key,

Year,

ID,

Name,

RandomA

FROM

[Customers.xlsx]

(ooxml, embedded labels, table is Employee1);

Join (Customers)

LOAD Year&ID as Key,

Salary

FROM

[Customers.xlsx]

(ooxml, embedded labels, table is Income1);

//OLEDB CONNECT TO.... Second Database

TMP:

NoConcatenate

LOAD Year&ID as Key,

Year,

ID,

Name,

RandomA

FROM

[Customers.xlsx]

(ooxml, embedded labels, table is Employee2);

Join (TMP)

LOAD Year&ID as Key,

Salary

FROM

[Customers.xlsx]

(ooxml, embedded labels, table is Income2);

Concatenate (Customers)

LOAD * Resident TMP;

Drop Table TMP;

You probably noticed that this is basically your script #3 using temporary resident tables instead of QVDs.

b) if you want to get rid of the ID 6 in your resulting table, you would need to use a LEFT JOIN instead of a JOIN

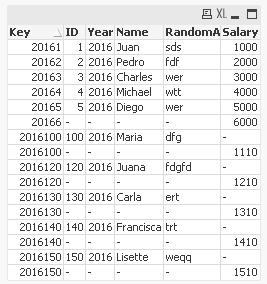

| Key | ID | Year | Name | RandomA | Salary |

|---|---|---|---|---|---|

| 20161 | 1 | 2016 | Juan | sds | 1000 |

| 20162 | 2 | 2016 | Pedro | fdf | 2000 |

| 20163 | 3 | 2016 | Charles | wer | 3000 |

| 20164 | 4 | 2016 | Michael | wtt | 4000 |

| 20165 | 5 | 2016 | Diego | wer | 5000 |

| 2016100 | 100 | 2016 | Maria | dfg | 1110 |

| 2016120 | 120 | 2016 | Juana | fdgfd | 1210 |

| 2016130 | 130 | 2016 | Carla | ert | 1310 |

| 2016140 | 140 | 2016 | Francisca | trt | 1410 |

| 2016150 | 150 | 2016 | Lisette | weqq | 1510 |

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If you can create a SELECT statement that performs the JOIN on the remote DBMS, you can create a simple loop that iterates over all data bases, connects and read the join'ed tables, autoconcatenating to your final table.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks! How can I create a loop that iterates over databases? I know how to create a loop.. but how do I change the string connection in each loop?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks!. I was trying someway to do the second join with qualify with no luck.

But your solution is more elegant, and works ![]() .

.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks. I thought that multiple connections to the same server would be resource-intensive.

Your idea of multiple qvd is a better one, but as you said, it sound like much work, but seems to be easy to maintain.