Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- All Forums

- :

- QlikView App Dev

- :

- Re: code to reload qvw only after source data is l...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

code to reload qvw only after source data is loaded successfully

Hi All,

we're having a requirement where we need to reload qvw only after source data is loaded successfully.

I have gone through EDX functionalities, but our requirement is quite different.

Our ETL team creates a table with all the source tables information and a FLAG ( 1- success, 0 - Failure) in DB.

we need to reload QVW only when the flag is 1.

For example:

| TableName | Start Date | End Date | Flag |

|---|---|---|---|

| A | 10/22/2018 10:00:00 AM | 10/22/2018 10:30:00 AM | 1 |

| B | 10/22/2018 11:00:00 AM | 10/22/2018 11:02:00 AM | 0 |

I want to load only the qvw which is using table A as source.

Could someone please help me with the code or solution to achieve the same.

Please do the helpful.

Thanking in Advance.

Regards,

Jesh

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello Jeshwanth,

If you used task dependency, you could put a .QVD loader at the bottom of the task chain, and then a reload per .QVW/Table pairing at the next tier up

[TABLE_UPDATES]:

LOAD * INLINE [

TableName, Start Date, End Date, Flag

A, 10/22/2018 10:00, 10/22/2018 10:30, 1

B, 10/22/2018 11:00, 10/22/2018 11:02, 0

]

WHERE Flag = 1;

//Presumption is the Table status will only have 1 distinct row per table name, so no distinct, or Max value, or grouping is required

FOR i = 0 to NoOfRows('TABLE_UPDATES') -1

Let vTableName = Peek('TableName',$(i),'TABLE_UPDATES');

Trace Perform a table update for table: $(vTableName);

[$(vTableName)]:

//LOAD .... ; //insert reload query here

STORE * FROM [$(vTableName)] INTO [QVD\$(vTableName).QVD] (qvd);

//have this .QVW reload as the first task in task dependency. It refreshes the full QVD set, and then later .QVW per TableName are dependent upon this.

NEXT //$i

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Evan,

Thanks for your suggestion.

I have one question here, I'm having a total of 95 such tables in my DB and one QVW may use mutliple tables at a time. Here, in this scenario, there might be a situation where some tables fail and some get success. If it's a success, i want my QVD to be updated with the latest data and if it's a failure, the old data needs to be as it is in QVD. How can i achieve the same?

Do I need to use the same logic for all the 95 QVWs as the first piece of code?

Regards,

Jesh

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello Jeshwanth,

As you add details regarding your situation, we can identify adjustments to the approach.

This situation has 3 elements to solve:

- Control table granularity needs to move from the table level to application level (each QVW needs to evaluate multiple tables before deciding to run.)

- Performed in bulk (doing every evaluation of GO vs. NOGO via chain dependent tasks would be possible, but… complicated & high maintenance. Lots of parts.)

- QVW's need to preserve rollback data when NOGO.

1. Control table granularity

Each .QVW’s reload is determined by update of 1..N source inputs, therefore a row denoting update of a single table is not enough to decide engaging mechanism whether to reload a specific .QVW

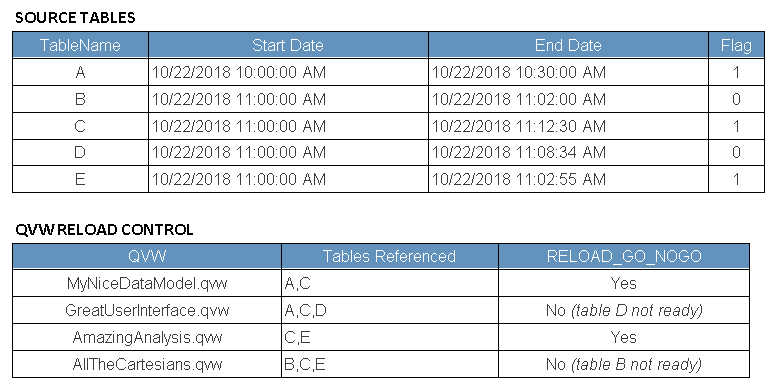

You either need a new table, which creates a row per .QVW indicating GO/NOGO reload status, or each .QVW needs the logic embedded which can evaluate the appropriate subset of table rows, to make the decision. (i.e. in tables shown in image [SOURCE TABLES] is now precursor to [QVW RELOAD CONTROL])

2. Performing in bulk

If this sequence has to cover 90+ .QVWs, creating unique sets of pre-cursor apps or dependency triggers per QVW gets overly complicated. Sequencing becomes a maintenance burden (especially if the trigger chains have to be migrated, multiple QMC backups, etc..)

We need a‘graceful exit’ from the ultimate .QVW layer, the ability to evaluate GO/NOGO conditions early in the process, and make the decision to go forward or keep the prior reload in place.

3. Preserving roll-back

Not claiming this is an approved method, but works on our current host’s QlikView platforms.

Create an ungraceful script exit, and deliberately cause the reload task to fail. This results in the prior published .QVW to remain unchanged on the AccessPoint.

By setting ErrorMode =2 QlikView will trigger an "Execution of script failed..." error message immediately on failure, without prompting the user for action beforehand.

Set ErrorMode = 2;

Let vShouldIReloadIt = *TBD* ; //stick results of the GO/NOGO logic in this variable

IF '$( vShouldIReloadIt)' = 'NO' THEN

TRACE *** DATA UNCHANGED ***;

TRACE Data inputs have unchanged timestamps;

TRACE Reload terminated;

'this is an ungraceful error in conjunction with ErrorMode 2';

ELSE

TRACE *** NEW DATA DETECTED ***;

TRACE *** NEW DATA DETECTED ***;

TRACE Data input timestamp change detected;

TRACE Carrying on;

END IF

Inserting this script prefix in front of apps lets the .QVW perform GO/NOGO check without needing dependency task configured in the QMC. I like techniques like this because mitigate moving parts.

One concern is this generates error messages on the QMC which are evidence of a healthy process. We want reload to abort if data wasn’t ready, so the error is a good thing, or at least not the present .QVW’s problem. Just don’t let data error messages get confused with genuine technical malfunction.

Maybe there’s other ways to trigger graceful exit of reload (maybe exit reload & rollback becomes a feature?), but this covers what you’re asking.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Evan, thanks for your suggestion.

I'll try the solution and let you know.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Evan,

Just tried your solution.

Creating a Reload QVW control table is becoming a big task.

By your approach, do you mean that we need to have the table in DB or do we need to create the same in Qlikview.

If we need to create the same in QV, could you please guide me with the steps.

Regards,

Jesh

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Now, the client came up with the new requirement.

our QV needs to check the table in DB until the flag becomes 1.

If you have any idea on this, could you please help.

The QVW needs to reload only when the flag is 1 until it becomes 1 the old data should remain the same.

Actually, in our current scenario, the tables are scheduled to be loaded at 7PM.

Our QV files start loading at 8PM.

Sometimes, the table loading is getting delayed and the files are being published with 0 data. This needs to be avoided. Our QVW needs to be loaded only when the tables are loaded successfully.

Please help.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello Jeshwanth,

The approach outlined earlier doesn't change.

If you're running a publishing job, and the job fails ungracefully the QMC will not overwrite the distribution copy (if you're reloading in place, I would migrate away from this technique, not a best practice for distribution. This would mean you're putting copies accessible to the users with all the load scripts intact, no section access, etc..).

So the mechanism you're putting in front of each QVW is the same. If it doesn't find rows that indicate all lineage source tables are ready, it will cause the reload distribution task to fail, which means the prior distribution copy is not overwritten in the User document mount folder.

Here is an example of how you can roll-up the control tables to make your evaluation per qvw:

//These are your sequencing tables. This metadata has to be collated somehow. If there is a need to audit full data lineage across an entire large collection of .QVW in bulk, you may want to investigate the Governance Dashboard or QlikView Cookbook QV_Lineage functions. These can automate data lineage collection, but require configuration know-how to set up (closest thing can offer to a shortcut). This example starts from the point the sequencing table declares which data tables each .QVW sources as inputs.

[QVW_SOURCE_TABLES_REFERENCED]:

LOAD DISTINCT QVWName, SubField(TablesUsed,',') AS TableName;

LOAD * INLINE [

QVWName, TablesUsed

MyNiceDataModel.qvw, "A,C"

GreatUserinterface.qvw, "A,C,D"

AmazingAnalysis.qvw, "C,E"

AllTheCartesians.qvw, "B,C,E"

];

JOIN( QVW_SOURCE_TABLES_REFERENCED)

LOAD * INLINE [

TableName, Start Date, End Date, Flag

A, 10/22/2018 10:00, 10/22/2018 10:30, 1

B, 10/22/2018 11:00, 10/22/2018 11:02, 0

C, 10/22/2018 11:00, 10/22/2018 11:02, 1

D, 10/22/2018 11:00, 10/22/2018 11:02, 0

E, 10/22/2018 11:00, 10/22/2018 11:02, 1

]WHERE Flag = 1;

//The portion above would naturally be moved from INLINE tables in script to sourcing from wherever in your environment you stage your master sequencing tables

//they are INLINE here to illustrate

//The next portion is the GO vs. NO_GO evaluation used in front of every QVW. This shouldn't need changed and can be referenced via INCLUDE file. (Also a good practice, if you decide to modify or rewrite this section, you can adjust every referencing QVW without having to recode each one)

[TEST_FOR_GO_NOGO]:

LOAD * WHERE GO_NOGO = 0 AND QVWName = DocumentName();

LOAD QVWName,NullCount(Flag) AS GO_NOGO RESIDENT QVW_SOURCE_TABLES_REFERENCED GROUP BY QVWName;

IF NoOfRows('TEST_FOR_GO_NOGO') > 0 THEN

Let vReloadStatus = 'YES, LETS RELOAD ' & DocumentName() ;

ELSE

Let vReloadStatus = 'NOPE, NOT GONNA DO IT FOR ' & DocumentName();

END IF

TRACE $(vReloadStatus);

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Evan,

Do i need this code in all the 95 qvws.

And also i don't see any loop in your code to check the DB continuously to check for Success Status.

| TableName | Start Date | End Date | Status |

|---|---|---|---|

| A | 10/30/2018 05:00:00 AM | 10/30/2018 05:10:00 AM | Success |

| B | 10/30/2018 06:00:00 AM | 10/30/2018 06:10:00 AM | Success |

| C | 10/30/2018 05:20:00 AM | 10/30/2018 05:40:00 AM | Fail |

| D | 10/30/2018 05:00:00 AM | ||

| E | 10/30/2018 05:00:00 AM | - |

Let's say my QVW is using tables A and E.

There might be a scenario where DB load might take sometime and our QVW file needs to check at the table continuously until we get some status or else after long time (say, 1 hour) suspend it.

Now, my requirement is my file needs to continuously check for the statuses of A and E until they are either Success or Fail.

My QVW starts reloading only after both the statuses are available. Else, wait for some time and re-check the DB for status.

So, if both the tables are success, reload it else remain with the old data.

You have given me the solution for a success. But how to continuously check with DB for the success or fail status.

Please help. I'm totally struck with the solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello Jeshwanth,

The only code you add to the actual .QVWs would need to be an include line at the very front of the script (e.g.)

$(Must_Include=D:\MyQlikViewPath\QVS\TheTableIsReadyChecker.qvs);

In that externalized .qvs file, place the script section that reads whether tables are prepared and whether to proceed with reload or not. If you use an include file your mechanism will be standardized across the .QVW palette.

The last step is creating a distribution task on the QMC set for some very short interval, perhaps 5-minutes. Every 5 minutes it will check your list of ready tables and once all are indicated present it will reload the data.

Now your new problem is once your list of ready tables indicates all the precursors are present, how do you prevent it from reloading fresh data every 5 minutes?

You need a method to switch the fresh data reload "off" once all the tables were detected present and a first pass refresh was performed. Maybe you need to extend your INCLUDE with some Now() or LastReloadTime() trapping variable that says GO / NO_GO can only succeed 1x per calendar day?

But that's a brand new problem...