Subroutine to Create Data Model for From/To Timestamp Selection

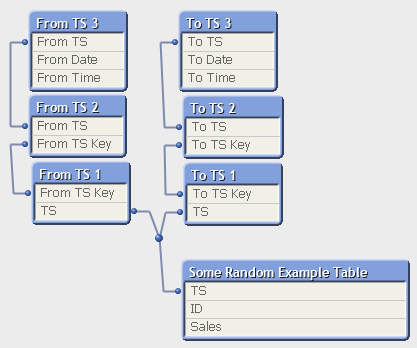

This is an expansion on and simplification of the subroutine I posted for from/to date selection. It essentially uses two "AsOf" tables in parallel, one pointing forward in time, the other pointing backwards in time. Or more generically, one pointing forward through sortable field values, one pointing backward through sortable field values. These then intersect to connect to all values that are within the defined range.

Normally that technique would fall apart with larger numbers of distinct values, as every value in the From AsOf table must connect to every value greater than it, and every value in the To AsOf table must connect to every value less than it. As a result, for N distinct values, you need on the order of N*N rows of data across the two tables. If you have 100 distinct values, that's not really a problem. But if you have 100,000 distinct values, you're looking at roughly 10,000,000,000 rows, which just isn't doable.
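To make the N*N problem concrete, here is a minimal Python sketch (not QlikView script) of the naive version. It assumes the distinct values have already been sorted and numbered 1..N, and the function names are made up for illustration. Each table links a selected value to itself and to everything on one side of it, and the range is whatever both selections have in common.

```python
def naive_asof_tables(n):
    """Build naive From and To AsOf tables for values numbered 1..n."""
    # From table: each From value links to itself and everything after it.
    from_rows = [(f, v) for f in range(1, n + 1) for v in range(f, n + 1)]
    # To table: each To value links to itself and everything before it.
    to_rows = [(t, v) for t in range(1, n + 1) for v in range(1, t + 1)]
    return from_rows, to_rows


def select_range(from_rows, to_rows, f, t):
    """Values associated with both the selected From value and To value."""
    forward = {v for key, v in from_rows if key == f}
    backward = {v for key, v in to_rows if key == t}
    return sorted(forward & backward)


from_rows, to_rows = naive_asof_tables(100)
print(len(from_rows) + len(to_rows))           # 10100
print(select_range(from_rows, to_rows, 3, 6))  # [3, 4, 5, 6]
```

In this model the two tables together hold exactly N*(N+1) rows, which is where the roughly N*N growth comes from.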

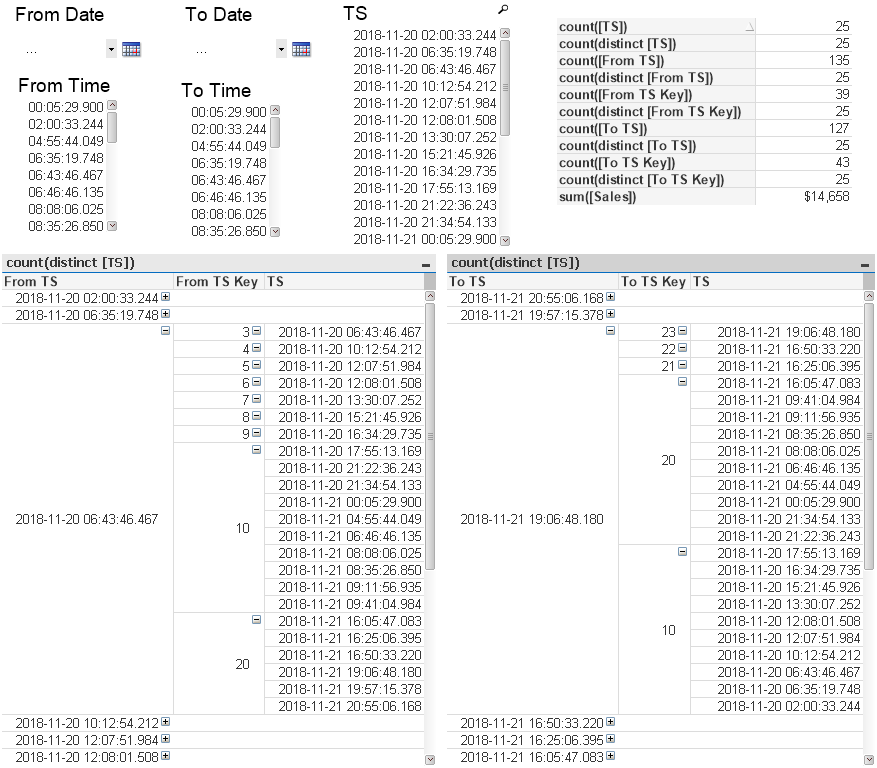

The subroutine reduces this dramatically, generating on the order of N log N rows. I'm using it successfully in an application with about 600,000 distinct timestamps, though with some minor tweaks I believe the subroutine could be adapted for any type of sortable values. You tell it the field of interest and names for all of the fields it will generate. It extracts all values of the field of interest, then sorts and numbers those values. It then builds groupings of those values by powers of 10, each with its own key. At the bottom level, 1 = 1, 2 = 2, and so on. At the next level, 10 = 1-10 (on the To side, which rounds up) or 10-19 (on the From side, which rounds down), 20 = 11-20 or 20-29, and so on. Then 100 = 1-100 or 100-199, 200 = 101-200 or 200-299, and so on up through each power of 10. The AsOf tables use those keyed groupings, so each value links to logarithmically fewer rows.
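The grouping is easier to follow with numbers in hand. Below is a Python sketch (again, not QlikView script) that mirrors the arithmetic of the four tables built inside the subroutine's loop. It assumes values are numbered 1..n like the ID column, and the function names are my own labels. A value falls in the range when it shares a From key with the selected From value and a To key with the selected To value.

```python
LEVELS = range(6)  # mirrors FOR I = 0 TO 5 in the subroutine


def value_from_keys(i):
    """[FTS 1]: floor-based group keys attached to the value numbered i."""
    return {(i // 10**lv) * 10**lv for lv in LEVELS if (i // 10**lv) % 10 > 0}


def value_to_keys(i, n):
    """[TTS 1]: ceil-based group keys attached to value i, capped at n."""
    keys = set()
    for lv in LEVELS:
        p = 10**lv
        k = -(-i // p) * p  # i rounded up to a multiple of p
        if (k // p) % 10 > 0 and k <= n:
            keys.add(k)
    return keys


def from_selection_keys(i, n):
    """[FTS 2]: group keys that a selected From value i links to."""
    keys = set()
    for lv in LEVELS:
        p = 10**lv
        base = ((i - 1) // p) * p
        for it in range(1, 10 - ((i - 1) // p) % 10):
            if base + it * p <= n:
                keys.add(base + it * p)
    return keys


def to_selection_keys(i):
    """[TTS 2]: group keys that a selected To value i links to."""
    keys = set()
    for lv in LEVELS:
        p = 10**lv
        for it in range(1, (i // p) % 10 + 1):
            keys.add((i // p) * p + (1 - it) * p)
    return keys


def select_range(f, t, n):
    """Values reachable from both the From selection f and the To selection t."""
    fk, tk = from_selection_keys(f, n), to_selection_keys(t)
    return [v for v in range(1, n + 1)
            if value_from_keys(v) & fk and value_to_keys(v, n) & tk]


print(sorted(to_selection_keys(123)))  # [100, 110, 120, 121, 122, 123]
print(select_range(118, 123, 500))     # [118, 119, 120, 121, 122, 123]
```

Selecting a To value of 123 needs only six keys: 121 through 123 one at a time, 110 and 120 covering ten values each, and 100 covering the first hundred.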

600,000 distinct values is still a bit of a challenge, and adding these tables more than doubled the load time and size of my application. So I don't recommend going much higher than that. The subroutine caps out at 999,998 values. This limit is easily raised by orders of magnitude by bumping up the for/next loop limit, but you may not like what happens if you have 50 million distinct values in the field and try to apply this technique.
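For a feel of how the table sizes grow, here is a rough Python count of the rows produced by the four tables built inside the loop, next to the naive N*(N+1). It is a sketch only: it assumes values numbered 1..n, ignores the tables ending in "3" and the rest of the data model, and says nothing about actual load time or memory in QlikView. The `levels` parameter is a stand-in for the for/next loop limit.

```python
def linkage_rows(n, levels=6):
    """Rows generated by the four looped tables for values numbered 1..n."""
    rows = 0
    for lv in range(levels):
        p = 10**lv
        for i in range(1, n + 1):
            # [FTS 1]: one row when the digit at this level is non-zero.
            rows += int((i // p) % 10 > 0)
            # [TTS 1]: one row when the rounded-up key is usable and within n.
            k = -(-i // p) * p
            rows += int((k // p) % 10 > 0 and k <= n)
            # [FTS 2]: up to nine forward keys, dropping any key beyond n.
            base = ((i - 1) // p) * p
            rows += sum(1 for it in range(1, 10 - ((i - 1) // p) % 10)
                        if base + it * p <= n)
            # [TTS 2]: one backward key per unit of the digit at this level.
            rows += (i // p) % 10
    return rows


def naive_rows(n):
    """Rows in the two naive AsOf tables combined."""
    return n * (n + 1)


for n in (100, 1000, 10000):
    print(n, linkage_rows(n), naive_rows(n))
```

For very small fields the grouped tables are actually larger than the naive ones (108 rows versus 90 for nine values), so the technique only pays off once the number of distinct values gets large.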

This isn't necessarily the best technique, and certainly not for every application. You can use from/to variables to trigger a selection in the main field. You can use set analysis. You have options. I just tend to prefer data model solutions. I want my complexity in the script, not in the front end. I like making normal selections rather than using variables or set analysis. And I wanted to prove to myself that it was possible to handle even a difficult case like this with the data model, and that it could be done in a generic way rather than in the very date-specific way of my previous subroutine.

For my application, I generated a from date, from time, to date, and to time, requiring the tables ending in "3". These aren't strictly needed, but I think it's a lot easier to select a date, then select a time, than to try to scroll a list of 600,000 timestamps. And while it isn't in the subroutine, my application then generated calendars with date, month, and year to connect to each to make the selection of the range even easier. Many people might prefer to use calendar objects on the date fields rather than creating calendar tables. Plenty of options. To turn this into a date-range subroutine, remove the tables ending in "3" and the associated parameters.

I'm still on QlikView 11, and tuned the script performance for that, so I can't speak to the performance in QlikView 12. Hopefully it's the same or better. Hopefully it's bug free, particularly since I have it in a live application, but if bugs are pointed out to me, I'll correct them.

This is the subroutine. I find it easier to read in the attached example file since it's colored properly there.

SUB CreateFromToLinkage(TS,FTS,TTS,FD,TD,FT,TT,FK,TK)

// Extract every distinct value of the field of interest.

TS: LOAD timestamp(fieldvalue('$(TS)',recno())) as [$(TS)] AUTOGENERATE fieldvaluecount('$(TS)');

// Sort the values and number them.

ID: LOAD [$(TS)],rowno() as ID RESIDENT TS ORDER BY [$(TS)];

// One pass per power of 10.

FOR I = 0 TO 5

LET P = pow(10,I);

// Link each value to its group key at this level. From keys round down, To keys round up.

[$(FTS) 1]: LOAD floor(ID,$(P)) as [$(FK)],[$(TS)] RESIDENT ID WHERE mod(floor(ID/$(P)),10) > 0;

[$(TTS) 1]: LOAD ceil(ID,$(P)) as [$(TK)],[$(TS)] RESIDENT ID WHERE mod(ceil(ID/$(P)),10) > 0 and ceil(ID,$(P)) <= fieldvaluecount('$(TS)');

// Link each From value to the group keys at or after it, and each To value to the group keys at or before it.

[$(FTS) 2]: LOAD floor(ID-1,$(P))+iterno()*$(P) as [$(FK)],[$(TS)] as [$(FTS)] RESIDENT ID WHILE iterno() <= 9 - mod(floor((ID-1)/$(P)),10) and floor(ID-1,$(P))+iterno()*$(P) <= fieldvaluecount('$(TS)');

[$(TTS) 2]: LOAD floor(ID,$(P))+(1-iterno())*$(P) as [$(TK)],[$(TS)] as [$(TTS)] RESIDENT ID WHILE iterno() <= mod(floor(ID/$(P)),10);

NEXT

// Split the From and To timestamps into a date and a time for easier selection.

[$(FTS) 3]: LOAD [$(TS)] as [$(FTS)],date(floor([$(TS)])) as [$(FD)],time(frac([$(TS)])) as [$(FT)] RESIDENT TS;

[$(TTS) 3]: LOAD [$(TS)] as [$(TTS)],date(floor([$(TS)])) as [$(TD)],time(frac([$(TS)])) as [$(TT)] RESIDENT TS;

DROP TABLES TS,ID;

END SUB

Here are a couple of pictures from the attached example file to help show what's going on: