Qlik Catalog Release Notes - May 2023 Initial Release to SR4

Table of Contents

- What's new in Qlik Catalog May 2023 SR4

- Fix: Remove Problematic Trailing Slash After /isUserNameAvailable

- Fix: Publishing for Entities That Have Whitespace(s) In Their Names Was Failing

- Fix: podman pause-3.5 Container Installation Failed

- Fix: Broken Isolated Classpath for Kerberized Hadoop/Hive JDBC Driver

- Catalog Installer Improvements

- API Documentation Extended with Script Examples

- What's new in Qlik Catalog May 2023 SR3

- Fix: Audit Log User, for Load Jobs, Should Not Be ANONYMOUS

- Fix: Slow Performance or OutOfMemory for Load Logs with Very Large Details

- Red Hat Enterprise Linux (RHEL) 9.2 Installation Improvements

- SAML SSO Logging Improvement and Fix

- Sample Script to Invoke API for Loading Entities

- What's new in Qlik Catalog May 2023 SR2

- Fix: Field Change Detection for ADDRESSED Entities from JDBC Sources

- Fix: Podman pause Image Missing on Ubuntu 22.04 Installations

- Update: Prerequisites Installation of Node.js

- What's new in Qlik Catalog May 2023 SR1

- Enhancement: Audit Log Entries for Source, Entity, and Field Changes

- Fix: Browser Out-of-Memory Due to Large Import Logs

- Updates

- What's new in Qlik Catalog May 2023

- Scheduling Data Loads

- Multi-Node Deployment Option Removed

- Updates

- Resolved Defects

- Fixed: Properties Are Editable When They Should Not Be

- Downloads

The following release notes cover the version of Qlik Catalog released in May 2023. For questions or comments, post in the Product Forums or contact Qlik Support.

What's new in Qlik Catalog May 2023 SR4

Fix: Remove Problematic Trailing Slash After /isUserNameAvailable

The trailing slash in the /isUserNameAvailable endpoint caused unexpected encoding results in some cases. Removing it resolved the encoding issue, providing consistent results for endpoint invocations.

Fix: Publishing for Entities That Have Whitespace(s) In Their Names Was Failing

The code that manages permission changes on published files did not support whitespace in entity names. This fix adds support for such names.

Fix: podman pause-3.5 Container Installation Failed

When the Catalog installer ran on Ubuntu, pulling the pause-3.5 container required by podman sometimes failed. To address this, the pause-3.5 container is now included with the other containers in the QDC binaries.

Fix: Broken Isolated Classpath for Kerberized Hadoop/Hive JDBC Driver

A classpath issue was identified when interacting with a Kerberized Hadoop/Hive cluster: the isolated driver classpath was mixed with the general classpath, causing library conflicts that prevented communication with the cluster. This fix ensures that the Hive JDBC driver is fully isolated from the main application classpath.

Catalog Installer Improvements

- Enhanced super-user verification during first-time installation.

- Improved Tomcat shutdown process during QDC upgrades, where the installer now waits until Tomcat is fully stopped.

- Improved Node.js installation: if Node.js is present but at a version below the required one, the installer will now upgrade it.

API Documentation Extended with Script Examples

Two example shell scripts have been added to the Swagger documentation:

- Entity API - Script for the entity loading process.

- Property Definition API - Script for property definition creation, covering both default and regular definitions.

What's new in Qlik Catalog May 2023 SR3

Fix: Audit Log User, for Load Jobs, Should Not Be ANONYMOUS

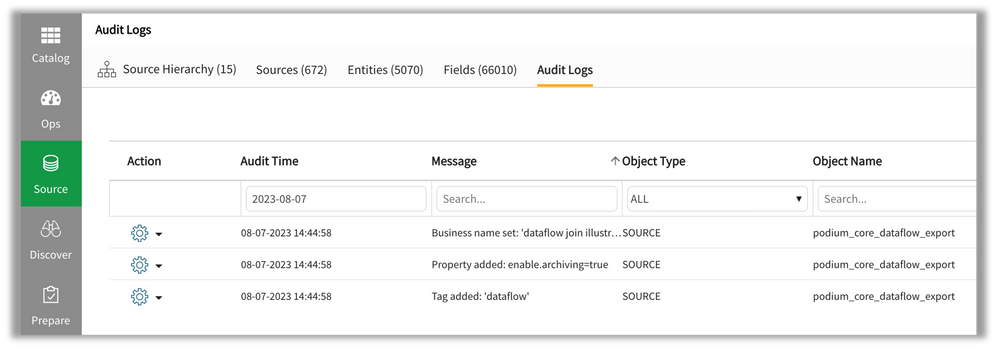

On the Source module, "Audit Logs" tab, an audit entry is created for each load, prepare and publish job that is executed, whether run immediately or scheduled.

However, the "User Name" was incorrectly recorded as "ANONYMOUS" rather than the user that initiated the job. This has been fixed.

Please note that the "IP" and "Browser Information" fields were also set to "ANONYMOUS". These two fields are now set to "NOT_APPLICABLE".

Fix: Slow Performance or OutOfMemory for Load Logs with Very Large Details

For customers who have run thousands of load jobs, each of which may have large details, query performance when displaying a Source's entities, or an entity's load logs (and details), could degrade drastically, even resulting in OutOfMemory errors. One solution is to delete old load logs. Alternatively, a new core_env property has been introduced to alter how the backend queries load logs and their details. Consider setting this property to false if this issue arises:

# There are entity retrieval APIs (e.g., /entity/external/entitiesBySrc/{srcId})

# that query the database for all load logs (aka work orders) for the entities.

# For tens-of-thousands of work orders, with large details, this can overwhelm

# the backend, resulting in OutOfMemory. Setting this property to false will

# skip the work order database query. To enable this workaround, set this

# property to false. NOTE: If set to false, total and latest record counts will

# display as "N/A" in the Source module entity grid table. The record counts are

# available in the Discover module entity grid table or when viewing load logs

# (at either the grid table or detail level).

#always.attach.load.logs.and.details=true
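As a minimal sketch of applying this workaround, the helper below rewrites the text of a properties file so that a commented-out property is uncommented and given a new value. The function name `set_property` is illustrative only and is not part of Catalog; the property name is the one shown above.

```python
import re


def set_property(text: str, key: str, value: str) -> str:
    """Return properties text with `key` set to `value`.

    An existing line for the key (commented out with '#' or not) is replaced
    in place; if no such line exists, the assignment is appended.
    """
    # [ \t]* rather than \s* so the match never swallows neighbouring blank lines.
    pattern = re.compile(
        r"^[ \t]*#?[ \t]*" + re.escape(key) + r"[ \t]*=.*$", re.MULTILINE
    )
    new_line = f"{key}={value}"
    if pattern.search(text):
        # Replace only the first occurrence; a callable avoids escape handling.
        return pattern.sub(lambda _match: new_line, text, count=1)
    separator = "" if text == "" or text.endswith("\n") else "\n"
    return text + separator + new_line + "\n"
```

In practice the text would be read from, and written back to, the core_env.properties file of the installation, whose location depends on the deployment.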

Red Hat Enterprise Linux (RHEL) 9.2 Installation Improvements

Two improvements were made to Catalog installation on RHEL 9.2:

- Installation of Node.js version 18 during execution of CatalogFirstTime.sh (or CatalogPrereqs.sh) failed due to that version's signing algorithm. The Catalog installer now accepts the signing algorithm.

- Catalog runs four containers using podman. The launch and shutdown of these containers has been made less aggressive, leading to more reliable startup by systemd when the host system is restarted.

SAML SSO Logging Improvement and Fix

Two enhancements were made to Catalog's support for SAML single sign-on (SSO):

- Better logging was added when SAML authentication fails, easing the setup and configuration process.

- When "saml.sign.requests.using.tomcat.ssl.cert" was enabled in core_env.properties, the code failed to replace the variable "qdc.home" in the certificate path retrieved from Tomcat's server.xml file. This has been fixed.

Sample Script to Invoke API for Loading Entities

A sample bash shell script is now included to demonstrate how to use the API, via curl, to initiate an entity load. Please see file /usr/local/qdc/apache-tomcat-9.0.85/webapps/qdc/resources/api/loadDataForEntities.sh

What's new in Qlik Catalog May 2023 SR2

Fix: Field Change Detection for ADDRESSED Entities from JDBC Sources

One of the features of Catalog is its detection of metadata changes, particularly for JDBC sources. For REGISTERED and ADDRESSED entities, Catalog detects column additions and deletions, as well as column type changes. This capability is controlled by the following core_env property:

# If true, for:

# (1) ADDRESSED and REGISTERED entities loaded from JDBC relational sources, and

# (2) ADDRESSED entities loaded from FILE sources, then

# column additions and deletions will be detected and applied. If true, business metadata, tags and properties will be

# preserved and not overwritten. Set to false for legacy behavior. Default: false (default to false so it is disabled

# for legacy customers that upgrade)

support.schema.change.detection=true

However, when this property was set to "false", for ADDRESSED entities, Catalog was detecting and applying column additions and deletions when it should not have done so.

With this fix, if the above property is "false", for ADDRESSED entities, Catalog will only detect column type updates. This change enables customers initially to select a subset of a table's columns and to have that subset remain unchanged as entities are later loaded.
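The behavior after the fix can be summarized as a small decision function. This is only a sketch of what the notes describe for ADDRESSED entities from JDBC sources, not Catalog's implementation; the function name and the change labels are made up for illustration.

```python
def changes_applied_to_addressed_jdbc_entity(support_schema_change_detection: bool) -> set:
    """Kinds of column changes applied on load for an ADDRESSED entity from a JDBC source.

    Models the behavior described in these notes after the SR2 fix.
    """
    if support_schema_change_detection:
        # Property true: additions, deletions and type changes are detected.
        return {"column_addition", "column_deletion", "column_type_change"}
    # Property false: the selected subset of columns stays as it is;
    # only type updates to existing columns are detected.
    return {"column_type_change"}
```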

Fix: Podman pause Image Missing on Ubuntu 22.04 Installations

When installing on Ubuntu 22.04, the loading of the engine and licenses images did not result in the "pause" image being loaded. This prevented those containers from starting -- the following error was seen:

$ ./launch_qlikContainers.sh

Launch Containers with Podman

Error: k8s.gcr.io/pause:3.5: image not known

The installer has been modified to check for this missing image, and to pull it if necessary.
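A check-then-pull step of this kind can be sketched as follows. This is an illustration under assumptions and not the installer's actual code: the function name `ensure_image` and the injectable `run` parameter are hypothetical, while `podman image exists` and `podman pull` are standard podman commands.

```python
import subprocess


def ensure_image(image: str, run=subprocess.run) -> bool:
    """Pull `image` with podman if it is not in local storage.

    Returns True if a pull was performed, False if the image was already
    present. `run` is injectable so the logic can be exercised without podman.
    """
    # `podman image exists` exits with status 0 when the image is present locally.
    exists = run(["podman", "image", "exists", image])
    if exists.returncode == 0:
        return False
    # check=True raises CalledProcessError if the pull itself fails.
    run(["podman", "pull", image], check=True)
    return True
```

For the error shown above, the call would be `ensure_image("k8s.gcr.io/pause:3.5")`.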

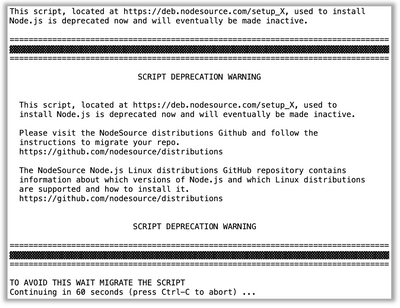

Update: Prerequisites Installation of Node.js

Node.js Deprecation Warning

What's new in Qlik Catalog May 2023 SR1

Enhancement: Audit Log Entries for Source, Entity, and Field Changes

Audit Log entries are now created for the following changes (including additions and deletions) to Sources, Entities, and Fields:

- business metadata

- properties

- tags

This feature has been implemented and tested for two common scenarios:

- import of business metadata

- manual edit in Catalog user interface (UI)

The ordering and display of Audit Log columns have also changed.

Fix: Browser Out-of-Memory Due to Large Import Logs

The Catalog UI exhausted web browser memory when viewing the Admin / Import/Export Metadata page if there were many completed job histories and if the details of given job histories were megabytes in size. This has been addressed by truncating the details of each import/export job when the entire page of job histories is retrieved. The full details are still returned when "View Details" is invoked for an individual record.
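The general pattern (truncated details in the list response, full details on request) can be sketched as below. The field names `details` and `detailsTruncated` and the 200-character limit are hypothetical; the notes do not state the actual cutoff or response schema.

```python
MAX_DETAIL_CHARS = 200  # hypothetical limit; the real cutoff is not stated in these notes


def summarize_job_histories(jobs, limit=MAX_DETAIL_CHARS):
    """Return copies of job records with 'details' truncated for list views.

    The input records are left untouched, so a separate detail lookup can
    still return the full text for a single record.
    """
    summaries = []
    for job in jobs:
        summary = dict(job)  # shallow copy; the original record is not modified
        details = summary.get("details") or ""
        if len(details) > limit:
            summary["details"] = details[:limit]
            summary["detailsTruncated"] = True
        summaries.append(summary)
    return summaries
```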

Updates

Standard upgrades of vulnerable third-party dependencies were made.

Standard upgrade of Apache Tomcat (for first-time installs) was made.

What's new in Qlik Catalog May 2023

Scheduling Data Loads

Data load jobs may now be scheduled for automatic execution. To schedule a recurring data load, visit the Source module, select an entity, and initiate a load. Click on the Scheduling expander in the Data Load modal and enter a Quartz expression, such as:

- 0 */15 * ? * * -- run at 0, 15, 30 and 45 minutes past each hour of every day

- 0 15 10 ? * MON-FRI -- run at 10:15am Monday through Friday

Once the Quartz cron expression has been entered, click OK to schedule the data load job.

For more information, please visit the online help and search for "Scheduling" -- the topic is "Qlik Catalog Data ingest: Loading data".
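A Quartz cron expression lists its fields in the order seconds, minutes, hours, day-of-month, month, day-of-week. To see what a numeric field such as `*/15` or `15` selects, the sketch below expands a single field into the values it matches. It is a simplified illustration, not the Quartz parser: the function name `expand_field` is made up here, and names such as MON-FRI and special characters such as `?` are not handled.

```python
def expand_field(field: str, low: int, high: int) -> list:
    """Expand one numeric cron field (e.g. minutes, 0-59) into the values it matches.

    Supports '*', single values, 'a-b' ranges, 'x/n' steps and comma lists.
    """
    values = set()
    for part in field.split(","):
        base, _, step_text = part.partition("/")
        step = int(step_text) if step_text else 1
        if base == "*":
            start, end = low, high
        elif "-" in base:
            start_text, end_text = base.split("-")
            start, end = int(start_text), int(end_text)
        else:
            start = int(base)
            # 'a/n' runs from a up to the field maximum; a bare 'a' is just a.
            end = high if step_text else start
        if step < 1 or start < low or end > high or start > end:
            raise ValueError(f"invalid field part: {part!r}")
        values.update(range(start, end + 1, step))
    return sorted(values)


# Minutes field of "0 */15 * ? * *"
print(expand_field("*/15", 0, 59))  # [0, 15, 30, 45]
# Minutes and hours fields of "0 15 10 ? * MON-FRI"
print(expand_field("15", 0, 59), expand_field("10", 0, 23))  # [15] [10]
```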

Multi-Node Deployment Option Removed

Qlik Catalog no longer offers a multi-node deployment option. Multi-node deployment allowed Catalog to manage a Hadoop cluster data lake, where data was stored in HDFS or S3 and queried using Hive. Most recently, Catalog supported the Cloudera Data Platform (CDP) and AWS EMR platforms. Consequently, the installer property INSTALL_TYPE is no longer present and need not be set -- all installations now use the single node deployment option.

Updates

Standard upgrades of vulnerable third-party dependencies were made.

Standard upgrade of Apache Tomcat (for first-time installs) was made.

Resolved Defects

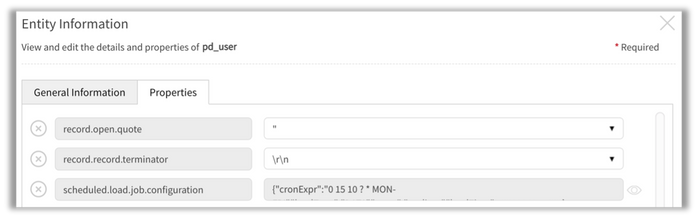

Fixed: Properties Are Editable When They Should Not Be

Jira ID: QDCB-553

Source and entity properties were editable in the case where the "editable" attribute of the property definition was set to false. This has been fixed. Note that these properties may still be deleted (removed) from the entity or source. In the following screenshot, the property "scheduled.load.job.configuration" is not editable:

Downloads

Download this release from the Product Downloads page on Qlik Community.
