Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- Forums

- :

- Analytics & AI

- :

- Products & Topics

- :

- Visualization and Usability

- :

- Error loading data (Field id not found)

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Error loading data (Field id not found)

Hello,

I have an error loading data that I cannot resolve. And despite my research I can't find where the problem comes from (I'm new to Qlik)

Here is my script:

[ssi_failure]:

LOAD id;

Select id FROM "public"."ssi_failure";

Store [ssi_failure] into [lib://CLIENTS_QVD_FILES (fro1vhlqlik1_administrateur)/$(ClientFloderName)/SI/ssi_failure_Manut.qvd] (qvd);

Drop Table [ssi_failure];

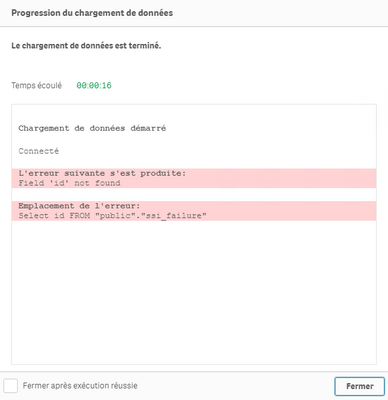

And when I start the data loading, it tells me that it is connected (to the database) then it loads without stopping and without doing anything. When I give up loading the data, I get this error:

Except that the id field is in the database. I think the problem is that there are too many rows in the database (660,000). Because when I select 70 lines the data loading works (60 lines max, after that gives me the same error):

[ssi_failure]:

LOAD id;

Select id FROM "public"."ssi_failure" FETCH FIRST 60 ROW ONLY;

Store [ssi_failure] into [lib://CLIENTS_QVD_FILES (fro1vhlqlik1_administrateur)/$(ClientFloderName)/SI/ssi_failure_Manut.qvd] (qvd);

Drop Table [ssi_failure];

For information the database uses postgreSQL as DBMS.

So I wanted to know if you have a solution to make the first script work.

Thanks in advance !

- « Previous Replies

- Next Replies »

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

There is no problem in the table after 60 rows because I can select 60 rows after 60 rows like this for exemple :

SELECT "id"

FROM (

SELECT row_number() over() AS numLigne, *

FROM "public"."ssi_failure"

) AS sousTable

WHERE sousTable.numLigne between 50 AND 100;- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Your mentioned 660 k of records is from a Qlik point of view a rather small dataset. Nevertheless you may depending on the response-time of the database for the query and of the network performance run into various timeouts. Whereby in regard to your description that even very small datasets results in errors it's rather unlikely that there is a timeout-issue in your case. Nevertheless you may also take a look within the event-log or in the logs of your security tools if there are any hints that the connection might be terminated.

Very important is to understand that Qlik doesn't execute any SQL else it transferred the statement to the driver which then connects to the database which executes the query and returned on this way back the results. If there are any missing fields or records or it breaks with any error the issue is caused from an unsuitable driver and/or wrong driver/database-settings including also all kinds of access-rights.

If your queries now breaks with nearly all fields and also with other tables you may use a wrong driver respectively setting like using a 32bit driver for a 64bit database or the database has settings which return to such an externally call only n records or n bytes or similar limitations are set within the driver or stopping each query if any locking is touched or if any access rights are violated.

Therefore I suggest you check at first if your used user has the appropriate access right - also by accessing from the outside and how the database is configured to external accesses. If this looked right you should take a look on the driver to find out if there are customizable settings or if alternative drivers available.

- Marcus

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Clem

Given that it seems intermittent it could be a timeout or a data value issue, by any chance do you have an ID or any field that you could use to execute a loop? If possible could you try to write a query that gets maybe 500 rows ? If the query fails during the loop of gettting the data it will probably be data value related, maybe something the driver is not able to interpret, but if the loop works fine then it could probably be a timeout, I'm just wondering why you are not getting a timeout error.

It just checked and you should be able to get the timeout with the following statement/query:

https://itectec.com/database/postgresql-how-to-set-statement-timeout-per-user/

Could you verify the current value? keep in mind that when comparing PGAdmin and the connection from Qlik Sense it is necessary to use the same user.

If nothing of the above works could it be possible to use a third party driver and ODBC DSN and verify the outcome? Maybe one of these or any other you are familiar with:

https://jdbc.postgresql.org/download.html

Hope it helps!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Clem

Did you have the chance to try the options suggested above?

Kind Regards.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

hi @NadiaB

Sorry for the late reply.

I changed the statement for the user, but how can I get the query time back after on qlik?

And im sorry i don't understand what can i do with driver ODBC?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The load-times could be easily checked within the document-log and also with a simple trace-statement, like:

let vLoadStart = num(now());

sql ...

let vLoadTime = time(now() - $(vLoadStart));

trace $(vLoadTime );

In regard to the driver you need to install a compatible one in regard to your database-release and also all depending tools/packages - by PostgreSQL at least any java-release. Google and the PostgreSQL community should provide enough hints to select all these information.

- Marcus

- « Previous Replies

- Next Replies »