
QVD building scripts are failing in random order

I have an issue with running Qlik Sense tasks.

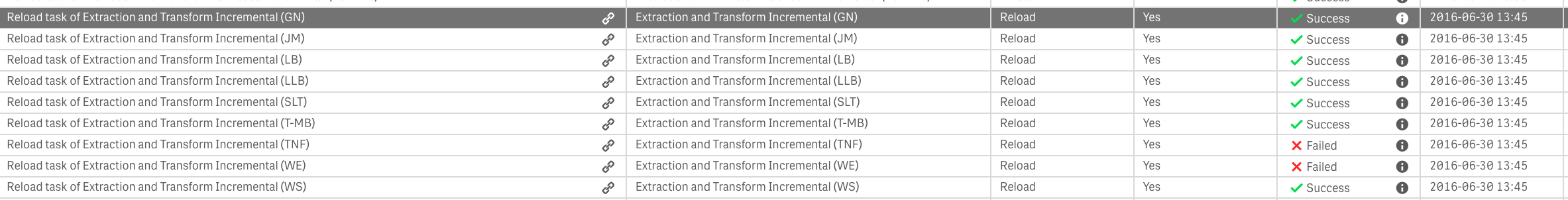

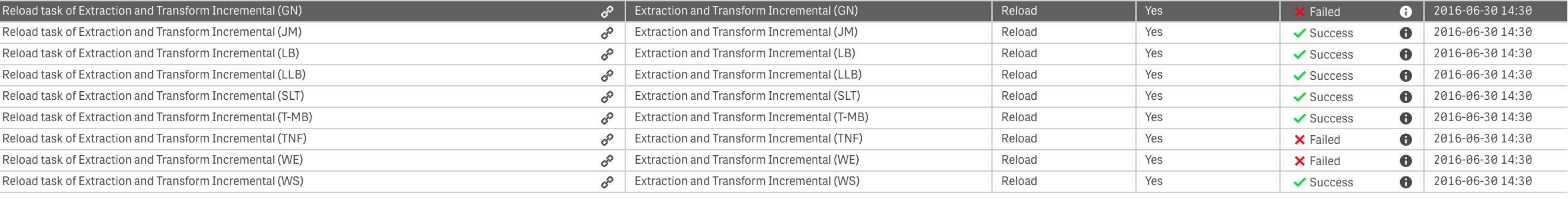

I have several scripts, each of which generates a single independent QVD. I start these loads simultaneously and expected them to complete successfully, but they fail in random order.

The error from the logs:

```
2016-06-30 18:46:01 0239 STORE Perimeter_ INTO 'lib://Transformations /_Perimeter.qvd' (QVD)
2016-06-30 18:46:01 Failed to open file in write mode for fileD:\Transformations\_Perimeter.qvd
2016-06-30 18:46:01 Error: Failed to open file in write mode for fileD:\Transformations\_Perimeter.qvd
2016-06-30 18:46:01 Execution Failed
2016-06-30 18:46:01 Execution finished.
```

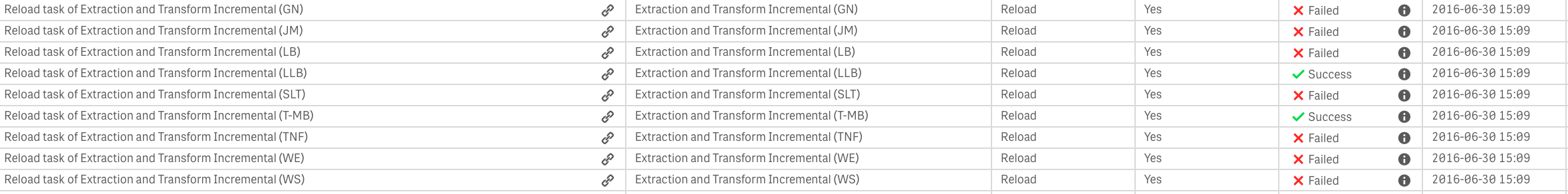

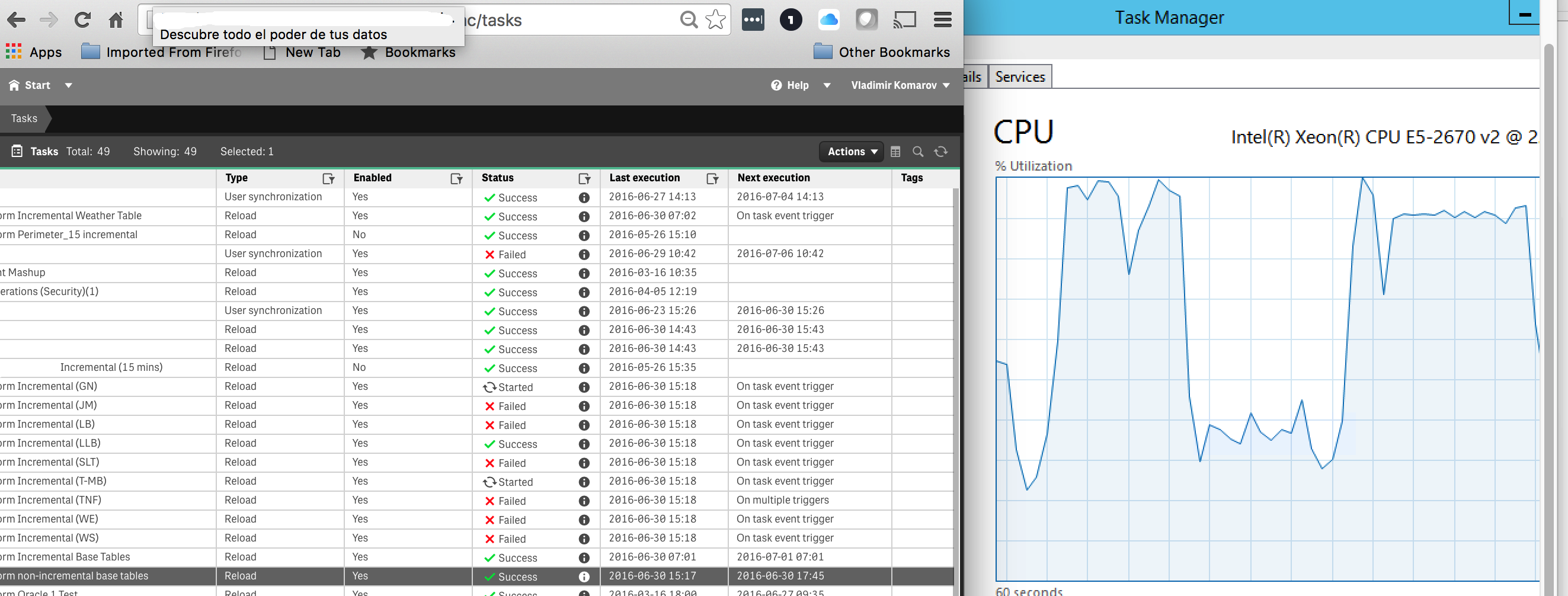

I tried changing the number of concurrent tasks (to 2, 4, and 6), and these reloads keep failing. It looks like a memory/resource issue, because more tasks fail when 6 jobs run simultaneously.

All tasks completed successfully when I set Max Concurrent loads to 1!

Is there a bug in the system that prevents these scripts from completing when it reaches 100% memory and CPU utilization? That happens pretty often here, since we run heavy data loads.

This workaround (setting Max Concurrent loads to 1) significantly delays our daily reload process, so the issue needs to be resolved.

I would appreciate any suggestions on what to do about this issue.

Regards,

Vlad


I'm not sure Qlik is responsible for this failure. I would rather suspect that Windows as the OS and/or your network or storage system can't handle all these threads at the same time: at some point you may reach the maximum number of (storing) handles that can be held in the queue, and/or some timeouts may occur. Maybe there are settings that could be configured for this.

Otherwise you will need to reduce the maximum number of concurrent tasks and/or add some delaying mechanism. That would mean putting the STORE statement inside a loop that checks whether the other QVDs have already been written/updated, perhaps with FileTime() or similar, and then delays the store with a SLEEP statement (although I think it would be rather hard to build something stable with such an approach).
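Marcus's delaying idea could look roughly like the following sketch in Qlik Sense load script. It polls FileTime() on another task's QVD and only runs the STORE once that file's timestamp has stopped changing between two checks. The path lib://Transformations/_Other.qvd, the table MyTable, the 5-second interval and the 12-check limit are hypothetical placeholders. As Marcus notes, this kind of sequencing is hard to make stable, partly because a file's modification time may not change exactly when you expect while the file is still being written.

```
// Hypothetical sketch: delay a STORE until another task's QVD has stopped changing.
// vOtherQvd, MyTable and the target path are placeholders, not names from this thread.
LET vOtherQvd = 'lib://Transformations/_Other.qvd';
LET vAttempt = 0;
// Last-modified time of the other QVD as text (Alt() substitutes 0 if the file is missing)
LET vPrevTime = Timestamp(Alt(FileTime('$(vOtherQvd)'), 0), 'YYYYMMDDhhmmss');

DO
    SLEEP 5000;                                   // pause 5 seconds between checks
    LET vAttempt = $(vAttempt) + 1;
    LET vCurrTime = Timestamp(Alt(FileTime('$(vOtherQvd)'), 0), 'YYYYMMDDhhmmss');
    LET vStable = If('$(vCurrTime)' = '$(vPrevTime)', 1, 0);   // 1 = unchanged since last check
    LET vPrevTime = '$(vCurrTime)';
LOOP UNTIL $(vStable) = 1 OR $(vAttempt) >= 12   // give up after 12 checks (about 1 minute)

STORE MyTable INTO 'lib://Transformations/_MyTable.qvd' (QVD);
```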

- Marcus


Marcus,

I like your idea (an issue with the number of available handles), but I don't think it applies in this case.

These tasks run fine when I set the maximum number of simultaneous tasks to 1 or (usually) 2.

Setting this value to 3 causes load errors most of the time.

I doubt that Qlik Sense can't handle files for more than 2 tasks at the same time (even counting all the logs and other processes that access the files).

My case looks more like a system resource limitation (memory in particular). I'm just surprised that the system handles these situations so badly.

VK


Qlik Support confirmed this issue as a bug; similar cases had been reported before.

For tracking purposes: bug QLIK-58841, which should be fixed in Qlik Sense 3.1.1 (September 2016).

Regards,

Vlad


Hi Vlad, I will add my findings to your post too.

First, let me explain my theory on what is going on:

Qlik Sense tasks are not terminating the execution of the load script properly, and the STORE command is still running after the task has already been terminated. In some way, that is locking the process: the STORE command does not hold the task back from terminating.

What I did was add this holding time to the load script with the following code, right after the STORE command:

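```
DO
    SLEEP 5000;    // wait 5 seconds per cycle
    // QvdNoOfRecords returns NULL while the QVD is still open for writing
    LET _fwMessage = QvdNoOfRecords ('lib://My Library\myqvdfile.qvd');
    TRACE $(_fwMessage);
LOOP WHILE (LEN('$(_fwMessage)') = 0)
```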

QvdNoOfRecords returns NULL while the QVD file is still open and being written with data from your load script. Once the file is ready, the code proceeds as normal and the task terminates.

So far I have tested this more than 2,000 times, loading more than 2,000 QVD files and about 1 TB of data with 10 tasks running at the same time, without any errors.

I hope this helps. If you test this and still get errors, please let us know.
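If the QVD never becomes readable, the loop above never exits and the task hangs. A minimal sketch of the same polling idea with an upper bound on the number of cycles could look like this; the variable names vQvdPath, vAttempt and vMaxAttempts and the limit of 24 cycles (about 2 minutes) are illustrative assumptions, not part of the original post.

```
// Sketch: the same polling loop with an upper bound on the number of cycles.
// vQvdPath and vMaxAttempts are placeholders; adjust them to your environment.
LET vQvdPath = 'lib://My Library\myqvdfile.qvd';
LET vMaxAttempts = 24;     // 24 cycles x 5 s = about 2 minutes
LET vAttempt = 0;

DO
    SLEEP 5000;                                   // wait 5 seconds per cycle
    LET vAttempt = $(vAttempt) + 1;
    // NULL (empty after expansion) while the QVD is still open for writing
    LET _fwMessage = QvdNoOfRecords('$(vQvdPath)');
    TRACE Cycle $(vAttempt): QvdNoOfRecords = $(_fwMessage);
LOOP WHILE Len('$(_fwMessage)') = 0 AND $(vAttempt) < $(vMaxAttempts)

IF Len('$(_fwMessage)') = 0 THEN
    // The QVD is still not readable; decide here whether the task should fail.
    TRACE QVD still not readable after $(vMaxAttempts) cycles;
END IF
```

The bound trades an indefinite wait for an explicit decision point; what to do after the last cycle (log and continue, or stop the reload) depends on how downstream tasks use the QVD.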

Regards,

Mark Costa

Read more at Data Voyagers - datavoyagers.net

Follow me on my LinkedIn | Know IPC Global at ipc-global.com


Mark,

Thank you for the suggestion.

I've updated my code with the script you suggested, and I was able to run my whole load process (with 4 tasks allowed at a time).

I will keep testing these processes, but it looks like you are right about this issue.

Here is an example from the log of a task that used to fail:

It took 10 (!!!) cycles (50 seconds!!!) to close the QVD!

The output QVD contains 21,859,841 records.

```
"8/4/2016 3:12:12 AM| G_Perimeter STORE process completed. SLEEP loop is starting"
"8/4/2016 3:12:17 AM| G_Perimeter Loop. QVDNoOfRecords = "
"8/4/2016 3:12:22 AM| G_Perimeter Loop. QVDNoOfRecords = "
"8/4/2016 3:12:27 AM| G_Perimeter Loop. QVDNoOfRecords = "
"8/4/2016 3:12:32 AM| G_Perimeter Loop. QVDNoOfRecords = "
"8/4/2016 3:12:37 AM| G_Perimeter Loop. QVDNoOfRecords = "
"8/4/2016 3:12:42 AM| G_Perimeter Loop. QVDNoOfRecords = "
"8/4/2016 3:12:47 AM| G_Perimeter Loop. QVDNoOfRecords = "
"8/4/2016 3:12:52 AM| G_Perimeter Loop. QVDNoOfRecords = "
"8/4/2016 3:12:57 AM| G_Perimeter Loop. QVDNoOfRecords = "
"8/4/2016 3:13:02 AM| G_Perimeter Loop. QVDNoOfRecords = 21859841"
"8/4/2016 3:13:02 AM| G_Perimeter Increment load completed"
"8/4/2016 3:13:02 AM| G_Perimeter_ Table Num of Records = 21,859,841"
```

Another big QVD (45,710,996 records) was closed after just 4 cycles, but by the time that load finished, all the other QVD generators had already completed.

I will definitely escalate this case to Support, and I hope they fix it ASAP.

If the "Qlik Sense Tasks are not terminating the execution of the load script properly and the STORE command still running while the task was already terminated" event is in fact occurring, it qualifies as a MAJOR bug in my book :-).

Regards,

Vladimir


Just so you know, this bug was not fixed in Qlik Sense 3.1 R1 or 3.1.1.