AWS S3 Storage and Databricks Data Connection

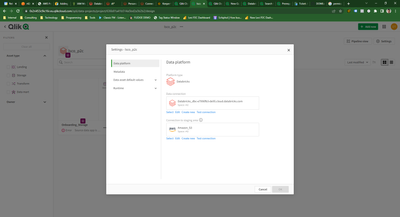

I have configured a Data Connection with Databricks and AWS S3 Storage as per the screenshot below:

I tested both connections and they were successful.

When I execute "Prepare" as per screenshot below:

I get the following error:

Failed to prepare data app 'Onboarding_Landing' in project 'lsco_p2c' ('636b81a41b514a5bd2a3b2b2') - 'message: QDI-DW-STORAGE-ENDPOINT-SYNTAX-ERROR, SQL syntax error, s3://r42qlik/lsco_p2c/onboarding_landing/jc_p2c_final_7_days_all_time: getFileStatus on s3://r42qlik/lsco_p2c/onboarding_landing/jc_p2c_final_7_days_all_time: com.amazonaws.services.s3.model.AmazonS3Exception: Forbidden; request: HEAD https://r42qlik.s3.eu-central-1.amazonaws.com lsco_p2c/onboarding_landing/jc_p2c_final_7_days_all_time {} Hadoop 3.3.2, aws-sdk-java/1.12.189 Linux/5.4.0-1086-aws OpenJDK_64-Bit_Server_VM/25.345-b01 java/1.8.0_345 scala/2.12.14 vendor/Azul_Systems,_Inc. cfg/retry-mode/legacy com.amazonaws.services.s3.model.GetObjectMetadataRequest; Request ID: RF82360G030ECDF2, Extended Request ID: GCtFNnHcr/0FUP0uJ43MyMxviVTEllVwXxd5YKrzP1xRlBSB+5y/mEy9HlI4gGBcyBTHnR8ST2f1dOzQbs6Ydg==, Cloud Provider: AWS, Instance ID: i-034c0c5312d5de1c1 (Service: Amazon S3; Status Code: 403; Error Code: 403 Forbidden; Request ID: RF82360G030ECDF2; S3 Extended Request ID: GCtFNnHcr/0FUP0uJ43MyMxviVTEllVwXxd5YKrzP1xRlBSB+5y/mEy9HlI4gGBcyBTHnR8ST2f1dOzQbs6Ydg==; Proxy: null), S3 Extended Request ID: GCtFNnHcr/0FUP0uJ43MyMxviVTEllVwXxd5YKrzP1xRlBSB+5y/mEy9HlI4gGBcyBTHnR8ST2f1dOzQbs6Ydg==:403 Forbidden.,traceId:1128fdb4d1bcc8b0a85c818b53fd5714'
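The error text is long, so it can help to pull out the few fields that matter for troubleshooting. The following is a minimal sketch, not part of the original post: the function name `summarize_s3_error` and the regular expressions are illustrative assumptions, and `ERROR_TEXT` is a shortened copy of the message above.

```python
import re

# Shortened copy of the error text from the post (only the parts the patterns need).
ERROR_TEXT = (
    "getFileStatus on s3://r42qlik/lsco_p2c/onboarding_landing/jc_p2c_final_7_days_all_time: "
    "com.amazonaws.services.s3.model.AmazonS3Exception: Forbidden; "
    "request: HEAD https://r42qlik.s3.eu-central-1.amazonaws.com "
    "lsco_p2c/onboarding_landing/jc_p2c_final_7_days_all_time {} "
    "(Service: Amazon S3; Status Code: 403; Error Code: 403 Forbidden; "
    "Request ID: RF82360G030ECDF2)"
)


def summarize_s3_error(text):
    """Extract bucket, key, HTTP method, region, and status code from the error text.

    Hypothetical helper: the patterns are written against this one message format.
    """
    uri = re.search(r"s3://([^/\s]+)/([^\s:]+)", text)
    request = re.search(
        r"request: (\w+) https://[^.\s]+\.s3\.([a-z0-9-]+)\.amazonaws\.com", text
    )
    status = re.search(r"Status Code: (\d{3})", text)
    return {
        "bucket": uri.group(1) if uri else None,
        "key": uri.group(2) if uri else None,
        "method": request.group(1) if request else None,
        "region": request.group(2) if request else None,
        "status": int(status.group(1)) if status else None,
    }


summary = summarize_s3_error(ERROR_TEXT)
print(summary)
```

Read this way, the failing call is a `HEAD` request on a key inside the `r42qlik` bucket in `eu-central-1`, rejected with status 403.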

As the error suggests a permission issue with the AWS S3 bucket r42qlik, I made sure that it had the following bucket policy:

    {
      "Version": "2012-10-17",
      "Id": "Policy1668006475802",
      "Statement": [
        {
          "Sid": "Stmt1668006452163",
          "Effect": "Allow",
          "Principal": {
            "AWS": "arn:aws:iam::357942615838:user/tamster"
          },
          "Action": [
            "s3:DeleteObject",
            "s3:GetBucketLocation",
            "s3:GetObject",
            "s3:ListBucket",
            "s3:PutObject"
          ],
          "Resource": [
            "arn:aws:s3:::r42qlik",
            "arn:aws:s3:::r42qlik/*"
          ]
        }
      ]
    }

When configuring AWS S3 Storage, I used the access key and secret key of the principal above.
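One way to sanity-check the policy is to test it offline against the request that failed. The sketch below is a deliberately simplified check, not how AWS evaluates policies: it only looks at `Allow` statements in this one bucket policy and ignores `Deny` statements, `Condition` blocks, IAM identity policies, and case-insensitive action matching. The function name `policy_allows` is a hypothetical helper. A `HEAD` on an object needs `s3:GetObject`, so that is the action tested.

```python
from fnmatch import fnmatchcase

# The bucket policy from the post.
POLICY = {
    "Version": "2012-10-17",
    "Id": "Policy1668006475802",
    "Statement": [
        {
            "Sid": "Stmt1668006452163",
            "Effect": "Allow",
            "Principal": {"AWS": "arn:aws:iam::357942615838:user/tamster"},
            "Action": [
                "s3:DeleteObject",
                "s3:GetBucketLocation",
                "s3:GetObject",
                "s3:ListBucket",
                "s3:PutObject",
            ],
            "Resource": ["arn:aws:s3:::r42qlik", "arn:aws:s3:::r42qlik/*"],
        }
    ],
}


def _as_list(value):
    """Policy fields may hold a single string or a list; normalize to a list."""
    return value if isinstance(value, list) else [value]


def policy_allows(policy, principal_arn, action, resource_arn):
    """Return True if some Allow statement matches principal, action, and resource.

    Simplified assumption: Deny statements, Conditions, and identity policies
    are not considered, so True here does not guarantee access in AWS.
    """
    for stmt in _as_list(policy.get("Statement", [])):
        if stmt.get("Effect") != "Allow":
            continue
        principal = stmt.get("Principal", {})
        principals = ["*"] if principal == "*" else _as_list(principal.get("AWS", []))
        if not any(p == "*" or p == principal_arn for p in principals):
            continue
        if not any(fnmatchcase(action, a) for a in _as_list(stmt.get("Action", []))):
            continue
        if any(fnmatchcase(resource_arn, r) for r in _as_list(stmt.get("Resource", []))):
            return True
    return False


USER = "arn:aws:iam::357942615838:user/tamster"
OBJECT = "arn:aws:s3:::r42qlik/lsco_p2c/onboarding_landing/jc_p2c_final_7_days_all_time"

print(policy_allows(POLICY, USER, "s3:GetObject", OBJECT))
```

Under this simplified reading the policy does cover the failing request for the `tamster` user. The user agent in the error (Hadoop, aws-sdk-java) and the instance ID suggest the request was issued from the Databricks side, so it may be worth checking which identity that side actually used.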

Any help appreciated.

@tamster, please feel free to create a support case for further investigation. Please provide the tenant ID, timestamp, space ID, etc.

Unfortunately, as I'm on a trial, I do not have the option to create a support case or add comments to one directly. I did receive a response from support, under case number 00059510, with the following comments:

As per the documentation, when using Databricks on AWS with tables without a primary key, reloading the tables in the landing will fail in the Storage app. The create statement does not have any PK.

To resolve this you can either:

- Define a primary key in the tables.

- Set spark.databricks.delta.alterTable.rename.enabledOnAWS to True in Databricks.

Source: https://help.qlik.com/en-US/cloud-services/Subsystems/Hub/Content/Sense_Hub/DataIntegration/TargetCo...
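For the second option, a minimal sketch of setting the flag from a Databricks notebook is shown here. It assumes the notebook's predefined `spark` session and uses the standard `spark.conf.set` / `spark.conf.get` calls; the function name `enable_rename_on_aws` is hypothetical. The thread does not confirm whether a session-level setting is enough or whether the flag has to go into the cluster's Spark configuration.

```python
# Flag name taken from the support response above.
RENAME_FLAG = "spark.databricks.delta.alterTable.rename.enabledOnAWS"


def enable_rename_on_aws(spark):
    """Set the flag on the given Spark session and return the value read back."""
    spark.conf.set(RENAME_FLAG, "true")
    return spark.conf.get(RENAME_FLAG)


# In a Databricks notebook, where `spark` is predefined:
# enable_rename_on_aws(spark)
```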

I have yet to try this. However, I have since been able to upload data from Databricks without setting up an AWS S3 staging bucket, so I'm wondering what the purpose of the S3 bucket is.