Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- Support

- :

- Support

- :

- Knowledge

- :

- Member Articles

- :

- How to read .json files with Qlik Sense

- Move Document

- Delete Document

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

How to read .json files with Qlik Sense

- Move Document

- Delete Document and Replies

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

How to read .json files with Qlik Sense

Sep 20, 2023 11:54:36 AM

Sep 26, 2023 2:19:30 PM

Before you start reading: The way to import .json with Qlik Sense script is undocumented and may still change at any time without notice.

Qlik script natively reads JSON (JavaScript Object Notation), it is a bit tricky and slower for large files - and an import wizard is missing, which would create the script blocks.

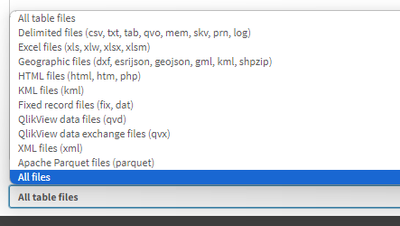

When you click on Add data in the connection pane, the file dialog for the folder will show no specific filter for .json files:

Choose "All files" and then select your .json file. Next you will see, that the wizard attempts to interpret it as csv. Pointless, but at least you get the FROM construct properly filled. Forget the rest, as we will replace it in a minute.

Just hit "Insert script" and then put LOAD * in front of FROM, and instead of (txt ...) put (json):

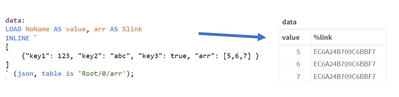

data:

LOAD *

FROM [lib://:Google_Drive/119UHVSR9_JjkT9o-mz2NAO1xyS1xU56f/01.json]

(json);

Not too complicated so far. If your .json file only has one root object (or one root array of objects) and you will already get the keys from the top level. You are already done.

Now lets learn more about the parsing!

Simple JSON Object

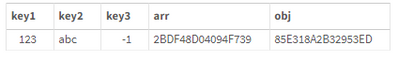

A singe json file containing only one object ...

{"key1": 123, "key2": "abc", "key3": true, "arr": [], "obj": {} }

... will result in one line of data.

- Keys with simple values will be 1:1 loaded into a Qlik Sense field

- arrays and sub-objects will get a 16 char hash-value (I will explain below)

Multi-elements JSON File

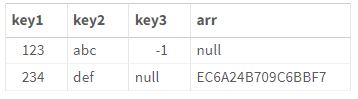

If the json file has multiple "rows" (objects in an outer array [ ... ]) you automatically will get multiple rows in Qlik.

[

{"key1": 123, "key2": "abc", "key3": true}

, {"key1": 234, "key2": "def", "arr": [5,6,7]}

]

The arrays can have different keys. Common keys will concatenate in the same field, whereas keys missed out in a given 'row' will read "null" (unfortunately as text, not as Null()) in the result.

Now lets look, how to deal with sub-objects and arrays? For this we need understand the table is construct and the hash values.

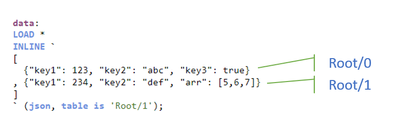

- The root element has the pseudo-table name "Root"

- Every element in an array has an offset, starting with 0, even the outmost array. Hence, the first "row" above you would get with "Root/0"

- An object or an array will come back as a hash-value

- To get the object or array contents itself, alter the "table is" construct to address this key, e.g. in above example "Root/1/arr"

- If it is an array, you need to use the Pseudo key NoName to get the value and the key itself to get the hash value to link it to its parent

Importing JSON sub-arrays

That is fine for one row, but if you have a json file with many rows, you don't want to call every line with "Root/0/arr", "Root/1/arr", "Root/2/arr" ...

The good thing: You don't have to do it yourself. This article is explaining how to load .json by hand. If you want a quick solution, this one is for you: https://github.com/ChristofSchwarz/QlikScripts/tree/master/json

Assuming a .json file with this content

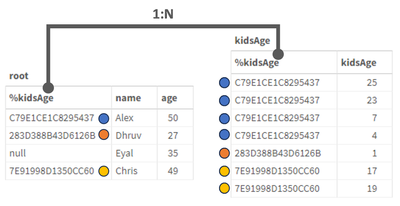

[

{"name": "Alex" , "age": 50, "kidsAge": [25,23,7,4] }

, {"name": "Dhruv" , "age": 27, "kidsAge": [1] }

, {"name": "Eyal" , "age": 35, "kidsAge": [] }

, {"name": "Chris" , "age": 49, "kidsAge": [17,19] }

]

We will introduce a FOR loop now. Note, if an array is empty [] or is null, the LOAD command inside the Loop would fail on that "row", so we set ErrorMode to 0 (=continue script upon error) and reset it to the default 1 (=fail on error) after the NEXT command

SET vFile = [lib://.../filename.json];

root:

LOAD

name, age, kidsAge AS %kidsAge

FROM [$(vFile)] (json, table is 'Root');

SET ErrorMode = 0;

FOR v = 0 TO NoOfRows('root') - 1

kidsAge:

LOAD DISTINCT

kidsAge AS %kidsAge,

NoName AS kidsAge

FROM [$(vFile)] (json, table is 'Root/$(v)/kidsAge');

NEXT v

SET ErrorMode = 1;

The result is a nice 1:N relation resolved.

The fieldname "kidsAge" also exists on the inner LOAD (next to "NoName") and it returns - yes - the same hashvalue as in the outer, first LOAD.

Importing Json sub-objects

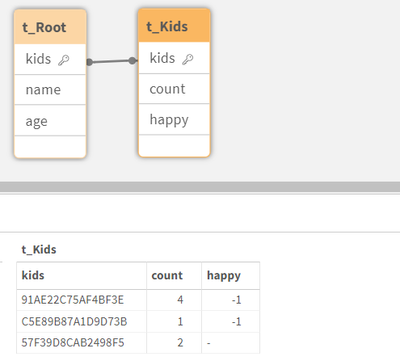

Last example is for a sub-object (instead of the sub-array): Similarily, you get hashes on the parent level and the content itself when addressing it right with the "table is" position. A FOR loop will ease things again, plus some more logic.

In this .json file we have one row where "kids" is null and the subobject doesn't always contain the key "happy"

[

{"name": "Alex" , "age": 50, "kids": {"count": 4, "happy": true } }

, {"name": "Dhruv" , "age": 27, "kids": {"count": 1, "happy": true } }

, {"name": "Eyal" , "age": 35, "kids": null}

, {"name": "Chris" , "age": 49, "kids": {"count": 2 } }

]

We can't count on auto-concatenate any more for the sub-objects (row 4 would end up in a different table than rows 1 and 2). Also, without error-tolerance, the 3rd row import would fail. So we go for this script, where we create an empty table for the "kids" and then explicitly concatenate into it. We have to use the LOAD * functionality, because the structure of the subobjects is not identical throughout the main array. (Assuming you set the vFile yourself)

SET vFile = [lib://..../filename.json];

t_Root:

LOAD // outer load

*

FROM [$(vFile)] (json, table is 'Root');

SET ErrorMode = 0;

t_Kids: LOAD * INLINE [kids]; // start empty table

FOR v = 0 TO NoOfRows('t_Root') - 1

CONCATENATE(t_Kids)

LOAD DISTINCT // inner load

*

FROM [$(vFile)] (json, table is 'Root/$(v)/kids');

NEXT v

SET ErrorMode = 1;

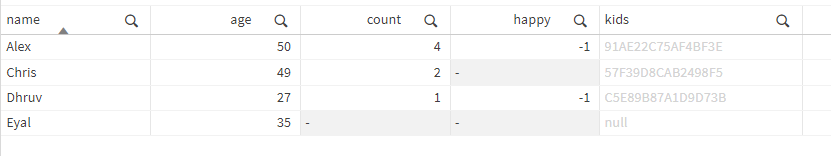

We will get now two tables, that are 1:1 related (or 1:1 or 0, to be precise)

Note: the inner LOAD * will return the "kids" as a field, too, containing the hash that links to the first table

The result can easily be used now in the Client

Uff, it can get tricky, but next week I will show you that all the manual steps (loop, field aliasing, concatenating) could be solved by a recursive SUB.

I hope though, that Qlik will also finish a wizard, that does that parsing on import like we have it for JSON objects received via the REST Connection or, likewise, as XML files are parsed.

Conclusion

Pros

- A surprise and already a good starting point for importing .json natively

- Everything can be imported using "table is" directive

Cons

- Quite some script logic needed to parse nested JSON objects

- Can be slow

- Still undocumented and may change in future

- Move Comment

- Delete Comment

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

And since October 29th, SaaS brings native support for loading JSON!

- Move Comment

- Delete Comment

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

...and as of 2025-May version, the client-managed Qlik Sense has native JSON too.

https://community.qlik.com/t5/Release-Notes/Sense-Enterprise-on-Windows-release-notes-May-2025-Initi...

The possibility to load multiple JSON files, even when their structures differ, is pretty neat!