Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- Forums

- :

- Analytics

- :

- New to Qlik Analytics

- :

- Re: Issue in loading Multiple tables with importan...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Issue in loading Multiple tables with important fields that link to one dimension table

Hi,

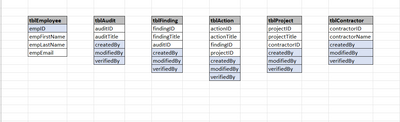

I am, making a BI App which gets data from an MIS software. Its a large application and I am, working on one segment of it. The application has a lot of audit fields i.e. createdBy, modifiedBy, verifiedBy etc that span across multiple tables and they all have the "EmployeeID" in it. While loading data to my BI App, I have tried a few things but all in vain as they bring up synthetic keys or loops in the data model. The db structure is as follows (there are more tables also will be linked later on but currently these are the few main tables that I have to work with):

I need to come up with an analysis that will show user working. for e.g. if I select an employee XYZ the visualizations should be able to show how many audits were created / modified / verified by this user, how many actions were created / modified / verified by this user, and so on.

Any help is appreciated in this regard.

Thanks.

- Subscribe by Topic:

-

Data Load Editor

-

dimension

-

General Question

-

Script

-

Visualization

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @mansoorsheraz , one option is create a unique structure with all the tables, please check this example.

Employee:

Load

empID,

empFirstName,

empLastName

Resident tblEmployee;

Data:

Load

'creator' as UserType,

createdBy as empID,

auditID,

audittitle

Resident tblAudit;

concatenate

Load

'modifier' as UserType,

modifiedBy as empID,

auditID,

audittitle

Resident tblAudit;

concatenate

Load

'verificator' as UserType,

verifiedBy as empID,

auditID,

audittitle

Resident tblAudit;

concatenate

tblFinding:

Load

'creator' as UserType,

createdBy as empID,

findingID,

findingTitle,

auditID

Resident tblFinding;

concatenate

Load

'modifier' as UserType,

modifiedBy as empID,

auditID,

audittitle,

auditID

Resident tblFinding;

concatenate

Load

'verificator' as UserType,

verifiedBy as empID,

auditID,

audittitle,

auditID

Resident tblFinding;

you can add the rest of tables using the same logic

And if it is too much data to be in just one table, you have to consider create a compound key using both fields 'UserType' and 'empID' to avoid the sinthetic key

exit script;

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes. This is exactly I was thinking as well could be a solution. Since the database is a big one and more tables will come into it so this well may be the solution. I was also thinking to have Audit, Findings and Actions also concatenated into one table , for instance, "audit, findings and actions" to avoid synthetic keys and loops issues that may come by having auditID, findingID in some of the other tables that will become part of this BI app in future. The MIS from which I am, fetching the data is highly normalized one with lots of composite keys in it. Will this be a good approach?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Within the most scenarios is a star-scheme data-model with a big fact-table and n dimension-tables regarded as best compromise to development/maintaining efforts, readability and performance. This means it's rather the opposite of normalization. Personally I start each project with this approach and mostly it worked well and doesn't need further adjustments or optimizations.

To go in this direction you could follow the from @QFabian suggested approach by concatenating the multiple tables. This might be even extended by harmonizing the ID and Title fields, too. The identification which ID has which content could be done with an extra Source field. Also various measure-fields like amount, sales, budget and so on could be loaded as Value and another extra field KPI contains the information what measure it is.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks @marcus_sommer , for your detailed explanation.

@mansoorsheraz , we can say that every App/Project has its own particularities, so you must think your data model case by case, please read this PDF from Qlik, is old but still useful.

https://community.qlik.com/t5/Chile/The-QlikView-Data-Model-Best-Practices/ta-p/1760708

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi I agree that every project has its own requirements and particularities but then again I think there could be some generic approach to solve highly normalized database that has a good number of tables in it.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for your reply. I have a question, can we two different disconnected data models working in one Qlik App? For example I can have all the user management related sheets presented through one cluster of tables and the remaining visualizations from another ? I can bring them in by renaming the fields e.g. ID Audit would be ID Audit in one cluster but it could be Audit ID in the other ? This may cause issues with the filters applied as they may not work but then another question is Can we have a filter selected based on the another e.g if 1010 is selected in the ID Audit filter, the same should be automatically select in the Audit ID filter?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The above mentioned approach of developing a data-model as star-scheme is IMO the most general approach. There won't be many scenarios in which it couldn't be - completely - applied.

Further is creating a star-scheme a rather simple process. Fact-tables are concatenated with a more or less degree of harmonization of the field-names and even quite asynchron structures with a lot of NULL's is not a big issue. Normalized dimension-tables could often just be joined/mapped and sometimes it's also practically to concatenate multiple ones.

IMO requires each other kind of data-model more efforts and knowledge without providing really benefits in regard to any performance KPI (there are exceptions but they require much experience).

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

In general it's possible to have multiple completely and/or partly independent data-models within a single application. But IMO it's a very bad idea because it may lead to a lot of trouble within the UI and the navigation and the efforts to create a stable and not confusing user-experience could be much higher as the entire ETL part in beforehand.

Ideally there are mostly simple objects with simple expressions like sum() or count() and all the essential logic is included within the data-model and the user just selects the wanted values. The use of multiple actions/macros to navigate through sheets and controlling selections/variable-values is the complete opposite of simple and easy usage and understanding. In this regard see: