How to: Getting started with the OpenAI Connector in Qlik Automate

May 28, 2025 10:20:21 AM

Jun 20, 2023 3:52:07 PM

This article explains how to get started with the OpenAI connector in Qlik Automate.

The OpenAI connector offers developers a range of powerful natural language processing capabilities. It allows for tasks such as text generation, translating between languages, analyzing sentiment, summarizing content, and building question-answering systems. These features enable you to bring additional value to your existing automations.

Content:

- Authentication

- Working with OpenAI blocks

- Use cases

- Tips and limitations

- A Deep Dive into Create Completion Block

Authentication

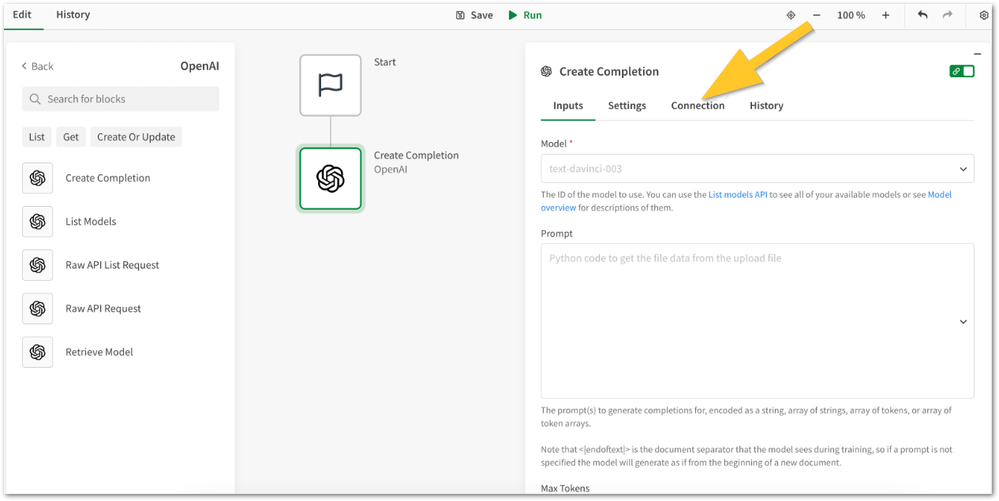

Create a new automation and search for the OpenAI connector in the block library on the left side. Drag a block onto the automation editor canvas and select it to show the block configuration menu on the right side of the editor. Open the Connect tab in the configuration menu and provide your OpenAI API key. You can retrieve the API key from the API Keys page of your OpenAI account.
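The connector handles authentication once the key is saved in the Connect tab. For context, here is a minimal sketch of how the same key is used when calling the OpenAI API directly: it is sent as a bearer token in the `Authorization` header. The helper name `build_auth_headers` and the `OPENAI_API_KEY` environment variable are assumptions for illustration, not part of Qlik Automate.

```python
import os


def build_auth_headers(api_key=None):
    """Return HTTP headers for a direct OpenAI API call.

    The key is sent as a bearer token. Falling back to the OPENAI_API_KEY
    environment variable is a convention assumed here, not a Qlik setting.
    """
    key = api_key or os.environ.get("OPENAI_API_KEY", "")
    if not key:
        raise ValueError("No OpenAI API key provided")
    return {
        "Authorization": f"Bearer {key}",
        "Content-Type": "application/json",
    }


if __name__ == "__main__":
    # Placeholder key for illustration only.
    print(build_auth_headers("sk-example"))
```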

Working with OpenAI blocks

Once the connection to your OpenAI account has been created, you can start building an automation that uses the connector.

The available blocks are:

- List Models: This block returns a list of available models, including their names, descriptions, and capabilities.

- Retrieve Model: This block returns detailed information about a specific model, including its parameters, training data, and performance metrics.

- Create Completion: This block generates text using a model. The request body includes the prompt and the model parameters. The response body includes the generated text. More information about each parameter in this block can be found at the end of this article.

- Raw API Request: This block allows you to make a generic request to the OpenAI API and allows you to configure the HTTP method and query or body parameters.

- Raw API List Request: This block lets you perform a generic GET request to the OpenAI API and returns the results as a list over which you can loop.
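To relate the blocks above to the underlying API, the sketch below maps each named block to the public OpenAI REST endpoint it most plausibly calls. The mapping is an assumption based on the public API paths, not a confirmed description of the connector's internals, and `BLOCK_ENDPOINTS`, `request_for_block`, and `model_ids` are hypothetical names.

```python
BASE_URL = "https://api.openai.com/v1"

# Assumed mapping from connector block names to OpenAI endpoints.
BLOCK_ENDPOINTS = {
    "List Models": ("GET", "/models"),
    "Retrieve Model": ("GET", "/models/{model}"),
    "Create Completion": ("POST", "/completions"),
}


def request_for_block(block, **path_params):
    """Return (method, url) for a block name, filling in path parameters."""
    method, path = BLOCK_ENDPOINTS[block]
    return method, BASE_URL + path.format(**path_params)


def model_ids(list_models_response):
    """Extract model ids from a List Models style response.

    The API wraps all records in a single "data" array.
    """
    return [item["id"] for item in list_models_response.get("data", [])]


if __name__ == "__main__":
    print(request_for_block("Retrieve Model", model="text-davinci-003"))
```

A Raw API Request block would be configured with the same kind of method and path pair for endpoints that have no dedicated block.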

For more details on the API, please refer to the OpenAI API reference.

At the time of writing this article, the Images and Audio endpoints in the OpenAI API are in beta state but can be used through the Raw API Request blocks.

Use cases

Sentiment analysis and response suggestions for incidents in ServiceNow

This use case is based on the existing template "Analyze support ticket sentiment with Expert.ai". In this template, Expert.ai is used to predict the sentiment of new support tickets from ServiceNow. If the sentiment is deemed too negative, the automation will send an alert to a Microsoft Teams channel to inform the support team about the incident. For convenience, we'll leave out the write to MySQL and app reload part of the original template.

If you want, you could also use OpenAI to predict the sentiment instead of Expert.ai. Keep in mind that this could produce less accurate results than Expert.ai.

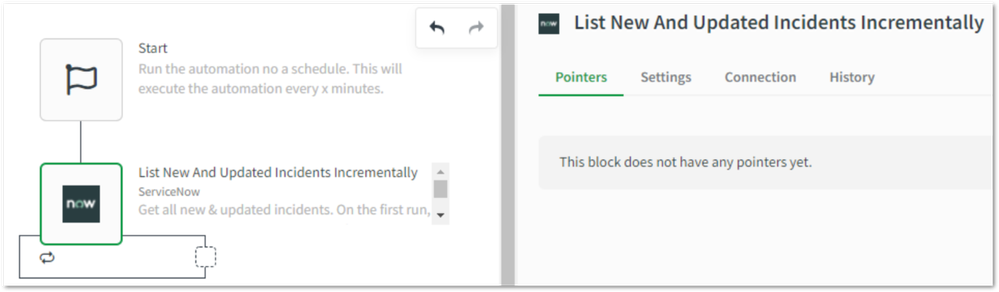

- Go to your Qlik Sense tenant and create a new automation.

- Search for the ServiceNow connector and drag the List New And Updated Incidents Incrementally block into the automation. When this block is executed for the first time, it retrieves all incident records from ServiceNow and sets a pointer with the DateTime of its last execution. On subsequent executions, the block only retrieves incidents that were created or updated after the pointer, and then updates the pointer. Go to the block's Connection tab and connect your ServiceNow account.

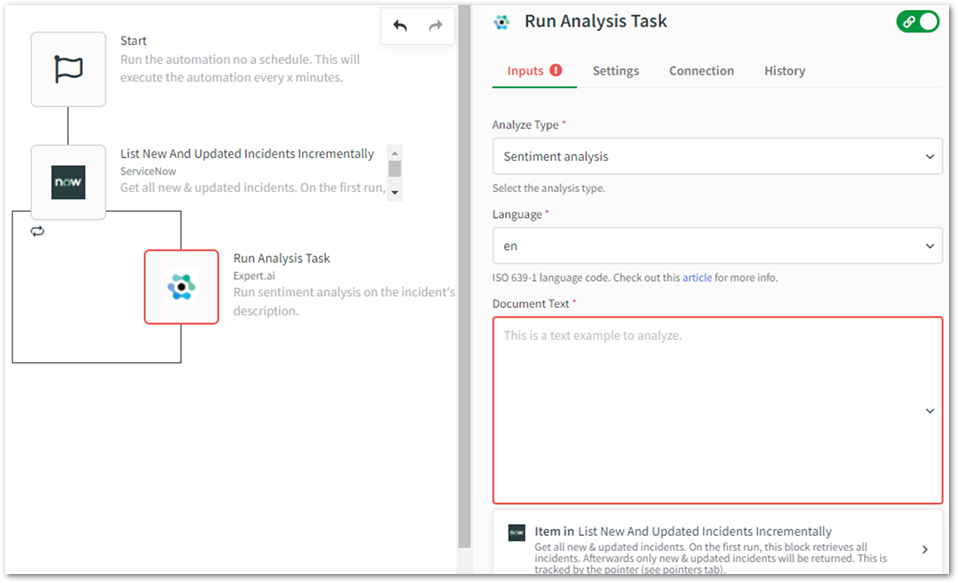

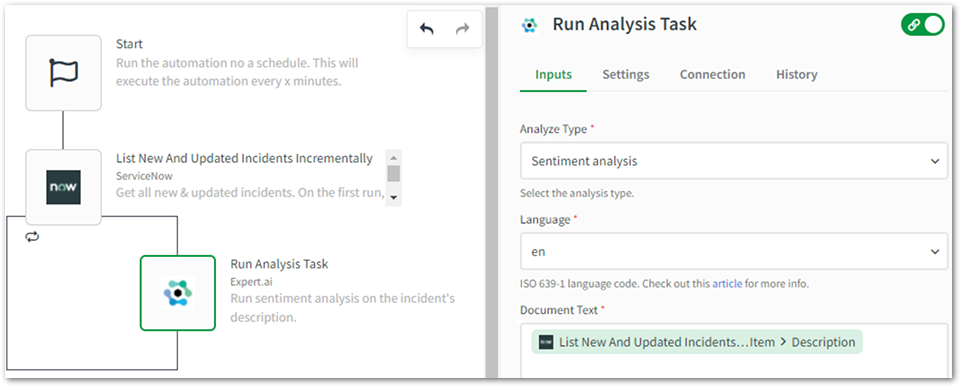
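The pointer behavior of the List New And Updated Incidents Incrementally block described above can be pictured with the following sketch. It is a simplified in-memory model, not the connector's implementation; the `updated_at` key and the function name are assumptions for illustration.

```python
from datetime import datetime, timezone


def list_new_and_updated(records, pointer, now=None):
    """Return records changed after `pointer`, plus the new pointer value.

    `records` is a list of dicts with an "updated_at" datetime. A pointer of
    None means "first run", so every record is returned. The new pointer is
    the time of this execution.
    """
    now = now or datetime.now(timezone.utc)
    if pointer is None:
        changed = list(records)
    else:
        changed = [r for r in records if r["updated_at"] > pointer]
    return changed, now


if __name__ == "__main__":
    incidents = [
        {"id": 1, "updated_at": datetime(2023, 6, 1, tzinfo=timezone.utc)},
        {"id": 2, "updated_at": datetime(2023, 6, 10, tzinfo=timezone.utc)},
    ]
    first_run, pointer = list_new_and_updated(
        incidents, None, now=datetime(2023, 6, 5, tzinfo=timezone.utc)
    )
    second_run, pointer = list_new_and_updated(incidents, pointer)
    print(len(first_run), len(second_run))
```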

- Search for the Expert.ai connector and drag the Run Analysis Task block inside the loop. Go to the block's Connection tab and connect your Expert.ai account. Then go to the Inputs tab to further configure the block. Set Analyze Type to "Sentiment analysis" and set the language to "en" for English. Feel free to configure another language depending on your support portal. Finally, set the Document Text parameter to the Description key of the current item in the loop from the List New And Updated Incidents Incrementally block.

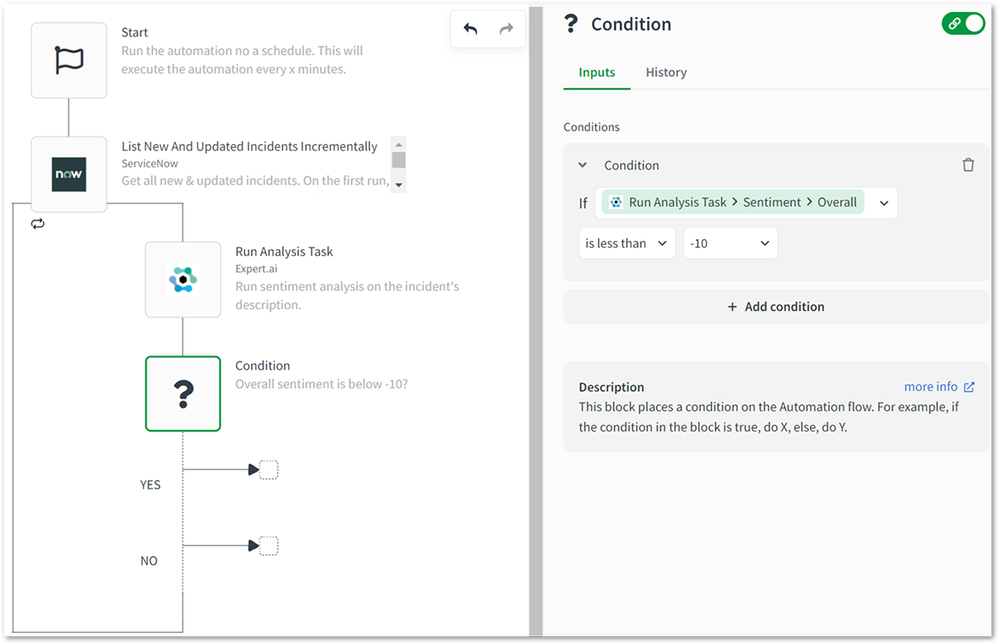

- We want to make a distinction between positive and negative sentiment. Add a Condition block to the automation, configure the overall sentiment (returned by Expert.ai) as the first argument, and compare it to a threshold of your choosing. In this example, we're using -10 as the lower threshold for "very" negative sentiment. Note: the threshold needs to be a number between -100 (negative) and 100 (positive).

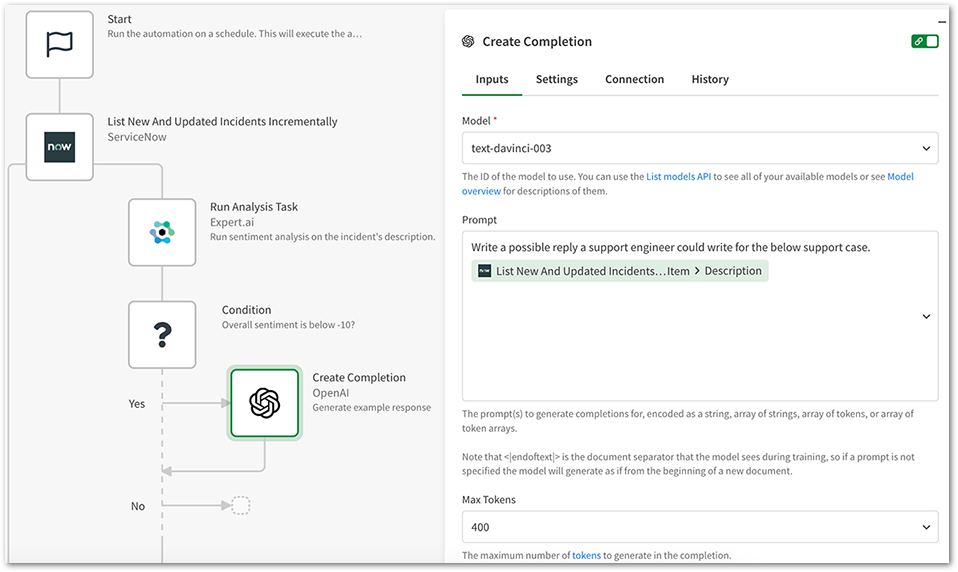
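The comparison configured in the Condition block above amounts to a simple threshold check. The sketch below assumes a "less than" comparison and uses the -10 threshold from this example; the function name is hypothetical.

```python
def is_very_negative(overall_sentiment, threshold=-10):
    """Return True when the sentiment score falls below the threshold.

    Both values are expected on the -100 (negative) to 100 (positive) scale.
    """
    if not -100 <= threshold <= 100:
        raise ValueError("threshold must be between -100 and 100")
    return overall_sentiment < threshold


if __name__ == "__main__":
    for score in (-35.5, -10, 12):
        print(score, is_very_negative(score))
```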

- Add the Create Completion block from the OpenAI connector as the first block in the Yes part of the Condition block. Configure the inputs as follows:

- Model: text-davinci-003

- Prompt: "Write a possible reply a support engineer could write for the below support case." Then add a line break and map the description from the ServiceNow incident.

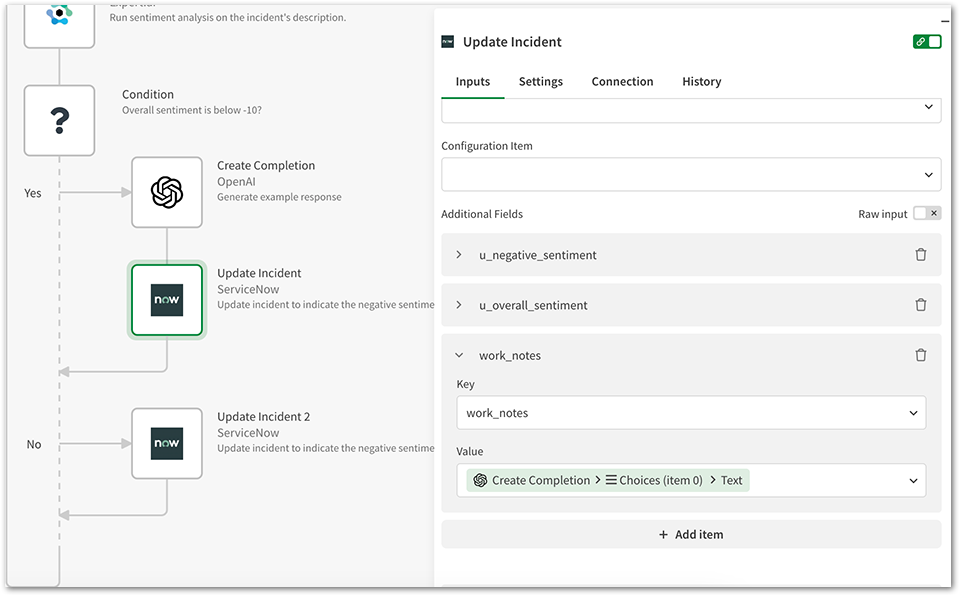
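The prompt configured above is the fixed instruction, a line break, and the incident description. The sketch below shows that concatenation, together with the left trim that this walkthrough later recommends for the completion text. Function names are hypothetical, and the sample description is made up for illustration.

```python
INSTRUCTION = (
    "Write a possible reply a support engineer could write "
    "for the below support case."
)


def build_prompt(description):
    """Combine the fixed instruction and the incident description."""
    return f"{INSTRUCTION}\n{description}"


def left_trim(completion_text):
    """Remove leading whitespace, including line breaks, from a completion."""
    return completion_text.lstrip()


if __name__ == "__main__":
    print(build_prompt("The dashboard fails to load after the last update."))
    print(left_trim("\n\nThank you for reaching out."))
```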

- Search for the Update Incident block in the ServiceNow connector. This block will write the sentiment back to the incident in ServiceNow. Add it to the automation twice (one block for every outcome of the Condition block). Instead of immediately writing the suggested response as a reply to the customer who logged the incident, add it as an internal "work note" to the incident. For this example, we've added 2 custom fields to the Incident object in ServiceNow: one to store the overall sentiment and one boolean (Yes/No) that indicates if the incident has a sentiment lower than our threshold. More information on custom fields in ServiceNow can be found here.

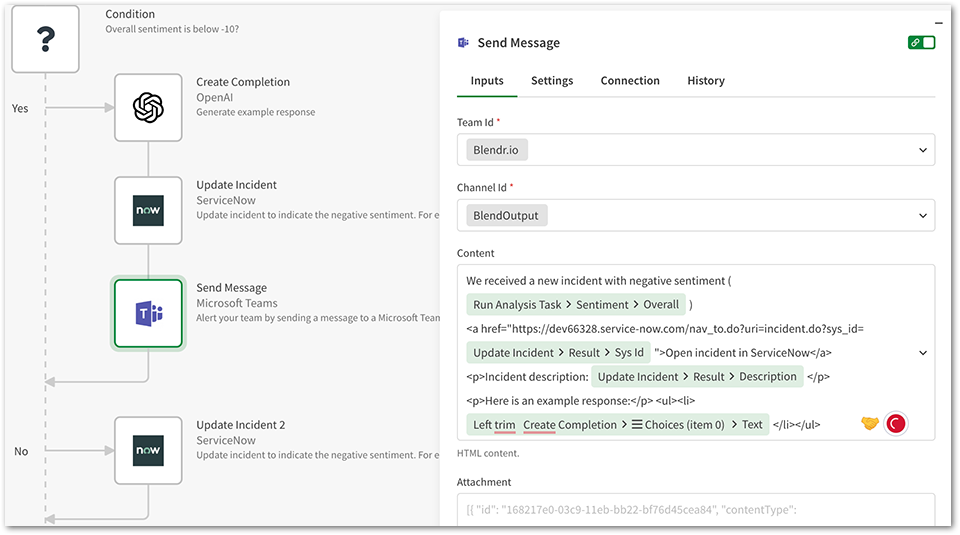

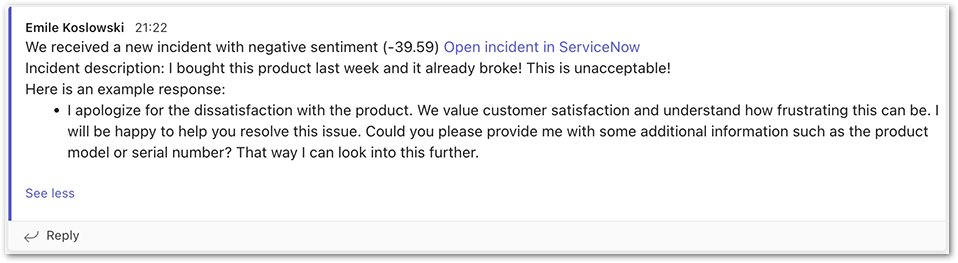
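The values written back by the two Update Incident blocks can be sketched as a small payload builder. The custom field names `u_overall_sentiment` and `u_negative_sentiment` are hypothetical (the article does not name them), while `work_notes` is the standard ServiceNow field for internal notes. Passing a suggested reply corresponds to the Yes branch; omitting it corresponds to the No branch.

```python
def build_incident_update(overall_sentiment, threshold, suggested_reply=None):
    """Build the fields to write back to a ServiceNow incident.

    u_overall_sentiment and u_negative_sentiment are hypothetical custom
    field names; work_notes holds the internal note with the suggestion.
    """
    fields = {
        "u_overall_sentiment": overall_sentiment,
        "u_negative_sentiment": overall_sentiment < threshold,
    }
    if suggested_reply:
        fields["work_notes"] = "Suggested reply:\n" + suggested_reply.lstrip()
    return fields


if __name__ == "__main__":
    print(build_incident_update(-40, -10, "\nSorry to hear that."))
    print(build_incident_update(20, -10))
```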

- As a next step, you could include alerting functionality to make your support team aware of tickets whose sentiment falls below the threshold. In this example, we'll send an alert to a channel in Microsoft Teams. Search for the Microsoft Teams connector and add the Send Message block in the Yes part of the Condition block. Tip: use the left trim formula on the output from the Create Completion block to remove any leading line breaks ("\n").

- Result in Microsoft Teams:

Tips and limitations

Below are a couple of tips and limitations to keep in mind when working with the OpenAI connector in automations.

- If the usage quota is exceeded on a free OpenAI account, the following error message will be displayed. Upgrading to a paid OpenAI account will resolve the issue.

{
  "error": {
    "message": "You exceeded your current quota, please check your plan and billing details.",
    "type": "insufficient_quota",
    "param": null,
    "code": null
  }
}

- For an overview of the rate limits in the OpenAI API, please refer to OpenAI's rate limits documentation.

- Raw API Request block: This connector does not support OpenAI API endpoints that use the content type multipart/form-data. The following API endpoints are therefore not supported in the Raw API Request block:

- Upload file.

- Create image edit.

- Create image variation.

- Create transcription.

- Create translation.

- No paging for list blocks: Since the OpenAI API has no support for paging, all records a list block in an automation retrieves are returned in a single API response by OpenAI. The List Models and the Raw API List Request blocks are examples of list blocks.
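The quota error shown in the first tip above can be recognized by its `type` field. The sketch below parses an error body and checks for `insufficient_quota`; the function name is hypothetical, and how an automation surfaces the raw error body is not covered by this article.

```python
import json


def is_quota_error(response_body):
    """Return True if an OpenAI error response reports an exhausted quota."""
    try:
        payload = json.loads(response_body)
    except (TypeError, ValueError):
        # Not a JSON string, so it cannot be the documented error shape.
        return False
    error = payload.get("error") if isinstance(payload, dict) else None
    return isinstance(error, dict) and error.get("type") == "insufficient_quota"


if __name__ == "__main__":
    body = (
        '{"error": {"message": "You exceeded your current quota, please '
        'check your plan and billing details.", "type": "insufficient_quota", '
        '"param": null, "code": null}}'
    )
    print(is_quota_error(body))
```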

A Deep Dive into Create Completion Block

Create Completion block: Model is the only required parameter in this block, but with only a model set the completion is essentially random. For a more useful response, provide at least the Model, Prompt, and Max Tokens input parameters. The other input parameters can be used to refine the response.

- Model: This is the only required parameter in this block. It specifies which model to use for generating completions. You can use the List models API to see all available models or see OpenAI's Model overview for their descriptions.

Example value: "text-davinci-003"

- Prompt: The prompt(s) to generate completions for, encoded as a string, array of strings, array of tokens, or array of token arrays. The <|endoftext|> token can be used in a prompt to mark the end of the first section of the prompt. In the example below, the first section is the instruction and the second section is the data to summarize.

Prompt:

Write a summary of the following data. <|endoftext|>

Country | Population
------- | --------
United States | 329.5 million
China | 1.444 billion
India | 1.38 billion

Completion:

This data shows the population of three of the largest countries in the world. The United States has a population of 329.5 million, China has a population of 1.444 billion, and India has a population of 1.38 billion.

- Max Tokens: Specifies the maximum number of tokens in the generated completion response. More information about how the token count is calculated by OpenAI can be found here: Tokenizer.

Example: 50
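The three core inputs described above correspond to the `model`, `prompt`, and `max_tokens` fields of a completion request body in the OpenAI API. The sketch below builds such a body; the function name is hypothetical, and the model name is the one used in this article, which may no longer be offered by OpenAI.

```python
import json


def completion_body(model, prompt, max_tokens):
    """Build the JSON body for a completion request with the core inputs."""
    if max_tokens < 1:
        raise ValueError("max_tokens must be a positive integer")
    return {"model": model, "prompt": prompt, "max_tokens": max_tokens}


if __name__ == "__main__":
    prompt = (
        "Write a summary of the following data. <|endoftext|>\n"
        "Country | Population\n"
        "------- | --------\n"
        "United States | 329.5 million\n"
        "China | 1.444 billion\n"
        "India | 1.38 billion"
    )
    print(json.dumps(completion_body("text-davinci-003", prompt, 50), indent=2))
```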

The following parameters are optional in most use cases but can be used to refine the response:

- Temperature: Controls the randomness of the output. Higher values (e.g., 0.8) make the output more random, while lower values (e.g., 0.2) make it more focused and deterministic.

Example: 0.6

- Top p: Controls the diversity of the generated output by setting a probability threshold. Top p (or nucleus) sampling selects the most likely tokens until their cumulative probability exceeds the threshold.

Example: 0.8

- Number Of Completions: Specifies the number of completions to generate.

Example: 3

- Logprobs: Includes the log probabilities of the most likely tokens at each position in the response. For example, a value of 2 returns the 2 most likely tokens in addition to the chosen token. The maximum value is 5.

Example: 2

- Presence Penalty: A number between -2.0 and 2.0. Positive values penalize tokens that have already appeared in the text so far, which makes the model more likely to move on to new topics.

Example: 0.6

- Frequency Penalty: A number between -2.0 and 2.0. Positive values penalize tokens in proportion to how often they have already appeared in the text so far, which makes the model less likely to repeat the same line verbatim.

Example: 0.4

- Best Of: Specifies the number of candidate completions to generate on the OpenAI side. The API returns the best one, which is the completion with the highest log probability per token. When combined with Number Of Completions, Best Of sets how many candidates are generated and Number Of Completions sets how many are returned.

Example: 5

- Logit Bias: The `logit bias` parameter in the OpenAI API changes the likelihood of specific tokens appearing in the generated text. It takes a JSON object that maps token IDs (as assigned by the tokenizer, not the words themselves) to a bias value between -100 and 100. Positive values make a token more likely and negative values make it less likely; values like -100 or 100 effectively ban or force the token. The example below prevents the <|endoftext|> token (ID 50256) from being generated.

Example:

{
  "50256": -100
}

- User: Specifies an optional unique identifier for your end user, which helps OpenAI monitor and detect abuse.

Example: "12345"
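The optional parameters above map to the `temperature`, `top_p`, `n`, `logprobs`, `presence_penalty`, `frequency_penalty`, `best_of`, `logit_bias`, and `user` fields of the OpenAI completions request. The sketch below adds them to a request body and checks the value ranges from OpenAI's public API reference as best understood here. The helper name is hypothetical, and the check that `best_of` is not smaller than `n` is an assumption about how the two interact.

```python
# Value ranges assumed from the public OpenAI completions reference.
RANGES = {
    "temperature": (0.0, 2.0),
    "top_p": (0.0, 1.0),
    "presence_penalty": (-2.0, 2.0),
    "frequency_penalty": (-2.0, 2.0),
}


def add_optional_params(body, **options):
    """Return a copy of `body` with validated optional parameters added."""
    result = dict(body)
    for name, value in options.items():
        if name in RANGES:
            low, high = RANGES[name]
            if not low <= value <= high:
                raise ValueError(f"{name} must be between {low} and {high}")
        result[name] = value
    # best_of candidates are generated, n of them are returned.
    best_of = result.get("best_of")
    if best_of is not None and best_of < result.get("n", 1):
        raise ValueError("best_of must not be smaller than n")
    logprobs = result.get("logprobs")
    if logprobs is not None and not 0 <= logprobs <= 5:
        raise ValueError("logprobs must be between 0 and 5")
    return result


if __name__ == "__main__":
    base = {"model": "text-davinci-003", "prompt": "Hi", "max_tokens": 50}
    print(add_optional_params(base, temperature=0.6, top_p=0.8, n=3, best_of=5))
```

The input body is copied rather than modified, so the same base body can be reused with different optional settings.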

Hello,

First, thank you for the article.

I am currently working on this subject and I noticed that some new blocks are now available, such as:

- Chat Completion

- Chat Completion Message

- Chat Completion Function

Is there any chance of an article about these?

Thank you

Regards,

Antoine L.

@Antoine04 We don't have an article for the above blocks at the moment.

Hello to both of you,

Thanks for the reply. Can't wait to see it!

I have to say I already tried it and it works in my case, but it would be nice to have best practices on how to configure it 🙂

Regards

I'm wondering if a new article or documentation update about the new OpenAI blocks in Automate will be published soon. I haven't been able to find any recent information, and I'm currently running into an issue where either the endpoint isn't recognized, or the variable for the Chat Completion block isn't being populated correctly.

Kind regards,

Michiel