
Recent Documents

-

Qlik Automate: cannot change the name of report sent with the "Send Email with a...

Using the Get Tabular Report, Get HTML Report, or Get Pixel Perfect Report blocks in conjunction with the Send Email with attachments block results in the file being sent with a reportId name. The name cannot be changed.

Resolution

Insert the Upload File to Temporary Content (A) and Download file from Temporary Content (B) blocks. This allows you to give the file a different name.

Environment

- Qlik Automate

-

Qlik Cloud Monitoring Apps Workflow Guide

This template was updated on December 4th, 2025 to replace the original installer and API key rotator with a new, unified deployer automation.

The original templates will remain available for a short period; these will be removed on or after January 28, 2026.

Installing, upgrading, and managing the Qlik Cloud Monitoring Apps is now much easier. With a single out-of-the-box Qlik Automate template, you can install and update the apps on a schedule using a set-and-forget installer. The template can also handle the API key rotation required for the data connection, ensuring the data connection stays operational.

Some monitoring apps are designed for specific Qlik Cloud subscription types. Refer to the compatibility matrix within the Qlik Cloud Monitoring Apps repository.

'Qlik Cloud Monitoring Apps deployer' template overview

This automation template is a set-and-forget template for managing the Qlik Cloud Monitoring Applications, including but not limited to the App Analyzer, Entitlement Analyzer, Reload Analyzer, and Access Evaluator applications. Leverage this automation template to quickly and easily install and update these or a subset of these applications with all their dependencies. The applications themselves are community-supported. They are provided through Qlik's Open-Source Software (OSS) GitHub and are therefore subject to Qlik's open-source guidelines and policies.

For more information, refer to the GitHub repository.

Features

- Can install/upgrade all or select apps.

- Can create or leverage existing spaces.

- Programmatically verifies prerequisite settings, roles, and entitlements, notifying the user during the process if changes in configuration are required, and why.

- Installs latest versions of specified applications from Qlik’s OSS GitHub.

- Creates required API key.

- Creates required analytics data connection.

- Creates a daily reload schedule.

- Reloads applications post-install.

- Tags apps appropriately to track which are installed and their respective versions.

- Supports both user and capacity-based subscriptions.

Configuration:

Update just the configuration area to define how the automation runs, then test run, and set it on a weekly or monthly schedule as desired.

Configure the run mode of the template using 7 variable blocks

Users should review the following variables:

- configuredMonitoringApps: contains the list of the monitoring applications which will be installed and maintained. Delete any applications not required before running (default: all apps listed).

- refreshConnectorCredentials: determines whether the monitoring application REST connection and associated API key will be regenerated every run (default: true).

- reloadNow: determines whether apps are reloaded immediately following updates (default: true).

- reloadScheduleHour: determines the hour of the day (UTC) at which the apps reload on the daily schedule (default: 06).

- sharedSpaceName: defines the name of the shared space used to import the apps into prior to publishing. If the space doesn't exist, it'll be created. If the space exists, the user will be added to it with the required permissions (default: Monitoring - staging).

- managedSpaceName: defines the name of the managed space the apps are published into for consumption, alerts, and subscription use. If the space doesn't exist, it'll be created. If the space exists, the user will be added to it with the required permissions (default: Monitoring).

- versionsToKeep: determines how many versions of each staged app are kept in the shared space. If set to 0, apps are deleted after publishing. If set to a positive integer, that many versions are kept for each monitoring app deployed (default: 0).
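As a rough illustration of how these seven variables fit together, the sketch below models them as a Python dataclass with the defaults listed above. The class name, the validation rules, and the two-item placeholder app list are assumptions made for illustration; the template itself stores these values in Variable blocks.

```python
from dataclasses import dataclass, field


@dataclass
class DeployerConfig:
    """Hypothetical mirror of the template's seven configuration variables."""

    # Placeholder list: the real template lists every monitoring app by default.
    configured_monitoring_apps: list = field(
        default_factory=lambda: ["App Analyzer", "Reload Analyzer"]
    )
    refresh_connector_credentials: bool = True
    reload_now: bool = True
    reload_schedule_hour: int = 6  # hour of the day, UTC
    shared_space_name: str = "Monitoring - staging"
    managed_space_name: str = "Monitoring"
    versions_to_keep: int = 0  # 0 means staged apps are deleted after publishing

    def validate(self) -> None:
        """Raise ValueError for values the variable descriptions rule out."""
        if not 0 <= self.reload_schedule_hour <= 23:
            raise ValueError("reloadScheduleHour must be between 0 and 23")
        if self.versions_to_keep < 0:
            raise ValueError("versionsToKeep must be 0 or a positive integer")
        if not self.configured_monitoring_apps:
            raise ValueError("configuredMonitoringApps must list at least one app")
```

Calling `DeployerConfig().validate()` passes with the defaults, while a value such as `reload_schedule_hour=24` raises a `ValueError`.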

App management:

If the monitoring applications have been installed manually (i.e., not through this automation), then they will not be detected as existing. The automation will install new copies side-by-side. Any subsequent executions of the automation will detect the newly installed monitoring applications and check their versions, etc. This is due to the fact that the applications are tagged with "QCMA - {appName}" and "QCMA - {version}" during the installation process through the automation. Manually installed applications will not have these tags and therefore will not be detected.
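The tag-based detection described above can be pictured with a small sketch. It assumes each app is represented as a dictionary with a `tags` list of strings; that representation and the function names are hypothetical, and only the "QCMA - " tag prefix comes from the article.

```python
TAG_PREFIX = "QCMA - "


def is_managed_by_deployer(app_tags):
    """Return True if any tag carries the 'QCMA - ' prefix applied during installation."""
    return any(tag.startswith(TAG_PREFIX) for tag in app_tags)


def split_managed(apps):
    """Partition apps into (managed, unmanaged) lists based on their tags."""
    managed, unmanaged = [], []
    for app in apps:
        if is_managed_by_deployer(app.get("tags", [])):
            managed.append(app)
        else:
            unmanaged.append(app)
    return managed, unmanaged
```

A manually installed app without these tags ends up in the unmanaged list, which is why the automation would install a fresh copy next to it.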

FAQ

Q: Can I re-run the installer to check if any of the monitoring applications are able to be upgraded to a later version?

A: Yes. The automation will update any managed apps that don't match the repository's manifest version.

Q: What if multiple people install monitoring applications in different spaces?

A: The template scopes the application's installation process to a managed space. It will scope the API key name to `QCMA – {spaceId}` of that managed space. This allows the template to install/update the monitoring applications across spaces and across users. If one user installs an application to “Space A” and then another user installs a different monitoring application to “Space A”, the template will see that a data connection and associated API key (in this case from another user) exists for that space already. It will install the application leveraging those pre-existing assets.

Q: What if a new monitoring application is released? Will the template provide the ability to install that application as well?

A: Yes, but the template must be updated from the template picker, since the applications are hard-coded into the template. Once a new version is available, the automation will begin to fail with a notification that an update is needed.

Q: I have updated my application, but I noticed that it did not preserve the history. Why is that?

A: Each upgrade may generate a new set of QVDs if the data models for the applications have changed due to bug fixes, updates, new features, etc. The history is preserved in the prior versions of the application’s QVDs, so the data is never deleted and can be loaded into the older version.

-

How to migrate to the Microsoft Outlook connector in Qlik Automate

The Microsoft Outlook connector in Qlik Automate has been updated to support file attachments. This article describes how you can migrate your automa...

How to extract changes from the Change Store (Write Table) and store them in a d...

This article explains how to extract changes from a Change Store by using the Qlik Cloud Services connector in Qlik Automate and how to sync them to a database.

The example will use a MySQL database, but can easily be modified to use other database connectors supported in Qlik Automate, such as MSSQL, Postgres, AWS DynamoDB, AWS Redshift, Google BigQuery, and Snowflake.

The article also includes:

- Two automation examples you can download and import (see Qlik Automate: How to import and export automations):

- Automation Example to Extract Change Store History to MySQL Incremental.json

- Automation Example to Bulk Extract Change Store History to MySQL Incremental.json

- Configuration instructions for the examples

Content

- Prerequisites

- Creating the automation

- Insert changes in MySQL one by one

- Making this incremental

- Bulk updates

- Attachment configuration instructions

- Bonus!

- Replace field names

- User email instead of user id

- Triggering the automation from a sheet

Prerequisites

- A working Write Table with a set of editable columns and some example values already stored in it. More information about the Write Table chart can be found in Write Table | help.qlik.com.

- A MySQL (or similar) database table with columns that match your editable columns.

- When setting up the database, extend your table with metadata fields:

- userId: to store the user ID of the user who made a change

- updatedAt: to store the datetime when a change was saved

Here is an example of an empty database table for a change store with:

- a single PK “productId”

- editable columns are “AmountToOrder”, “Priority”, and “Note”.

- additional columns “userId” and “updatedAt”, which will be used to log user activity and merge separate changes into the same record
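For orientation, a table like the one described above could be created as sketched below. The sketch uses Python's built-in sqlite3 module as a stand-in for MySQL so that it runs without a server; the table name `change_store_history` and all column types are assumptions and should be adapted to your own database.

```python
import sqlite3

# Hypothetical table name and column types; only the column names come from the example.
CREATE_TABLE = """
CREATE TABLE IF NOT EXISTS change_store_history (
    productId     TEXT NOT NULL,
    AmountToOrder INTEGER,
    Priority      TEXT,
    Note          TEXT,
    userId        TEXT NOT NULL,
    updatedAt     TEXT NOT NULL
)
"""


def create_history_table(conn):
    """Create the empty change-history table if it does not exist yet."""
    conn.execute(CREATE_TABLE)
    conn.commit()


def column_names(conn):
    """Return the column names of the history table in definition order."""
    return [row[1] for row in conn.execute("PRAGMA table_info(change_store_history)")]
```

The editable columns are left nullable because each change record only fills in the columns that were actually edited.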

Creating the automation

- Create a new automation. See Qlik Automate for details.

- Add the List Change Store History block from the Qlik Cloud Services connector.

- Configure this block with the change store ID. You can copy this from the write table chart.

- Perform a manual run of the automation to make sure some records are returned. The List Change Store History block will return a list of objects for each cell that contains one or more changes. Every object includes the combination of primary key(s), the editable column for that cell, and a list of all values belonging to that cell.

- You have two options on how to perform this sync:

- Insert changes one by one

- Insert changes in bulk (this option is more complex but also more performant)
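To make the nested output easier to picture, the sketch below flattens an assumed version of it into one dictionary per individual change. The parameter names cellKey, rowKey, columnName, cellValue, createdBy, and updatedAt appear in this article, but their exact nesting and the `changes` key are assumptions; check the block's output in a manual run for the real structure.

```python
def iter_changes(history):
    """Yield one flat dict per individual change in the (assumed) nested output."""
    for cell in history:
        for change in cell["changes"]:  # "changes" is a hypothetical key name
            yield {
                "rowKey": cell["cellKey"]["rowKey"],
                "columnName": cell["columnName"],
                "cellValue": change["cellValue"],
                "createdBy": change["createdBy"],
                "updatedAt": change["updatedAt"],
            }


# Made-up sample data in the assumed shape: one object per changed cell.
sample_history = [
    {
        "cellKey": {"rowKey": "P-100"},
        "columnName": "Priority",
        "changes": [
            {"cellValue": "Low", "createdBy": "user-1", "updatedAt": "2025-01-05T10:00:00Z"},
            {"cellValue": "High", "createdBy": "user-2", "updatedAt": "2025-01-06T09:30:00Z"},
        ],
    },
]
```

Both sync options work from this same data: the one-by-one option processes each flattened change separately, while the bulk option first groups them into full records.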

Insert changes in MySQL one by one

- Add a Loop block (A) inside the List Change Store History block and configure it to loop over the list of changes inside each object returned by the List Change Store History block (B):

- Search for the MySQL connector (A) in the automation block library and drag the Upsert Record block inside the Loop block (B):

- Create a new connection (C) to your MySQL database in the Connection tab of the Upsert Record block and activate it for the block by clicking it once created.

- Configure the Upsert Record block as follows

- Table: the database table you have created for the Write Table

- Where: the key:value mappings for the granularity on which you want to save the changes. In this example, it is a combination of the primary key (productId) with userId and updatedAt.

- productId (primary key): this comes from the cellKey in the List Change Store History block.

- userId and updatedAt: these are defined for each change, so they should be retrieved from the item in the Loop block.

The userId maps to the createdBy parameter.

- Record: this is the key:value mapping for the individual change that should get updated. The Key maps to the columnName that is returned by the List Change Store History block, the Value maps to the cellValue parameter that is returned in the Loop block:

- This is what your automation will look like now:

Run the automation manually by clicking the Run button in the automation editor and review that you have records showing in the MySQL table:
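The effect of the Upsert Record configuration above can be approximated in code. This is a sketch only: it uses sqlite3 as a stand-in for MySQL, the table name is hypothetical, and the update-then-insert logic is one way to emulate an upsert, not a description of how the connector block is implemented.

```python
import sqlite3

# Allow-list of editable columns from the example; column names cannot be
# bound as SQL parameters, so they are validated before being interpolated.
EDITABLE_COLUMNS = {"AmountToOrder", "Priority", "Note"}


def create_table(conn):
    """Create the hypothetical example table used by this sketch."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS change_store_history ("
        "productId TEXT, AmountToOrder TEXT, Priority TEXT, Note TEXT, "
        "userId TEXT, updatedAt TEXT)"
    )


def upsert_change(conn, product_id, user_id, updated_at, column_name, cell_value):
    """Update the row matching the Where keys, or insert it if none exists."""
    if column_name not in EDITABLE_COLUMNS:
        raise ValueError(f"unexpected column: {column_name}")
    key = (product_id, user_id, updated_at)
    cursor = conn.execute(
        f'UPDATE change_store_history SET "{column_name}" = ? '
        "WHERE productId = ? AND userId = ? AND updatedAt = ?",
        (cell_value, *key),
    )
    if cursor.rowcount == 0:
        conn.execute(
            "INSERT INTO change_store_history "
            f'(productId, userId, updatedAt, "{column_name}") VALUES (?, ?, ?, ?)',
            (*key, cell_value),
        )
```

Because the Where keys combine productId, userId, and updatedAt, two cell changes saved by the same user at the same time land in the same database row.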

Making this incremental

There is currently no incremental version of the List Change Store History block. While this is on our roadmap, the automation from this article can be extended to do incremental loads by first retrieving the highest updatedAt value from the MySQL table. The steps below explain how to extend the automation:

- Add a Do Query block from the MySQL connector to the automation and configure the query as follows:

SELECT MAX(updatedAt) FROM <your database table>

- Run a test run of the automation without the other blocks attached to verify the result in the Do Query block’s History tab:

- Add a Condition block (A) to the automation and configure it to evaluate the MAX(updatedAt) (B) field from the Do Query block.

Because the Do Query block returns the value as part of a list, the automation editor prompts you to specify which item of the list you want to use. Use the default option, Select first item from list (C).

- Configure the operator in the Condition block to is not empty. If an updatedAt timestamp is found, the Yes part of the Condition block will be executed. If no timestamp is found, the No part will be executed.

- Add a Variable block (A) to the Yes part of the Condition block and create a new variable of type String named Filter. The setting is accessed from the Manage variables (B) button in the Variable block.

- Add an operation to the Variable block to Set value of Filter and type updatedAt gt “ in the input field.

Click in the input field to add a reference to the timestamp in the Do Query block, mirroring the Condition block configuration.

After the mapping is added, append an additional double quote character:

- Right-click the Variable block and duplicate it, then add the duplicated block to the No part of the Condition (A).

Remove the Set value of Filter step and replace it with the Empty Variable (B) operation.

Right-click each Variable block and add a comment explaining the respective function.

- Reattach the original automation after the Condition block.

Verify that it is attached after the block and not inside the Yes or No sections.

- In the List Change Store History block (A), map the Filter variable to the Filter parameter (B):

- Run the automation and confirm it only picks up new changes on new runs.
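The Condition and Variable logic from the steps above boils down to a few lines. The sketch assumes the Do Query result arrives as a list of dictionaries keyed by `MAX(updatedAt)`, which is an assumption about the output shape, and both function names are hypothetical.

```python
def first_max_updated_at(query_rows):
    """Mimic 'Select first item from list' on the Do Query output."""
    if not query_rows:
        return None
    return query_rows[0].get("MAX(updatedAt)")


def build_filter(max_updated_at):
    """Return the Filter value: a 'gt' expression, or an empty string on the first run."""
    if not max_updated_at:
        # Corresponds to the No branch, where the variable is emptied.
        return ""
    # Corresponds to the Yes branch: updatedAt gt "<timestamp>"
    return f'updatedAt gt "{max_updated_at}"'
```

On the very first run the table is empty, MAX(updatedAt) is null, and the empty filter makes the automation load the full history.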

Bulk updates

The solution documented in the previous section will execute the Upsert Record block once for each cell with changes in the change store. This may create too much traffic for some use cases. To address this, the automation can be extended to support bulk operations and insert multiple records in a single database operation.

The approach is to transform the output of the List Change Store History block from a nested list of changes into a list of records that contains the changes grouped by primary key, userId, and updatedAt timestamp.

See the attached automation example: Automation Example to Bulk Extract Change Store History to MySQL Incremental.json.
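The transformation described above can be sketched in Python. The input shape (a `changes` list per cell and a top-level `columnName`) is an assumption, as are the function names. Note that the sketch merges and deduplicates in a single dictionary pass, whereas the automation reaches the same result with separate Merge Lists and Deduplicate List blocks.

```python
def build_partial_records(history, primary_key="productId"):
    """Create one partial record per individual cell change, tagged with a uniqueKey."""
    partials = []
    for cell in history:
        row_key = cell["cellKey"]["rowKey"]
        for change in cell["changes"]:  # hypothetical key name
            partials.append({
                primary_key: row_key,
                "userId": change["createdBy"],
                "updatedAt": change["updatedAt"],
                cell["columnName"]: change["cellValue"],
                # Primary key, userId, and updatedAt joined by a pipe symbol.
                "uniqueKey": f'{row_key}|{change["createdBy"]}|{change["updatedAt"]}',
            })
    return partials


def merge_by_unique_key(partials):
    """Combine partial records that share a uniqueKey into one full record each."""
    merged = {}
    for partial in partials:
        record = merged.setdefault(partial["uniqueKey"], {})
        for key, value in partial.items():
            # Keep the first value seen when a property exists on both sides.
            record.setdefault(key, value)
    return list(merged.values())


def strip_unique_key(records):
    """Drop the helper uniqueKey field, which has no matching database column."""
    return [{k: v for k, v in r.items() if k != "uniqueKey"} for r in records]
```

Chaining the three functions turns several single-cell changes made by one user at one timestamp into a single record that matches the database table layout.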

- Drag the Loop and Upsert Record blocks outside the List Change Store History loop, but do not delete them:

- Add a Variable block inside the List Change Store History block loop (A) and create a new variable partialChangeRecord of type Object (B).

This variable will be used to map each cell value to the primary key(s), userId, and updatedAt timestamp.

- Add the first operation to the Variable to Empty it (A).

This is important to ensure that for every item in the loop, we start with an empty partialChangeRecord variable.

Next, add a Set key/values operation to set the primary key(s) (B).

In our example, we have a single primary key, productId, but if you have multiple fields, you should add them one by one.

Set it to the cellKey.rowKey parameter returned in the output from List Change Store History.

- Drag the Loop block back into the automation and attach it to the Variable block.

Disconnect the Upsert Record block.

- Add a new Variable block inside the Loop block and configure the Variable parameter to the partialChangeRecord object.

Now set additional key/values in the variable for the userId, updatedAt, and the cellValue:

- userId: createdBy parameter returned in the loop

- updatedAt: updatedAt parameter returned in the loop

- For the Cell value, set the key to the columnName returned by the List Change Store History block and set the Value to the cellValue returned from the Loop block:

- uniqueKey: add a fourth keyValue pair uniqueKey.

This will be used later to merge various cell changes into a single record.

This combines the primary key(s), userId, and updatedAt timestamp, each separated by a pipe (|) symbol.

Click the input field, map the first parameter, then type a | symbol, and click the input field again to map the next parameter:

- Add another Variable block inside the Loop block (A) and create a new list type variable listOfPartialChanges.

Add an Add item operation to add the partialChangeRecord variable (B) to this list.

- Add a Merge Lists block after the List Change Store History block.

The Merge Lists block will be used to merge the partial change records into full records for each change.

Configure both List parameters in the Merge Lists block to use the listOfPartialChanges variable.

- Go to the Settings tab of the Merge Lists block and apply this configuration:

- Item merge strategy: Merge list 1 item and list 2 item in one new item (default)

- List 1 unique key: uniqueKey

- List 2 unique key: uniqueKey

- On duplicate unique key: Merge item from list 2 into item from list 1

- When a property exists on both lists: Keep value from list 1 (default)

Due to an ongoing defect, this parameter is only available after refreshing the automation editor. As the parameters use the default values, this should not impact you.

- Perform a manual run of the automation to verify that the Merge Lists block output is merging.

You will notice that this list contains many duplicates.

- Add a Deduplicate List block (A) and configure the List parameter to use the output from the Merge Lists block, then set the key to uniqueKey (B).

- Perform another manual run and confirm that the Deduplicate List block now only contains unique entries.

- The output still contains the uniqueKey parameter that is not compatible with the database.

There are two options: either extend the database or remove uniqueKey.

To remove it, add a Transform List block and set the Input List parameter to the Deduplicate list block:

- Configure the Fields in output list parameter from the Transform List block.

To make this easier, it is best to have example data from a manual run. If you haven’t performed a manual run yet, do one now.

Click the Add Field button and configure new fields:

- For the Field parameter, click the input field and start by mapping your primary keys

- For the Value parameter, do the same and select the corresponding parameter in the Item in input list option

- Repeat this for all other items in the list except for the uniqueKey


- Add a Loop batch block (A) to the automation and configure it to loop over the Transform List block output in batches of 50 (B).

Adjust this batch size depending on your database and the number of editable columns.

- Add an Insert Bulk block from the MySQL connector within the Loop Batch block loop.

Configure it to use your database table variable (or hardcode your table name) and set the Values parameter to the Batch in Loop Batch parameter.

- Run the automation and confirm that the database gets updated.

- Optionally, collapse all empty loop blocks to clean up the automation and provide comments to explain what the blocks and functions do.

This will help you understand this automation when you revisit it in the future.

To add a comment, right-click on the block and click Edit comment.
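The batching step at the end of the bulk flow can be illustrated as follows. The sketch uses sqlite3 in place of the MySQL connector, and the table name and column list are assumptions taken from the example table; the batch size of 50 matches the Loop Batch configuration above.

```python
import sqlite3

# Hypothetical table layout, matching the example table from the prerequisites.
COLUMNS = ["productId", "AmountToOrder", "Priority", "Note", "userId", "updatedAt"]


def batches(items, size=50):
    """Yield consecutive slices of at most `size` items, like the Loop Batch block."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def insert_bulk(conn, records, batch_size=50):
    """Insert the records batch by batch and return how many rows were written."""
    placeholders = ", ".join("?" for _ in COLUMNS)
    sql = f"INSERT INTO change_store_history ({', '.join(COLUMNS)}) VALUES ({placeholders})"
    inserted = 0
    for batch in batches(records, batch_size):
        # Columns missing from a record are written as NULL.
        conn.executemany(sql, [tuple(r.get(c) for c in COLUMNS) for r in batch])
        inserted += len(batch)
    conn.commit()
    return inserted
```

A smaller batch size keeps each statement short for tables with many editable columns, while a larger one reduces the number of round trips to the database.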

Attachment configuration instructions

The provided automations will require additional configuration after being imported, such as changing the change store, database, and primary key setup.

Automation Example to Extract Change Store History to MySQL Incremental.json

- Variable - databaseTable -> configure with the name of your database table

- Variable - changeStoreId -> configure with your change store ID

- Upsert Record - MySQL -> replace the productId with your primary key, add additional primary keys if necessary

Automation Example to Bulk Extract Change Store History to MySQL Incremental.json

- Variable - databaseTable -> configure with the name of your database table

- Variable - changeStoreId -> configure with your change store ID

- Variable - partialChangeRecord -> replace the productId with your primary key, add additional primary keys if necessary

- Variable - partialChangeRecord in Loop block -> Update the uniqueKey field by replacing the productId with your primary key, add additional primary keys if necessary

- Transform List -> replace the productId with your primary key, add additional primary keys if necessary

Bonus!

Replace field names

If field names in the change store don't match the database (or another destination), the Replace Field Names In List block can be used to translate the field names from one system to another.

- Search for the Replace Field Names In List block and add it to your automation.

- Provide the translations for the field names that need to be changed to match the destination system.
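Conceptually, the field name translation works like the sketch below. The function name and the example translation map are hypothetical; fields without a translation are passed through unchanged.

```python
def replace_field_names(records, translations):
    """Rename the keys of every record according to the translations mapping."""
    return [
        {translations.get(key, key): value for key, value in record.items()}
        for record in records
    ]


# Hypothetical mapping from change store field names to destination column names.
example_translations = {"AmountToOrder": "amount_to_order", "Note": "note"}
```

For example, a record `{"productId": "P-100", "Note": "Rush"}` becomes `{"productId": "P-100", "note": "Rush"}` with the mapping above.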

User email instead of user id

To add a more readable parameter to track the user who made changes, the Get User block from the Qlik Cloud Services connector can be used to map User IDs into email addresses or names.

- Search for the Get User block (A) in the Qlik Cloud Services connector and add it to your automation.

Configure it to use the createdBy parameter (B).

- Update the Upsert Record block to use the output from Get User.

A user's name might not be sufficient as a unique identifier. Instead, combine it with a user ID or user email.

Triggering the automation from a sheet

Add a button chart object to the sheet that contains the Write Table, allowing users to start the automation from within the Qlik app. See How to run an automation with custom parameters through the Qlik Sense button for more information.

Environment

- Qlik Cloud Analytics

- Qlik Automate


-

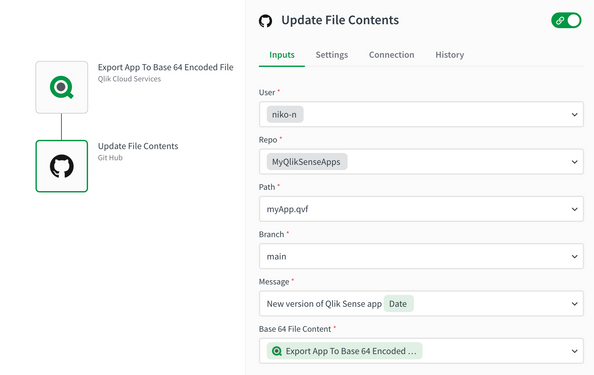

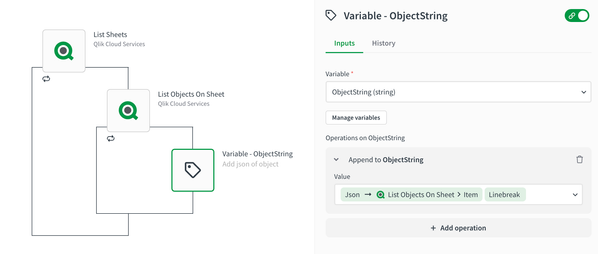

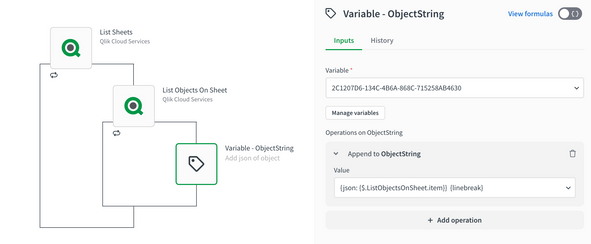

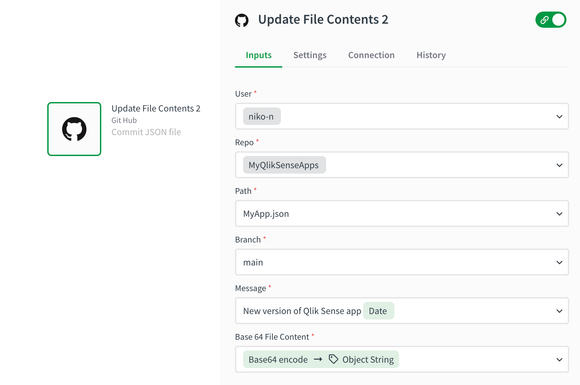

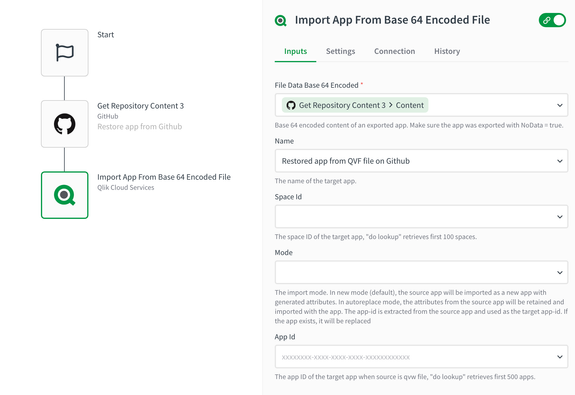

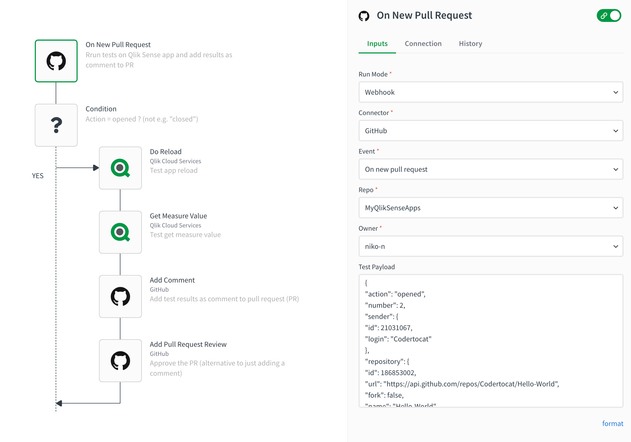

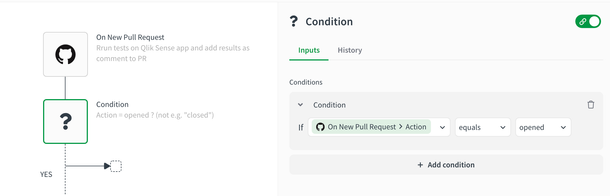

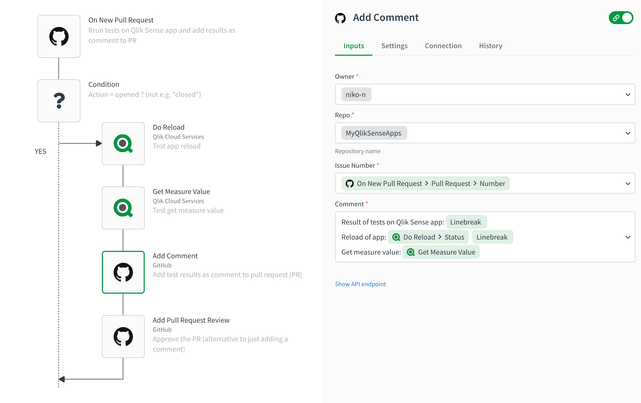

CI/CD pipelines for Qlik Sense apps with automations and Github

Automations allow you to set up CI/CD pipelines for your Qlik Sense apps, using the Github connector. Below we showcase various components of a CI/CD...

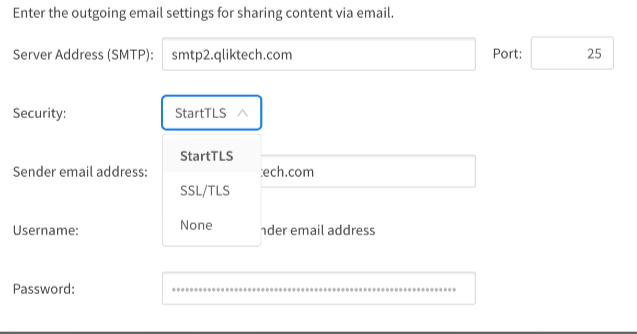

I cannot connect my SMTP server in the Qlik Application Automation ‘Mail’ connec...

The Mail connector in Qlik Application Automation cannot connect to SMTP servers. This article provides an overview of the known causes of connection...

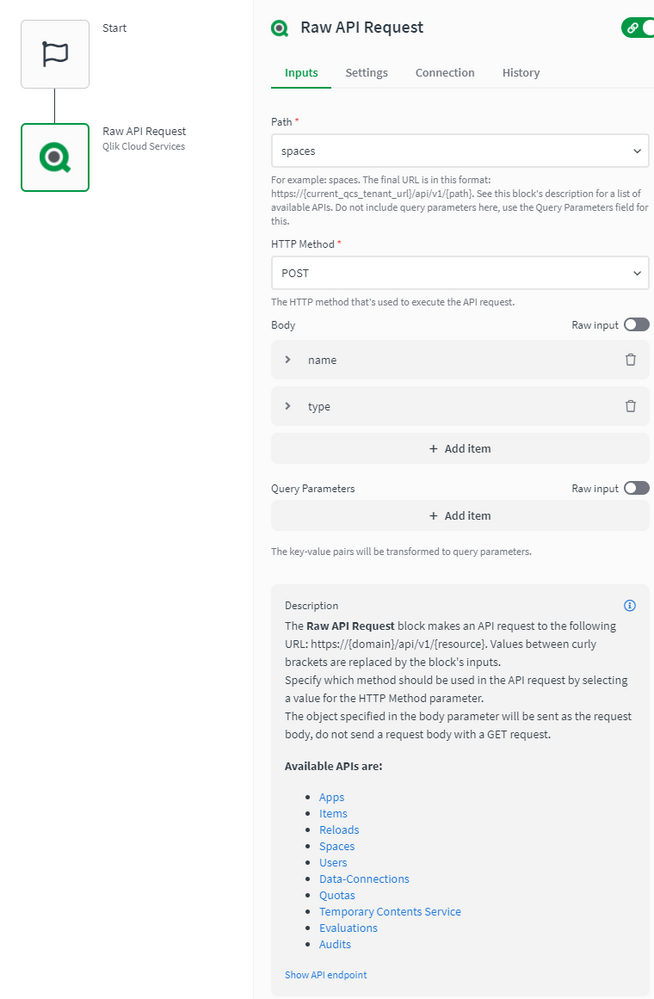

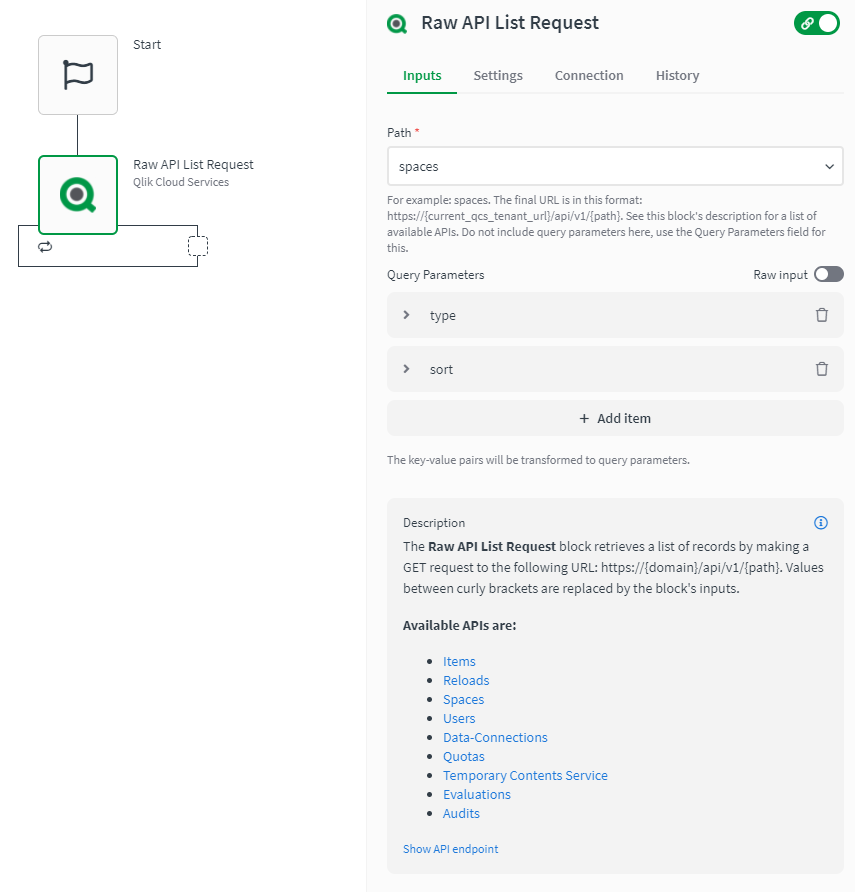

How to use the Raw API Request blocks in Qlik Automate

This article explains how the Raw API Request blocks in Qlik Automate can be used. Most connectors in Qlik Automate have a Raw API Request and a Raw ...

Build on Iceberg with Qlik Open Lakehouse

This Techspert Talks session covers: Benefits of Iceberg Lakehouse; How to build an Open Lakehouse; Mirroring. Chapters: 01:11 - Why use a Data...

Enhancing Analytics with Qlik Predict

This Techspert Talks session covers: How ML Experiments work; Real World Predictive use cases; Time Series. Chapters: 01:33 - Machine Learning Mo...

How to export Qlik Sense Apps from source to a target shared space using Qlik Ap...

This article gives an overview of exporting the Qlik Sense apps from the source to a target shared space for backup purposes using Qlik Application Automation.

It explains a basic example of a template configured in Qlik Application Automation for this scenario.

You can make use of the template which is available in the template picker. You can find it by navigating to Add new -> New automation -> Search templates and searching for 'Export an app to a shared space as a backup' in the search bar and clicking on the Use template option in order to use it in the automation.

You will find a version of this automation attached to this article: "Export-app-to-shared-space.json". More information on importing automations can be found here.

Full Automation

Variables used in the template:

- SourceSpaceID: The variable used to define source space ID. Please provide a shared space ID since copying an app from managed space is not permitted.

- TargetSpaceID: The variable used to define target space ID. Please provide a shared space ID since copying an app to managed space is not permitted.

- AppCount: The variable is used to get the count of apps exported to the target space successfully. Initially set this variable to 0.

Automation structure:

- Add the 'List Apps' block to get all the existing apps in the target shared space. Configure it to use the TargetSpaceID variable as the Space ID.

- Add the 'Get Space' block to fetch the details of the source shared space. Configure it to use the SourceSpaceID variable as the Space ID.

- Add the 'List Apps' block to get all the existing apps in the source shared space. Configure it to use the SourceSpaceID variable as the Space ID.

- Add the 'Filter List' block to get the apps that already exist in the target shared space.

- Add the 'Condition' block to check if there are any apps from the source shared space that already exist in the target shared space.

- If the condition block outcome evaluates to true:

- Add the 'Delete App' block to remove the previous version of the source app from the target shared space.

- Add the 'Copy App' block to copy the app from the source shared space to the target shared space.

- Add the 'Condition' block to check if the app from the source shared space was successfully exported to the target shared space.

- If yes, Add 1 to the 'AppCount' variable during each iteration. This variable will provide the total number of apps that have been backed up to the target shared space.

- If the condition block outcome evaluates to false:

- Add the 'Copy App' block to copy the app from the source shared space to the target shared space.

- Add the 'Condition' block to check if the app from the source shared space was successfully exported to the target shared space.

- If yes, Add 1 to the 'AppCount' variable during each iteration. This variable will provide the total number of apps that have been backed up to the target shared space.


- Add the 'Condition' block to check if there are any apps that were exported to the target shared space successfully.

- If yes, add an 'Update Run Title' block to specify the number of apps that have been exported to the target shared space successfully. The job title is visible when looking at the automation history and My Automation Runs Overview. For more info: Update run title block | Qlik Cloud Help
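The control flow of the structure above can be summarized in a short sketch. It works on plain dictionaries with injected `copy_app` and `delete_app` callables instead of real connector blocks, and it matches source and target apps by name; all of these are assumptions made for illustration.

```python
def back_up_apps(source_apps, target_apps, copy_app, delete_app):
    """Copy every source app to the target space and return the AppCount.

    copy_app(app) should return the copied app, or None if the copy failed.
    delete_app(app) removes a previous copy from the target space.
    """
    # Index the target apps by name to find previous backups (assumed matching rule).
    existing = {app["name"]: app for app in target_apps}
    app_count = 0
    for app in source_apps:
        previous = existing.get(app["name"])
        if previous is not None:
            # A previous version exists in the target space: remove it first.
            delete_app(previous)
        copied = copy_app(app)
        if copied:
            app_count += 1
    return app_count


def run_title(app_count):
    """Build a run title only when at least one app was exported."""
    return f"{app_count} app(s) backed up" if app_count > 0 else None
```

Both branches of the Condition block end in the same copy-and-count steps; the only difference is the preceding delete when a previous copy exists.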

The information in this article is provided as-is and is to be used at your own discretion. Depending on the tool(s) used, customization(s), and/or other factors, ongoing support on the solution below may not be provided by Qlik Support.

Related Content

Export Qlik Sense Apps to GitHub

Environment

-

How to send straight table data to email as HTML table using Qlik Automate

This article provides an overview of how to send straight table data to email as an HTML table using Qlik Automate.

The template is available on the template picker. You can find it by navigating to Add new -> New automation -> Search templates and searching for 'Send straight table data to email as table' in the search bar, and clicking the Use template option.

You will find a version of this automation attached to this article: "Send-straight-table-data-to-email -as-HTML-table.json".

Content:

Full Automation

Automation structure

The following steps describe how to build the demo automation:

- Add a variable called 'EmailBody' to build the email body, and append the body opening tag and opening text of the email as value to this variable.

- Add another variable called 'EmailStraightTable' and append the table opening tag together with the table header opening tag as a value to this variable.

- Use the 'Get Straight Table Data' block from the Qlik Cloud Services connector to get data from the straight table for a specific app to be sent as an email. Specify the App ID, Sheet ID, and Object ID input parameters. You can use the do-lookup functionality to find values for all these parameters. There is a limit of 100,000 records that can be retrieved through the 'Get Straight Table Data' block. This block will not work with other table types.

- Add a 'Loop' block to loop over the keys from the 'Get Straight Table Data' block response which act as the headers of the HTML table. Use the '{getkeys: {$.GetStraightTableData}}' formula in the 'Loop' block.

- Add the key/column name as the header of the HTML table in the 'EmailStraightTable' variable block. Outside the loop, add the 'EmailStraightTable' variable block, and append the table header closing tag, and table body opening tag as values to this variable.

- Add a 'Loop' block to loop over all the values from the 'Get Straight Table Data' block response. Within the 'Loop' block add the following blocks:

- Use the 'EmailStraightTable' variable block and append the table row opening tag as a value to this variable.

- Use another 'Loop' block to loop over all values from the straight table for the current key/ column of the straight table.

- Next, use the 'EmailStraightTable' variable block and append the table data opening tag, the column value for the corresponding key from the straight table, and the table data closing tag as a value to this variable. Repeating this for every key produces a single row with values for all keys in each iteration of the outer loop.

- Use the 'EmailStraightTable' variable block and append the table row closing tag as a value to this variable.

- Add the 'EmailStraightTable' variable block and append the table body closing tag with table footer tags as a value to this variable.

- Add the 'EmailBody' variable block and append the 'EmailStraightTable' variable, which contains the final HTML table with straight table data, together with the email footer, and body closing tag as a value to this variable.

- Add a 'Send Mail' block from the Mail connector to send an email with the above constructed HTML table that contains straight table data. Specify values for all the mandatory input parameters. Please connect to the SMTP server by adding a connection to the Mail connector.
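The string-building steps above follow the same pattern as the Python sketch below. It assumes the straight table data is a list of dictionaries with one key per column, which is an assumption about the block's output; the function names are hypothetical, and the exact HTML tags used by the template may differ from the ones chosen here.

```python
from html import escape


def build_html_table(rows):
    """Build an HTML table string from a list of row dictionaries."""
    if not rows:
        return "<table></table>"
    headers = list(rows[0].keys())  # the keys act as column headers
    parts = ["<table><thead><tr>"]
    parts += [f"<th>{escape(str(h))}</th>" for h in headers]
    parts.append("</tr></thead><tbody>")
    for row in rows:
        parts.append("<tr>")
        parts += [f"<td>{escape(str(row.get(h, '')))}</td>" for h in headers]
        parts.append("</tr>")
    parts.append("</tbody></table>")
    return "".join(parts)


def build_email_body(rows, opening_text, footer_text):
    """Wrap the table in a body with opening and footer text."""
    return (
        f"<body><p>{escape(opening_text)}</p>"
        f"{build_html_table(rows)}"
        f"<p>{escape(footer_text)}</p></body>"
    )
```

Escaping the cell values is a design choice of this sketch: it prevents characters such as `&` or `<` in the data from breaking the table markup.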

An example output of the email sent:

The information in this article is provided as-is and is to be used at your own discretion. Depending on the tool(s) used, customization(s), and/or other factors, ongoing support on the solution below may not be provided by Qlik Support.

Related Content

How to export more than 100k cells using Get Straight Table Data Block

Environment

- Qlik Cloud Analytics

- Qlik Automate


-

How to delete spaces in Qlik Cloud Services using Qlik Automate

This article explains how you can delete spaces in Qlik Cloud Services using Qlik Automate. Multiple spaces can be deleted during one run. As when deleting a space via the user interface, this will also delete any apps, data files, or other content within the space(s), using the relevant blocks for those content types.

For information about spaces in Qlik Cloud, see Navigating Spaces.

This automation is not designed to be triggered using a webhook or on a schedule. It has been designed with manual user input in mind and requires multiple confirmations.

If you use your own automation to delete spaces, know that deleting a space via the space blocks will not delete the content in the space, and will instead result in that content being orphaned in the tenant. Leverage the examples in this automation to first delete content from spaces prior to deleting the space.

Once deleted, spaces, apps, or data files cannot be recovered.

Content:

- Prerequisites

- Overview

- Start

- Confirm selected spaces

- Delete apps

- Delete data files

- Delete spaces

- Finalize

- Running the Automation

- Related Content

Prerequisites

This automation assumes you have a TenantAdmin role.

Overview

The automation is divided into six sections:

- Start

- Confirm selected spaces

- Delete apps

- Delete data files

- Delete spaces

- Finalize

Start

The Start section retrieves all available spaces and prompts you to select what spaces you want to delete.

Overview:

Setting it up:

- The List Spaces block retrieves all spaces on the tenant that you have access to.

- The Filter List block keeps only the spaces that you have permission to delete.

This is achieved using a condition configured like this:

Condition: If meta.actions list contains delete

- The Transform List block reformats the space list to improve readability in the input block (id, name and type are kept).

- The Inputs block adds a prompt for selecting the spaces to delete.

Multi-selection is allowed:

- A Go To Label block moves to the next section of the automation.

Before the Go To Label block can be configured, the targeted Label must already exist. This is done in the next section.
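The Filter List and Transform List steps above can be pictured as a filter followed by a projection. A minimal Python sketch, assuming each space is a dictionary with `id`, `name`, `type`, and a `meta.actions` list as referenced in the condition; the function name is hypothetical:

```python
def deletable_spaces(spaces):
    """Keep spaces whose meta.actions contains 'delete', reduced to id, name, type."""
    return [
        {"id": s["id"], "name": s["name"], "type": s["type"]}
        for s in spaces
        if "delete" in s.get("meta", {}).get("actions", [])
    ]
```

Spaces without a `meta.actions` entry are treated as not deletable, which errs on the side of caution.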

Confirm selected spaces

This section provides the possibility to review the selected spaces before deleting them.

Overview:

Setting it up:

- The Label block is named Confirm Selected Spaces and this must also be configured in the Go To Label block from the previous section.

- A Loop block loops over selected spaces.

- Within the loop, a List Apps block retrieves apps in the space. The output of this block is stored in the variable appList which is used for reviewing what apps exist within the spaces that will be deleted.

- To retrieve the connectionId for data files in this space, a Raw API Request block is used. The path in the block is configured to use data-files/connections and the HTTP method is set to GET. A query parameter is also added where the key is set to spaceId and the value is set to {$.loop.item}.

- The connectionId retrieved in the previous step is used to retrieve data files in this space using the List Data Files block. The output of this block is stored in the variable dataFileList which is used to review the data files that exist within the spaces flagged for deletion.

- After the loop, an Output block displays which spaces will be deleted.

- We use an Inputs block to allow user confirmation before deleting apps, data files and other content within the selected spaces:

- A Go To Label block moves to the next section of the automation.

Before the Go To Label block can be configured, the targeted Label must already exist. This is done in the next section.
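For readers who want to see what the Raw API Request step looks like outside the automation editor, here is a hedged Python sketch that only builds the request and does not send it. The path `data-files/connections` and the `spaceId` query parameter come from the step above; the `/api/v1` prefix, the bearer-token header, and the tenant hostname are assumptions to verify against your tenant:

```python
from urllib.parse import urlencode
from urllib.request import Request


def data_file_connections_request(tenant_host, space_id, api_key):
    """Build (but do not send) a GET request for the data file connections of a space."""
    query = urlencode({"spaceId": space_id})
    # Assumed URL layout: https://<tenant>/api/v1/<path from the Raw API Request block>
    url = f"https://{tenant_host}/api/v1/data-files/connections?{query}"
    return Request(url, method="GET", headers={"Authorization": f"Bearer {api_key}"})
```

Passing the result to `urllib.request.urlopen` would perform the call; inside Qlik Automate the block handles authentication for you.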

Delete apps

Once deleted, spaces, apps, or data files cannot be recovered.

This section deletes all existing apps inside the space(s). A space cannot be removed before all apps are deleted.

Overview:

Setting it up:

- The numeric variable iter tracks how many apps are deleted.

- A Loop block loops over the items in the variable appList.

- The variable iter is increased by 1 per loop iteration.

- The block Get App Information retrieves the app name based on the app id.

- The app is deleted using the block Delete App.

- A deleted app status will be presented using the string variable message.

- The delete apps status will be presented using an Output block:

- The delete apps status will also be presented using the block Update Run Title:

- A Go To Label moves on to the next section of the automation.

Before the Go To Label block can be configured, the targeted Label must already exist. This is done in the next section.

Delete data files

Once deleted, spaces, apps, or data files cannot be recovered.

This section deletes all data files within the selected space(s).

Overview:

Setting it up:

- The numeric variable iter tracks how many data files are deleted.

- A Loop block loops over the items in the variable dataFileList.

- The variable iter is increased by 1 per loop iteration.

- To retrieve the data file name, we use a Raw API Request block configured like this:

Path: data-files/{ $.loop3.item }

HTTP Method: GET

- The data file is deleted using the block Delete Data File.

- A deleted data file status will be presented using the string variable message.

- The deleted data file status will be presented using an Output block:

- The delete data file status will also be presented using the block Update Run Title:

- Finally, a Go To Label block moves on to the next section of the automation.

Before the Go To Label block can be configured, the targeted Label must already exist. This is done in the next section.

Delete spaces

Once deleted, spaces, apps, or data files cannot be recovered.

This section deletes all selected spaces.

Overview:

Setting it up:

- The numeric variable iter tracks how many spaces are deleted.

- A Loop block loops over the selected spaces.

- The variable iter is increased by 1 per loop iteration.

- To retrieve the name of the space we use a Lookup Item In List block.

- The space is deleted using the block Delete Space.

- A deleted space status will be presented using the string variable message.

- The delete space status will be presented using an Output block

- The delete space status will also be presented using the block Update Run Title.
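The order of the three delete sections matters: content first, then the spaces, so that nothing is left orphaned. The following Python sketch illustrates that order with counters, using a purely hypothetical in-memory model of a tenant (plain dictionaries, not the Qlik blocks or API):

```python
def delete_spaces_with_content(tenant, space_ids):
    """Delete apps and data files in the selected spaces, then the spaces themselves.

    `tenant` is a hypothetical dict with 'apps', 'data_files' and 'spaces' lists.
    """
    selected = set(space_ids)
    counts = {"apps": 0, "data_files": 0, "spaces": 0}

    # Step 1 and 2: remove content that lives in the selected spaces.
    for kind in ("apps", "data_files"):
        kept = []
        for item in tenant[kind]:
            if item["spaceId"] in selected:
                counts[kind] += 1  # plays the role of the 'iter' counter
            else:
                kept.append(item)
        tenant[kind] = kept

    # Step 3: only now remove the spaces.
    kept = []
    for space in tenant["spaces"]:
        if space["id"] in selected:
            counts["spaces"] += 1
        else:
            kept.append(space)
    tenant["spaces"] = kept

    message = (
        f"Deleted {counts['apps']} apps, {counts['data_files']} data files "
        f"and {counts['spaces']} spaces"
    )
    return counts, message
```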

Finalize

This section wraps up the automation and updates the final status of the automation run.

Overview:

Setting it up:

- A summary of how many apps, data files, and spaces have been deleted is presented using the string variable message.

- The summary is presented using an Output block:

- The summary is also returned using the block Update Run Title:

Running the Automation

- On run, choose which space to delete. Be careful not to select spaces you want to keep:

- Once you have selected the space or spaces, you are prompted to confirm the deletion of apps, data files and other content that exists within this space or spaces. If you don't want to proceed you can stop the automation. If you want to proceed you need to click on the green confirmation button:

Related Content

Navigating Spaces

Managing permissions in shared spaces

Managing permissions in managed spaces

Environment

The information in this article is provided as-is and is to be used at your own discretion. Depending on the tool(s) used, customization(s), and/or other factors, ongoing support on the solution below may not be provided by Qlik Support.

-

Qlik Cloud Analytics Introducing Automation Sharing and Collaboration

This capability has been rolled out across regions over time:

- May 5th: India, Japan, Middle East, Sweden (completed)

- June 4th: Asia Pacific, Germany, United Kingdom, Singapore (completed)

- June 9th: United States (completed)

- June 12th: Europe (completed)

- June 26th: Qlik Cloud Government

With the introduction of shared automations, it is now possible to create, run, and manage automations in shared spaces.

Content

- Allow other users to run an automation

- Collaborate on existing automations

- Collaborate through duplication

- Extended context menus

- Context menu for owners:

- Context menu for non-owners:

- Monitoring

- Administration Center

- Activity Center

- Run history details

- Metrics

Allow other users to run an automation

Limit the execution of an automation to specific users.

Every automation has an owner. When an automation runs, it will always run using the automation connections configured by the owner. Any Qlik connectors that are used will use the owner's Qlik account. This guarantees that the execution happens as the owner intended it to happen.

The user who created the run, along with the automation's owner at run time, are both logged in the automation run history.

These are five options on how to run an automation:

- Run an automation from the Hub and Catalog

- Run an automation from the Automations activity center

- Run an automation through a button in an app

You can now allow other users to run an automation through the Button object in an app without needing the automation to be configured in Triggered run mode. This allows you to limit the users who can execute the automation to members of the automation's space.

More information about using the Button object in an app to trigger an automation can be found in How to run an automation with custom parameters through the Qlik Sense button.

- Programmatic executions of an automation

- Automations API: Members of a shared space will be able to run the automations over the /runs endpoint if they have sufficient permissions.

- Run Automation and Call Automation blocks

- Note for triggered automations: the user who creates the run is not logged as no user specific information is used to start the run. The authentication to run a triggered automation depends on the Execution Token only.
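As a rough illustration of a programmatic execution over the /runs endpoint, the Python sketch below builds (without sending) a POST request. Only the `/runs` suffix is taken from the text above; the full URL layout, the bearer-token header, and the shape of the JSON payload are assumptions that should be checked against the Automations API reference:

```python
import json
from urllib.request import Request


def create_run_request(tenant_host, automation_id, api_key, payload=None):
    """Build (but do not send) a POST request that would start an automation run."""
    # Assumed URL layout for the Automations API.
    url = f"https://{tenant_host}/api/v1/automations/{automation_id}/runs"
    body = json.dumps(payload or {}).encode("utf-8")  # payload shape is an assumption
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    return Request(url, data=body, method="POST", headers=headers)
```

The caller needs sufficient permissions in the automation's space for such a request to be accepted.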

Collaborate on existing automations

Collaborate on an automation through duplication.

Automations are used to orchestrate various tasks; from Qlik use cases like reload task chaining, app versioning, or tenant management, to action-oriented use cases like updating opportunities in your CRM, managing supply chain operations, or managing warehouse inventories.

Collaborate through duplication

To prevent users from editing these live automations, we're putting forward a collaborate through duplication approach. This makes it impossible for non-owners to change an automation that can negatively impact operations.

When a user duplicates an existing automation, they will become the owner of the duplicate. This means the new owner's Qlik account will be used for any Qlik connectors, so they must have sufficient permissions to access the resources used by the automation. They will also need permissions to use the automation connections required in any third-party blocks.

Automations can be duplicated through the context menu:

As it is not possible to display a preview of the automation blocks before duplication, please use the automation's description to provide a clear summary of the purpose of the automation:

Extended context menus

With this new delivery, we have also added new options in the automation context menu:

- Start a run from the context menu in the hub

- Duplicate automation

- Move automation to shared space

- Edit details (owners only)

- Open in new tab (owners only)

Context menu for owners:

Context menu for non-owners:

Monitoring

The Automations Activity Centers have been expanded with information about the space in which an automation lives. The Run page now also tracks which user created a run.

Note: Triggered automation runs will be displayed as if the owner created them.

Administration Center

The Automations view in Administration Center now includes the Space field and filter.

The Runs view in Administration Center now includes the Executed by and Space at runtime fields and filters.

Activity Center

The Automations view in Automations Activity Center now includes Space field and filter.

Note: Users can configure which columns are displayed here.

The Runs view in the Automations Activity Center now includes the Space at runtime, Executed by, and Owner fields and filters.

In this view, you can see all runs from automations you own as well as runs executed by other users. You can also see runs of other users' automations where you are the executor.

Run history details

To see the full details of an automation run, go to Run History through the automation's context menu. This is also accessible to non-owners with sufficient permissions in the space.

The run history view will show the automation's runs across users, and the user who created the run is indicated by the Executed by field.

Metrics

The metrics tab in the automations activity center has been deprecated in favor of the automations usage app which gives a more detailed view of automation consumption.

-

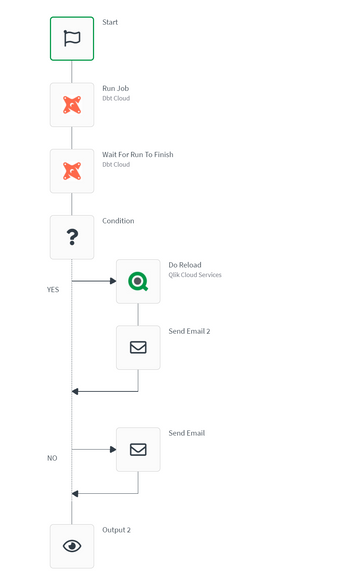

How to: Getting started with the dbt Cloud connector in Qlik Application Automat...

This article gives an overview of the available blocks in the dbt Cloud connector in Qlik Application Automation.

The purpose of the dbt Cloud connector is to let you schedule or trigger your dbt jobs from actions in Qlik Sense SaaS.

Authentication

Authentication to dbt Cloud happens through an API key. The API key can be found in the user profile when logged into dbt Cloud under API Settings. Instructions on the dbt documentation are at: https://docs.getdbt.com/dbt-cloud/api-v2#section/Authentication

Available Blocks

The available blocks are built around the objects Jobs and Runs. For ease of use, there are also helper blocks for accounts and projects. For any gaps, raw API request blocks give end users more freedom where our blocks do not suffice.

Blocks for Jobs:

- List Jobs

- Get Job

- Run Job

Blocks for Runs:

- List Runs

- Get Run

- List Run Artifacts

- Wait for Run to Finish

- Cancel Run

How to run a job and reload Qlik App:

The following automation, attached to this article and shown in the image, runs a job in dbt Cloud and, if the job succeeds, reloads an app in Qlik Sense SaaS. It always sends an email; this can be changed to a different channel. It can also be extended to multiple dbt jobs:
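To make the flow more concrete, here is a Python sketch of the two decisions involved: triggering the job and deciding whether to reload. It builds the trigger request without sending it. The URL pattern, `Token` authorization scheme, and numeric run status codes reflect the dbt Cloud API v2 as I understand it and should be confirmed in the dbt documentation; the function names and the default `cause` text are illustrative:

```python
import json
from urllib.request import Request

# Run status codes as documented for the dbt Cloud API v2 (verify before relying on them).
DBT_RUN_STATUS = {1: "Queued", 2: "Starting", 3: "Running", 10: "Success", 20: "Error", 30: "Cancelled"}


def trigger_job_request(account_id, job_id, api_key,
                        cause="Triggered from Qlik Automate", host="cloud.getdbt.com"):
    """Build (but do not send) the request that triggers a dbt Cloud job run."""
    url = f"https://{host}/api/v2/accounts/{account_id}/jobs/{job_id}/run/"
    body = json.dumps({"cause": cause}).encode("utf-8")
    headers = {"Authorization": f"Token {api_key}", "Content-Type": "application/json"}
    return Request(url, data=body, method="POST", headers=headers)


def run_finished(status_code):
    """True once the run has reached a terminal state."""
    return status_code in (10, 20, 30)


def should_reload_app(status_code):
    """Reload the Qlik app only when the dbt run succeeded."""
    return status_code == 10
```

In the automation, the Wait for Run to Finish block plays the role of `run_finished`, and the condition before the reload corresponds to `should_reload_app`.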

The information in this article is provided as-is and is to be used at your own discretion. Depending on the tool(s) used, customization(s), and/or other factors, ongoing support on the solution below may not be provided by Qlik Support.

-

How to send straight table data to Microsoft Teams as a table using Qlik Automat...

This article provides an overview of how to send straight table data to Microsoft Teams as a table using Qlik Automate.

The template is available on the template picker. You can find it by navigating to Add new -> New automation -> Search templates, searching for 'Send straight table data to Microsoft Teams as a table' in the search bar, and clicking the Use template option.

You will find a version of this automation attached to this article: "Send-straight-table-data-to-Microsoft-Teams-as-a-table.json".

Content:

Full Automation

Automation structure

The following steps describe how to build the demo automation:

- Add the 'Get Straight Table Data' block from the Qlik Cloud Services connector to get data from the straight table for a specific app to send to the Microsoft Teams channel as a table. Specify the app ID, Sheet ID, and Object ID in the input parameters. You can use the do-lookup functionality to find value for all these parameters. There is a limit of 100,000 records that can be retrieved through the 'Get Straight Table Data' block. This block will not work with other table types.

- Add a 'Loop' block to loop over the keys from the 'Get Straight Table Data' block response which acts as the table header. Use the '{getkeys: {$.GetStraightTableData}}' formula in the 'Loop' block. Within the 'Loop' block add the following blocks:

- Add an object variable called 'teamsTextBlockObject' to build the header and pass this variable to another list variable called 'cardTableColumn'.

- Add another 'Loop' block to loop over all the values for the current key of the straight table. Within the 'Loop' block add the following blocks:

- Add a 'Condition' block to check whether the column value is empty or not.

- If the condition block outcome evaluates to true:

- Add the 'teamsTextBlockObject' object variable, set the text value as 'NA' since the column value is null/empty, and pass this variable to the 'cardTableColumn' list variable.

- If the condition block outcome evaluates to false:

- Add the 'teamsTextBlockObject' object variable, set the text value as the column value of the straight table, and pass this variable to the 'cardTableColumn' list variable.

- Add another object variable called 'columnObject' and pass this variable to the 'columns' list variable. These variables are used to build the straight table data into the specific format required for the structure of the Teams message as a card attachment.

- Add a string variable called 'title' which is used to provide the title to the message.

- Add the 'Create Attachment Adaptive Card' block from the Microsoft Teams connector to build the structure for card attachment. Make sure to transform the 'columns' list to JSON text using the JSON formula.

- Add the 'Send Message' block from the Microsoft Teams connector to send straight table data as a table to the Teams channel with the card attachment which was created in the previous block.

Specify the Team ID and the Channel ID in the input parameters. You can use the do-lookup functionality to find the value of all these parameters.
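The nested loops above build one card column per table key, with a header text block followed by one text block per value. A minimal Python sketch of that shaping step, assuming the straight table data is a list of row dictionaries; `TextBlock` and `Column` are Adaptive Card element types, while the helper names and the bold header styling are illustrative choices:

```python
import json


def text_block(text, header=False):
    """Return an Adaptive Card TextBlock element for a single cell."""
    block = {"type": "TextBlock", "text": str(text), "wrap": True}
    if header:
        block["weight"] = "Bolder"
    return block


def build_columns(rows):
    """Turn row dicts into a list of Adaptive Card Column elements, one per key."""
    if not rows:
        return []
    columns = []
    for key in rows[0].keys():
        items = [text_block(key, header=True)]  # header cell
        for row in rows:
            value = row.get(key)
            # Empty or missing values are shown as 'NA', as in the Condition block.
            items.append(text_block("NA" if value in (None, "") else value))
        columns.append({"type": "Column", "items": items})
    return columns


def columns_as_json(rows):
    """Serialize the columns list to JSON text for the card attachment."""
    return json.dumps(build_columns(rows))
```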

An example output of the table sent to the Teams channel:

The information in this article is provided as-is and will be used at your discretion. Depending on the tool(s) used, customization(s), and/or other factors, ongoing support on the solution below may not be provided by Qlik Support.

Related Content

- How to get started with Microsoft Teams

- Send straight table data to Microsoft Teams as a message

- Send straight table data to email as HTML table

Environment

- Qlik Cloud Analytics

- Qlik Automate

-

How to deploy Qlik Talend Cloud Pipelines across spaces using Qlik Automate

Qlik Automate is a no-code automation and integration platform that lets you visually create automated workflows. It allows you to connect Qlik capabilities with other systems without writing code. Powered by Qlik Talend Cloud APIs, Qlik Automate enables users to create powerful automation workflows for their data pipelines.

Learn more about Qlik Automate.

In this article, you will learn how to set up Qlik Automate to deploy a Qlik Talend Cloud pipeline project across spaces or tenants.

To ease your implementation, there is a template on Qlik Automate that you can customize to fit your needs.

You will find it in the template picker: navigate to Add new → New automation → Search templates, search for 'Deploying a Data Integration pipeline project from development to production' in the search bar, and click Use template.

ℹ️ This template will be generally available on October 1, 2025.

Use case and pre-requisites

In this deployment use case, the development team made changes to an existing Qlik Talend Cloud (QTC) pipeline.

As the deployment owner, you will redeploy the updated pipeline project from a development space to a production space where an existing pipeline is already running.

To reproduce this workflow, you'll first need to create:

- Two data spaces:

- A DEV space

- A PROD space

- Two source databases

- A DEV source database

- A PROD source database

- Two target databases

- A DEV target database

- A PROD target database

Using separate spaces and databases ensures a clear separation of concerns and responsibilities in an organization, and reduces the risk to production pipelines while the development team is working on feature changes.

Workflow steps:

- Export the updated pipeline project from the DEV space

- Get the project variables from DEV

- Update the project variables for PROD before import

- Stop the pipeline currently running in production

- Import your project to the PROD space

- Prepare your project and check the status

- Restart the project in PROD

ℹ️ Note: This is a re-deployment workflow. For initial deployments, create a new project prior to proceeding with the import.

Step-by-step workflow

1. Export the updated pipeline project from the DEV space

Use the 'Export Project' block to call the corresponding API, using the ProjectID.

This will download your DEV project as a ZIP file. In Qlik Automate, you can use various cloud storage options, e.g. OneDrive. Configure the OneDrive 'Copy File on Microsoft OneDrive' block to store it at the desired location.

To avoid duplicate file names (which may cause the automation to fail) and to easily differentiate your project exports, use the 'Variable' block to define a unique prefix (such as dateTime).
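As an illustration of the unique-prefix idea, the Python sketch below derives a file name from a UTC timestamp. The naming pattern and function name are assumptions; any scheme that makes each export name unique serves the same purpose:

```python
from datetime import datetime, timezone


def export_file_name(project_name, now=None):
    """Return a ZIP file name prefixed with a UTC timestamp to keep exports unique."""
    now = now or datetime.now(timezone.utc)
    return f"{now.strftime('%Y%m%dT%H%M%SZ')}_{project_name}.zip"
```

Because the timestamp sorts lexicographically, the exports also list in chronological order in the storage folder.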

2. Get the project variables from DEV

From the 'Qlik Talend Data Integration' connector, use the 'Get Project Binding' block to call the API endpoint.

The 'bindings' are project variables that are tied to the project and can be customized for reuse in another project. Once you test-run it, store the text response for later use from the 'History' tab in the block configuration pane on the right side of the automation canvas:

3. Update the project variables for PROD before import

We will now use the 'bindings' from the previous step as a template to adjust the values for your PROD pipeline project, before proceeding with the import.

From the automation, use the 'Update Project Bindings' block. Copy the response from the 'Get Project Binding' block into the text editor and update the DEV values with the appropriate PROD variables (such as the source and target databases). Then, paste the updated text into the Variables input parameter of the 'Update Project Binding' block.

ℹ️ Note: these project variables are not applied dynamically when you run the 'Update Bindings' using the Qlik Automate block. They are appended and only take effect when you import the project.

4. Stop the pipeline currently running in production

For a Change Data Capture (CDC) project, you must stop the project before proceeding with the import.

Use the 'Stop Data Task' block from the 'Qlik Talend Data Integration' connector. You will find the connectors in the Block Library pane on the left side of the automation canvas.

Fill in the ProjectID and TaskID:

ℹ️ We recommend using a logic with variables to handle task stopping in the automation. Please refer to the template configuration and customize it to your needs.

5. Import your project to the PROD space

You’re now ready to import the DEV project contents into the existing PROD project.

⚠️ Warning: Importing the new project will overwrite any existing content in the PROD project.

Using the OneDrive block and the 'Import Project' blocks, we will import the previously saved ZIP file.

ℹ️ In this template, the project ID is handled dynamically using the variable block. Review and customize this built-in logic to match your environment and requirements.

After this step is completed, your project is now deployed to production.

6. Prepare your project and check the status

It is necessary to prepare your project before restarting it in production. Preparing ensures it’s ready to be run by creating or recreating the required artifacts (such as tables, etc).

The 'Prepare Project' block uses the ProjectID to prepare the project tasks by using the built-in project logic. You can also specify one or more specific tasks to prepare using the 'Data Task ID' field. In our example, we are reusing the previously set variable to prepare the same PROD project we just imported.

If your pipeline is damaged, and you need to recreate artifacts from scratch, enable the 'Allow recreate' option. Caution: this may result in data loss.

Triggering a 'Prepare' results in a new 'actionID'. This ID is used to query the action status via the 'Get Action Status' API block in Qlik Automate. We use an API polling strategy to check the status at a preset frequency.

Depending on the number of tables, the preparation can take up to several minutes.

Once we get the confirmation that the preparation action was 'COMPLETED', we can move on with restarting the project tasks.

If the preparation fails, you can define an adequate course of action, such as creating a ServiceNow ticket or sending a message on a Teams channel.

ℹ️ Tip: Review the template's conditional blocks configuration to handle different preparation statuses and customize the logic to fit your needs.
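The polling strategy described in this step can be sketched in Python as follows. Only the 'COMPLETED' status is taken from the text; the 'FAILED' status name, the interval, and the attempt limit are placeholders, and `get_status` stands in for whatever call returns the current action status:

```python
import time


def wait_for_action(get_status, interval_seconds=15, max_attempts=40,
                    failure_statuses=("FAILED",), sleep=time.sleep):
    """Poll get_status() until the action completes, fails, or the attempts run out.

    Returns True on 'COMPLETED', False on a failure status, and raises
    TimeoutError if neither is seen within max_attempts polls.
    """
    for _ in range(max_attempts):
        status = get_status()
        if status == "COMPLETED":
            return True
        if status in failure_statuses:  # hypothetical status names
            return False
        sleep(interval_seconds)
    raise TimeoutError("preparation did not finish within the allotted attempts")
```

A False return is the point at which a follow-up such as a ticket or a Teams message would be raised; capping the number of attempts avoids an automation that polls forever.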

7. Restart the project in PROD

Now that your project is successfully prepared, you can restart it in production.

In this workflow, we use the 'List Data Tasks' block to filter on 'landing' and 'storage' for the production project, and restart these tasks automatically.

All done: your production pipeline has been updated, prepared, and restarted automatically!

Now it’s your turn: fetch the Qlik Automate template from the template library and start automating your pipeline deployments.

Related Content

Start a Qlik Talend Cloud® trial

How to get started with the Qlik Talend Data Integration blocks in Qlik Automate

-

Automate deployment of the Capacity consumption app with Qlik Automate

This template was released on September 29, 2025.

Many customers rely on the Capacity Consumption Report in Qlik Cloud to understand usage and billing patterns. Until now, deploying and refreshing this app required repetitive manual steps: downloading from the admin console, publishing it into a managed space, and re-publishing every time a new version was needed.

This approach made monitoring difficult and often left customers with out-of-date insights.

What's new

A new Qlik Automate template automates the end-to-end deployment of the consumption app into a managed space of your choice. Once set up, the app is refreshed daily - ready for admins or billing users to work with.

With this in place, you can:

- Stay current – The app refreshes once per day (morning CET), so your analytics reflect the latest usage data.

- Collaborate easily – Place the app directly into a managed space for shared access.

- Enable subscriptions and alerts – Users can subscribe to sheets or KPIs, or create alerts on thresholds that matter to them.

- Maintain governance – The template creates/uses the right spaces and applies roles automatically.

- Eliminate manual work – No more re-publishing or searching through personal space copies.

How it works

- The automation checks that you are on a capacity-based subscription (your app size must be 100MB or less; larger data sets still need to be deployed manually or via script).

- It ensures you are a TenantAdmin (required for license and app access).

- It creates a staging space (for imports) and a managed space (for sharing).

- It imports the latest version of the app into staging, then publishes it to the managed space.

- You can configure how many historic versions you want to keep in the shared space. By default, no staged apps are kept.
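The "versions to keep" setting can be thought of as a simple retention rule: sort the existing copies by age and remove everything beyond the configured count. A small Python sketch of that rule, assuming each app copy is a dictionary with an ISO 8601 `created` timestamp; the field and function names are hypothetical and not taken from the template:

```python
def apps_to_remove(apps, versions_to_keep):
    """Return the app copies that fall outside the newest `versions_to_keep`."""
    keep = max(0, versions_to_keep)
    # ISO 8601 timestamps in the same timezone sort correctly as strings.
    newest_first = sorted(apps, key=lambda a: a["created"], reverse=True)
    return newest_first[keep:]
```

With a value of 0, every existing copy is returned for removal, which mirrors a default of keeping no older copies.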

Scheduling

Since the underlying data is refreshed once per day in the morning CET, we recommend scheduling this automation to run around midday CET. This ensures that your managed space always holds the latest daily version of the app.

Requirements

- TenantAdmin role

- Capacity-based subscription

- Report size ≤100MB (larger reports cannot currently be deployed with Qlik Automate)

Get Started

- Create a new automation, navigate to the App Installer category, and select the Capacity consumption app deployer template.

- Update the variables (space names, versions to keep).

- Test manually once.

- Schedule it to run daily (around midday CET).

Once enabled, you’ll have a single source of truth for consumption reporting - ready to drive alerts, subscriptions, and collaborative analysis across your admins.

Related Content

- Review the Help documentation on the capacity report to understand its metrics and counters.

Environment

- Qlik Cloud

-

How to create NPrinting GET and POST REST connections

NPrinting has a library of APIs that can be used to customize many native NPrinting functions outside the NPrinting Web Console.

Environment:

Two of the more common capabilities available via the NPrinting APIs are:

- Connection reloads

- Publish Task executions

These and many other public NPrinting APIs can be found here: Qlik NPrinting API

In the Qlik Sense data load editor of your Qlik Sense app, two REST connections are required (these two REST connectors must also be configured in the QlikView Desktop application>load where the APIs are used; see Nprinting Rest API Connection through QlikView desktop):

- GET

- POST

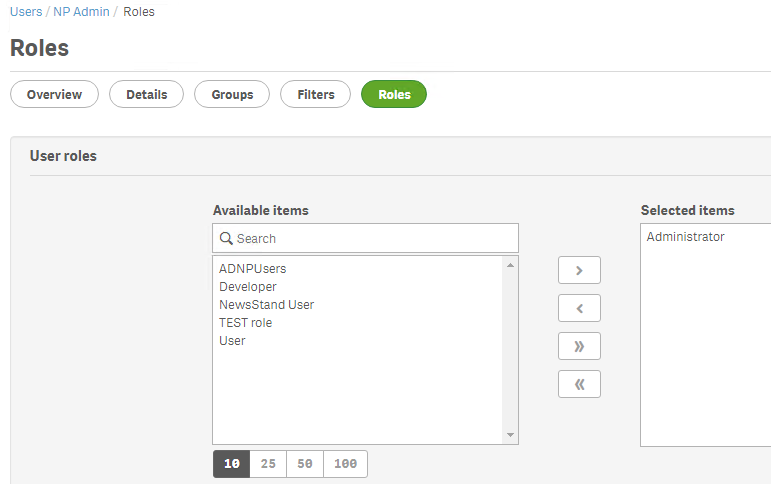

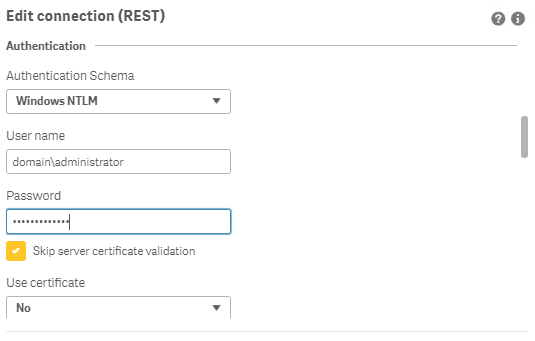

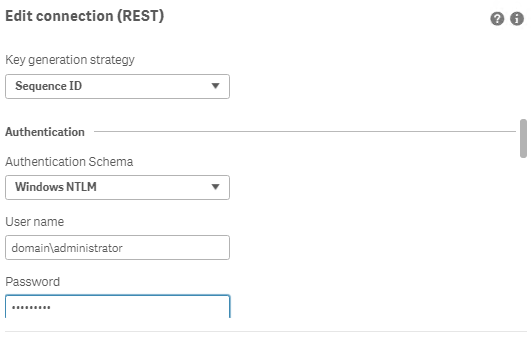

Requirements of REST user account:

- Windows Authentication is required in both these connectors. The required user account is the NPrinting service account (which is also ROOTADMIN on the Qlik Sense server)

- This user account must also be a member of the NPrinting 'Administrators' Security Role on the NPrinting Server.

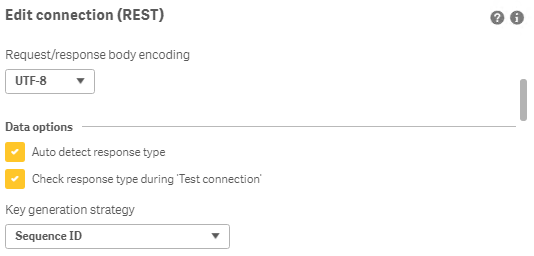

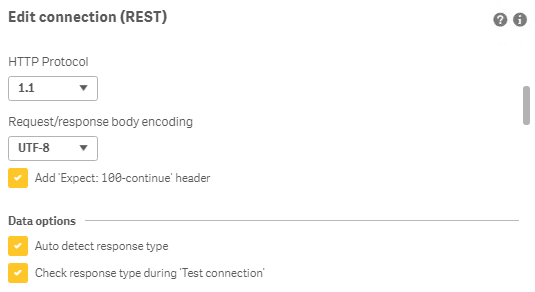

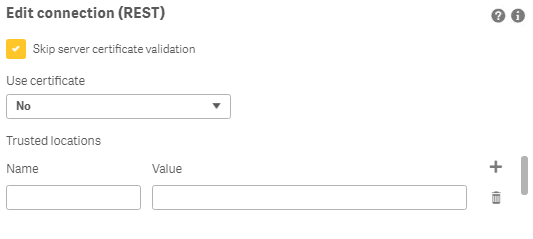

Creating REST "GET" connections

Note: Replace QlikServer3.domain.local with the name and port of your NPrinting Server

NOTE: replace domain\administrator with the domain and user name of your NPrinting service user account

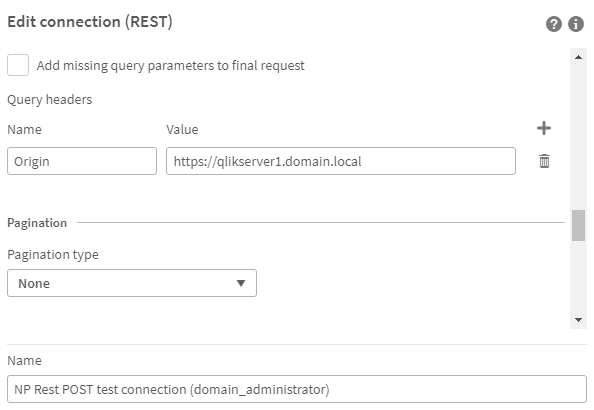

Creating REST "POST" connections

Note: Replace QlikServer3.domain.local with the name and port of your NPrinting Server

NOTE: replace domain\administrator with the domain and user name of your NPrinting service user account

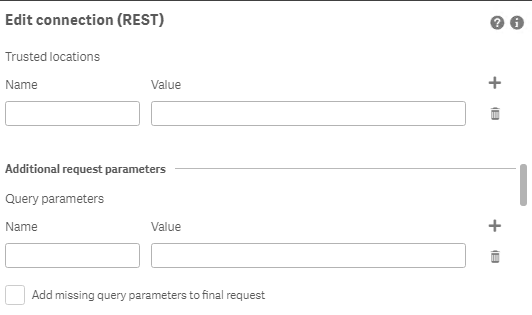

In your POST REST connection only, enter Origin as the 'Name' and the Qlik Sense (or QlikView) server address as the 'Value'.

Replace https://qlikserver1.domain.local with your Qlik Sense (or QlikView) server address.

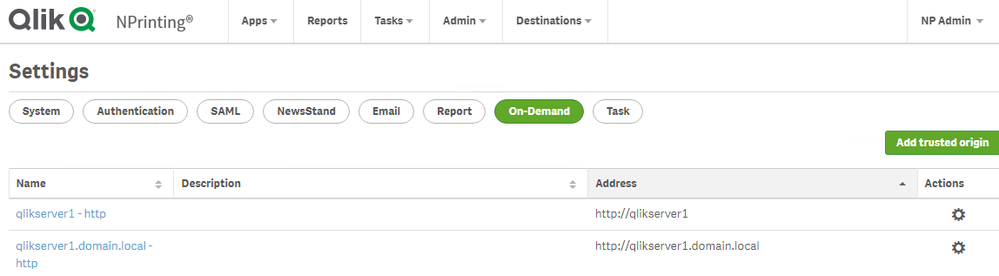

Ensure that the 'Origin' Qlik Sense or QlikView server is added as a 'Trusted Origin' on the NPrinting Server computer
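The difference between the two connections can be summarized in a short Python sketch. It only builds request descriptions and does not contact any server. The endpoint paths (`login/ntlm`, `connections/{id}/reload`, `tasks/{id}/executions`), the port `4993`, and the helper name `build_nprinting_calls` are assumptions for illustration; confirm the paths against the Qlik NPrinting API reference before relying on them.

```python
from dataclasses import dataclass, field


@dataclass
class RestCall:
    """A plain description of one REST request (nothing is sent)."""
    method: str
    url: str
    headers: dict = field(default_factory=dict)


def build_nprinting_calls(nprinting_host, qlik_origin, connection_id, task_id):
    """Describe a login, a connection reload, and a publish task execution.

    Assumption: the paths below follow the public NPrinting API layout.
    Only the POST calls carry the Origin header, mirroring the
    'POST connection only' rule described above.
    """
    base = f"https://{nprinting_host}/api/v1"
    post_headers = {"Origin": qlik_origin}
    return [
        RestCall("GET", f"{base}/login/ntlm"),
        RestCall("POST", f"{base}/connections/{connection_id}/reload", dict(post_headers)),
        RestCall("POST", f"{base}/tasks/{task_id}/executions", dict(post_headers)),
    ]


if __name__ == "__main__":
    # Placeholder host names and IDs; replace with your own values.
    for call in build_nprinting_calls(
        "QlikServer3.domain.local:4993",
        "https://qlikserver1.domain.local",
        "<connection-id>",
        "<task-id>",
    ):
        print(call.method, call.url, call.headers)
```

The Origin value must match an address registered as a 'Trusted Origin' on the NPrinting Server, otherwise the POST requests are expected to be rejected.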

Related Content

- Distribute NPrinting reports after reloading a Qlik App

- Extending Qlik NPrinting

- Run a Qlik NPrinting API POST command via QlikView reload script

- Troubleshooting Common NPrinting API Errors

NOTE: The information in this article is provided as-is and is to be used at your own discretion. NPrinting API usage requires developer expertise and is a significant customization outside the turnkey NPrinting Web Console functionality. Depending on the tools used, customizations, and other factors, ongoing support for the solution described here may not be provided by Qlik Support.

-

Qlik Cloud Webhooks: Migration to new event formats is coming

The event payloads emitted by the Qlik Cloud webhooks service are changing. Qlik is replacing a legacy event format with a new cloud event format.

Any legacy events (that is, any events not already cloud event compliant) will be updated to a temporary hybrid event containing both legacy and cloud event payloads. This will start on or after November 3, 2025.

Please consider updating your integrations to use the new fields once added.

A formal deprecation with at least a 6-month notice will be provided via the Qlik Developer changelog. After that period, hybrid events will be replaced entirely by cloud events.

Webhook automations in Qlik Automate will not be impacted at this time.

Events impacted by this migration

The webhooks service in Qlik Cloud enables you to subscribe to notifications when your Qlik Cloud tenant generates specific events.

At the time of writing, the following legacy events are available:

| Service | Event name | Event type | When is event generated |
|---|---|---|---|
| API keys | API key validation failed | com.qlik.v1.api-key.validation.failed | The tenant tries to use an API key which is expired or revoked |
| Apps (Analytics apps) | App created | com.qlik.v1.app.created | A new analytics app is created |
| Apps (Analytics apps) | App deleted | com.qlik.v1.app.deleted | An analytics app is deleted |
| Apps (Analytics apps) | App exported | com.qlik.v1.app.exported | An analytics app is exported |
| Apps (Analytics apps) | App reload finished | com.qlik.v1.app.reload.finished | An analytics app has finished refreshing on an analytics engine (note: it may not be saved yet) |
| Apps (Analytics apps) | App published | com.qlik.v1.app.published | An analytics app is published from a personal or shared space to a managed space |
| Apps (Analytics apps) | App data updated | com.qlik.v1.app.data.updated | An analytics app is saved to persistent storage |
| Automations (Automate) | Automation created | com.qlik.v1.automation.created | A new automation is created |
| Automations (Automate) | Automation deleted | com.qlik.v1.automation.deleted | An automation is deleted |
| Automations (Automate) | Automation updated | com.qlik.v1.automation.updated | An automation has been updated and saved to persistent storage |
| Automations (Automate) | Automation run started | com.qlik.v1.automation.run.started | An automation run began execution |
| Automations (Automate) | Automation run failed | com.qlik.v1.automation.run.failed | An automation run failed |
| Automations (Automate) | Automation run ended | com.qlik.v1.automation.run.ended | An automation run finished successfully |
| Reloads (Analytics reloads) | Reload finished | com.qlik.v1.reload.finished | An analytics app has been refreshed and saved |
| Users | User created | com.qlik.v1.user.created | A new user is created |
| Users | User deleted | com.qlik.v1.user.deleted | A user is deleted |

Any events not listed above will remain as-is, as they already adhere to the cloud event format.

How are events changing

Each event will change to a new structure. The details included in the payloads will remain the same, but some attributes will be available in a different location.

The changes being made:

- All new property names will be lowercase, which may cause some duplication of existing properties within the `data` object.

- The following properties or objects will be updated:

  - `cloudEventsVersion` is replaced by `specversion`. For most events this will be from `cloudEventsVersion: 0.1` to `specversion: 1.0+`.
  - `contentType` is replaced by `datacontenttype` to describe the media type of the data object.
  - `eventId` is replaced by `id`.
  - `eventTime` is replaced by `time`.
  - `eventTypeVersion` is not present in the future schema.
  - `eventType` is replaced by `type`.
  - `extensions.actor` is replaced by `authtype` and `authclaims`.
  - `extensions.updates` is replaced by `data._updates`.
  - `extensions.meta`, and any other direct objects on `extensions`, are replaced by equivalents in `data` where relevant.

- The direct properties of the `extensions` object will be moved to the root and renamed to be lowercase if needed, such as `tenantId`, `userId`, `spaceId`, etc.
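As a rough illustration of the renames listed above, the following Python sketch converts a legacy payload into the new layout. It is not Qlik's implementation: the function name `legacy_to_cloud_event` and the default `specversion` value of `"1.0"` are assumptions, and nested objects on `extensions` (such as `actor`, `updates`, or `meta`) are skipped because their target shape depends on the event.

```python
# Top-level legacy names and their cloud event replacements.
RENAMED = {
    "contentType": "datacontenttype",
    "eventId": "id",
    "eventTime": "time",
    "eventType": "type",
}

# Keys that are dropped or handled separately below.
SKIPPED = {"cloudEventsVersion", "eventTypeVersion", "extensions"}


def legacy_to_cloud_event(legacy, specversion="1.0"):
    """Return a new dict using cloud event names (sketch, see assumptions)."""
    event = {}
    for key, value in legacy.items():
        if key in SKIPPED:
            continue
        event[RENAMED.get(key, key)] = value

    # cloudEventsVersion is replaced by specversion (assumed value).
    event["specversion"] = specversion

    # Direct properties of extensions move to the root, lowercased.
    for key, value in legacy.get("extensions", {}).items():
        if isinstance(value, dict):
            continue  # nested objects need event-specific handling
        event[key.lower()] = value
    return event


if __name__ == "__main__":
    sample = {
        "cloudEventsVersion": "0.1",
        "eventId": "f4c26f04-18a4-4032-974b-6c7c39a59816",
        "eventType": "com.qlik.v1.automation.created",
        "extensions": {"tenantId": "BL4tTJ4S7xrHTcq0zQxQrJ5qB1_Q6cSo"},
        "data": {"name": "hello world"},
    }
    print(legacy_to_cloud_event(sample))
```

The `data` object is passed through unchanged, which matches the statement that payload details stay the same and only their location changes.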

Example: Automation created event

This is the current legacy payload of the automation created event:

```json
{
  "cloudEventsVersion": "0.1",
  "source": "com.qlik/automations",
  "contentType": "application/json",
  "eventId": "f4c26f04-18a4-4032-974b-6c7c39a59816",
  "eventTime": "2025-09-01T09:53:17.920Z",
  "eventTypeVersion": "1.0.0",
  "eventType": "com.qlik.v1.automation.created",
  "extensions": {
    "ownerId": "637390ef6541614d3a88d6c3",
    "spaceId": "685a770f2c31b9e482814a4f",
    "tenantId": "BL4tTJ4S7xrHTcq0zQxQrJ5qB1_Q6cSo",
    "userId": "637390ef6541614d3a88d6c3"
  },
  "data": {
    "connectorIds": {},
    "containsBillable": null,
    "createdAt": "2025-09-01T09:53:17.000000Z",
    "description": null,
    "endpointIds": {},
    "id": "cae31848-2825-4841-bc88-931be2e3d01a",
    "lastRunAt": null,
    "lastRunStatus": null,
    "name": "hello world",
    "ownerId": "637390ef6541614d3a88d6c3",
    "runMode": "manual",
    "schedules": {},
    "snippetIds": {},
    "spaceId": "685a770f2c31b9e482814a4f",
    "state": "available",
    "tenantId": "BL4tTJ4S7xrHTcq0zQxQrJ5qB1_Q6cSo",
    "updatedAt": "2025-09-01T09:53:17.000000Z"
  }
}
```

This will be the temporary hybrid event for automation created:

```json
{
  // cloud event fields
  "id": "f4c26f04-18a4-4032-974b-6c7c39a59816",
  "time": "2025-09-01T09:53:17.920Z",
  "type": "com.qlik.v1.automation.created",
  "userid": "637390ef6541614d3a88d6c3",
  "ownerid": "637390ef6541614d3a88d6c3",
  "tenantid": "BL4tTJ4S7xrHTcq0zQxQrJ5qB1_Q6cSo",
  "description": "hello world",
  "datacontenttype": "application/json",
  "specversion": "1.0.2",
  // legacy event fields
  "eventId": "f4c26f04-18a4-4032-974b-6c7c39a59816",
  "eventTime": "2025-09-01T09:53:17.920Z",
  "eventType": "com.qlik.v1.automation.created",
  "extensions": {
    "userId": "637390ef6541614d3a88d6c3",
    "spaceId": "685a770f2c31b9e482814a4f",
    "ownerId": "637390ef6541614d3a88d6c3",
    "tenantId": "BL4tTJ4S7xrHTcq0zQxQrJ5qB1_Q6cSo"
  },
  "contentType": "application/json",
  "eventTypeVersion": "1.0.0",
  "cloudEventsVersion": "0.1",
  // unchanged event fields
  "data": {
    "connectorIds": {},
    "containsBillable": null,
    "createdAt": "2025-09-01T09:53:17.000000Z",
    "description": null,
    "endpointIds": {},
    "id": "cae31848-2825-4841-bc88-931be2e3d01a",
    "lastRunAt": null,
    "lastRunStatus": null,
    "name": "hello world",
    "ownerId": "637390ef6541614d3a88d6c3",
    "runMode": "manual",
    "schedules": {},
    "snippetIds": {},
    "spaceId": "685a770f2c31b9e482814a4f",
    "state": "available",
    "tenantId": "BL4tTJ4S7xrHTcq0zQxQrJ5qB1_Q6cSo",
    "updatedAt": "2025-09-01T09:53:17.000000Z"
  },
  "source": "com.qlik/automations"
}
```

Timeline

- November 3, 2025: Hybrid events start emitting (combined legacy and cloud event payloads).

- At least 6 months after a formal deprecation notice: Legacy fields will be removed, leaving only cloud event payloads.
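Because legacy fields remain available only during the hybrid period, a webhook consumer may want to prefer the cloud event fields and fall back to the legacy ones. The Python sketch below shows one way to do that; the helper name `read_event_core` and the choice of fields are illustrative assumptions, not part of any Qlik library.

```python
def read_event_core(event):
    """Read id, time, type, and tenant from a legacy, hybrid, or cloud event.

    New (cloud event) names are preferred; legacy names are used only
    when the new ones are absent.
    """
    def pick(new_key, legacy_key):
        if new_key in event:
            return event[new_key]
        return event.get(legacy_key)

    # In legacy payloads the tenant ID lives under extensions.
    extensions = event.get("extensions", {})
    return {
        "id": pick("id", "eventId"),
        "time": pick("time", "eventTime"),
        "type": pick("type", "eventType"),
        "tenantid": event.get("tenantid", extensions.get("tenantId")),
    }


if __name__ == "__main__":
    legacy_only = {
        "eventId": "f4c26f04-18a4-4032-974b-6c7c39a59816",
        "eventTime": "2025-09-01T09:53:17.920Z",
        "eventType": "com.qlik.v1.automation.created",
        "extensions": {"tenantId": "BL4tTJ4S7xrHTcq0zQxQrJ5qB1_Q6cSo"},
    }
    print(read_event_core(legacy_only))
```

A handler written this way should keep working unchanged before the hybrid rollout, during it, and after the legacy fields are removed.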

Environment

- Qlik Cloud

- Qlik Cloud Government

-

Qlik Cloud Analytics: when querying Apps API endpoint, Scripts, Data Flows and T...

When querying the /api/v1/apps endpoint in Qlik Cloud Analytics, the results also include Scripts, Data Flows, and Table Recipes.

Is there a way to see only the apps?

Example output:

```json
{
  "attributes": {
    "_resourcetype": "app",
    "createdDate": "2025-08-27T06:31:14.767Z",
    "custom": {},
    "description": "",
    "dynamicColor": "",
    "encrypted": true,
    "hasSectionAccess": false,
    "id": "54b3b705-f00f-48ce-bbff-2497926f79e0",
    "isDirectQueryMode": false,
    "lastReloadTime": "",
    "modifiedDate": "2025-08-27T06:31:15.215Z",
    "name": "THIS IS ACTUALLY A TABLE RECIPE",
    "originAppId": "",
    "owner": "auth0|omitted",
    "ownerId": "omitted",
    "publishTime": "",
    "published": false,
    "thumbnail": "",
    "usage": "SINGLE_TABLE_PREP"
  },
  "create": [
    {
      "canCreate": true,
      "resource": "sheet"
      ...
```

Resolution

This is working as designed. From the Qlik Cloud Analytics perspective, Scripts, Data Flows, and Table Recipes are considered apps.

To tell them apart, check the "usage" attribute:

"usage": "ANALYTICS" → apps

"usage": "SINGLE_TABLE_PREP" → table recipes

"usage": "DATA_PREPARATION" → scripts

"usage": "DATAFLOW_PREP" → data flows

Environment

- Qlik Cloud Analytics
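The "usage" check described in the resolution can be applied client-side after the items have been retrieved. The Python sketch below assumes each item has the shape shown in the example output (an `attributes` object containing `usage`); the function names and the way the list of items is obtained are illustrative assumptions.

```python
# Mapping of "usage" values to resource kinds, as listed in the resolution.
USAGE_LABELS = {
    "ANALYTICS": "app",
    "SINGLE_TABLE_PREP": "table recipe",
    "DATA_PREPARATION": "script",
    "DATAFLOW_PREP": "data flow",
}


def usage_of(item):
    """Return the usage value of one item, or None if it is missing."""
    return item.get("attributes", {}).get("usage")


def only_analytics_apps(items):
    """Keep only the items whose usage marks them as analytics apps."""
    return [item for item in items if usage_of(item) == "ANALYTICS"]


def describe(item):
    """Return a readable kind for an item, or 'unknown' for other values."""
    return USAGE_LABELS.get(usage_of(item), "unknown")


if __name__ == "__main__":
    items = [
        {"attributes": {"name": "Sales", "usage": "ANALYTICS"}},
        {"attributes": {"name": "THIS IS ACTUALLY A TABLE RECIPE",
                        "usage": "SINGLE_TABLE_PREP"}},
    ]
    for item in only_analytics_apps(items):
        print(item["attributes"]["name"], "is an", describe(item))
```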