Qlik Application Automation: How to keep your automations synced over multiple tenants

In this article, we explain how to set up one automation as a source and keep multiple target automations in other tenants in sync with the changes made to it. We use an intermediary tool for this process, in our case GitHub, to store the changes made to the source automation, so this article also doubles as a guide to version control for your automations.

Setting up the source automation and syncing changes to GitHub

To get the initial automation data into a GitHub repository, we build a quick automation that contains the "Get Automation" block and run it; the block history then shows the automation's data. We copy that data and paste it into a file in our working repository. In this example, the file is called 'automation.json' and sits in the root directory of the demo repository.
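If you prefer to script this one-time step instead of copying and pasting, a minimal Python sketch could look like the following. It assumes `export` is the dictionary shown in the 'Get Automation' block history; the function names are hypothetical and not part of any Qlik API. Writing the JSON with a fixed indentation keeps one field per line, which makes later Git diffs easier to read.

```python
import json
from pathlib import Path


def export_to_text(export: dict) -> str:
    # indent=2 puts each key on its own line, so Git diffs show which fields changed
    return json.dumps(export, indent=2, ensure_ascii=False) + "\n"


def save_export(export: dict, path: str = "automation.json") -> None:
    # Hypothetical helper: write the export to the file tracked in the repository root
    Path(path).write_text(export_to_text(export), encoding="utf-8")
```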

Now that our initial setup is complete, we will list the steps needed to keep our repository up to date with all the changes to our automation. This is how the workflow will look:

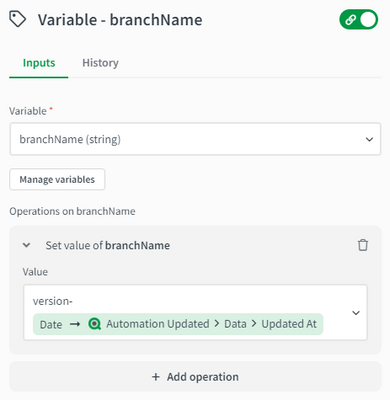

Our initial start block is replaced with a Qlik Cloud Services webhook that listens for updates to any automation in our tenant. We also create a variable that holds the GitHub branch name, which doubles as a version number whenever one is needed.

The formula takes the date on which the automation was modified, transforms it into a UTC timestamp, and appends it to the branch name: `version-{date: {$.AutomationUpdated.data.updatedAt}, 'U'}`
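For readers who want to reproduce the branch name outside Qlik, here is a hedged Python equivalent. It assumes that the `'U'` format token yields a Unix timestamp (seconds since 1970-01-01 UTC), as `U` does in PHP-style date formats, and that `updatedAt` is an ISO 8601 string; neither assumption is confirmed by the article. Because the timestamp grows with every modification, each export gets a unique branch name that also sorts chronologically.

```python
from datetime import datetime, timezone


def branch_name(updated_at: str) -> str:
    # fromisoformat() before Python 3.11 rejects a trailing "Z", so map it to +00:00
    dt = datetime.fromisoformat(updated_at.replace("Z", "+00:00"))
    if dt.tzinfo is None:
        # Assumption: timestamps without an offset are already UTC
        dt = dt.replace(tzinfo=timezone.utc)
    # Assumed equivalent of {date: ..., 'U'}: whole seconds since the Unix epoch
    return f"version-{int(dt.timestamp())}"
```

For example, `branch_name("2021-01-01T00:00:00.000Z")` gives `version-1609459200`.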

We also need to attach a condition block to our flow to make sure only our source automation gets sent to our repository:

In this case, we hardcode the ID of our automation in the condition's input parameter. On the 'yes' branch of the condition comes the 'Get automation' block, which returns the latest version of our source automation, followed by the steps that send that data to a new branch in our GitHub repository. First, we create a JSON object variable, 'automationExport', to store the automation content returned by the 'Get automation' block:
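The condition block's job can be sketched in Python as a simple ID comparison. This assumes the webhook payload carries the automation's ID at `data.id`, next to the `data.updatedAt` field used in the branch-name formula; the field name and the placeholder GUID are hypothetical.

```python
# Hypothetical placeholder: replace with the GUID of your source automation
SOURCE_AUTOMATION_ID = "00000000-0000-0000-0000-000000000000"


def is_source_automation(event: dict, source_id: str = SOURCE_AUTOMATION_ID) -> bool:
    # Assumed payload shape: {"data": {"id": "...", "updatedAt": "..."}}
    # Any other automation updated in the tenant is ignored
    return event.get("data", {}).get("id") == source_id
```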

Secondly, we create a new branch:
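Under the hood, creating a branch in GitHub means creating a Git reference. The sketch below builds the request for GitHub's REST endpoint `POST /repos/{owner}/{repo}/git/refs` without sending it. `owner`, `repo` and `base_sha` are placeholders; `base_sha` would typically be the latest commit SHA of `main`. Whether the Qlik GitHub block issues exactly this call is an assumption.

```python
from typing import Dict, Tuple


def create_branch_request(owner: str, repo: str, branch: str,
                          base_sha: str) -> Tuple[str, str, Dict[str, str]]:
    # A branch is a ref under refs/heads/ pointing at an existing commit
    url = f"https://api.github.com/repos/{owner}/{repo}/git/refs"
    body = {"ref": f"refs/heads/{branch}", "sha": base_sha}
    return "POST", url, body
```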

Thirdly, we update the automation.json file in our repository with the new data stored in the 'automationExport' JSON object variable:

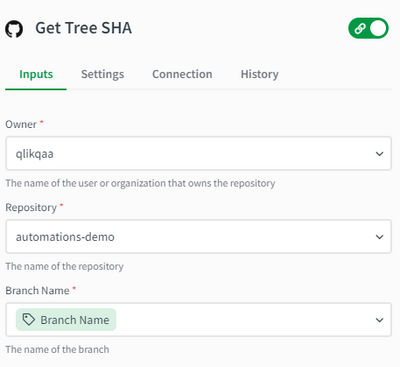

As you can see, GitHub only accepts Base64-encoded file contents, so the JSON content from the 'automationExport' variable is converted to that format. Before we can create the commit and the pull request, one more step is needed: looking up the tree SHA of the branch:
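Both operations in the paragraph above can be sketched in Python. The first function encodes the export the way GitHub's contents API expects. The second reads the tree SHA from the response of `GET /repos/{owner}/{repo}/branches/{branch}`, where it sits at `commit.commit.tree.sha`. The function names are hypothetical.

```python
import base64
import json


def encode_file_content(export: dict) -> str:
    # GitHub's contents API takes the file body as a Base64 string
    raw = json.dumps(export, indent=2).encode("utf-8")
    return base64.b64encode(raw).decode("ascii")


def tree_sha_from_branch(branch_response: dict) -> str:
    # Branch response: {"commit": {"sha": ..., "commit": {"tree": {"sha": ...}}}}
    return branch_response["commit"]["commit"]["tree"]["sha"]
```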

With this information, we can go ahead and create the commit:
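For reference, the equivalent raw GitHub REST calls are sketched below. A commit is created with `POST /repos/{owner}/{repo}/git/commits` from a tree SHA and its parent commit SHA. Creating a commit object does not move any branch by itself; with the Git Data API, the branch is advanced with a separate `PATCH` on its ref. The article does not say whether the Qlik block performs that second call, so treat it as an assumption.

```python
from typing import Dict, List, Tuple, Union

Body = Dict[str, Union[str, List[str]]]


def create_commit_request(owner: str, repo: str, message: str,
                          tree_sha: str, parent_sha: str) -> Tuple[str, str, Body]:
    # The parent is normally the branch's current commit SHA
    url = f"https://api.github.com/repos/{owner}/{repo}/git/commits"
    return "POST", url, {"message": message, "tree": tree_sha, "parents": [parent_sha]}


def move_branch_request(owner: str, repo: str, branch: str,
                        commit_sha: str) -> Tuple[str, str, Body]:
    # Points refs/heads/<branch> at the newly created commit
    url = f"https://api.github.com/repos/{owner}/{repo}/git/refs/heads/{branch}"
    return "PATCH", url, {"sha": commit_sha}
```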

The final step in our flow is to create the pull request:
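A pull request from the version branch into `main` corresponds to GitHub's `POST /repos/{owner}/{repo}/pulls` endpoint, where `head` is the branch with the changes and `base` is the branch they should be merged into. The title and description below are illustrative placeholders.

```python
from typing import Dict, Tuple


def create_pull_request_request(owner: str, repo: str, branch: str,
                                base: str = "main") -> Tuple[str, str, Dict[str, str]]:
    url = f"https://api.github.com/repos/{owner}/{repo}/pulls"
    body = {
        "title": f"Sync automation {branch}",  # illustrative title
        "head": branch,  # branch holding the new automation.json
        "base": base,    # branch the target tenants sync from
        "body": "Automated export of the source automation.",
    }
    return "POST", url, body
```

Merging this pull request is what triggers the sync in the target tenants.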

Keeping your target automation up to date with the latest version

To do this, we now move to our target tenant and create a separate automation there. The workflow involved is a simple one:

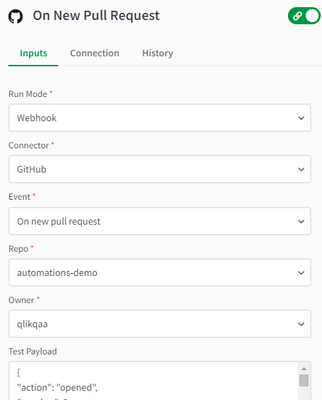

Again, the start block is replaced, this time by a GitHub webhook that listens for pull request events in our repository:

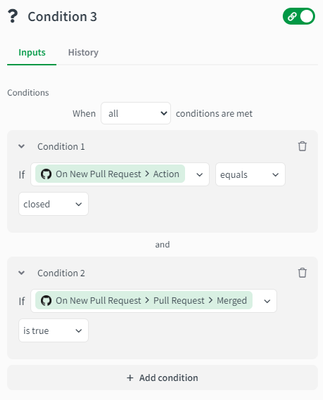

We also create an empty object variable that will later hold the incoming information from the repository. We also need a condition block with the following two conditions:

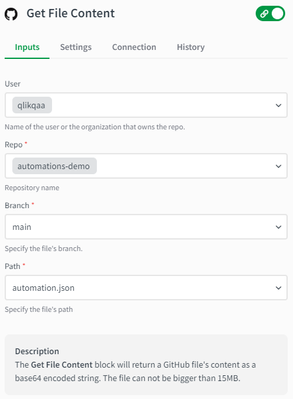

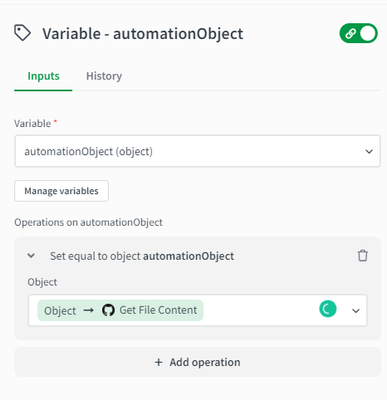

This lets the automation continue only if the pull request has been closed and its contents merged into the main branch of our repository, which tells us the pull request has been approved and we can sync all changes to our target automations. To do so, we query the file contents of the repository, decode them from Base64 to plain text, and save the result in our object variable:
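The two conditions are only shown as screenshots, so the following Python sketch is an assumed equivalent. GitHub sends `"action": "closed"` both for merged pull requests and for ones closed without merging; the `pull_request.merged` flag tells them apart, and `pull_request.base.ref` names the branch the pull request targeted.

```python
def should_sync(payload: dict, target_branch: str = "main") -> bool:
    pr = payload.get("pull_request", {})
    return (
        payload.get("action") == "closed"               # PR is no longer open
        and pr.get("merged") is True                    # closed by merging, not rejected
        and pr.get("base", {}).get("ref") == target_branch
    )
```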

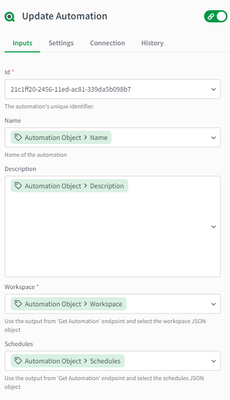

The formula used to assign the value to the variable is `{object: '{base64decode: {$.getFileContent}}'}`, which decodes the Base64 content and turns the resulting string into a JSON object that is easier to handle in the next block. All that is left is to use the 'Update Automation' block to apply the new changes to our target automation:
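In Python, the same decode-then-parse step is roughly `json.loads(base64.b64decode(...))`. GitHub's contents API may wrap long Base64 strings with newlines; `base64.b64decode` discards such non-alphabet characters by default (`validate=False`), so the string can be passed as-is. The function name is hypothetical.

```python
import base64
import json


def decode_file_content(content: str) -> dict:
    # Base64 -> UTF-8 text -> JSON object, mirroring {object: '{base64decode: ...}'}
    return json.loads(base64.b64decode(content).decode("utf-8"))
```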

As you can see, we hardcoded the GUID of our target automation, but the 'Do lookup' functionality can also be used to select one of the automations in the tenant. Finally, we map the relevant parts of our object to the block's input parameters by selecting the Name, Description, Workspace and Schedule fields of the object.
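The field mapping can be pictured as copying only those four fields from the exported object. This sketch assumes the export uses the lowercase keys `name`, `description`, `workspace` and `schedule`; the exact key names are not shown in the article. Everything else in the export, such as the source automation's own ID, is left out, so the target keeps its identity.

```python
# Assumed key names in the exported automation JSON
UPDATE_FIELDS = ("name", "description", "workspace", "schedule")


def update_inputs(exported: dict) -> dict:
    # Missing optional fields (e.g. no schedule) are passed through as None
    return {field: exported.get(field) for field in UPDATE_FIELDS}
```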

This should let you keep your automations synced over multiple tenants. Your target automation history should not be impacted by the syncs in any way.

Please be aware that if the target automation is open during the sync, the changes will not appear in real time; you will need to reopen the automation to see them.

Attached to this article you can also find the JSON files that contain the automation flows presented.

The information in this article is provided as-is and is to be used at your own discretion. Depending on the tool(s) used, customization(s), and/or other factors, ongoing support for this solution may not be provided by Qlik Support.

Hi Marius,

First, thank you for this.

I wanted to point out that the Automation Update trigger is very broad. It fires when an automation is enabled or disabled or its owner is changed, and I have even noticed that it sometimes fires after an automation is run. I don't want to store every version in Git.

I was wondering if you have figured out how to determine what changed in the automation, so I can decide whether to store that version in Git.

I didn't see any recommended solutions, so I resorted to comparing the current workspace and schedule of the automation against what's in Git: if there are differences, something has changed; if not, nothing has. That's far too complicated a solution for something that should be simple.

Note: if you're publishing your automation to Git, you should remove the executionToken from the generated workspace.
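The comparison described above can be sketched in Python. It looks only at `workspace` and `schedule`, so enable/disable or owner changes do not count as a new version, and it strips every `executionToken` key before comparing. Where `executionToken` sits inside the workspace is not stated, so the sketch removes that key at any depth; the key names and helper names are assumptions.

```python
def strip_key(value, key="executionToken"):
    # Return a copy of value with every dict entry named `key` removed, at any depth
    if isinstance(value, dict):
        return {k: strip_key(v, key) for k, v in value.items() if k != key}
    if isinstance(value, list):
        return [strip_key(v, key) for v in value]
    return value


def has_relevant_change(current: dict, committed: dict) -> bool:
    # Only workspace and schedule decide whether a new version goes to Git
    fields = ("workspace", "schedule")
    now = {f: strip_key(current.get(f)) for f in fields}
    before = {f: strip_key(committed.get(f)) for f in fields}
    return now != before
```

The same `strip_key` call can be applied to the export before it is committed, so the token never reaches the repository.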

I also don't want to commit everything on an event but need it controlled.

I modified these templates and use a URL to trigger the commit of apps and automations to GitHub. It works well. The automation also sets a load script on an app to write a log of who committed what and when.

The problem I still have, though, is that when you import an automation, it only brings in the latest version from GitHub. If I download the JSON from a historical commit, its structure has been altered by the Git block, so it can't be read when imported into a workspace.

Sorry Edward, can't help there. Since I'm committing every update to Git, I have every version in Git, hence I do not need the versions in Qlik.

...it to a new workspace, it doesn't work. When QAA writes the JSON to Git, it changes the file: a manually downloaded automation JSON starts with the word "block", which is missing from the file committed to GitHub. The only way I know of to import it back is the QAA block you are using for import, but that can only restore the latest version, not prior commits. I've raised this with Qlik Support.
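This does not fix the missing "block" wrapper described above, but for retrieving an earlier version at all, GitHub's contents endpoint `GET /repos/{owner}/{repo}/contents/{path}` accepts a `ref` query parameter (a commit SHA, branch or tag). A minimal sketch that builds such a URL, with placeholder owner, repository and SHA:

```python
from urllib.parse import quote, urlencode


def file_at_commit_url(owner: str, repo: str, path: str, commit_sha: str) -> str:
    # ref selects which commit's copy of the file the contents API returns
    return (f"https://api.github.com/repos/{owner}/{repo}/contents/{quote(path)}?"
            + urlencode({"ref": commit_sha}))
```

The response carries the file as Base64 in its `content` field, which still needs decoding and, per the comment above, possibly restructuring before import.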