Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- Forums

- :

- Data Integration

- :

- Products & Topics

- :

- Qlik Replicate

- :

- Oracle change data capture error

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Oracle change data capture error

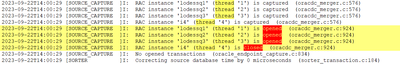

Hi, we are getting an error when we try to perform a change data capture on a large oracle table. It looks like it is trying to find an log file from two years ago. We turned on the change data capture after the initial load. We just turned on archive logging on this database so we are not sure why it is trying to find this archive log number. Thread one looks like it processed just fine. But thread two is failing.

I get an error archived log doesn't exist which it doesn't. What I am trying to understand is why thread 2 is looking for sequence 520 whereas thread 1 is looking for sequence 7288 which it finds. How does Replicate determine which log files it needs?

Let me know if you need any more information.

Accepted Solutions

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello, this has been resolved. The developer changed Change Data Capture to only look for read changes from the last day.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello Team,

Thanks for reaching out to Qlik via Qlik Community Support page. " What I am trying to understand is why thread 2 is looking for sequence 520 whereas thread 1 is looking for sequence 7288 which it finds. " It seems NODE 2 is added afterwords, or Node removed. Still using a RAC database of standalone.

We would require Log to do the Analysis of the same. To overcome the issue. What you can do. Stop the task and take the timestamp from the log file when it was stopped. and Start QR task the taken timestamp rather than resume.

Regards,

Sushil Kumar

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

So this is a RAC environment right?

Perhaps it is one with a heavily use Primary and little used or unsused secondary?

When/How are the REDO logs rolled?

Strictly by size (when full) or also by schedule (every few minutes?, hours?, days?)

Could it be that log 520 started wicked long ago and caught a big SCN range?

Any RESETLOG operations?

Anyway, you want to investigate the time/SCN number Replicate is looking for according to the reptask__xxx.log against the contents of v$archived_log;

Something like:

select THREAD#, FIRST_CHANGE#, NEXT_CHANGE#, STATUS, DELETED, FIRST_TIME, NEXT_TIME, SEQUENCE#, RESETLOGS_CHANGE#

from v$archived_log where <SCN_replicate_is_looking_for> between FIRST_CHANGE# and NEXT_CHANGE#

>> We turned on the change data capture after the initial load.

How? Did you then ADVANCED RUN ... Tables are already loaded. Start processing changes from: xxxx ? Why not run with CDC right away? Was that large table also very busy during the load?

Hein.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

We performed a full load of the table first. The reason we did that because when we turned on the change data capture immediately the task would fail with the same error. I will get the log from the developer and confirm what SCN QLIK is looking for. I do know that sequence number that is listed in the error log from QLIK doesn't exist on the v$archived_log file.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, to be clear, this is the log from QLIK? I will get it from the developer and send it. This is a RAC Exadata environment. So, the full scenario. We started the table load with change data capture. That failed. We then just performed the full data load. That succeeded. We turned on the change data capture. It still failed looking for the sequence number that doesn't make sense.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have attached the file.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ok, so really de fullload only would get the initial data over but could not be expected to 'fix' the CDC related errror.

The log looks correct, but does NOT show a start by time-stamp. It may be a retry log. Did you start by timestamp?

I suspect this is an issue in not setting up DEST_ID correctly.

The log does not show an SCN. It may be hidden in the 'context' but I forgot those details. You may want to start with LOGGING for SOURCE_CAPTURE set to debug.

This may be an issue with DEST_ID ( which is similar to THREAD#) you may need to set the internal parameter archivedLogDestId and possibly additionalArchivedLogDestId & capturedRacThreads.

Be sure to carefully read and re-read the Replicate User Guide searching for DEST_ID.

Check the details on the log 7288 in V$ARCHIVED_LOG - do they make sense? How do they compare with log 520.

You may need to log a support call if this all gets too much.

Good luck,

Hein.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @SungChungAmeren ,

From the task log file line #56:

last source timestamp 1695296201000000

It's epoch format time, the corresponding GMT time is 2023.09.21 11:36:00. Compared to the task run time, it's about 26 hours ago, looks good. The redo log files should not be too long away.

In fact, Replicate does not know which redo log files should be read, it's determined by Oracle view v$archived_log. Replicate queries this view to know which redo log files should be open/read. You can get the result of "select * from v$archived_log where name is not null" and export the result to Excel file for analysis.

Also please set SOURCE_CAPTURE to Verbose (then reproduce the error) and attach both files to the support ticket, support team will help you further. Please do not upload these files here as community is publicly accessible to all visitors.

BTW, this is a 4 nodes RAC (node 4 is closed). Are you sure the RAC works fine without any exception? any information is helpful to understand the environment.

Regards,

John.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello, this has been resolved. The developer changed Change Data Capture to only look for read changes from the last day.