Replicate to Cloudera in ORC format, with table properties set.

Good day

We need to do a full refresh to Cloudera in ORC format, with the following table properties set.

'transactional'='true'

'transactional_properties'='insert_only'

We are using the Hadoop endpoint and the syntax file is Hive13.

I believe this should be possible by changing the syntax file. Any idea where in the syntax file this could be changed?

Thanks

Abrie

Hello @Abrie_M ,

The default Hive13 table creation syntax is:

"create_table": "CREATE ${TABLE_TYPE} TABLE ${QO}${TABLE_NAME}${QC} ( ${COLUMN_LIST} )",

For your scenario it should be something like:

"create_table": "CREATE ${TABLE_TYPE} TABLE ${QO}${TABLE_NAME}${QC} ( ${COLUMN_LIST} ) stored as orc TBLPROPERTIES('transactional'='true','transactional_properties'='insert_only')",
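To check what statement a template of this shape would produce, the placeholders can be expanded by hand. The sketch below uses Python's `string.Template`, which happens to share the `${NAME}` placeholder style. It is not how Replicate itself performs the substitution, and the table name, column list, backtick quoting, and empty `TABLE_TYPE` are assumed values for illustration only. The column list is closed before the storage clause, as Hive's CREATE TABLE grammar expects.

```python
from string import Template

# Template modelled on the "create_table" entry discussed above.
# Illustrative only: Replicate's real substitution logic is not shown in this thread.
CREATE_TABLE = Template(
    "CREATE ${TABLE_TYPE} TABLE ${QO}${TABLE_NAME}${QC} ( ${COLUMN_LIST} ) "
    "STORED AS ORC "
    "TBLPROPERTIES('transactional'='true','transactional_properties'='insert_only')"
)


def render_create_table(table_name, column_list, table_type="", quote="`"):
    """Expand the placeholders and collapse repeated whitespace."""
    sql = CREATE_TABLE.substitute(
        TABLE_TYPE=table_type,  # assumed empty for a managed table
        QO=quote,               # assumed opening quote character
        QC=quote,               # assumed closing quote character
        TABLE_NAME=table_name,
        COLUMN_LIST=column_list,
    )
    return " ".join(sql.split())


# Hypothetical table and columns
print(render_create_table("orders", "`id` INT, `amount` DECIMAL(18,2)"))
```

Running the rendered statement against a test database in Hive before editing the syntax file is a cheap way to confirm that the cluster accepts the ORC and table property clauses.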

However, it depends on the Cloudera cluster version. If your Cloudera cluster is CDP 7.x, then Replicate 7.0 does not support the ORC storage format. If it is a lower Cloudera version (still in support scope), then it may be doable. We suggest you open a support case, and we also recommend getting our Professional Services team involved.

BTW, how about creating the target tables with the additional properties outside of Replicate? Then you can let Replicate use the pre-defined tables rather than re-creating them.
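If the tables are pre-created outside of Replicate, the DDL for a large number of tables could be generated by a small script rather than written by hand. The following is a minimal sketch under stated assumptions: the database name, table names, and column definitions are hypothetical, and in practice they would come from the source schema. It only builds the text of a HiveQL script and does not connect to the cluster.

```python
# Table properties requested in the original question.
TBLPROPERTIES = {
    "transactional": "true",
    "transactional_properties": "insert_only",
}


def build_ddl(database, table, columns):
    """Return one CREATE TABLE statement for an insert-only ORC table."""
    cols = ", ".join(f"`{name}` {hive_type}" for name, hive_type in columns)
    props = ", ".join(f"'{key}'='{value}'" for key, value in TBLPROPERTIES.items())
    return (
        f"CREATE TABLE IF NOT EXISTS `{database}`.`{table}` ({cols}) "
        f"STORED AS ORC TBLPROPERTIES ({props});"
    )


def build_script(database, tables):
    """Return a HiveQL script with one statement per table, sorted by table name."""
    return "\n".join(
        build_ddl(database, table, columns)
        for table, columns in sorted(tables.items())
    )


# Hypothetical metadata: table name -> list of (column name, Hive type)
tables = {
    "orders": [("id", "INT"), ("amount", "DECIMAL(18,2)")],
    "customers": [("id", "INT"), ("name", "STRING")],
}
print(build_script("staging", tables))
```

The resulting text can be saved to a file and run with whatever Hive client the cluster already uses, after which Replicate would be pointed at the existing tables.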

Regards,

John.

Hi John

Thanks for the reply. I will try your suggestion.

We are using CDP 7.x. We could not get the CDP endpoint type working (the CDP endpoint supports only WebHDFS, not HttpFS as the Hadoop endpoint does), so we are continuing to use the Hadoop endpoint and want to use the ORC format.

Our BI developers requested the specific ORC format and properties. Unfortunately there are more than a thousand tables, so I'm looking for the easiest way to provide the tables.

Regards

Abrie

Hello @Abrie_M ,

Starting with Hive 3, Hive enforces a strict separation between hive.metastore.warehouse.external.dir and hive.metastore.warehouse.dir. As a result, lower versions of Replicate cannot work with CDP 7.x.

Replicate 7.x supports CDP 7.x through the endpoint type "Cloudera Data Platform (CDP) Private Cloud"; however, as you know, it has some limitations, including no support for HttpFS and no support for the ORC format.

I'm afraid you cannot use the general "Hadoop" endpoint type to connect to CDP 7.x.

Regards,

John.

Hi John

Please see case number 02094431, where we worked with Qlik support to resolve the CDP endpoint setup, and the final confirmation we received from Qlik regarding support for the Hadoop endpoint with CDP:

"Hello Abrie,

We can confirm that Qlik Replicate supports Hadoop endpoint to connect to CDP cluster.

Let us know if we can help with anything else.

Kind regards,

Pedro

09 March 2021 at 15:43"

I will discuss the possible issues with our architects to decide on the way forward.

Thanks again for your help in this matter.

Regards

Abrie

Hello @Abrie_M ,

I apologize for my mistake. The general "Hadoop" endpoint should work with CDP 7.x.

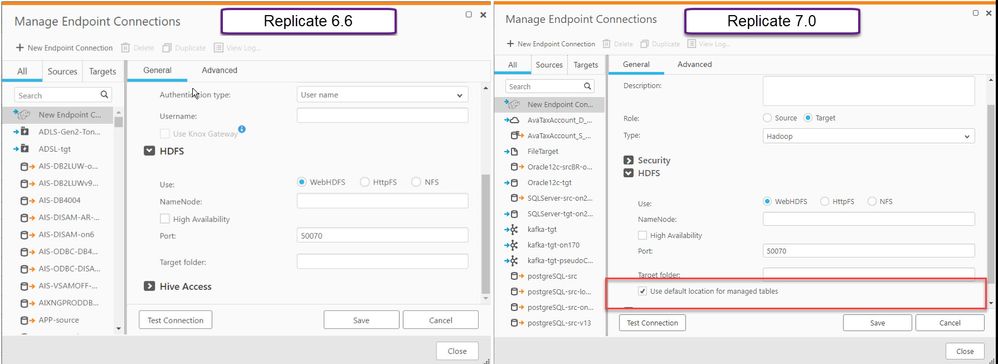

Replicate 7.0 introduces a new parameter, "Use default location for managed tables", in the "Hadoop" endpoint. This means Replicate 7.0 distinguishes between the file locations of external tables and internal (managed) tables, which solves the Hive 3 strict-separation problem I mentioned above. I'm attaching a screenshot comparing 6.6 and 7.0.

Good luck,

John.

Hi John,

We are using the Hadoop endpoint as the target with the Parquet file format on CDP 7.x. The issue we are facing concerns external table creation with Parquet files: it always defaults to creating a managed table in Hive. Is there any way to create an external table with Parquet?

I tried changing the Hive13 syntax and also adding the internal parameter:

$info.query_syntax.create_table = CREATE EXTERNAL TABLE ${QO}${TABLE_NAME}${QC} ( ${COLUMN_LIST} stored as parquet TBLPROPERTIES('transactional'='true','transactional_properties'='insert_only'))

But the change is not reflected in the target, which always defaults to the managed table type. We need an external table with Parquet using the Hadoop endpoint. Please advise.