Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- All Forums

- :

- QlikView App Dev

- :

- Re: LOAD times

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

LOAD times

Hi,

I have a question rgd. LOAD times for one specific table:

- The table is very big, but on one field it can be split: One part (only one value in that field) has about 5% of all records, the other part (all other values in that field) has about 95% of records.

- There is nów only one filter splitting the table in two: >> TRANCODE = 'SPRECEIVE' << and >> TRANCODE <> 'SPRECEIVE' <<

<=> The first part (LOADed from a qvd file) takes much longer to load than the second one.

These are the two loads: FIRST LOAD:

>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>><<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<

Trans_Dtl_Rec:

LOAD

ITEM_NUMBER as Rec.ITEM_NUMBER,

TRAN_DATE_TD as Rec.TRAN_DATE_TD,

TRAN_DATE_Time as Rec.TRAN_DATE_Time,

TRAN_CODE as Rec.TRAN_CODE,

QUANTITY as Rec.QUANTITY,

BIN as Rec.BIN,

TRACKING_NUMBER as Rec.TRACKING_NUMBER,

CONTROL_NUMBER as Rec.CONTROL_NUMBER,

SERIAL_LOT_NUMBER as Rec.SERIAL_LOT_NUMBER

FROM $(v_Pfad_TransDtl) (qvd)

WHERE TRAN_CODE = 'SPRECEIVE';

>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>><<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<

SECOND LOAD

>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>><<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<

Trans_Dtl_aside:

LOAD

ITEM_NUMBER as Aside.ITEM_NUMBER,

TRAN_DATE_TD as Aside.TRAN_DATE_TD,

TRAN_DATE_Time as Aside.TRAN_DATE_Time,

TRAN_CODE as Aside.TRAN_CODE,

QUANTITY as Aside.QUANTITY,

BIN as Aside.BIN,

TRACKING_NUMBER as Aside.TRACKING_NUMBER,

CONTROL_NUMBER as Aside.CONTROL_NUMBER,

SERIAL_LOT_NUMBER as Aside.SERIAL_LOT_NUMBER

FROM $(v_Pfad_TransDtl) (qvd)

WHERE TRAN_CODE <> 'SPRECEIVE';

=> Is there any way I can speed that up?

Thanks a lot!

Best regards,

DataNibbler

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Marcus,

thanks for the patience!

I'll try that.

In any case, I now have an "inbetween app" loading all the necessary tables so that, in the actual report, the users can just load that BINARY - that should be even faster, I think - the tables would also have to be loaded from a server_filepath, so no difference there - then I can make each LOAD in the actual report RESIDENT.

That moves all the loading_business one step further up the road, taking the stress off it - but the issue about the optimized LOAD is a very general one, so I need to understand it.

Problem is, we had a virus yesterday, many files are missing which are loaded in my INCLUDEs, so many apps just don't work. I have to see what I can do.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Okay,

I have a new issue again:

I now have all the required tables in RAM (through a BINARY LOAD), now I have a LOAD from one of those tables - originally, it was

- Header_table with one EXISTS() clause

- JOIN body_table with one more EXISTS() clause

Since I do the joining in that new inbetween_app of mine, I can load the entire table into RAM - but for some reason, when I integrate both of the EXISTS() clauses into that LOAD, I don't get the same number of records ...

Very strange - the EXISTS() clauses are the same as before, I introduced them to reduce the nr. of records to load - and the two values exist of course: When I deactivate both EXISTS() clauses and just have the complete table in RAM and then I select the right value in the one field, only one corresponding value is there in the other.

P.S.: Ah, no, seems I have been confusing myself - the progress_window shows more lines in the old version, but the table in the end has only one - the same, so that's okay.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Marcus,

with that BINARY, it all seems to be running faster now, plus I don't need the SWITCH statement anymore, but there's another issue to replace it: The BINARY must be the very first statement, no? So I cannot first query the user for the plant and then activate the one or the other BINARY, can I? Or can I create an INPUT box on the GUI and use that?

Best regards,

DataNibbler

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi DataNibbler,

that's correct - the binary must be the very first statement but after them you could change the datamodel with further loadings which filter or add data or remove/rename tables and fields and so on.

But you could also use an inputbox (maybe with defined list of variable-values) like:

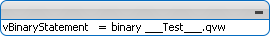

and then:

$(vBinaryStatement); // and it worked

- Marcus

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks! I'll try that.

The BINARY approach would speed it all up, or so I hope. But I guess I cannot then combine that with a partial_load_approach. Well, one cannot do everything at the same time ...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

My BINARY app for the one plant now works - I'm just preparing the second one for the other plant.

I do have one issue, though: I can reload it manually and it's just fine. I can do the same via the QMC - according to the log, the script executes just as well, there is no error recorded - but the QMC says "failed" after a short while ... I don't understand. Do you?

Thanks a lot!

Best regards,

DataNibbler

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

it was quite simple - I had renamed the app earlier and saved it in another directory and the QMC just couldn't follow, there was some confusion - now I took out the schedule, scheduled the app in its new place again, and it works 😉

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Marcus,

I'm just trying this variable after I have made sure that BINARY_approach works at all - it now works with either of the BINARY_apps I have - one for each plant, with all the required tables.

When I type the complete BINARY command (twice) into the Inputbox - or even just the path to the qvw to be loaded - that works fine, I can use it in the script and it all runs just like it's supposed to.

However, I want to keep it as simple as possible for the users. I'd like to just have them select one plant and then use another variable to build on this - there is just a very small part of the BINARY_command that differs between the plants. That doesn't quite work yet, I just get a "Garbage after expression ..." error for the place where the plant_name is supposed to go.

Best regards,

DataNibbler

P.S.: Seems I'm having trouble with the quotes - I need to use a lot of them (around the variables) to display them in a textbox on the GUI - but it seems those very quotes keep the resulting command from working properly in the script. I'll get behind that, it will just take a while of trial_and_error ...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi DataNibbler,

beside a further syntax-check you might need some checking routines within your variables - I mean something like: if('$(var)' = ..., ..., ...) and to concat the various variables into your final variable. Quite helpful is it also to use predefined lists within the variables (and this lists could be expressions, too) to avoid erroneous user-inputs.

- Marcus