Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- Support

- :

- Support

- :

- Knowledge

- :

- Support Articles

- :

- How to build a backfill sync with a scheduled blen...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

How to build a backfill sync with a scheduled blend

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

How to build a backfill sync with a scheduled blend

Mar 15, 2022 4:38:14 AM

May 24, 2021 12:09:24 PM

A backfill sync is a process that syncs historical data from a source to a target.

When performing a backfill sync, the total amount of records that need to be synced between multiple systems is split over multiple blend runs. This allows the total sync to take longer than the maximum execution time of a single blend run.

In this article, scheduling will be used to execute a single blend multiple times.

It would also be possible to build a solution with a triggered blend that calls itself, but this is more error-prone as a failed blend execution can break the chain.

When building backfills, a scheduled blend offers 2 advantages over a triggered blend:

- other scheduled and triggered blends won't be blocked by the partial sync

- a failed run won't impact the schedule and a retry strategy can be implemented

The downside of using a scheduled blend is that it might take longer, the shortest possible interval for the schedule is 30 seconds. If there are many syncs that have to process an empty range of records, some time will be lost on these syncs.

1. Setting the schedule

Set the template to run in "scheduled" run-mode. As long as the schedule is set, the blend will keep executing according to it.

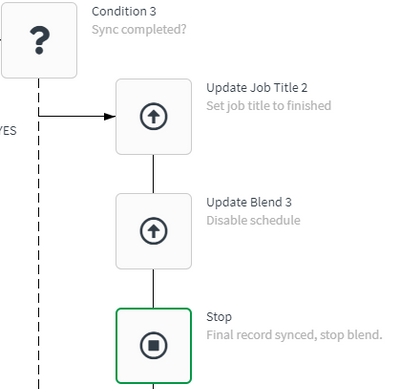

When the sync is finished, the schedule needs to be disabled.

This can be done by executing the "Update Blend" block with the "Schedule every" parameter set to "Disabled". Combine this block with a condition block that checks if the sync has finished.

2. Dividing records and storing a state

The total amount of records needs to be split over multiple blend runs. And each blend run will need to know the current state of the sync. In other words, which part of the records it should process.

How this is done depends on the available list blocks for the connector belonging to the platform where data is fetched from. Our Shopify connector will be used as an example as it has all 3 types of list blocks.

2.1 Incremental block

If an incremental block is available, the pointer can be set using the "Set Pointer" block. This pointer is stored in the Blendr backend so there's no need to worry about storing the state. To prevent the incremental block from fetching all records, set the "Maximum numbers of items to retrieve" parameter in the block's settings tab to the number of records each run should process.

This approach only works if the records are returned sorted from old to new.

After all records for a certain run are processed, use the"Update Pointer" block to change the pointer to the timestamp from the newest record returned by the list incremental block.

2.2 Search block

For an example, see the attached file: Shopify scheduled backfill sync - search block.json

How to import a blend from a JSON file

A search block is a block that allows you to retrieve a list of records with a certain query. This can be an SQL-like query or some predefined fields. The block can be used with multiple strategies, this article focuses on a time window strategy. This consists of fetching records between two timestamps for example, "timestamp_min" and "timestamp_max". These timestamps define the time window.

Time window

The size of each batch is defined by the time between the timestamps that make up the time window, this should be a fixed value, for example, 5 days or a couple of hours. This depends on the "density" of the data.

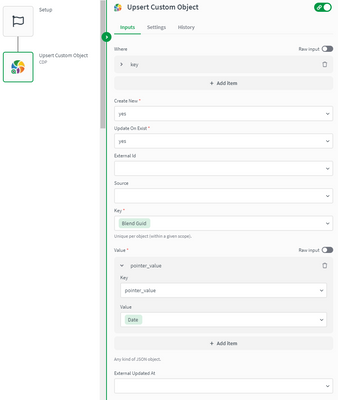

To keep track of the current batch that needs to be processed (the state of the backfill), one timestamp should be saved in the CDP or Data Store. The other timestamp can be determined by adding or subtracting the time window size. The formula {blendguid} can be used as a unique identifier to scope variables to their template. During development, this will be parsed into the template's GUID. After a user installs the template, it will be parsed into the GUID of that specific installation.

When processing records from new to old, save the pointer in the CDP (or Data Store) during the setup flow. Use the "Date" formula with "now" as input time to generate a datetime string for the time of the template's installation.

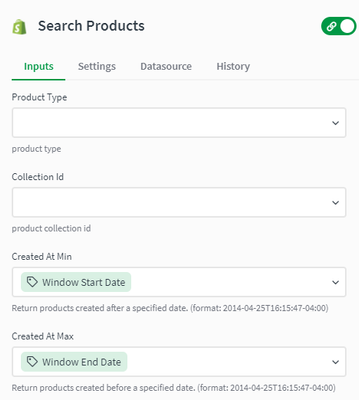

The first steps of the template's main flow will be to fetch the state from the CDP or Data Store and define variables for the timestamps that make up the time window. In the included example, these variables are called "WindowStartDate" and "WindowEndDate".

Casting these values to clearly named variables will lower the template's complexity and will make it easier to use them later on in the backfill sync.

When performing the backfill from new to old, WindowEndDate will be set equal to the saved pointer. WindowStartDate will be set to the pointer minus the time window size.

Once these variables are defined, they can be used in the search block.

Other strategies

There are many other possible strategies to split the records into batches when using a search block. This will differ from connector to connector as not all APIs have a search endpoint. Please contact our support team if a connector is missing a search block (that should be available according to the API documentation).

2.3 Regular list block

If for a certain connector an incremental list block and a search block are both not available. A regular list block is the only possibility. This approach is not efficient and can take a long time.

The strategy is to store the ids of every processed record. After fetching a new batch of records, compare the ids of those records to the ids of already processed records by using the "Compare Lists" block.

This approach will take a long time and the "Compare Lists" block might take too long to run on big lists.

3. Implementing a retry strategy

3.1 Manual retry

Optionally an inputs field can be included in the settings flow with additional parameters like

- a final date, records older than this date will be ignored

- a custom start date, records younger than this date will be ignored

The custom start date can be used to restart the full sync from a point in time different than the moment of installation. It's even possible to retry a specific time window by specifying both a final date and a custom pointer.

3.2 Auto retry

Add a new variable "max_retry" to the CDP or Data Store and assign a numeric value to it. This equals the number of times the blend should retry a run if an error is encountered.

Go in the settings tab for each block that could cause an error and change "On Error" to "Warning". After each of these blocks, catch a potential error with a condition block. Retrieve the max_retry variable, if it's greater than 0, subtract 1 and stop the blend without updating the pointer in the CDP or Data Store. This will cause the next run to use the same time window. If the max_retry variable equals 0, update the pointer in the CDP or Data Store and use an alerting connector (for example, email) to notify a user or your services team that a portion of the backfill has failed for a certain time window.

Reset the max_retry variable and stop the blend. Optionally, store the information of failed runs in a new variable and send only one notification of all incomplete runs.

The information in this article is provided as-is and to be used at own discretion. Depending on tool(s) used, customization(s), and/or other factors ongoing support on the solution below may not be provided by Qlik Support.